- Cataract

- Glaucoma

- Age-related macular degeneration

- Normal

- Others

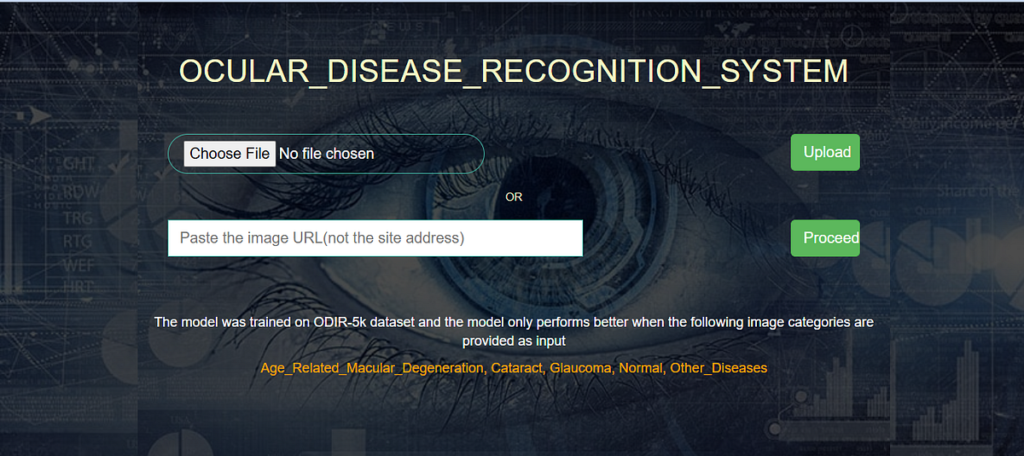

Introduction:

In recent years, the incidence of ocular diseases has risen globally as a consequence of aging populations, environmental factors, and lifestyle changes. Early diagnosis and timely treatment of eye diseases are essential to prevent vision loss and improve quality of life. However, diagnosing ocular diseases often requires specialized equipment and trained professionals, which may not be readily accessible, especially in remote or underserved areas. This creates a critical need for automated, accessible, and accurate diagnostic systems.

The Ocular Disease Recognition System aims to leverage advancements in deep learning and computer vision to assist in the early detection and classification of various eye conditions. Using a dataset of retinal or ocular images, this system can identify abnormalities such as glaucoma, diabetic retinopathy, macular degeneration, and other common diseases that threaten vision. Automated recognition and classification of ocular diseases can give healthcare providers a tool to screen patients more efficiently and accurately, ultimately reducing the burden on healthcare systems and enabling patients to receive faster care.

A little information about the dataset:

Ocular Disease Intelligent Recognition (ODIR) is a structured ophthalmic database of 5,000 patients with age, color fundus photographs from left and right eyes, and doctors' diagnostic keywords.

This dataset is meant to represent a "real-life" set of patient information collected by Shanggong Medical Technology Co., Ltd. from different hospitals and medical centers in China. In these institutions, fundus images are captured by various cameras on the market, such as Canon, Zeiss and Kowa, resulting in varied image resolutions.

Annotations were labeled by trained human readers with quality control management. They classify each patient into eight labels:

- Normal (N),

- Diabetes (D),

- Glaucoma (G),

- Cataract (C),

- Age-related Macular Degeneration (A),

- Hypertension (H),

- Pathological Myopia (M),

- Other diseases/abnormalities (O)

An issue with the data is that the diagnosis encodings in the one-hot-encoded columns (the columns labeled C, D, G, ...), namely the numerically encoded diagnoses, are incorrect. However, the target field is correct for the eye referenced in the filename and matches the diagnostic keywords for the respective eye.

We found that relying on the encoded diagnosis categories "N D G C A H M O" could be problematic because they are incorrect. The problem is that the files and diagnoses are orientation specific (left or right eye), while each row of data contains information for both eyes.

Therefore we will exclude these categories for this notebook, although they could certainly be used for other investigations.
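To make the per-eye labelling concrete, here is a minimal sketch of reading the target label and the eye side from one row. The toy rows are made up for illustration, the helper names `parse_label` and `eye_of` are hypothetical, and the sketch assumes filenames follow the `<id>_<side>.jpg` pattern seen later in this post (for example `1862_right.jpg`).

```python
import ast

# Toy rows shaped like full_df.csv; the values are invented for illustration.
rows = [
    {"filename": "0_right.jpg", "labels": "['N']"},
    {"filename": "1_left.jpg", "labels": "['C']"},
    {"filename": "2_right.jpg", "labels": "['D']"},
]


def parse_label(raw):
    """Turn the string "['N']" into the single code 'N'."""
    codes = ast.literal_eval(raw)  # safely parses the list literal
    return codes[0]


def eye_of(filename):
    """Return 'left' or 'right' from a name such as '0_right.jpg'."""
    stem = filename.rsplit(".", 1)[0]
    return stem.split("_")[1]


# One (filename, eye, label) triple per image, ignoring the one-hot columns.
per_eye = [(r["filename"], eye_of(r["filename"]), parse_label(r["labels"])) for r in rows]
for item in per_eye:
    print(item)
```

Working from the `labels` string keeps each image tied to the diagnosis of the eye it actually shows.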

Importing the required libraries

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import matplotlib.image as mpimg

import os

from tqdm import tqdm

import shutil

import random

import seaborn as sns

import tensorflow as tf

from tensorflow import keras

from tensorflow.keras import Model

from tensorflow.keras import layers

from tensorflow.keras.models import Model,Sequential

from tensorflow.keras.layers import GlobalAveragePooling2D, Conv2D, MaxPooling2D

from tensorflow.keras.layers import Dropout, Dense, Flatten, BatchNormalization,Input

from tensorflow.keras.preprocessing.image import ImageDataGenerator

from tensorflow.keras.optimizers import Adam, RMSprop, SGD

from tensorflow.keras.callbacks import ModelCheckpoint, EarlyStopping

from tensorflow.keras.applications.inception_v3 import InceptionV3

from tensorflow.keras.applications.resnet import ResNet50

from tensorflow.keras.applications.vgg16 import VGG16

from sklearn.model_selection import GridSearchCV

import numpy as np

- Purpose: Efficient handling of arrays, matrices, and mathematical operations.

- Common Use: Manipulating image arrays, applying transformations, computing statistics.

import pandas as pd

- Purpose: Data manipulation and analysis using DataFrames.

- Common Use: Managing labels, CSV metadata, training logs, or metrics.

import matplotlib.pyplot as plt

- Purpose: Plotting graphs and visualizing data.

- Common Use: Loss/accuracy curves, image samples.

import matplotlib.image as mpimg

- Purpose: Read and display images using Matplotlib.

- Common Use: Visualizing input images or model outputs.

import seaborn as sns

- Purpose: Statistical data visualization, built on Matplotlib.

- Common Use: Correlation plots, confusion matrices, heatmaps.

import os

- Purpose: Interacting with the file system.

- Common Use: Navigating directories, reading image paths.

import shutil

- Purpose: File operations (copy, move, delete).

- Common Use: Organizing train/val/test folders.

import random

- Purpose: Generate random numbers.

- Common Use: Shuffling datasets or applying random augmentations.

from tqdm import tqdm

- Purpose: Progress bars for loops.

- Common Use: Showing progress during preprocessing or predictions.

import tensorflow as tf

- Purpose: Main deep learning framework.

- Common Use: Model training, tensor ops, GPU support.

- TensorFlow is an open-source machine learning (ML) framework developed by Google Brain for building and training deep learning models. It provides a comprehensive ecosystem of tools, libraries, and community resources to help researchers and developers create scalable ML and AI applications.

Key Features of TensorFlow

Flexible Architecture

- Supports both high-level APIs (like Keras) and low-level operations for custom model building.

- Works on CPUs, GPUs, and TPUs (Tensor Processing Units).

Cross-Platform Compatibility

- Runs on desktops, mobile devices (TensorFlow Lite), and browsers (TensorFlow.js).

- Supports deployment in production (TensorFlow Serving).

Pre-trained Models & Transfer Learning

- Offers pre-trained models (e.g., BERT, ResNet, EfficientNet) via TensorFlow Hub.

- Enables fine-tuning for custom tasks.

Scalability

- Supports distributed training across multiple machines.

- Works with large datasets using tf.data for efficient input pipelines.

Visualization Tools

- TensorBoard helps visualize model training, metrics, and computational graphs.

Extensive Community & Ecosystem

- Large open-source community with contributions from Google and others.

- Integrates with libraries like NumPy, Pandas, and OpenCV.

from tensorflow import keras

- Purpose: High-level API for TensorFlow models.

from tensorflow.keras import Model, layers

- Purpose: Defining custom models and using common layers.

from tensorflow.keras.models import Model, Sequential

- Purpose:

  - Model: Functional API (flexible architectures)

  - Sequential: Stacks layers linearly

from tensorflow.keras.layers import ...

- Purpose: Layers used in CNNs, such as:

  - Conv2D: Convolutional layer

  - MaxPooling2D: Downsampling

  - Dropout: Prevents overfitting

  - Dense: Fully connected layer

  - Flatten: Flattens the output

  - BatchNormalization: Normalizes layer activations

  - Input: Input layer

from tensorflow.keras.preprocessing.image import ImageDataGenerator

- Purpose: Real-time data augmentation and loading.

- Common Use: Improve model generalization.

from tensorflow.keras.optimizers import Adam, RMSprop, SGD

- Purpose: Optimizers used in training.

- Examples:

  - Adam: Adaptive learning rate (most used)

  - RMSprop: Works well in RNNs

  - SGD: Classic stochastic gradient descent

from tensorflow.keras.callbacks import ModelCheckpoint, EarlyStopping

- Purpose: Training helpers.

  - ModelCheckpoint: Saves the best model

  - EarlyStopping: Stops training when there is no improvement

from tensorflow.keras.applications.inception_v3 import InceptionV3

- Purpose: Load the InceptionV3 model, optionally with pre-trained weights.

from tensorflow.keras.applications.resnet import ResNet50

- Purpose: Load the ResNet50 model (deep CNN, good for classification).

from tensorflow.keras.applications.vgg16 import VGG16

- Purpose: Load the VGG16 model (simple yet powerful CNN architecture).

from sklearn.model_selection import GridSearchCV

- Purpose: Perform hyperparameter tuning (not always used directly with Keras).

- Common Use: Try different learning rates, optimizers, etc. with wrapper classes.
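Of the helpers listed above, EarlyStopping is the one whose behavior is easiest to misread, so here is a rough plain-Python sketch of the idea behind its patience setting. This is a simplified illustration, not the Keras implementation: the function name `early_stopping_epoch` is hypothetical, and options such as a minimum improvement threshold are ignored.

```python
def early_stopping_epoch(val_losses, patience):
    """Return (stop_epoch, best_epoch) as 0-based indices.

    Training stops once the validation loss has failed to improve
    for `patience` consecutive epochs.
    """
    best_loss = float("inf")
    best_epoch = -1
    wait = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            # New best value: remember it and reset the counter.
            best_loss = loss
            best_epoch = epoch
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                return epoch, best_epoch
    # The loss history ended before patience ran out.
    return len(val_losses) - 1, best_epoch


# Made-up validation losses for illustration.
losses = [1.0, 0.8, 0.7, 0.75, 0.72, 0.71]
print(early_stopping_epoch(losses, patience=2))
```

With restore_best_weights=True, as used later in this post, Keras goes back to the weights from the best epoch instead of keeping the last ones.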

df=pd.read_csv("/kaggle/input/ocular-disease-recognition-odir5k/full_df.csv")

df.head(30)

This loads full_df.csv from the ODIR-5K dataset on Kaggle into a DataFrame called df and then displays the first 30 rows to understand its structure.

dast=df[df['labels']== "['N']"]

dast

This creates dast, which contains only the records where the diagnosis is "['N']", which likely stands for "Normal" (no disease).

df.tail(20).isna()

df.tail(20).isna() checks for missing (NaN) values in the last 20 rows of the DataFrame and returns a True/False matrix indicating their presence.

train=df.drop(columns=['M', 'D', 'H'])

train=train[((train['labels'] == "['N']") | (train['labels'] == "['G']") | (train['labels']== "['C']") | (train['labels'] == "['A']")| (train['labels']=="['O']"))]

# Classes

classes=['N','G', 'C', 'A', 'O']

train = train.reset_index(drop=True)

train.head()

This drops the columns 'M', 'D', and 'H', filters the DataFrame to keep only rows with specific labels ('N', 'G', 'C', 'A', 'O'), defines these as the target classes, and resets the index of the resulting DataFrame.

Making a separate folder for each disease's images

glaucoma=[]

for i in range(len(train)):

    if(train['labels'][i]=="['G']"):

        glaucoma.append(train['filename'][i])

cataract=[]

for i in range(len(train)):

    if(train['labels'][i]=="['C']"):

        cataract.append(train['filename'][i])

amd=[]

for i in range(len(train)):

    if(train['labels'][i]=="['A']"):

        amd.append(train['filename'][i])

normal=[]

for i in range(len(train)):

    if(train['labels'][i]=="['N']"):

        normal.append(train['filename'][i])

other=[]

for i in range(len(train)):

    if(train['labels'][i]=="['O']"):

        other.append(train['filename'][i])
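The five loops above all do the same thing for a different label, so they could also be written as a single pass that groups filenames by label. The following is only a sketch of that alternative: the `records` list is made-up toy data standing in for the filename and labels columns of the filtered DataFrame.

```python
from collections import defaultdict

# Toy (filename, label) pairs; invented values for illustration only.
records = [
    ("0_left.jpg", "['N']"),
    ("1_right.jpg", "['G']"),
    ("2_left.jpg", "['C']"),
    ("3_right.jpg", "['G']"),
    ("4_left.jpg", "['O']"),
]

# Group every filename under its label string in one pass.
by_label = defaultdict(list)
for filename, label in records:
    by_label[label].append(filename)

# The per-class lists used in this post are then simple lookups.
glaucoma = by_label["['G']"]
cataract = by_label["['C']"]
amd = by_label["['A']"]
normal = by_label["['N']"]
other = by_label["['O']"]

print(glaucoma)
```

A label with no images simply yields an empty list, which matches what the separate loops would produce.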

# Purpose: copy the categorized images to a new directory structure.

if os.path.isdir('./Data/cataract/'):

    # os.mkdir('./Data')

    print("exists")

# Check whether the ./Data directory exists, and create it if it does not.

# Copy images to the new path

if not os.path.isdir('./Data'):

    os.mkdir('./Data')

# (Glaucoma, Cataract, Age-related Macular Degeneration, Normal, Other diseases)

glaucoma_dir='./Data/glaucoma/' # Set the path and create a folder for glaucoma images.

os.mkdir('./Data/glaucoma/')

# Loop through the glaucoma image filenames and copy each to the glaucoma folder (with a progress bar).

for filename in tqdm(glaucoma):

    shutil.copy('../input/ocular-disease-recognition-odir5k/preprocessed_images/'+filename,glaucoma_dir)

cataract_dir='./Data/cataract/' # Set the path and create a folder for cataract images.

os.mkdir('./Data/cataract/')

# Loop through the cataract image filenames and copy each to the cataract folder.

for filename in tqdm(cataract):

    shutil.copy('../input/ocular-disease-recognition-odir5k/preprocessed_images/'+filename,cataract_dir)

macular_dir='./Data/age_related_macular_degeneration/'

os.mkdir('./Data/age_related_macular_degeneration/')

for filename in tqdm(amd):

    shutil.copy('../input/ocular-disease-recognition-odir5k/preprocessed_images/'+filename,macular_dir)

normal_dir='./Data/normal/'

os.mkdir('./Data/normal/')

for filename in tqdm(normal):

    shutil.copy('../input/ocular-disease-recognition-odir5k/preprocessed_images/'+filename,normal_dir)

other_dir='./Data/other_diseases/'

os.mkdir('./Data/other_diseases/')

for filename in tqdm(other):

    shutil.copy('../input/ocular-disease-recognition-odir5k/preprocessed_images/'+filename,other_dir)

base_dir = './Dataset/'

if not os.path.isdir(base_dir):

    os.mkdir(base_dir)

# Training, Testing and Validation sets

train_dir = os.path.join(base_dir, 'Training')

os.mkdir(train_dir)

testing_dir = os.path.join(base_dir, 'Testing')

os.mkdir(testing_dir)

validation_dir = os.path.join(base_dir, 'Validation')

os.mkdir(validation_dir)

# Under the training folder, create 5 folders

# (Glaucoma, Cataract, Age-related Macular Degeneration, Normal, Others)

train_glaucoma_dir = os.path.join(train_dir, 'glaucoma')

os.mkdir(train_glaucoma_dir)

train_cataract_dir = os.path.join(train_dir, 'cataract')

os.mkdir(train_cataract_dir)

train_age_macular_dir = os.path.join(train_dir, 'age_related_macular_degeneration')

os.mkdir(train_age_macular_dir)

train_normal_dir = os.path.join(train_dir, 'normal')

os.mkdir(train_normal_dir)

train_other_diseases_dir = os.path.join(train_dir, 'other_diseases')

os.mkdir(train_other_diseases_dir)

# Under the testing folder, create the same 5 folders

testing_glaucoma_dir = os.path.join(testing_dir, 'glaucoma')

os.mkdir(testing_glaucoma_dir)

testing_cataract_dir = os.path.join(testing_dir, 'cataract')

os.mkdir(testing_cataract_dir)

testing_age_macular_dir = os.path.join(testing_dir, 'age_related_macular_degeneration')

os.mkdir(testing_age_macular_dir)

testing_normal_dir = os.path.join(testing_dir, 'normal')

os.mkdir(testing_normal_dir)

testing_other_diseases_dir = os.path.join(testing_dir, 'other_diseases')

os.mkdir(testing_other_diseases_dir)

# Under the validation folder, create the same 5 folders

# (Glaucoma, Cataract, Age-related Macular Degeneration, Normal, Others)

validation_glaucoma_dir = os.path.join(validation_dir, 'glaucoma')

os.mkdir(validation_glaucoma_dir)

validation_cataract_dir = os.path.join(validation_dir, 'cataract')

os.mkdir(validation_cataract_dir)

validation_age_macular_dir = os.path.join(validation_dir, 'age_related_macular_degeneration')

os.mkdir(validation_age_macular_dir)

validation_normal_dir = os.path.join(validation_dir, 'normal')

os.mkdir(validation_normal_dir)

validation_other_diseases_dir = os.path.join(validation_dir, 'other_diseases')

os.mkdir(validation_other_diseases_dir)
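The folder layout built above is three splits times five classes, so it can also be produced with a nested loop. This is a compact sketch of that alternative, not the code used in the notebook: the helper `make_tree` is a hypothetical name, and the demo writes into a temporary directory so that it does not touch ./Dataset.

```python
import os
import tempfile

splits = ["Training", "Testing", "Validation"]
class_dirs = [
    "glaucoma",
    "cataract",
    "age_related_macular_degeneration",
    "normal",
    "other_diseases",
]


def make_tree(base_dir):
    """Create base_dir/<split>/<class> for every combination and return the paths."""
    created = []
    for split in splits:
        for name in class_dirs:
            path = os.path.join(base_dir, split, name)
            # exist_ok=True makes the call safe to repeat when re-running a cell.
            os.makedirs(path, exist_ok=True)
            created.append(path)
    return created


# Demo in a throwaway directory.
with tempfile.TemporaryDirectory() as tmp:
    created = make_tree(os.path.join(tmp, "Dataset"))
    print(len(created))
```

Unlike os.mkdir, os.makedirs with exist_ok=True does not raise an error if a folder is already there, which helps when a notebook cell is run more than once.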

glaucoma_source_dir = '/kaggle/input/ocr-filtred/Data/glaucoma/'

training_glaucoma_dir = './Dataset/Training/glaucoma/'

testing_glaucoma_dir = './Dataset/Testing/glaucoma/'

valid_glaucoma_dir = './Dataset/Validation/glaucoma/'

cataract_source_dir = '/kaggle/input/ocr-filtred/Data/cataract/'

training_cataract_dir = './Dataset/Training/cataract/'

testing_cataract_dir = './Dataset/Testing/cataract/'

valid_cataract_dir = './Dataset/Validation/cataract/'

age_related_macular_degeneration_source_dir = '/kaggle/input/ocr-filtred/Data/age_related_macular_degeneration/'

training_age_related_macular_degeneration_dir = './Dataset/Training/age_related_macular_degeneration/'

testing_age_related_macular_degeneration_dir = './Dataset/Testing/age_related_macular_degeneration/'

valid_age_related_macular_degeneration_dir = './Dataset/Validation/age_related_macular_degeneration/'

normal_source_dir = '/kaggle/input/ocr-filtred/Data/normal/'

training_normal_dir = './Dataset/Training/normal/'

testing_normal_dir = './Dataset/Testing/normal/'

valid_normal_dir = './Dataset/Validation/normal/'

other_diseases_source_dir = '/kaggle/input/ocr-filtred/Data/other_diseases/'

training_other_diseases_dir = './Dataset/Training/other_diseases/'

testing_other_diseases_dir = './Dataset/Testing/other_diseases/'

valid_other_diseases_dir = './Dataset/Validation/other_diseases/'

def split_data(SOURCE, TRAINING, TESTING, VALIDATION, SPLIT_SIZE):

    # Collect the non-empty files in the source folder

    files = []

    for filename in os.listdir(SOURCE):

        file = SOURCE + filename

        if os.path.getsize(file) > 0:

            files.append(filename)

        else:

            print(filename + " is zero size, so ignoring.")

    # The remainder after the training share is divided between testing and validation

    SIZE = (1 - SPLIT_SIZE) / 2

    training_length = int(len(files) * SPLIT_SIZE)

    testing_length = int(len(files) * SIZE)

    valid_length = int(len(files) * SIZE)

    # Shuffle, then slice into the three sets

    shuffled_set = random.sample(files, len(files))

    training_set = shuffled_set[0:training_length]

    testing_set = shuffled_set[training_length:training_length+testing_length]

    valid_set = shuffled_set[training_length+testing_length:]

    for filename in training_set:

        this_file = SOURCE + filename

        destination = TRAINING + filename

        shutil.copy(this_file, destination)

    for filename in testing_set:

        this_file = SOURCE + filename

        destination = TESTING + filename

        shutil.copy(this_file, destination)

    for filename in valid_set:

        this_file = SOURCE + filename

        destination = VALIDATION + filename

        shutil.copy(this_file, destination)

split_size = 0.80

split_data(glaucoma_source_dir, training_glaucoma_dir, testing_glaucoma_dir, valid_glaucoma_dir, split_size)

split_data(cataract_source_dir, training_cataract_dir, testing_cataract_dir, valid_cataract_dir, split_size)

split_data(age_related_macular_degeneration_source_dir, training_age_related_macular_degeneration_dir, testing_age_related_macular_degeneration_dir, valid_age_related_macular_degeneration_dir, split_size)

split_data(other_diseases_source_dir, training_other_diseases_dir, testing_other_diseases_dir, valid_other_diseases_dir, split_size)

split_data(normal_source_dir, training_normal_dir, testing_normal_dir, valid_normal_dir, split_size)
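To see how many images each set receives, the arithmetic inside split_data can be isolated in a small function. This is a sketch under one stated reading of the code above: the validation set is taken as the slice after the training and testing files, so it receives whatever is left over after the two truncated counts. The name `split_counts` is hypothetical.

```python
def split_counts(n_files, split_size):
    """Return (training, testing, validation) sizes for n_files images."""
    size = (1 - split_size) / 2
    training_length = int(n_files * split_size)  # int() truncates toward zero
    testing_length = int(n_files * size)
    # Validation is the remaining slice, so it absorbs any rounding leftovers.
    valid_length = n_files - training_length - testing_length
    return training_length, testing_length, valid_length


print(split_counts(101, 0.80))
```

Because of the truncation, the three sizes always add up to the number of files, but the testing and validation sets are not guaranteed to be the same size.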

def img_aug(img_height,img_width):

    # Augmentation is applied to the training images only

    train_datagen = ImageDataGenerator(rescale = 1.0/255.0,

                                       rotation_range = 30,

                                       width_shift_range = 0.1,

                                       height_shift_range = 0.1,

                                       zoom_range = 0.1,

                                       horizontal_flip = True,

                                       vertical_flip=True,

                                       shear_range=0.2,

                                       brightness_range=[0.3,1],

                                       fill_mode='nearest'

                                       )

    train_generator = train_datagen.flow_from_directory('./Dataset/Training/',

                                                        target_size=(img_height,img_width),

                                                        class_mode='categorical')

    # Validation and test images are only rescaled

    validation_datagen = ImageDataGenerator(rescale=1.0/255.0)

    validation_generator = validation_datagen.flow_from_directory('./Dataset/Validation/',

                                                                  target_size=(img_height,img_width),

                                                                  class_mode='categorical')

    test_datagen = ImageDataGenerator(rescale=1.0/255.0)

    test_generator = test_datagen.flow_from_directory('./Dataset/Testing/',

                                                      target_size=(img_height,img_width),

                                                      class_mode='categorical')

    return train_generator,validation_generator,test_generator

train_generator,validation_generator,test_generator = img_aug(224,224)

Model Training:

import tensorflow as tf

from tensorflow.keras import models,layers

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense,Conv2D,MaxPooling2D,Flatten,Dropout,BatchNormalization

from tensorflow.keras.preprocessing.image import ImageDataGenerator,img_to_array,load_img

from tensorflow.keras.callbacks import EarlyStopping,ModelCheckpoint

import numpy as np

from sklearn.preprocessing import OneHotEncoder

# from sklearn.metrics import confusion_matrix

import matplotlib.pyplot as plt

IMAGE_SIZE=224

BATCH_SIZE=32

CHANNELS= 3

epochs=50

data=tf.keras.preprocessing.image_dataset_from_directory("/kaggle/working/Data",

                                                         shuffle=True,

                                                         image_size=(IMAGE_SIZE,IMAGE_SIZE),

                                                         batch_size= BATCH_SIZE

                                                         )

class_names = data.class_names

class_names

for image_batch, label_batch in data.take(1):

    plt.imshow(image_batch[0].numpy().astype("uint8")) # the image changes on every run because of shuffling

    plt.title(class_names[label_batch[0]])

    plt.axis("off")

    # print(image_batch[0].shape)

    # print(image_batch[0].numpy()) # here the image is a 3D tensor with values from 0 to 255

    # print(label_batch.numpy())

plt.figure(figsize=(15,15))

for image_batch, label_batch in data.take(1):

    for i in range(20):

        ax=plt.subplot(4,5,i+1)

        plt.imshow(image_batch[i].numpy().astype("uint8")) # the images change on every run because of shuffling

        plt.title(class_names[label_batch[i]])

        plt.axis("off")

import tensorflow as tf

from tensorflow.keras.models import Model

from tensorflow.keras.layers import Input, Dense, GlobalAveragePooling2D, Dropout

# Number of output classes, taken from the class folders found by the training generator

num_classes = len(train_generator.class_indices)

# Load the InceptionV3 base model (excluding the top dense layers)

# Note: input_shape is 299x299 here, while the generators above were created with img_aug(224, 224); the two sizes should match

base_model = tf.keras.applications.InceptionV3(weights='imagenet', include_top=False, input_shape=(299, 299, 3))

# Unfreeze some layers for fine-tuning

# (Keras layers are trainable by default, so this loop only has an effect if the base model was frozen beforehand)

for layer in base_model.layers[-30:]:

    layer.trainable = True

# Add custom layers on top of the base model

x = GlobalAveragePooling2D()(base_model.output)

x = Dense(1024, activation='relu')(x)

x = Dropout(0.5)(x) # Add dropout for regularization

output = Dense(num_classes, activation='softmax')(x)

# Create the custom InceptionV3 model

custom_inception = Model(inputs=base_model.input, outputs=output)

# Implement learning rate scheduling

lr_schedule = tf.keras.optimizers.schedules.ExponentialDecay(

    initial_learning_rate=1e-4,

    decay_steps=1000,

    decay_rate=0.9

)

optimizer = tf.keras.optimizers.Adam(learning_rate=lr_schedule)

# Compile the model with the new optimizer and loss function

custom_inception.compile(optimizer=optimizer, loss='categorical_crossentropy', metrics=['accuracy'])

# Implement early stopping

early_stopping = tf.keras.callbacks.EarlyStopping(monitor='val_loss', patience=5, restore_best_weights=True)

# Train the model

history = custom_inception.fit(

    train_generator,

    epochs=100, # Increase the number of epochs

    validation_data=validation_generator,

    callbacks=[early_stopping]

)

model=custom_inception.evaluate(test_generator)

model=custom_inception.evaluate(validation_generator)

model=custom_inception.evaluate(train_generator)

class_dict = list(test_generator.class_indices.keys())

print(class_dict)
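The ExponentialDecay schedule used in the training code above shrinks the learning rate smoothly as training steps accumulate. As a rough illustration, the formula it applies when staircase mode is off (the default) can be written in plain Python. The function name `exponential_decay` is hypothetical; the default values are the ones passed to the schedule above.

```python
def exponential_decay(step, initial_learning_rate=1e-4, decay_steps=1000, decay_rate=0.9):
    """Learning rate after `step` optimizer steps, without staircase rounding."""
    return initial_learning_rate * decay_rate ** (step / decay_steps)


# Print the learning rate at a few step counts.
for step in (0, 500, 1000, 5000):
    print(step, exponential_decay(step))
```

With these settings the rate is multiplied by 0.9 for every 1000 optimizer steps, where one step is one batch, not one epoch.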

Model Prediction:

import numpy as np

from tensorflow.keras.preprocessing import image

import matplotlib.pyplot as plt

# Load and preprocess the new image

new_image_path = '/kaggle/working/Data/age_related_macular_degeneration/1862_right.jpg' # Replace with the actual path to your new image

new_image = image.load_img(new_image_path, target_size=(299, 299))

new_image = image.img_to_array(new_image)

new_image = np.expand_dims(new_image, axis=0)

new_image = new_image / 255.0 # Normalize the pixel values

# Make a prediction using the custom InceptionV3 model

prediction = custom_inception.predict(new_image)

# Get the predicted class label

predicted_class_index = np.argmax(prediction)

predicted_class = class_names[predicted_class_index]

# Display the original image

plt.figure(figsize=(10, 5))

plt.subplot(1, 2, 1)

plt.imshow(image.load_img(new_image_path))

plt.title('Original Image')

# Display the preprocessed image with the predicted label

plt.subplot(1, 2, 2)

plt.imshow(new_image[0])

plt.title(f'Predicted as {predicted_class}')

plt.show()
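The step from the model's output to a class name is just "take the position of the largest probability and look it up". Here is a small plain-Python sketch of that step. The probabilities are made up, the helper `top_class` is a hypothetical name, and the class order is an assumption: it is the alphabetical order of the folder names created earlier in this post.

```python
# Assumed class order: the folder names from this post, sorted alphabetically.
class_names = [
    "age_related_macular_degeneration",
    "cataract",
    "glaucoma",
    "normal",
    "other_diseases",
]


def top_class(probabilities, names):
    """Return (name, probability) for the highest-scoring class."""
    index = max(range(len(probabilities)), key=lambda i: probabilities[i])
    return names[index], probabilities[index]


# Made-up softmax output for a single image.
prediction = [0.05, 0.10, 0.70, 0.10, 0.05]
print(top_class(prediction, class_names))
```

Returning the probability along with the name makes it easier to spot low-confidence predictions.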

filepath='Ocular_disease.h5'

custom_inception.save(filepath)

m=keras.models.load_model("/kaggle/input/new-model-with-accuracy-5/Ocular_disease.h5")

acc=m.evaluate(validation_generator,verbose=1)[1]

print(f"Test accuracy of your model is = {acc*100} %")

from sklearn.metrics import accuracy_score, precision_score, recall_score, f1_score

import numpy as np

from tensorflow.keras.preprocessing import image

# Load and preprocess the new image

new_image_path = '/kaggle/input/ocular-disease-recognition-odir5k/preprocessed_images/0_left.jpg' # Replace with the actual path to your new image

new_image = image.load_img(new_image_path, target_size=(299, 299))

new_image = image.img_to_array(new_image)

new_image = np.expand_dims(new_image, axis=0)

new_image = new_image / 255.0 # Normalize the pixel values

# Make a prediction using the custom InceptionV3 model

prediction = custom_inception.predict(new_image)

# Get the predicted class label

predicted_class_index = np.argmax(prediction)

# Print the predicted class label

print("Predicted Class:", class_names[predicted_class_index])

YouTube video: https://youtu.be/0i5pyRdgh1A