A handful of college students who were part of OpenAI's testing cohort, hailing from Princeton, Wharton, and the University of Minnesota, shared positive reviews of Study Mode, saying it did a good job of checking their understanding and adapting to their pace.

The learning approaches that OpenAI has programmed into Study Mode, which are based partly on Socratic methods, appear sound, says Christopher Harris, an educator in New York who has created a curriculum aimed at AI literacy. They could give educators more confidence about allowing, and even encouraging, their students to use AI. "Professors will see this as working with them in support of learning as opposed to just being a way for students to cheat on assignments," he says.

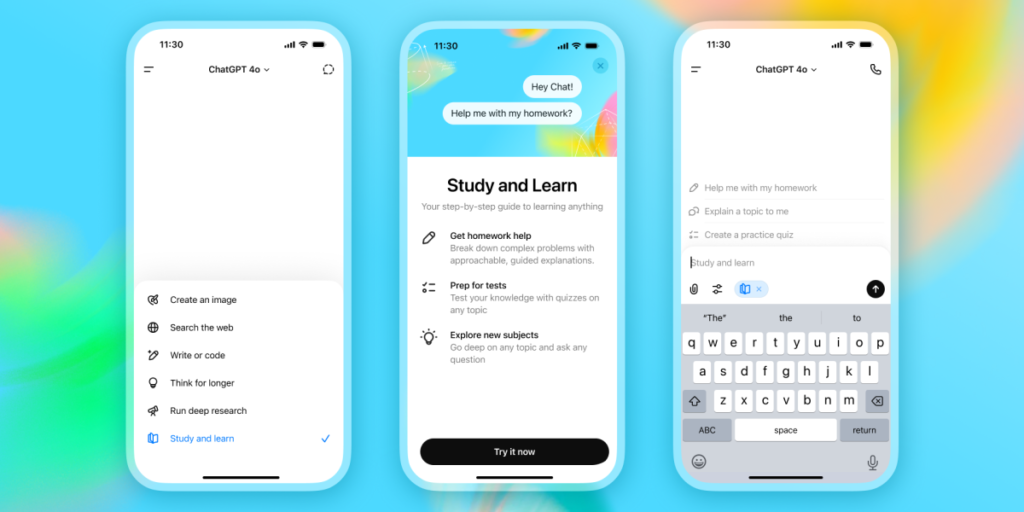

But there's a more ambitious vision behind Study Mode. As demonstrated in OpenAI's recent partnership with major teachers' unions, the company is currently trying to rebrand chatbots as tools for personalized learning rather than cheating. Part of this promise is that AI will act like the expensive human tutors that currently only the most well-off students' families can typically afford.

"We can begin to close the gap between those with access to learning resources and high-quality education and those who have been historically left behind," says Leah Belsky, OpenAI's head of education.

But painting Study Mode as an education equalizer obscures one glaring problem. Under the hood, it's not a tool trained exclusively on academic textbooks and other approved materials. It's more like the same old ChatGPT, tuned with a new conversation filter that simply governs how it responds to students, encouraging fewer answers and more explanations.

This AI tutor, therefore, more closely resembles what you'd get if you hired a human tutor who has read every required textbook, but also every flawed explanation of the subject ever posted to Reddit, Tumblr, and the farthest reaches of the web. And because of the way AI works, you can't expect it to distinguish right information from wrong.

Professors encouraging their students to use it run the risk of it teaching them to approach problems the wrong way, or worse, of their students being taught material that is fabricated or entirely false.