I’m currently working as a Machine Learning researcher at the University of Iowa, and the modality I work with is audio. Since I’m just starting the project, I’ve been reading current state-of-the-art papers and other related work to understand the landscape. Although this paper isn’t about audio, its method could be applied to the audio modality. The paper was released by Google Research and has become influential in Supervised Contrastive Learning (as the title states…).

Contrastive learning first proved successful in self-supervised learning. More recently, batch contrastive approaches have been outperforming traditional contrastive losses such as triplet, max-margin, and N-pairs loss. This paper applies batch contrastive learning to the supervised setting.

Cross-entropy is the most widely used loss for supervised classification and is still used in many state-of-the-art models for ImageNet. It does have some issues, though:

- Lack of robustness to noisy labels.

- Poor margins.

- Reduced generalization performance.

General Idea of Contrastive Learning:

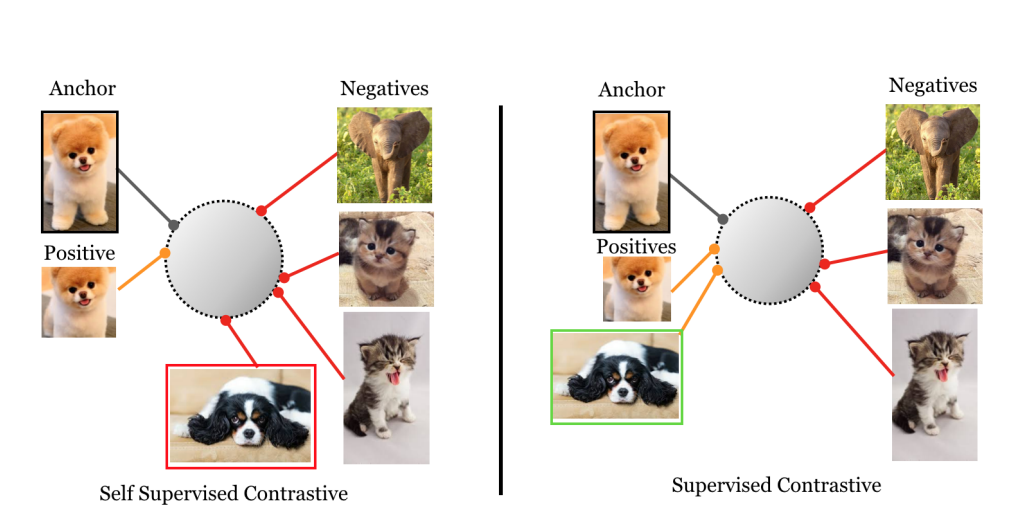

Pull an anchor and a positive sample together in the embedding space, while pushing the anchor away from negative samples.

- In self-supervised learning, augmented views of the same sample form the positive pair, while other samples from the minibatch serve as negatives.

The new method proposed in this paper is the Supervised Contrastive Loss (SupConLoss). SupConLoss is a generalization of the triplet and N-pair losses:

- Triplet loss uses one positive and one negative per anchor (sample).

- N-pair loss uses one positive and many negatives per anchor.
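To make the difference in positives and negatives concrete, here is a minimal NumPy sketch of both losses for a single anchor. It follows their commonly used forms (a hinge on squared Euclidean distances for triplet loss, and log(1 + Σₖ exp(f·fₖ − f·f⁺)) for N-pair loss). The function names and the margin value are illustrative assumptions, not something taken from the SupCon paper.

```python
import numpy as np

def triplet_loss(anchor, positive, negative, margin=1.0):
    """Triplet loss for one anchor: exactly one positive and one negative.

    Hinge on squared Euclidean distances; `margin` is an illustrative value.
    """
    d_pos = np.sum((anchor - positive) ** 2)
    d_neg = np.sum((anchor - negative) ** 2)
    return float(max(0.0, d_pos - d_neg + margin))

def n_pair_loss(anchor, positive, negatives):
    """N-pair loss for one anchor: one positive, many negatives.

    negatives: (K, d) array. Computes log(1 + sum_k exp(a.n_k - a.p)),
    a softmax-style loss that ranks the positive above every negative.
    """
    pos_sim = anchor @ positive
    neg_sims = negatives @ anchor  # shape (K,)
    return float(np.log1p(np.sum(np.exp(neg_sims - pos_sim))))

anchor = np.array([1.0, 0.0])
positive = np.array([0.9, 0.1])
negative = np.array([0.0, 1.0])
print(triplet_loss(anchor, positive, negative))  # 0.0: the margin is already satisfied
print(n_pair_loss(anchor, positive, np.stack([negative, -anchor])))
```

Adding rows to `negatives` can only increase the N-pair loss, while the triplet loss never sees more than one negative at a time.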

Let i be the index of an arbitrary augmented sample, and j(i) the index of the other augmented sample from the same source sample, so j(i) is the positive for anchor i. z is the normalized embedding produced by the encoder followed by a projection head. With 2N augmented samples in the batch, each anchor has 1 positive and 2N-2 negatives, so the denominator has 2N-1 terms: the positive plus all the negatives.
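For reference, the self-supervised loss described in this paragraph (Equation 1 in the paper) can be written in the paper’s notation. Here I is the set of all 2N augmented samples, A(i) = I \ {i}, and τ is a temperature hyperparameter that the paragraph above does not mention:

```latex
\mathcal{L}^{\text{self}}
  = \sum_{i \in I} \mathcal{L}^{\text{self}}_i
  = -\sum_{i \in I} \log
    \frac{\exp\left(z_i \cdot z_{j(i)} / \tau\right)}
         {\sum_{a \in A(i)} \exp\left(z_i \cdot z_a / \tau\right)}
```

The single positive j(i) appears in both the numerator and the denominator, and the denominator’s sum over A(i) has 2N-1 terms.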

Issue: The self-supervised contrastive loss cannot handle the case where multiple samples belong to the same class, since it uses only one positive per anchor and no labels.

The authors propose two forms of the supervised contrastive loss, which differ in where the normalization over positives is applied.
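Written out in the paper’s notation, the two variants are shown below. A(i) is every index in the batch except i, τ is a temperature, the z are normalized embeddings, and P(i) is the positive set defined in the next paragraph, with size |P(i)|:

```latex
\begin{aligned}
\mathcal{L}^{\text{sup}}_{\text{out}}
  &= \sum_{i \in I} \frac{-1}{|P(i)|} \sum_{p \in P(i)}
     \log \frac{\exp(z_i \cdot z_p / \tau)}{\sum_{a \in A(i)} \exp(z_i \cdot z_a / \tau)}
  && (2) \\
\mathcal{L}^{\text{sup}}_{\text{in}}
  &= \sum_{i \in I} -\log \left\{ \frac{1}{|P(i)|} \sum_{p \in P(i)}
     \frac{\exp(z_i \cdot z_p / \tau)}{\sum_{a \in A(i)} \exp(z_i \cdot z_a / \tau)} \right\}
  && (3)
\end{aligned}
```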

P(i) is the set of all positives in the batch distinct from i, that is, every other sample sharing the anchor’s label. In Equation 2 (L_out) the summation over positives sits outside the log, and in Equation 3 (L_in) it sits inside the log. Both losses share the following properties:

- Generalization to an arbitrary number of positives: the loss uses all positives in the numerator rather than just one, which encourages all entries from the same class to cluster together in the representation space.

- Contrastive power increases with more negatives: with more negatives in the denominator, the loss is better able to discriminate between positive and negative pairs.

- Hard positives and hard negatives produce larger gradients than easy ones, so training focuses on the difficult examples without explicit hard-example mining.
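Both losses can be sketched in a few lines of NumPy. This is a minimal, unoptimized illustration of Equations 2 and 3, not the authors’ released implementation. The function name `supcon_losses`, the default temperature, and summing (rather than averaging) over anchors are illustrative choices.

```python
import numpy as np

def supcon_losses(z, labels, tau=0.1):
    """Return (L_out, L_in), each summed over every anchor i in the batch.

    z:      (M, d) embeddings, one row per augmented sample (M = 2N for two views).
    labels: (M,) integer class labels; every anchor must have at least one positive.
    tau:    temperature; 0.1 is an illustrative default, not a prescribed value.
    """
    z = z / np.linalg.norm(z, axis=1, keepdims=True)  # unit-normalize each embedding
    logits = z @ z.T / tau                             # z_i . z_a / tau for all pairs
    m = len(labels)
    not_self = ~np.eye(m, dtype=bool)                  # A(i): every index except i

    # Subtract the row max for numerical stability (it cancels in the ratio).
    logits = logits - logits.max(axis=1, keepdims=True)
    denom = (np.exp(logits) * not_self).sum(axis=1, keepdims=True)
    log_prob = logits - np.log(denom)                  # log of the fraction in Eq. 2/3

    # P(i): samples with the anchor's label, excluding the anchor itself.
    pos_mask = (labels[:, None] == labels[None, :]) & not_self
    n_pos = pos_mask.sum(axis=1)

    # L_out: average the log-fractions over P(i) (sum outside the log).
    l_out = -(log_prob * pos_mask).sum(axis=1) / n_pos
    # L_in: log of the averaged fractions over P(i) (sum inside the log).
    l_in = -np.log((np.exp(log_prob) * pos_mask).sum(axis=1) / n_pos)
    return float(l_out.sum()), float(l_in.sum())

rng = np.random.default_rng(0)
z = rng.normal(size=(6, 8))            # 2N = 6 views of N = 3 source samples
labels = np.array([0, 0, 1, 0, 0, 1])  # views i and i + 3 come from the same source
l_out, l_in = supcon_losses(z, labels)
print(l_out >= l_in)                   # True: L_out upper-bounds L_in
```

With labels that are unique per source sample (e.g. [0, 1, 2, 0, 1, 2]), each anchor’s only positive is its augmented twin. In that case both values collapse to the self-supervised loss, which is a handy sanity check for the sketch.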

The two formulations are not equivalent, since log is a concave function. By Jensen’s inequality:

- If you average first and then take the log, you get a higher value.

- If you take the log first and then average, you get a lower value.
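Applied to one anchor i, write r_{ip} for the fraction exp(z_i·z_p/τ) / Σ_{a∈A(i)} exp(z_i·z_a/τ) that both losses share (r_{ip} is shorthand introduced here). The gap between the two losses is then exactly Jensen’s gap for the log:

```latex
\mathcal{L}^{\text{sup}}_{\text{out},i} - \mathcal{L}^{\text{sup}}_{\text{in},i}
  = \log\Big(\frac{1}{|P(i)|} \sum_{p \in P(i)} r_{ip}\Big)
  - \frac{1}{|P(i)|} \sum_{p \in P(i)} \log r_{ip} \;\ge\; 0
```

Equality holds only when all r_{ip} for an anchor are equal, for example when |P(i)| = 1. This is why the two variants coincide in the self-supervised case.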

Thus L_out ≥ L_in. L_out is the superior loss function, and it also performed better than L_in on ImageNet Top-1 classification.

L_in has a structure that is less suitable for training. Because the 1/|P(i)| normalization sits inside the log in L_in, it only contributes an additive constant to the loss and therefore does not affect the gradient. Without that normalization effect, the gradient is more susceptible to bias in the positives. For the rest of the paper, the authors use the L_out loss.
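One way to see this is to write s_a = z_i·z_a/τ for the logits of anchor i (shorthand introduced here), expand L_in for that anchor, and differentiate both variants with respect to a positive logit s_p, p ∈ P(i):

```latex
\begin{aligned}
\mathcal{L}^{\text{sup}}_{\text{in},i}
  &= \log |P(i)| - \log \sum_{p' \in P(i)} e^{s_{p'}} + \log \sum_{a \in A(i)} e^{s_a} \\
\frac{\partial \mathcal{L}^{\text{sup}}_{\text{out},i}}{\partial s_p}
  &= -\frac{1}{|P(i)|} + \frac{e^{s_p}}{\sum_{a \in A(i)} e^{s_a}} \\
\frac{\partial \mathcal{L}^{\text{sup}}_{\text{in},i}}{\partial s_p}
  &= -\frac{e^{s_p}}{\sum_{p' \in P(i)} e^{s_{p'}}} + \frac{e^{s_p}}{\sum_{a \in A(i)} e^{s_a}}
\end{aligned}
```

The log|P(i)| term drops out of the gradient. In L_out each positive is pulled toward the anchor with the same weight 1/|P(i)|. In L_in the weight is a softmax over the positives themselves, so positives already close to the anchor receive a larger share of the pull. This is one reading of the bias in the positives.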