By Erica Duh, Henry Keeler, Zeke Buckholz

Purpose

This course has introduced us to a variety of applications for artificial neural networks. After reviewing the projects from previous semesters, our group became interested in exploring how well neural networks can draw abstract conclusions from visual data. One blog post, which focused on classifying movie genres based on poster images, led us to wonder: would neural networks perform better or worse if the medium were switched from movies to video games? This blog post studies video game genre classification through images of video game posters, using four convolutional neural network architectures across 1,000 video games and 10 genre classes.

Data

We used a video games dataset from Kaggle with over 64,000 entries on video games. From this dataset we were able to extract each game's box cover image and its genre. Because the dataset had 20 different genres, with the majority of entries labeled “misc”, we decided to take the top 10 genres (Action, Adventure, Role-Playing, Sports, Shooter, Platform, Strategy, Puzzle, Racing, Simulation) and train our model(s) on those. We used the first 1,000 data points.
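The genre filtering can be sketched in plain Python. This is a minimal illustration, not the project's code: it assumes the dataset has already been reduced to hypothetical `(image_url, genre)` pairs, ranks genres by frequency while ignoring “misc”, and assumes the 1,000-row cut is applied after filtering (the post does not say which comes first).

```python
from collections import Counter

def select_top_genres(rows, k=10, exclude=("misc",), limit=1000):
    """Keep the first `limit` rows whose genre is among the k most common genres.

    `rows` is assumed to be a list of (image_url, genre) pairs. Genres listed in
    `exclude` are compared case-insensitively and never count toward the top k.
    """
    excluded = {g.lower() for g in exclude}
    counts = Counter(genre for _, genre in rows if genre.lower() not in excluded)
    top = {genre for genre, _ in counts.most_common(k)}
    # Preserve dataset order, then truncate to the first `limit` matching rows.
    kept = [(url, genre) for url, genre in rows if genre in top]
    return kept[:limit], top
```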

For our X values, we wanted to use the images of the video game box art. Since our images were listed as URLs in the dataset, we had to download and process each one by fetching it from the website, resizing it to a standard size of 200×200 pixels, and then converting it to a 3D numpy array of shape (200, 200, 3) so that its height, width, and RGB color channels are preserved. We then stacked these arrays into a single numpy array X to be used as our input values. For our Y values, we wanted to use the genre labels, so we encoded the string labels (Action, Adventure, Role-Playing, Sports, Shooter, Platform, Strategy, Puzzle, Racing, Simulation) as numbers that could be used by our models. We built a mapping from each genre to its encoded number to make decoding clearer later in the process.
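A genre-to-number mapping of the kind described above might look like the sketch below. The order of `GENRES` (and therefore which number each genre gets) is an assumption; the post does not give the actual encoding.

```python
# The ten genres listed in the post; this ordering is an illustrative assumption.
GENRES = ["Action", "Adventure", "Role-Playing", "Sports", "Shooter",
          "Platform", "Strategy", "Puzzle", "Racing", "Simulation"]

# Forward and inverse lookup tables between genre names and integer labels.
GENRE_TO_ID = {genre: i for i, genre in enumerate(GENRES)}
ID_TO_GENRE = {i: genre for genre, i in GENRE_TO_ID.items()}

def encode(labels):
    """Map genre strings to integer class ids (raises KeyError on unknown genres)."""
    return [GENRE_TO_ID[label] for label in labels]

def decode(ids):
    """Map integer class ids (e.g. the argmax of a model's output) back to genre names."""
    return [ID_TO_GENRE[int(i)] for i in ids]
```

Keeping both directions in dictionaries means predictions can be turned back into readable genre names without re-deriving the encoding.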

Logistic Regression Classifier

We first used a logistic regression classifier to categorize our images, as a baseline for comparison with our more complex models. First we had to flatten the images and normalize their pixel values so that they could be used as input to the model. Running this model, we got a test accuracy of 34.40%. We also computed a confusion matrix to break down which genres the model was classifying correctly. We recognize that this model's performance is limited because it is a linear classifier and does not take in spatial information (we have to flatten the images in order to use them as input). After this model, we move on to CNNs and transfer learning in order to take advantage of the spatial information in our images.
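The flattening, normalization, and confusion-matrix steps can be sketched with NumPy alone. This is an assumption-laden illustration: the post does not say which logistic regression implementation was used, and a classifier such as scikit-learn's `LogisticRegression` could be fit on the flattened rows. Each 200×200×3 image becomes a vector of 120,000 features, with no notion of which pixels are neighbors.

```python
import numpy as np

def flatten_and_normalize(images):
    """Turn an (N, 200, 200, 3) uint8 image stack into an (N, 120000) float array in [0, 1]."""
    images = np.asarray(images, dtype=np.float32)
    return images.reshape(len(images), -1) / 255.0

def confusion_matrix(y_true, y_pred, n_classes=10):
    """Rows are true genres, columns are predicted genres; entry [i, j] counts class i predicted as j."""
    cm = np.zeros((n_classes, n_classes), dtype=np.int64)
    # np.add.at accumulates correctly even when the same (i, j) pair appears many times.
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm
```

Overall accuracy is the trace of the matrix divided by its sum, and each row shows where one genre's posters end up.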

Convolutional Neural Network Model

We also built a convolutional neural network to tackle this task. Our first convolutional layer uses 32 filters of size 5×5 and is followed by a ReLU activation function to detect basic features of the posters, such as edges. Next, our CNN uses a 2×2 max pooling layer to make feature extraction more efficient going into the second convolutional layer, which has 64 filters (also of size 5×5). We then included a dropout layer to prevent overfitting before flattening the output into a vector for the fully connected layer. This dense layer has 256 fully connected nodes and uses ReLU activation to combine the extracted features for classification. A second dropout layer was added after this step for regularization, and our final layer is a softmax output layer that produces a probability distribution over the genres for each game poster.
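To see where this architecture's capacity sits, the feature-map sizes and parameter counts can be worked out by hand. The sketch below assumes Keras-style defaults that the post does not state: 'valid' (no) padding, stride-1 convolutions, and 2×2 pooling with stride 2, on 200×200×3 inputs.

```python
def conv_out(size, kernel, stride=1):
    """Spatial size after a 'valid' (unpadded) convolution or pooling window."""
    return (size - kernel) // stride + 1

def cnn_param_report(h=200, w=200, c=3, n_classes=10):
    """Return (flattened feature size, parameters per layer) under the stated assumptions."""
    params = {}
    # Conv 1: 32 filters of 5x5 over c input channels, plus one bias per filter.
    params["conv1"] = 5 * 5 * c * 32 + 32
    h, w = conv_out(h, 5), conv_out(w, 5)            # 200 -> 196
    # 2x2 max pooling with stride 2 has no parameters.
    h, w = conv_out(h, 2, 2), conv_out(w, 2, 2)      # 196 -> 98
    # Conv 2: 64 filters of 5x5 over 32 input channels.
    params["conv2"] = 5 * 5 * 32 * 64 + 64
    h, w = conv_out(h, 5), conv_out(w, 5)            # 98 -> 94
    flat = h * w * 64                                # dropout and flatten add no parameters
    params["dense"] = flat * 256 + 256
    params["softmax"] = 256 * n_classes + n_classes
    return flat, params
```

Under these assumptions the flattened vector has 565,504 entries and the model has about 144.8 million parameters, more than 99.9% of them in the 256-unit dense layer; with 'same' padding the flattened size would instead be 100×100×64 = 640,000. A model this large relative to 500 training posters is one plausible contributor to the overfitting the post reports.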

This CNN was trained for 10 epochs on half of our dataset (500 posters). The model improved its training accuracy from 14.7% to 96% by the final epoch, which indicates that it successfully learned to classify the training data. However, the validation accuracy peaked at 39.8% and even declined in later epochs. This gap between training and validation performance suggests that the model began to overfit the training data after a few epochs. To improve this model's performance, we could try expanding the dataset, using more regularization techniques, or applying data augmentation.
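Data augmentation, one of the remedies mentioned above, can be sketched with NumPy. This is a hypothetical example, not something the post reports using for this CNN: it applies a small random shift (reflect-pad, then crop back to the original size) and a random brightness change to an image with values in [0, 1]. Horizontal flips are deliberately left out because posters often contain text, which a flip would mirror.

```python
import numpy as np

def augment(image, rng, max_shift=10, brightness=0.2):
    """Randomly shift and brighten one (H, W, 3) image with values in [0, 1].

    Shift: reflect-pad by max_shift pixels on each side, then crop back to H x W
    at a random offset. Brightness: multiply by a factor drawn from
    [1 - brightness, 1 + brightness], then clip to [0, 1].
    """
    h, w, _ = image.shape
    padded = np.pad(image, ((max_shift, max_shift), (max_shift, max_shift), (0, 0)),
                    mode="reflect")
    top = rng.integers(0, 2 * max_shift + 1)   # upper bound is exclusive
    left = rng.integers(0, 2 * max_shift + 1)
    shifted = padded[top:top + h, left:left + w]
    factor = rng.uniform(1 - brightness, 1 + brightness)
    return np.clip(shifted * factor, 0.0, 1.0)
```

Applying a fresh random augmentation each epoch means the network rarely sees the exact same pixels twice, which makes memorizing 500 posters harder.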

Transfer Learning Method 1: Training Output Layer Only

For our next model, we used transfer learning. Our base model is DenseNet, which contains all of the feature extraction learned from training on ImageNet, a massive dataset of images. We chose this base model because we wanted its feature-extraction capabilities for our own images while working with a much smaller dataset. So we used the entire DenseNet model, minus its final output classification layer, and froze all of its layers and their weights. We then added our own classification layer to classify the images into 1 of 10 video game genres. Only this layer is trained on the video game images. It is a Dense (fully connected) layer with 10 units, one for each genre, and uses a softmax activation to estimate how likely each image is to belong to each genre.
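The trainable part of this setup is small enough to write out directly. The NumPy sketch below assumes the base is DenseNet121 (the model named in Method 3) with global average pooling, which yields a 1,024-dimensional feature vector per image; the pooling choice is not stated in the post. The random weights are placeholders for what training would learn.

```python
import numpy as np

FEATURE_DIM = 1024   # DenseNet121's final feature channels, after global average pooling (assumed)
N_CLASSES = 10

def softmax(logits):
    """Numerically stable softmax over the last axis."""
    shifted = logits - logits.max(axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)

def head_predict(features, W, b):
    """The only trained layer: a dense map from frozen features to genre probabilities."""
    return softmax(features @ W + b)

# Placeholder (untrained) head parameters: 1024 * 10 weights + 10 biases = 10,250 values.
rng = np.random.default_rng(0)
W = rng.normal(scale=0.01, size=(FEATURE_DIM, N_CLASSES))
b = np.zeros(N_CLASSES)
```

In Keras, the frozen feature extractor would typically come from `tf.keras.applications.DenseNet121(include_top=False, weights="imagenet", pooling="avg")` with `trainable` set to `False`, followed by a `Dense(10, activation="softmax")` layer; only that layer's 10,250 parameters are updated.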

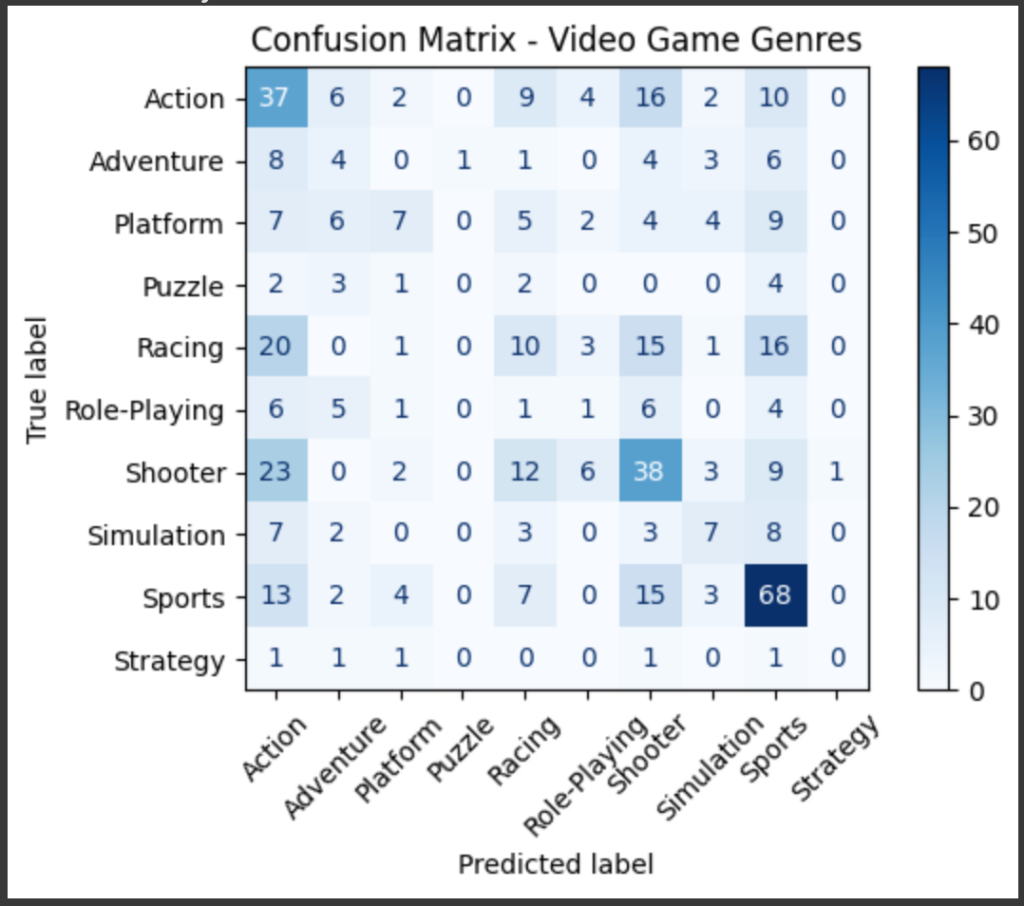

With this model, our top-1 accuracy is 50.80% and our top-3 accuracy is 79.60%. In analyzing the confusion matrix, we noticed that the model performed significantly better on certain genres, such as Sports, Racing, and Shooter games. This suggests that DenseNet's pretrained filters, learned on ImageNet (which contains many real-world objects), are particularly effective at recognizing distinct visual elements like cars, weapons, and sports equipment that commonly appear in the cover art of these genres. The strong performance on these categories illustrates how transfer learning leverages prior feature-extraction knowledge, especially when the target dataset shares visual similarities with the original ImageNet data. Conversely, genres that lack such clear, object-level visual cues (e.g., Puzzle or Strategy games) may benefit less from these pretrained features, resulting in higher misclassification rates.
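Top-k accuracy counts a prediction as correct when the true genre is anywhere among the model's k most probable genres. A minimal NumPy sketch follows; for context, a uniform random guess over 10 classes would score 10% top-1 and 30% top-3.

```python
import numpy as np

def top_k_accuracy(probs, y_true, k=3):
    """Fraction of samples whose true class is among the k highest-probability classes."""
    probs = np.asarray(probs)
    top_k = np.argsort(probs, axis=1)[:, -k:]       # indices of the k largest scores per row
    hits = (top_k == np.asarray(y_true)[:, None]).any(axis=1)
    return hits.mean()
```

With `k=1` this reduces to ordinary accuracy; raising `k` rewards a model whose second or third guess is right, which is informative when genres overlap visually.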

Transfer Learning Method 2: Training Multiple Layers with Reduced Learning Rate

To build upon the initial transfer learning approach, we experimented with fine-tuning. After training the new classification layer on top of the frozen DenseNet base, we selectively unfroze the last few convolutional blocks of the DenseNet model so that these higher-level feature representations could adapt more closely to the specific characteristics of video game images. This fine-tuning step used a significantly reduced learning rate, so that the pretrained weights would be updated gradually without overwriting the valuable generic image features learned from ImageNet. While this approach led to near-perfect training accuracy, the validation and test accuracy plateaued around 40–50%, indicating clear overfitting. This performance gap highlights the limitations of fine-tuning on a relatively small and possibly imbalanced dataset, and suggests the need for techniques such as data augmentation, early stopping, or smaller base models to improve generalization.
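The freeze-then-unfreeze pattern can be sketched framework-agnostically. In this hypothetical example, `Layer` is a stand-in for a framework layer with a `trainable` flag, and the two learning rates are illustrative values, not the settings used in the post.

```python
from dataclasses import dataclass

@dataclass
class Layer:
    """Hypothetical stand-in for a framework layer that has a `trainable` flag."""
    name: str
    trainable: bool = True

def set_fine_tune(layers, n_unfrozen):
    """Freeze every layer except the last `n_unfrozen` ones."""
    cutoff = len(layers) - n_unfrozen
    for i, layer in enumerate(layers):
        layer.trainable = i >= cutoff
    return layers

# Two-phase schedule; both values are illustrative assumptions.
HEAD_LR = 1e-3       # phase 1: base fully frozen, train only the new classification head
FINE_TUNE_LR = 1e-5  # phase 2: top blocks unfrozen, take much smaller steps
```

Keras layers expose the same `trainable` attribute, so the loop can run over a base model's `layers` list; after changing it, the model has to be compiled again (with an optimizer using the smaller learning rate) before the change takes effect in training.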

Transfer Learning Method 3: Incorporating L2 Regularization to Prevent Overfitting

Building on the previous approach, we introduced L2 regularization into our DenseNet121 model to combat the overfitting observed during fine-tuning. Instead of unfreezing the last layers as before, this time we kept all layers of the DenseNet base frozen and trained only the custom classification head. L2 regularization helps prevent overfitting by penalizing large weight values, encouraging the model to learn simpler, more generalizable patterns. The model was trained for 15 epochs, and while the training accuracy increased to nearly 70%, the validation accuracy hovered around 50%, with little change in validation loss. This trend continued into testing, where we observed a final test accuracy of 50.60%. Although freezing the base and using L2 regularization slightly reduced overfitting compared to Method 2, the overall performance was still limited, most likely because of the dataset's small size and class imbalance. Incorporating more regularization techniques like data augmentation, or trying smaller, more efficient architectures, could help improve generalization further.
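The L2 penalty simply adds a multiple of the squared weights to the classification loss. The NumPy sketch below shows that loss; the strength `lam` is an illustrative assumption, since the post does not report the value used.

```python
import numpy as np

def cross_entropy(probs, y_true):
    """Mean negative log-likelihood of the true classes (small epsilon avoids log(0))."""
    probs = np.asarray(probs)
    idx = np.arange(len(probs))
    return -np.mean(np.log(probs[idx, y_true] + 1e-12))

def l2_penalized_loss(probs, y_true, weights, lam=1e-3):
    """Cross-entropy plus lam * (sum of squared weights over all penalized weight arrays)."""
    penalty = lam * sum(np.sum(np.square(w)) for w in weights)
    return cross_entropy(probs, y_true) + penalty
```

The penalty adds `2 * lam * w` to each weight's gradient, steadily pulling weights toward zero unless the data pushes back; this is the same `lam * sum(w**2)` form that Keras' `regularizers.l2` adds to the training loss.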

Transfer Learning Method 4: New Architecture

After not getting great results when training and testing our other models, we wanted to compare them to something different. To do this, we asked ChatGPT to help us build an optimized model using a different architecture than DenseNet121. It suggested using EfficientNetB0 together with techniques like early stopping, dropout, data augmentation, and L2 regularization. We trained the model on 5,000 total images, half for training and half for testing. Although we set it to run for 30 epochs, early stopping kicked in at epoch 25. The final test accuracy was 51.52%. During training, however, both the training and validation accuracy were around 75–80% toward the end, which was encouraging. The confusion matrix also showed solid results. Similar to what we saw with our other models, racing and sports games were classified most accurately, which makes sense since pretrained models like DenseNet121 and EfficientNetB0 help the model recognize general visual features.
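Patience-based early stopping, as used here, can be sketched in a few lines. The post does not report which metric was monitored or the patience setting, so this hypothetical function assumes validation loss and takes patience as a parameter; it mirrors the idea behind Keras' `EarlyStopping` callback rather than its exact implementation.

```python
def early_stopping_epoch(val_losses, patience=5, min_delta=0.0):
    """Return (stop_epoch, best_epoch), both 1-indexed, for patience-based early stopping.

    Training stops once `patience` consecutive epochs fail to improve the best
    validation loss by more than `min_delta`; otherwise it runs to the last epoch.
    """
    best_loss = float("inf")
    best_epoch = 0
    waited = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss - min_delta:
            best_loss, best_epoch, waited = loss, epoch, 0   # new best: reset the counter
        else:
            waited += 1
            if waited >= patience:
                return epoch, best_epoch
    return len(val_losses), best_epoch
```

A small patience can stop just before a later improvement, while a large one wastes epochs; restoring the weights from `best_epoch` (as Keras does with `restore_best_weights=True`) keeps the best model either way.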

Difficulties

Our main difficulty was time constraints. Ideally, we would have liked to train and test on a much larger dataset. Downloading the images took a long time in itself, and training our models also took a very long time, even when only using 1,000 data points. Since we wanted to try out several different models to compare their performance, as well as adjust and retrain each of them, we stuck with 1,000 data points.

We also had difficulties processing our data. We had trouble even figuring out how to download the images. Our dataset included the images as URLs, and we had to figure out how to access them. We first tried downloading them locally, then onto the disk in Google Colab. We eventually found a workaround by downloading them into Google Colab's memory. Doing this, however, took a lot of time and was one of our most limiting difficulties. We even ended up reaching Colab's processing limit for the free plan, so we weren't able to train all of the models we wanted to.

Another difficulty we encountered was overfitting. We achieved very high training accuracy for our first transfer learning model and near-perfect training accuracy for our second transfer learning model, but much lower test accuracy, indicating overfitting. We then tried out potential solutions for this overfitting in our third transfer learning model.

Reflection

It took a very long time to download the images and then retrain the models after each small adjustment, which held us back quite a bit. This meant we did not use as large a dataset as we would have liked, and we did not get to try as many model adjustments as we would have liked. From the results we did get with our limited dataset, we think our models are promising, and with more data and more time, we think they could do better. Our top-1 accuracy remained around 50% for each of our transfer learning models, but we do think that each of our adjustments addressed the issues we targeted, and given more time we would continue improving on these adjustments. The top-3 accuracy of our first transfer learning model, which we chose to examine instead of top-5 accuracy because it is a more meaningful metric when there are only 10 categories, was very exciting at about 80% on the test set. Our confusion matrices were especially interesting since they showed that our models were good at identifying certain genres, so it was cool to see the capabilities and specializations of these model types.

GitHub Repository