Medical Image Processing

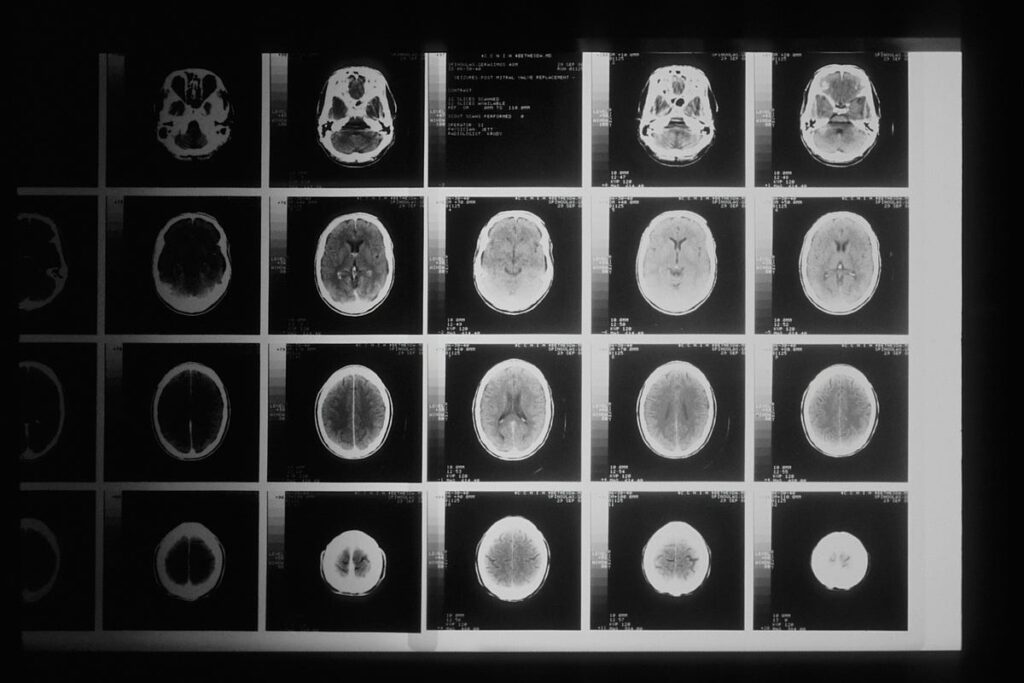

In the era of artificial intelligence-driven healthcare, the accurate and efficient analysis of medical images has become pivotal in diagnostics, treatment planning, and research. However, the raw data from imaging modalities such as MRI, CT, and ultrasound often contain noise, artefacts, or inconsistencies that can hinder the performance of AI algorithms. Preprocessing, the crucial first step in the medical imaging pipeline, transforms this raw data into a standardised, high-quality format suitable for machine learning models. This stage involves a range of techniques, from image normalisation and denoising to geometric corrections and segmentation, each tailored to enhance the fidelity and usability of the data. The choices made during preprocessing directly influence the accuracy, generalisability, and reliability of AI models. As the field of medical imaging continues to evolve, understanding the principles and best practices of preprocessing is vital for researchers and practitioners aiming to unlock the full potential of AI in medical applications. In this article, we delve into the essential steps and methodologies for preparing medical images. There are several preprocessing steps when it comes to handling medical images, but we won't cover all of them. We will mainly talk about:

- Modality-specific preprocessing

- Orientation

- Spatial resampling/resizing

- Intensity normalisation and standardisation

This is a very basic article on preprocessing for absolute beginners. We will talk about some advanced topics in future articles.

Modality-Specific Preprocessing

Different modalities capture images through different working principles. MRI uses the magnetic properties of the hydrogen nuclei in the water present in our tissues. USG (ultrasonography) uses sound waves to generate images. CT uses X-ray images to compute a 3D representation of the anatomy. I’ve talked about how CT scanners work in a simple way in this article.

After capturing the raw signal, each modality reconstructs it into a human-readable image. These reconstruction algorithms are specific to the modality. One of the reconstruction techniques is described briefly in the CT article linked above.

Another step which can be specific to the modality is denoising, since every modality introduces some noise. The final output from the modality can be further denoised with techniques such as Gaussian or median filters, non-local means denoising (used in MRI) or wavelet-based denoising, but in a lot of cases some level of denoising is already done by the modality itself.
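As a rough illustration of the Gaussian and median filters mentioned above, here is a minimal sketch using `scipy.ndimage`. The `denoise` helper, its default parameters and the synthetic noisy image are assumptions made for this example. They are not part of any scanner pipeline, and real data would need the filter strength tuned per modality.

```python
import numpy as np
from scipy import ndimage


def denoise(image, method="gaussian", sigma=1.0, size=3):
    """Denoise a 2D or 3D array with a Gaussian or a median filter.

    Hypothetical helper for illustration only.
    """
    if method == "gaussian":
        # Weighted average of neighbours; larger sigma means stronger blur.
        return ndimage.gaussian_filter(image, sigma=sigma)
    if method == "median":
        # Median of a size x size (x size) neighbourhood; robust to outliers.
        return ndimage.median_filter(image, size=size)
    raise ValueError(f"unknown method: {method}")


# Synthetic example: a flat image corrupted with Gaussian noise.
rng = np.random.default_rng(0)
clean = np.full((64, 64), 100.0)
noisy = clean + rng.normal(0.0, 10.0, size=clean.shape)

smoothed_gaussian = denoise(noisy, "gaussian", sigma=1.5)
smoothed_median = denoise(noisy, "median", size=5)

print(noisy.std(), smoothed_gaussian.std(), smoothed_median.std())
```

Both filters trade noise reduction against loss of fine detail, so the filter size or sigma is usually kept small for diagnostic images.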

Another important, and complex, preprocessing step is artefact correction. This refers to correcting the various distortions or errors which occur in the final output from the modality.

Correcting some of these artefacts is not a trivial task. Different artefacts call for different techniques, and since we intend to cover only a basic introduction to preprocessing, we won’t get into the details of those techniques.

Orientation

Correcting orientation in medical images is an important preprocessing step. The goal is to get a standardised orientation for the whole dataset, i.e. the data axes should always correspond to the same physical directions. For example, you might get a dataset where the first example is a chest X-ray whose first axis runs from top to bottom, while the next chest X-ray in the dataset has that axis running from bottom to top. This would reduce the training performance of your algorithm: it could learn inconsistent representations of the anatomical structures, and the model might fail to generalise well.

Resampling

The goal of resampling, like orientation correction, is standardisation: here, of physical resolution and size, i.e. each pixel of the data should represent the same physical size. Different scanners have different pixel spacings, resolutions, and slice thicknesses. Resampling reduces this variability for our models.
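A minimal sketch of resampling to a target spacing, assuming the current voxel spacing is known (for example from the image header). The `resample_to_spacing` helper is hypothetical and uses `scipy.ndimage.zoom` with linear interpolation. It only resamples the array and does not update an affine matrix, which a full pipeline would also need to do.

```python
import numpy as np
from scipy import ndimage


def resample_to_spacing(volume, spacing, new_spacing, order=1):
    """Resample `volume` from `spacing` to `new_spacing` (both in mm per voxel).

    Hypothetical helper: order=1 is linear interpolation. For label maps
    order=0 (nearest neighbour) is the usual choice.
    """
    zoom_factors = np.asarray(spacing, dtype=float) / np.asarray(new_spacing, dtype=float)
    return ndimage.zoom(volume, zoom_factors, order=order)


# Synthetic volume with anisotropic voxels.
volume = np.random.default_rng(0).random((64, 64, 30))
resampled = resample_to_spacing(
    volume,
    spacing=(0.9375, 0.9375, 1.2),
    new_spacing=(1.875, 1.875, 2.4),  # twice as coarse along every axis
)

print(volume.shape, "->", resampled.shape)
```

Doubling the spacing halves the number of voxels along each axis while the physical extent covered by the volume stays the same.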

Normalisation and Standardisation

Normalisation refers to rescaling the intensity values of an image to a specific range, usually [0, 1] or [-1, 1]. This ensures that all images have comparable intensity distributions regardless of their original scale. Different modalities have different intensity ranges, so it is important to normalise these intensity values so that the model gets consistent inputs. For example, min-max normalisation uses the following formula:

x_norm = (x - x_min) / (x_max - x_min)

where x_min and x_max are the minimum and maximum intensities in the image.
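The two operations named in this section's heading can be sketched in a few lines of NumPy. Min-max normalisation is as described above. Standardisation is taken here in its common z-score sense (subtract the mean, divide by the standard deviation), which is an assumption since the text does not define it. The function names and the small `eps` guard are choices made for this example.

```python
import numpy as np


def min_max_normalise(image, new_min=0.0, new_max=1.0):
    """Rescale intensities linearly to the range [new_min, new_max]."""
    image = np.asarray(image, dtype=np.float64)
    lo, hi = image.min(), image.max()
    if hi == lo:
        # Constant image: avoid division by zero.
        return np.full_like(image, new_min)
    scaled = (image - lo) / (hi - lo)
    return scaled * (new_max - new_min) + new_min


def standardise(image, eps=1e-8):
    """Z-score standardisation: zero mean and (approximately) unit variance."""
    image = np.asarray(image, dtype=np.float64)
    return (image - image.mean()) / (image.std() + eps)


pixels = np.array([[0.0, 50.0], [100.0, 200.0]])
normalised = min_max_normalise(pixels)         # values in [0, 1]
symmetric = min_max_normalise(pixels, -1, 1)   # values in [-1, 1]
standardised = standardise(pixels)

print(normalised)
print(symmetric)
print(standardised.mean(), standardised.std())
```

Min-max scaling is sensitive to outliers such as a single very bright voxel, which is one reason clipping or percentile-based ranges are often applied first.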

In CT images, for instance, windowing is done to clearly show different anatomical structures. CT images store Hounsfield Unit (HU) values, but displaying the full HU range at once reduces contrast. Windowing adjusts the brightness and contrast to highlight specific tissues, and can be applied to enhance certain anatomies such as soft tissue or lung.

Now, before getting into some examples of these preprocessing techniques, let’s talk about an important concept: the relation between the voxels in the data array and the physical coordinates.

Affine Matrix

Let’s say your image is defined by a matrix I. In this matrix a voxel is referenced by I[i,j,k], which is the position of the voxel in the data array. How do we map this voxel position in the data array to an actual physical position in the coordinate system? Each voxel has a one-to-one mapping to a point in the physical coordinate system. Physical coordinates have SI units such as mm and a standardised reference with respect to the scanner (origin and axis orientation).

To achieve the mapping from voxel indices to the physical coordinate system (and vice versa) we use an affine mapping. An affine mapping applies a linear transformation to the voxel indices, which covers scaling, rotation and shearing. These three operations are captured by a 3×3 matrix. In addition to this we add an offset (a translation). Combining the 3×3 matrix with the offset gives the 4×4 affine matrix.
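To make the 3×3-plus-offset construction concrete, here is a small NumPy sketch. The helper names `make_affine` and `voxel_to_physical`, and the example scaling and offset values, are made up for illustration. A physical point is computed as linear part times voxel index plus offset, written as one 4×4 multiplication in homogeneous coordinates.

```python
import numpy as np


def make_affine(linear, offset):
    """Combine a 3x3 linear part and a 3-vector offset into a 4x4 affine."""
    affine = np.eye(4)
    affine[:3, :3] = linear
    affine[:3, 3] = offset
    return affine


def voxel_to_physical(affine, ijk):
    """Map a voxel index (i, j, k) to physical coordinates."""
    homogeneous = np.append(np.asarray(ijk, dtype=float), 1.0)
    return (affine @ homogeneous)[:3]


# Pure scaling: voxels of 2 x 2 x 3 mm, with the array origin
# placed at (-10, -20, 5) mm in physical space.
linear = np.diag([2.0, 2.0, 3.0])
offset = np.array([-10.0, -20.0, 5.0])
affine = make_affine(linear, offset)

point = voxel_to_physical(affine, (1, 2, 3))
print(point)
```

The trailing 1 in the homogeneous vector is what lets a single matrix multiplication apply both the linear part and the offset.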

Preprocessing medical images using Python

Let’s see a few examples of preprocessing using Python. We will use images stored in the NIfTI file format to illustrate some of the techniques. First we import the required libraries.

%matplotlib inline

import nibabel as nib

import matplotlib.pyplot as plt

import numpy as np

You can download many freely available datasets for experimentation. To load a dataset with the nibabel library we use:

mr = nib.load(".nii.gz")

mr_data = mr.get_fdata()

To see the affine matrix for the dataset you can run:

print(mr.affine)

[[ 5.07338792e-02 9.42116231e-02 1.19216144e+00 -1.00070503e+02]

[-9.36126232e-01 5.32572996e-03 6.45813793e-02 1.35501907e+02]

[ 2.20677117e-04 9.32739019e-01 -1.20783418e-01 -9.81251144e+01]

[ 0.00000000e+00 0.00000000e+00 0.00000000e+00 1.00000000e+00]]

You can see that the affine matrix is a 4×4 matrix, which converts voxel positions to physical coordinates and vice versa. We will see how shortly.

To see the voxel dimensions, nibabel provides the following method:

print(mr.header.get_zooms())

(0.9375, 0.9375, 1.2000035)

The output means that the voxel size is 0.9375 mm x 0.9375 mm x 1.2 mm. To see how the dataset is oriented we can run the following:

nib.aff2axcodes(mr.affine)

('P', 'S', 'R')

The output means that the data array is oriented as follows: the first axis goes from Anterior to Posterior, the second axis goes from Inferior to Superior, and the third axis goes from Left to Right. This means that slicing along the first axis of the array gives us the coronal view, slicing along the second axis gives the axial view, and slicing along the third axis gives the sagittal view. Let’s look at all three views. For reference, the data we are working with has an array size of 256 x 256 x 150.

fig, axis = plt.subplots(1, 2)

axis[0].imshow(mr_data[20, :, :], cmap="gray")

axis[1].imshow(mr_data[140, :, :], cmap="gray")

We view the coronal slices first, so the first few slices correspond to images of the face and the later slices show images towards the back of the skull.

As you can see, the slice on the left shows the coronal view towards the front of the face (you can see the eyes) and the slice on the right is a scan of the rear part of the brain. One peculiar thing about these slices is that they are upside down. This is because, as we saw, the second axis runs from Inferior to Superior, so the first few positions along the second axis are around the neck region and the later ones cover the crown of the head.

The views along the second axis (axial view):

fig, axis = plt.subplots(1, 2)

axis[0].imshow(mr_data[:, 40, :], cmap="gray")

axis[1].imshow(mr_data[:, 180, :], cmap="gray")

And finally the sagittal view looks like:

fig, axis = plt.subplots(1, 2)

axis[0].imshow(mr_data[:, :, 50], cmap="gray")

axis[1].imshow(mr_data[:, :, 100], cmap="gray")

Now let’s convert voxel indices to physical coordinates and back using the affine matrix.

vcoords = np.array((0, 0, 0, 1))

pcoords = mr.affine @ vcoords

print(pcoords)

[-100.07050323 135.50190735 -98.12511444 1. ]

The output means that the voxel (0, 0, 0) corresponds approximately to the point (-100, 135, -98) mm in our physical coordinate system. Now let’s convert the physical coordinates back to voxel space.

vcoords = (np.linalg.inv(mr.affine) @ pcoords).round()

print(vcoords)

[0. 0. 0. 1.]

As you can see, the physical coordinates we computed are mapped back to the original voxel indices.

Now let’s look at another important preprocessing step.
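The affine printed earlier also encodes the voxel size and the axis orientation that nibabel reported. The sketch below recovers both with plain NumPy: the length of each column of the 3×3 part gives the voxel size along that array axis, and the dominant component of each column gives the anatomical direction. The `axis_codes` function is a simplified stand-in written for this example, not nibabel's actual algorithm, and can disagree with `nib.aff2axcodes` for strongly oblique acquisitions.

```python
import numpy as np

# Affine matrix copied from the output printed above.
affine = np.array([
    [5.07338792e-02, 9.42116231e-02, 1.19216144e+00, -1.00070503e+02],
    [-9.36126232e-01, 5.32572996e-03, 6.45813793e-02, 1.35501907e+02],
    [2.20677117e-04, 9.32739019e-01, -1.20783418e-01, -9.81251144e+01],
    [0.0, 0.0, 0.0, 1.0],
])


def voxel_sizes(affine):
    """Voxel size along each array axis: length of each 3x3 column."""
    return np.linalg.norm(affine[:3, :3], axis=0)


def axis_codes(affine):
    """Simplified axis codes: dominant physical direction per array axis."""
    labels = (("L", "R"), ("P", "A"), ("I", "S"))  # (negative, positive)
    codes = []
    for column in affine[:3, :3].T:
        dominant = int(np.argmax(np.abs(column)))
        codes.append(labels[dominant][int(column[dominant] > 0)])
    return tuple(codes)


sizes = voxel_sizes(affine)
codes = axis_codes(affine)
print(sizes, codes)
```

For this scan the results agree with the values reported by `mr.header.get_zooms()` and `nib.aff2axcodes(mr.affine)` above.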

Resampling

The resampling operation simply changes the dimensions of our image. For instance, our original dataset has dimensions 256 x 256 x 150, and we will now resize it to 128 x 128 x 75. The nibabel library provides a simple method to resize the data. To do this we also need to define a target voxel size and a target orientation. Here we define a target voxel size of 2 x 2 x 2 mm and keep the original PSR orientation as the target orientation.

import nibabel.processing  # the processing submodule must be imported explicitly (it requires scipy)

target_voxel_size = (2, 2, 2)

mr_resized = nib.processing.conform(mr, (128, 128, 75), target_voxel_size, orientation="PSR")

mr_resized_data = mr_resized.get_fdata()

On the left is the original image and on the right is the resized image. You can notice the change in resolution. The slices show slightly different regions of the skull even though the slice numbers are the same. Next, let’s look at some examples of windowing in CT data.

Windowing

Windowing, also known as grey-level mapping, contrast stretching, histogram modification or contrast enhancement, is the process in which the greyscale component of a CT image is manipulated. Doing this changes the appearance of the picture to highlight particular structures. The brightness of the image is adjusted via the window level, and the contrast is adjusted via the window width. Let’s see how windowing can help us visualise different structures in the same scan. As usual we load the scan using nibabel, then apply a clip function to clip values outside the range -900 to -200 HU. This highlights the structures in the lung well but compromises other structures.

# lung_ct_data is assumed to hold the CT volume in HU, loaded with nibabel as shown earlier

lung_ct_lung_window = np.clip(lung_ct_data, -900, -200)

fig, axis = plt.subplots(1, 2)

axis[0].imshow(np.rot90(lung_ct_lung_window[:,:,50]), cmap="gray")

axis[1].imshow(np.rot90(lung_ct_data[:,:,50]), cmap="gray")

Similarly, we can apply a soft-tissue window to highlight the other structures at the expense of the lungs.

lung_ct_soft_tissue_window = np.clip(lung_ct_data, -250, 250)

fig, axis = plt.subplots(1, 2)

axis[0].imshow(np.rot90(lung_ct_soft_tissue_window[:,:,50]), cmap="gray")

axis[1].imshow(np.rot90(lung_ct_soft_tissue_window[:,:,5]), cmap="gray")

Here we can see that the lungs are almost black but the other tissues are visualised well.
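The clip bounds used above can also be expressed in terms of window level and window width, as described at the start of this section. The sketch below is a hypothetical `apply_window` helper that converts a level and width into lower and upper HU bounds, clips, and rescales to [0, 1]. The rescaling step is an addition for this example and is not something the code above does. A level of -550 HU with a width of 700 HU reproduces the -900 to -200 HU lung window.

```python
import numpy as np


def apply_window(hu, level, width):
    """Clip HU values to a window and rescale the result to [0, 1].

    level: centre of the window in HU (controls brightness).
    width: full width of the window in HU (controls contrast).
    """
    lower = level - width / 2.0
    upper = level + width / 2.0
    clipped = np.clip(np.asarray(hu, dtype=np.float64), lower, upper)
    return (clipped - lower) / (upper - lower)


# A few sample HU values: air, window edge, window centre, window edge, soft tissue.
hu_values = np.array([-1000.0, -900.0, -550.0, -200.0, 40.0])

lung = apply_window(hu_values, level=-550, width=700)       # -900 .. -200 HU
soft_tissue = apply_window(hu_values, level=0, width=500)   # -250 .. 250 HU

print(lung)
print(soft_tissue)
```

Everything below the window maps to 0 (black) and everything above it maps to 1 (white), which is why soft tissue saturates in the lung window and the lungs turn black in the soft-tissue window.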