Usually, an object detection model is trained with a fixed vocabulary, meaning it can only recognize a predefined set of object categories. However, in our pipeline, since we can't predict in advance which objects will appear in the image, we need an object detection model that is flexible and capable of recognizing a wide range of object classes. To achieve this, I use the OWL-ViT model [11], an open-vocabulary object detection model. This model requires text prompts that specify the objects to be detected.

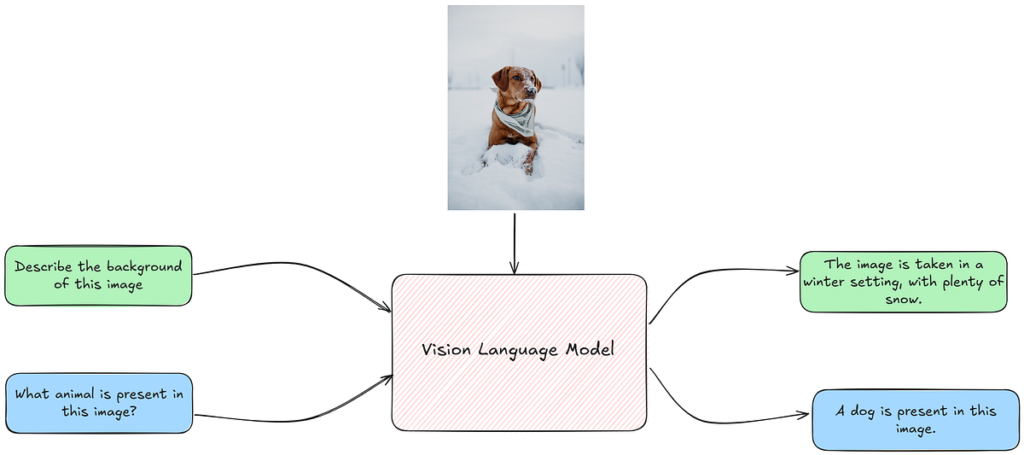

Another challenge that needs to be addressed is obtaining a high-level idea of the objects present in the image before using the OWL-ViT model, since it requires a text prompt describing the objects. This is where VLMs come to the rescue! First, we pass the image to the VLM with a prompt to identify the high-level objects in the image. These detected objects are then used as text prompts, along with the image, for the OWL-ViT model to generate detections. Next, we plot the detections as bounding boxes on the same image and pass this updated image to the VLM, prompting it to generate a caption. The code for inference is partially adapted from [12].
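The three stages described above can be sketched as a small orchestration function. This is only an illustration of the data flow, not the code used in this article: `caption_with_detection_guidance` and its three callables (`list_objects`, `detect_and_draw`, `caption`) are hypothetical names standing in for the first VLM call, the OWL-ViT step, and the final VLM call.

```python
from typing import Callable, List


def caption_with_detection_guidance(
    image_path: str,
    list_objects: Callable[[str], List[str]],
    detect_and_draw: Callable[[str, List[str]], str],
    caption: Callable[[str], str],
) -> str:
    """Run the three-stage pipeline for a single image.

    All three callables are hypothetical placeholders:
    - list_objects: VLM call that returns high-level object names.
    - detect_and_draw: open-vocabulary detector that draws the boxes and
      returns the path of the annotated image.
    - caption: VLM call that captions the annotated image.
    """
    # Stage 1: ask the VLM which objects are present in the image.
    object_names = list_objects(image_path)

    # Stage 2: turn the names into text queries and run the detector.
    text_queries = ["An image of " + name for name in object_names]
    annotated_path = detect_and_draw(image_path, text_queries)

    # Stage 3: caption the image that now contains the bounding boxes.
    return caption(annotated_path)
```

Passing the stages in as callables keeps the sketch independent of any particular VLM client or detector, so each stage can be swapped or mocked on its own.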

# Load model directly
from transformers import AutoProcessor, AutoModelForZeroShotObjectDetection

processor = AutoProcessor.from_pretrained("google/owlvit-base-patch32")
model = AutoModelForZeroShotObjectDetection.from_pretrained("google/owlvit-base-patch32")

I detect the objects present in each image using the VLM:

IMAGE_QUALITY = "high"

system_prompt_object_detection = """You are provided with an image. You must identify all important objects in the image, and provide a standardized list of objects in the image.
Return your output as follows:
Output: object_1, object_2"""

user_prompt = "Extract the objects from the provided image:"

detected_objects = process_images_in_parallel(
    image_paths,
    system_prompt=system_prompt_object_detection,
    user_prompt=user_prompt,
    model="gpt-4o-mini",
    few_shot_prompt=None,
    detail=IMAGE_QUALITY,
    max_workers=5,
)

# Strip the "Output: " prefix and split the reply into unique object names
detected_objects_cleaned = {}
for key, value in detected_objects.items():
    detected_objects_cleaned[key] = list(set([x.strip() for x in value.replace("Output: ", "").split(",")]))
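To make the cleaning step concrete, here is a minimal, self-contained sketch of parsing a single VLM reply. It is a variant rather than the exact code above: `parse_object_list` is a hypothetical helper, and keeping the original order, dropping empty fragments, and de-duplicating case-insensitively are my own assumptions about what is useful here (`list(set(...))` does not preserve order).

```python
from typing import List


def parse_object_list(response: str, prefix: str = "Output:") -> List[str]:
    """Turn a reply such as 'Output: cat, dog' into ['cat', 'dog']."""
    text = response.strip()
    # Drop the prefix only when the reply actually starts with it.
    if text.startswith(prefix):
        text = text[len(prefix):]

    names: List[str] = []
    seen = set()
    for part in text.split(","):
        name = part.strip()
        key = name.lower()
        # Skip empty fragments (e.g. from a trailing comma) and repeats.
        if name and key not in seen:
            seen.add(key)
            names.append(name)
    return names
```

For example, `parse_object_list("Output: cat, sofa, Cat, ")` returns `['cat', 'sofa']`. Stable ordering keeps the text queries, and therefore the label indices returned by the detector, reproducible between runs.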

The detected objects are now passed as text prompts to the OWL-ViT model to obtain the predictions for the images. I implement a helper function that predicts the bounding boxes for an image and then plots them on the original image.

from PIL import Image, ImageDraw, ImageFont
import numpy as np
import torch


def detect_and_draw_bounding_boxes(
    image_path,
    text_queries,
    model,
    processor,
    output_path,
    score_threshold=0.2
):
    """
    Detect objects in an image and draw bounding boxes over the original image using PIL.

    Parameters:
    - image_path (str): Path to the image file.
    - text_queries (list of str): List of text queries to process.
    - model: Pretrained model to use for detection.
    - processor: Processor to preprocess image and text queries.
    - output_path (str): Path to save the output image with bounding boxes.
    - score_threshold (float): Threshold to filter out low-confidence predictions.

    Returns:
    - output_image_pil: A PIL Image object with bounding boxes and labels drawn.
    """
    img = Image.open(image_path).convert("RGB")
    orig_w, orig_h = img.size  # original width, height

    inputs = processor(
        text=text_queries,
        images=img,
        return_tensors="pt",
        padding=True,
        truncation=True
    ).to("cpu")

    model.eval()
    with torch.no_grad():
        outputs = model(**inputs)

    # Best-matching text query per box: values and indices, each of shape (num_boxes,)
    logits = torch.max(outputs["logits"][0], dim=-1)
    scores = torch.sigmoid(logits.values).cpu().numpy()  # convert to probabilities
    labels = logits.indices.cpu().numpy()  # class indices
    boxes_norm = outputs["pred_boxes"][0].cpu().numpy()  # shape (num_boxes, 4)

    # Convert normalized (cx, cy, w, h) boxes to absolute (x1, y1, x2, y2) corners
    converted_boxes = []
    for box in boxes_norm:
        cx, cy, w, h = box
        cx_abs = cx * orig_w
        cy_abs = cy * orig_h
        w_abs = w * orig_w
        h_abs = h * orig_h
        x1 = cx_abs - w_abs / 2.0
        y1 = cy_abs - h_abs / 2.0
        x2 = cx_abs + w_abs / 2.0
        y2 = cy_abs + h_abs / 2.0
        converted_boxes.append((x1, y1, x2, y2))

    draw = ImageDraw.Draw(img)
    for score, (x1, y1, x2, y2), label_idx in zip(scores, converted_boxes, labels):
        if score < score_threshold:
            continue
        draw.rectangle([x1, y1, x2, y2], outline="red", width=3)
        label_text = text_queries[label_idx].replace("An image of ", "")
        text_str = f"{label_text}: {score:.2f}"
        # draw.textsize was removed in Pillow 10; textbbox gives the text extent
        # relative to the anchor point (default font is used here)
        _, _, text_w, text_h = draw.textbbox((0, 0), text_str)
        text_size = (text_w, text_h)
        text_x, text_y = x1, max(0, y1 - text_size[1])  # place text slightly above box
        draw.rectangle(
            [text_x, text_y, text_x + text_size[0], text_y + text_size[1]],
            fill="white"
        )
        draw.text((text_x, text_y), text_str, fill="red")  # , font=font)

    img.save(output_path, "JPEG")
    return img

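import os
from tqdm import tqdm

# Make sure the output folder exists before saving annotated images
os.makedirs("images_with_bounding_boxes", exist_ok=True)

for key, value in tqdm(detected_objects_cleaned.items()):
    value = ["An image of " + x for x in value]
    detect_and_draw_bounding_boxes(key, value, model, processor, "images_with_bounding_boxes/" + key.split("/")[-1], score_threshold=0.15)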
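The post-processing inside the helper boils down to two small computations: squashing the best per-box logit into a score with a sigmoid, and converting normalized center-format boxes into pixel corners. The following standalone sketch isolates them in plain Python so they can be checked without loading a model. The function names (`cxcywh_to_xyxy`, `sigmoid`, `filter_detections`) are illustrative and not part of any library.

```python
import math
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]


def cxcywh_to_xyxy(box: Sequence[float], width: int, height: int) -> Box:
    """Convert a normalized (cx, cy, w, h) box to absolute pixel corners."""
    cx, cy, w, h = box
    x1 = (cx - w / 2.0) * width
    y1 = (cy - h / 2.0) * height
    x2 = (cx + w / 2.0) * width
    y2 = (cy + h / 2.0) * height
    return (x1, y1, x2, y2)


def sigmoid(logit: float) -> float:
    """Map a raw logit to a score in (0, 1) without overflowing."""
    if logit >= 0:
        return 1.0 / (1.0 + math.exp(-logit))
    e = math.exp(logit)
    return e / (1.0 + e)


def filter_detections(
    logits: Sequence[Sequence[float]],
    boxes: Sequence[Sequence[float]],
    width: int,
    height: int,
    score_threshold: float = 0.2,
) -> List[Tuple[int, float, Box]]:
    """Keep (query_index, score, pixel_box) for boxes at or above the threshold.

    logits holds one row per predicted box, with one logit per text query.
    """
    kept = []
    for per_query, box in zip(logits, boxes):
        # The query with the highest logit is the predicted label for this box.
        best_idx = max(range(len(per_query)), key=per_query.__getitem__)
        score = sigmoid(per_query[best_idx])
        if score >= score_threshold:
            kept.append((best_idx, score, cxcywh_to_xyxy(box, width, height)))
    return kept
```

For a 400x200 image, the box `(0.5, 0.5, 0.25, 0.5)` maps to the corners `(150.0, 50.0, 250.0, 150.0)`. Lowering `score_threshold`, as done above with 0.15, trades more detections for more false positives.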

The images with the detected objects plotted are now passed to the VLM for captioning:

IMAGE_QUALITY = "high"

image_paths_obj_detected_guided = [x.replace("downloaded_images", "images_with_bounding_boxes") for x in image_paths]

system_prompt = """You are a helpful assistant that can analyze images and provide captions. You are provided with images that also contain bounding box annotations of the important objects in them, along with their labels.
Analyze the overall image and the provided bounding box information and provide an appropriate caption for the image."""

user_prompt = "Please analyze the following image:"

obj_det_zero_shot_high_quality_captions = process_images_in_parallel(
    image_paths_obj_detected_guided,
    system_prompt=system_prompt,
    user_prompt=user_prompt,
    model="gpt-4o-mini",
    few_shot_prompt=None,
    detail=IMAGE_QUALITY,
    max_workers=5,
)

In this task, given the simple nature of the images we use, the location of the objects does not add any significant information for the VLM. However, Object Detection Guided Prompting can be a powerful tool for more complex tasks, such as Document Understanding, where layout information can be effectively provided to the VLM through object detection for further processing. Additionally, Semantic Segmentation can be employed as a method to guide prompting by providing segmentation masks to the VLM.

VLMs are a powerful tool in the arsenal of AI engineers and scientists for solving a variety of problems that require a combination of vision and text skills. In this article, I explore prompting strategies in the context of VLMs to effectively use these models for tasks such as image captioning. This is by no means an exhaustive or comprehensive list of prompting strategies. One thing that has become increasingly clear with the advancements in GenAI is the limitless potential for creative and innovative approaches to prompt and guide LLMs and VLMs in solving tasks.

[1] J. Chen, H. Guo, K. Yi, B. Li and M. Elhoseiny, “VisualGPT: Data-efficient Adaptation of Pretrained Language Models for Image Captioning,” 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 2022, pp. 18009–18019, doi: 10.1109/CVPR52688.2022.01750.

[2] Luo, Z., Xi, Y., Zhang, R., & Ma, J. (2022). A Frustratingly Simple Approach for End-to-End Image Captioning.

[3] Jean-Baptiste Alayrac, Jeff Donahue, Pauline Luc, Antoine Miech, Iain Barr, Yana Hasson, Karel Lenc, Arthur Mensch, Katie Millican, Malcolm Reynolds, Roman Ring, Eliza Rutherford, Serkan Cabi, Tengda Han, Zhitao Gong, Sina Samangooei, Marianne Monteiro, Jacob Menick, Sebastian Borgeaud, Andrew Brock, Aida Nematzadeh, Sahand Sharifzadeh, Mikolaj Binkowski, Ricardo Barreira, Oriol Vinyals, Andrew Zisserman, and Karen Simonyan. 2022. Flamingo: a visual language model for few-shot learning. In Proceedings of the 36th International Conference on Neural Information Processing Systems (NIPS '22). Curran Associates Inc., Red Hook, NY, USA, Article 1723, 23716–23736.

[4] https://huggingface.co/blog/vision_language_pretraining

[5] Piyush Sharma, Nan Ding, Sebastian Goodman, and Radu Soricut. 2018. Conceptual Captions: A Cleaned, Hypernymed, Image Alt-text Dataset For Automatic Image Captioning. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 2556–2565, Melbourne, Australia. Association for Computational Linguistics.

[6] https://platform.openai.com/docs/guides/vision

[7] Chin-Yew Lin. 2004. ROUGE: A Package for Automatic Evaluation of Summaries. In Text Summarization Branches Out, pages 74–81, Barcelona, Spain. Association for Computational Linguistics.

[8] https://docs.ragas.io/en/stable/concepts/metrics/available_metrics/traditional/#rouge-score

[9] Wei, J., Wang, X., Schuurmans, D., Bosma, M., Xia, F., Chi, E., … & Zhou, D. (2022). Chain-of-thought prompting elicits reasoning in large language models. Advances in Neural Information Processing Systems, 35, 24824–24837.

[11] Matthias Minderer, Alexey Gritsenko, Austin Stone, Maxim Neumann, Dirk Weissenborn, Alexey Dosovitskiy, Aravindh Mahendran, Anurag Arnab, Mostafa Dehghani, Zhuoran Shen, Xiao Wang, Xiaohua Zhai, Thomas Kipf, and Neil Houlsby. 2022. Simple Open-Vocabulary Object Detection. In Computer Vision – ECCV 2022: 17th European Conference, Tel Aviv, Israel, October 23–27, 2022, Proceedings, Part X. Springer-Verlag, Berlin, Heidelberg, 728–755. https://doi.org/10.1007/978-3-031-20080-9_42

[13] https://learn.microsoft.com/en-us/azure/ai-services/openai/concepts/gpt-4-v-prompt-engineering