In the age of data-driven hiring, recruiters are overwhelmed, not by a lack of talent but by an abundance of it. What if we could automatically identify the top candidates for a job using natural language understanding and machine learning? That's exactly what this project set out to explore.

This article outlines how I built an intelligent ranking system that scores candidates based on how well they match a job title, and even dynamically re-ranks them when preferences change.

The client for this project requested an automated system to predict how well a candidate fits a specific role based on their available information. The system should:

- Automatically rank candidates by job fit.

- Dynamically re-rank candidates when one is “starred” by a user.

The project was organized into several Jupyter notebooks, each focusing on different techniques:

├── EDA_Embeddings.ipynb # Data cleaning, EDA, and vectorization

├── Learn_to_rank.ipynb # PyTorch RankNet model for candidate ranking

├── starred_candidates.ipynb # Reranking based on starred candidates

├── HF_qwen/Llama/Gemma.ipynb # LLM-based ranking with different Hugging Face models

├── HF_gemma_finetuning.ipynb # Improving LLMs with instruction tuning and fine-tuning

├── API_groq.ipynb # Fast candidate ranking using Groq-hosted LLMs

- Editor Used: Google Colab

- Python Version: 3.11.12

- Python Packages: pandas, numpy, matplotlib, seaborn, warnings, logging, requests, sys, regex, plotly, fasttext, sklearn, wordcloud, sentence_transformers, gensim, tensorflow, torch, torchview, transformers, huggingface_hub, random, peft, trl, groq

The dataset was anonymized to protect candidate privacy and included:

- id: Candidate ID

- title: Job title (text)

- location: Country

- screening_score: A numeric score from 0–100

While some records were removed due to missing or invalid job titles, the remaining data offered rich ground for modeling and exploration.

We started with some visual insights:

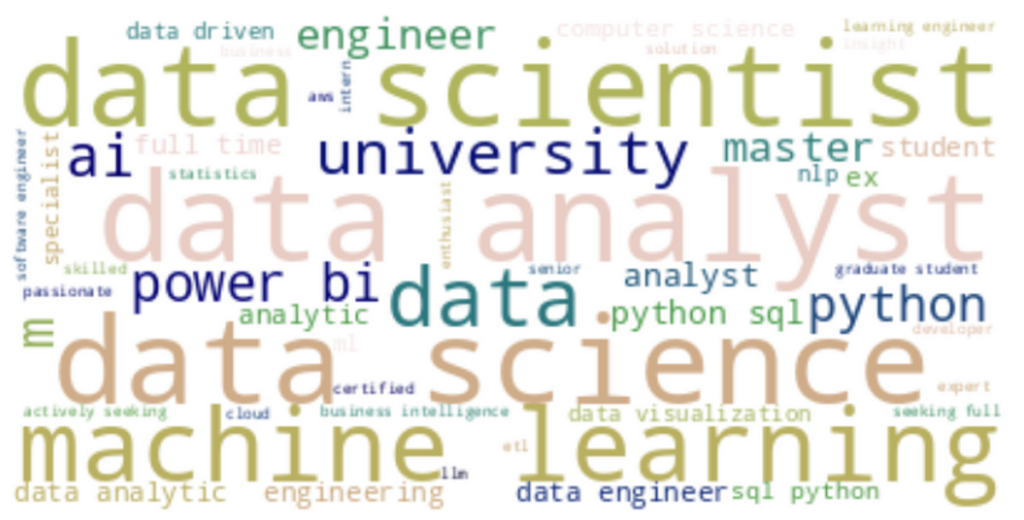

- Word clouds revealed common roles like data scientist and machine learning engineer.

- Boxplots and choropleth maps showed how screening scores varied by location, helping identify talent hotspots.

Embedding Models: Comparing Candidates to Job Titles

To compare job titles semantically, we tested both word-level representations (TF-IDF, Word2Vec, GloVe, FastText) and sentence embeddings (Sentence-BERT). The task: compute cosine similarity between a candidate’s job title and a target title (or another candidate).

Takeaway:

While word embeddings work, they treat words in isolation. When comparing the cosine similarity of averaged word embeddings, titles that share similar words are given a higher score regardless of context. Sentence-BERT, on the other hand, captures the full meaning of phrases, making it significantly better suited to this task with minimal preprocessing.
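To make the "words in isolation" point concrete, here is a minimal sketch of cosine similarity over averaged word vectors. The three-dimensional vectors are invented for illustration and do not come from Word2Vec, GloVe, or FastText; the helper names are hypothetical as well.

```python
import math

# Toy 3-dimensional word vectors, invented purely for illustration.
TOY_VECTORS = {
    "data": [0.9, 0.1, 0.0],
    "scientist": [0.7, 0.3, 0.1],
    "science": [0.8, 0.2, 0.1],
    "teacher": [0.1, 0.2, 0.9],
}


def average_vector(title):
    """Average the vectors of the known words in a title (bag-of-words style)."""
    vectors = [TOY_VECTORS[w] for w in title.lower().split() if w in TOY_VECTORS]
    if not vectors:
        raise ValueError(f"no known words in title: {title!r}")
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


target = average_vector("data scientist")
# Word order is lost when averaging, so a shuffled title looks identical.
same_words = cosine_similarity(target, average_vector("scientist data"))
# A title that shares one related word still scores fairly high.
different = cosine_similarity(target, average_vector("science teacher"))
print(round(same_words, 3), round(different, 3))
```

Because averaging discards word order and context, any title built from the same words collapses to the same vector. A sentence encoder avoids this by embedding the whole phrase at once.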

Learn to Rank with PyTorch + RankNet

To move beyond one-off comparisons, we built a learn-to-rank model using:

- Sentence-BERT for embeddings

- RankNet for training on candidate pairs

This model outputs a probability that one candidate should rank lower than another. Candidates with a score near 0.5 are likely a good match to the target; those near 1.0 are less relevant.

🧪 Strengths and Weaknesses:

- RankNet excels at filtering out poor fits.

- It struggles with fine-grained ranking among closely matched candidates, resulting in multiple candidates with identical RankNet scores of 0.50.
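The clustering at 0.50 follows from how RankNet turns two scores into a probability. The sketch below uses the standard RankNet formulation (a sigmoid of the score difference, trained with binary cross-entropy). It is not the notebook's PyTorch model, and the function names and the direction convention are assumptions made for illustration.

```python
import math


def pairwise_probability(score_i, score_j):
    """Standard RankNet link: P(i ranked above j) = sigmoid(s_i - s_j)."""
    return 1.0 / (1.0 + math.exp(-(score_i - score_j)))


def ranknet_loss(score_i, score_j, label):
    """Binary cross-entropy on the pairwise probability.

    label is 1.0 if i should rank above j, 0.0 if below, 0.5 for a tie.
    """
    p = pairwise_probability(score_i, score_j)
    eps = 1e-12  # guards against log(0)
    return -(label * math.log(p + eps) + (1.0 - label) * math.log(1.0 - p + eps))


# Two items with the same underlying score are indistinguishable: P = 0.5.
tied = pairwise_probability(2.0, 2.0)
# A large score gap pushes the probability toward 1.
clear = pairwise_probability(3.0, -1.0)
print(tied, round(clear, 3))
```

When the network assigns nearly equal scores to several strong candidates, every pairwise probability among them sits at about 0.5, which is why the model separates good from poor fits well but cannot order the good ones.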

Personalized Re-Ranking: Starred Candidates

Sometimes recruiters “star” a few standout profiles. We built a reranking system where the user enters:

- Up to 5 starred candidate IDs (optional)

We average the embeddings of the starred candidates and compute cosine similarity to all others. This allows for dynamic personalization of rankings without retraining.
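The averaging step can be sketched in a few lines. This is a minimal illustration under stated assumptions: the two-dimensional embeddings are made up, `rerank_by_starred` is a hypothetical helper name, and starred candidates are left out of the returned ranking (one reading of "all others").

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def mean_vector(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    count = len(vectors)
    return [sum(component) / count for component in zip(*vectors)]


def rerank_by_starred(embeddings, starred_ids, max_starred=5):
    """Rank non-starred candidates by similarity to the starred centroid.

    embeddings maps candidate id -> embedding vector.
    Returns a list of (candidate_id, similarity), most similar first.
    """
    if not 1 <= len(starred_ids) <= max_starred:
        raise ValueError(f"expected between 1 and {max_starred} starred ids")
    starred = set(starred_ids)
    centroid = mean_vector([embeddings[cid] for cid in starred_ids])
    scored = [
        (cid, cosine_similarity(vec, centroid))
        for cid, vec in embeddings.items()
        if cid not in starred
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Toy 2-dimensional embeddings, invented for illustration.
toy_embeddings = {1: [1.0, 0.0], 2: [0.9, 0.1], 3: [0.0, 1.0], 4: [0.8, 0.3]}
ranking = rerank_by_starred(toy_embeddings, starred_ids=[1, 2])
print(ranking)
```

Since only the centroid changes when a new candidate is starred, re-ranking costs one pass of similarity computations and needs no model update.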

To push things further, we integrated LLMs via Hugging Face, using a prompt-based search for the top 5 candidates with user input like:

“You are an AI hiring assistant. Rank the top 5 job titles based on their similarity to the search term in descending order.”

First we evaluated three popular LLMs: Qwen, Llama (Meta), and Gemma (Google):

- Qwen3-0.6B: We were able to get a meaningful output in JSON format. With additional post-processing, the output could be cleaned up into a more concise and comprehensible format. Additional prompt tuning would be needed to add the candidate ID to the output.

- Llama-3.2-1B-Instruct: The output was clean and concise, showing the top candidates by rank and title with a similarity score, but it was unable to show the job id. Additional prompt tuning would be needed to add the candidate ID to the output. The output also includes extra reasoning, which could be cleaned up afterwards.

- gemma-3-1b-it: At first glance the output appears to give the most desirable result of the three LLMs, showing a top 5 list by rank, id, and job title. However, a quick cross-reference with the source data showed that the job titles did not match the ids. Additional prompt tuning is needed to get an acceptable response, as well as output formatting to remove the reasoning after the top 5 list.
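The cross-reference that exposed the Gemma mismatch can be automated once the model output has been parsed into (id, title) pairs. The sketch below is illustrative only: `find_mismatches` is a hypothetical helper and the sample titles are invented.

```python
def find_mismatches(llm_rows, source_titles):
    """Return rows whose id is unknown or whose title differs from the source.

    llm_rows is a list of (job_id, title) pairs parsed from the model output.
    source_titles maps job_id -> title from the original dataset.
    """
    mismatches = []
    for job_id, title in llm_rows:
        expected = source_titles.get(job_id)
        if expected is None or expected.strip().lower() != title.strip().lower():
            mismatches.append((job_id, title, expected))
    return mismatches


# Invented sample data for illustration.
source_titles = {431: "aspiring data science professional", 17: "data analyst"}
llm_rows = [
    (431, "Aspiring data science professional"),  # matches, ignoring case
    (17, "machine learning engineer"),            # id exists, title is wrong
    (999, "data analyst"),                        # id does not exist
]
problems = find_mismatches(llm_rows, source_titles)
print(problems)
```

A check like this makes it cheap to reject a response and retry, instead of relying on a manual spot check.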

Takeaways:

The LLMs used were at the smaller end, around 1B parameters or fewer, due to the limits of the free Colab environment. Smaller models generally show weaker understanding and, as a result, lower accuracy than models with more parameters.

- All three LLMs required multiple attempts with different prompts to get an acceptable output.

- All three LLMs require some cleanup of the output.

- Gemma provided the most acceptable response format, although inaccurate, and was chosen for further refinement.

Instruction-tuning

To address the earlier mismatch of id and job title, the two fields were combined into one string called job_pairs. The prompt was modified to include the job_pairs field so the model keeps each id and title tied together.

# Create a combined string of job ID and job title pairs, one pair per line

job_pairs = "\n".join([f"{job_id}: {job_title}" for job_id, job_title in zip(job_ids_short, job_titles_short)])

prompt = f"""

Return a list of the top 5 job candidates with full job title and job id from a job titles list ranked by their similarity to the search term in descending order. Do not repeat the prompt. Only show the answer. Do not reason or explain.

**Search term**

{target_title}

**Job candidates (ID: Title)**

{job_pairs}

Show answer in following format:

Rank Job ID Job Title

1 - [id]: [title]

Example of answer:

Rank Job ID Job Title

1 - 431: aspiring data science professional focused on data analysis machine learning and data visualization actively seeking opportunities.

2 - ...

...

Answer: Top 5 candidates are:

"""

# generator is assumed to be a transformers text-generation pipeline created earlier in the notebook

output = generator(prompt, max_new_tokens=200, num_return_sequences=1)

# The pipeline returns a list of dicts; the text is under the 'generated_text' key

print(output[0]['generated_text'])

The output was modified to show only the text following “Answer:”. The results now show a top 5 list with accurate ids and titles. There is still residual text showing a rank without a job id that would need to be cleaned up afterwards.
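One way to do this cleanup is to cut the text at the last “Answer:” marker and keep only the lines that carry a rank, an id, and a title. This is a minimal sketch, not the notebook's code: the regular expression assumes the “rank - id: title” layout requested in the prompt, and the sample text is invented.

```python
import re

# Matches lines such as "1 - 431: some job title".
LINE_PATTERN = re.compile(r"^\s*(\d+)\s*-\s*(\d+)\s*:\s*(.+?)\s*$")


def extract_ranked_candidates(generated_text, marker="Answer:"):
    """Return (rank, job_id, title) tuples found after the last marker.

    Lines that do not carry both a rank and an id (for example "3 -") are dropped.
    """
    _, found, tail = generated_text.rpartition(marker)
    text = tail if found else generated_text
    rows = []
    for line in text.splitlines():
        match = LINE_PATTERN.match(line)
        if match:
            rank, job_id, title = match.groups()
            rows.append((int(rank), int(job_id), title))
    return rows


# Invented sample output that echoes part of the prompt before answering.
sample = (
    "Example of answer:\n"
    "1 - 999: placeholder title\n"
    "Answer: Top 5 candidates are:\n"
    "1 - 431: aspiring data science professional\n"
    "2 - 17: data analyst\n"
    "3 -\n"
    "Thanks!"
)
rows = extract_ranked_candidates(sample)
print(rows)
```

Splitting on the last occurrence of the marker matters because the model may echo the prompt, which itself contains an example answer.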

Fine-tuning with QLoRA

QLoRA is a fine-tuning technique that reduces memory usage by combining quantization (compressing model weights to lower precision) with Low-Rank Adaptation (LoRA), which introduces small, trainable matrices. This enables efficient fine-tuning of large language models on less powerful hardware while largely maintaining performance.
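A little arithmetic shows where the savings come from. The sketch below counts parameters for a single weight matrix with hypothetical dimensions (2048 x 2048, rank 8). These numbers are not taken from Gemma or from the notebook's configuration, and the memory estimate ignores the small overhead of quantization constants.

```python
def lora_trainable_params(d_out, d_in, rank):
    """LoRA learns B (d_out x rank) and A (rank x d_in) instead of a full update."""
    return rank * (d_out + d_in)


def weight_memory_bytes(num_params, bits_per_param):
    """Approximate storage for the weights alone at a given precision."""
    return num_params * bits_per_param // 8


# Hypothetical layer size and rank, chosen only for illustration.
d_out = d_in = 2048
rank = 8

full_params = d_out * d_in
lora_params = lora_trainable_params(d_out, d_in, rank)

print(f"full matrix parameters: {full_params:,}")
print(f"LoRA trainable parameters: {lora_params:,}")
print(f"trainable fraction: {lora_params / full_params:.4%}")
print(f"frozen weights at 16-bit: {weight_memory_bytes(full_params, 16):,} bytes")
print(f"frozen weights at 4-bit: {weight_memory_bytes(full_params, 4):,} bytes")
```

In this toy case the adapter trains under 1% of the layer's parameters, while 4-bit storage cuts the frozen weights to a quarter of their 16-bit size.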

After fine-tuning, the response still repeated the prompt instructions, but once the output was trimmed to show only the text following “Answer:” we achieved the desired result.

Takeaways:

Both instruction-tuning and fine-tuning with QLoRA improved the output. However, both methods are time-consuming, and the results would need to be continually monitored to confirm they hold for subsequent queries. The quality of the output may improve if resources allow a higher-parameter model.

When compute resources are limited, chatbot APIs provide a way to access higher-parameter LLMs. Through the Groq API, “llama-3.1-8b-instant” and “deepseek-r1-distill-llama-70b” were evaluated.

Both LLMs delivered nearly instantaneous results, taking only a few seconds to run and return the results.

On the first attempt “deepseek-r1-distill-llama-70b” gave the desired result, while “llama-3.1-8b-instant” gave promising results but the rank numbers did not seem logical.
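For readers who want to try the hosted route, here is a minimal sketch of such a call with the Groq Python SDK. The helper names, the system message, and the GROQ_API_KEY environment variable are assumptions for illustration; the notebook's actual prompt and settings are not shown in this article, and model availability on Groq may change over time.

```python
import os


def build_messages(target_title, job_pairs):
    """Build chat messages asking for the top 5 matches to a search term."""
    system = "You are an AI hiring assistant. Only show the answer. Do not explain."
    user = (
        "Rank the top 5 job candidates by similarity to the search term, "
        "in descending order. Use the format 'rank - id: title'.\n\n"
        f"Search term: {target_title}\n\n"
        f"Job candidates (ID: Title):\n{job_pairs}"
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]


def rank_with_groq(target_title, job_pairs, model="llama-3.1-8b-instant"):
    """Send the ranking prompt to a Groq-hosted model and return the reply text."""
    # Imported lazily so the prompt builder works without the SDK installed.
    from groq import Groq

    client = Groq(api_key=os.environ["GROQ_API_KEY"])
    response = client.chat.completions.create(
        model=model,
        messages=build_messages(target_title, job_pairs),
        temperature=0,  # keep the ranking as repeatable as possible
    )
    return response.choices[0].message.content


# Building the messages needs no network access or API key.
messages = build_messages("data scientist", "1: data analyst\n2: teacher")
print(messages[1]["content"])
```

Calling `rank_with_groq("data scientist", job_pairs)` would then return the model's text, which still benefits from the same id and title checks applied to the local models.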

This project demonstrates the power of combining classical machine learning, deep learning, and large language models (LLMs) to solve real-world problems like candidate ranking. Sentence embeddings alone go a long way, but with models like RankNet and local LLMs, we can personalize and scale the experience for recruiters. With local LLMs, these advantages come with real costs: high memory usage, slower inference speeds (unless well-optimized), and increased complexity in setup and maintenance. For teams with sufficient resources or strict privacy needs, local deployment is a valuable path, but it requires a deeper technical investment.

LLMs accessed through APIs (like Groq-hosted models) proved especially compelling. They drastically reduce infrastructure overhead while delivering near-instant inference and high-quality results, provided the prompts are well-crafted. This makes them ideal for rapid prototyping, scalable deployment, or situations where compute resources are limited. However, API usage may raise concerns around data privacy, cost, or rate limits, especially for enterprise-scale solutions. Still, for many use cases, API-based LLMs strike a strong balance between ease of use, speed, and sophistication.