At the heart of every successful AI Agent lies one essential skill: prompting (or “prompt engineering”). It’s the method of instructing LLMs to perform tasks by carefully designing the input text.

Prompt engineering is the evolution of the inputs for the first text-to-text NLP models (2018). At the time, developers often focused more on the modeling side and feature engineering. After the creation of large GPT models (2022), we all started using mostly pre-trained tools, so the focus has shifted to input formatting. Hence, the “prompt engineering” discipline was born, and now (2025) it has matured into a blend of art and science, as NLP is blurring the line between code and prompt.

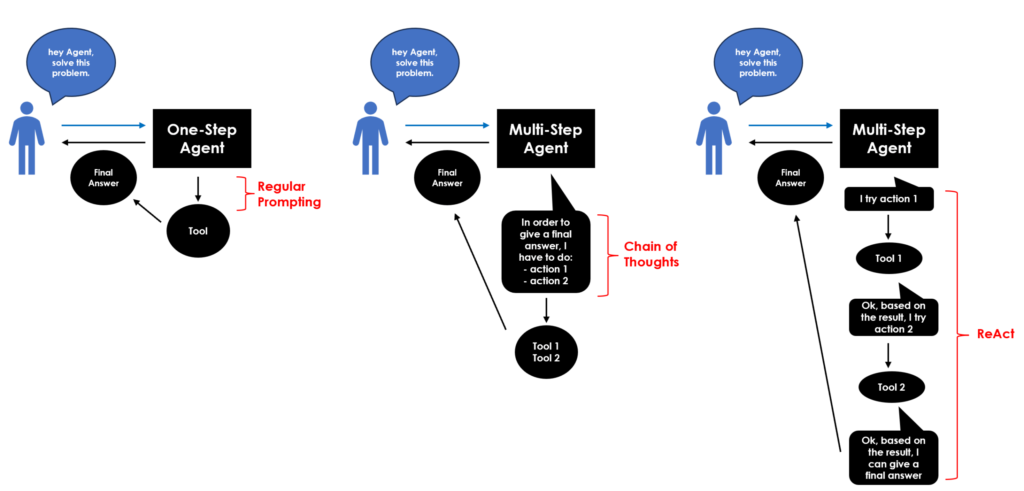

Different types of prompting techniques create different types of Agents. Each method enhances a specific skill: logic, planning, memory, accuracy, and Tool integration. Let’s see all of them with a very simple example.

```python
## setup
import ollama

llm = "qwen2.5"

## question
q = "What is 30 multiplied by 10?"
```

Main techniques

1) “Regular” prompting – just ask a question and get a straightforward answer. It’s also called “Zero-Shot” prompting, specifically when the model is given a task without any prior examples of how to solve it. This basic technique is designed for One-Step Agents that execute the task without intermediate reasoning (typical of early models).

```python
response = ollama.chat(model=llm, messages=[
    {'role':'user', 'content':q}
])
print(response['message']['content'])
```

2) ReAct (Reason+Act) – a combination of reasoning and acting. The model not only thinks through a problem but also takes actions based on its reasoning. This makes it more interactive, as the model alternates between reasoning steps and actions, refining its approach iteratively. Basically, it’s a loop of thought-action-observation. It’s used for more complicated tasks, like searching the web and making decisions based on the findings, and is typically designed for Multi-Step Agents that perform a series of reasoning steps and actions to arrive at a final result. These Agents can break down complex tasks into smaller, more manageable parts that progressively build upon one another.

Personally, I really like ReAct Agents, as I find them more similar to humans because they “f*ck around and find out” just like us.

```python
prompt = '''
To solve the task, you must plan forward to proceed in a series of steps, in a cycle of 'Thought:', 'Action:', and 'Observation:' sequences.
At each step, in the 'Thought:' sequence, you should first explain your reasoning towards solving the task, then the tools that you want to use.
Then in the 'Action:' sequence, you should use one of your tools.
During each intermediate step, you can use the 'Observation:' field to save whatever important information you will use as input for the next step.
'''

response = ollama.chat(model=llm, messages=[
    {'role':'user', 'content':q+" "+prompt}
])
print(response['message']['content'])
```
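The call above only sends the ReAct instructions: no tools are defined and nothing executes the “Action:” step, so the model can only describe what it would do. On the Agent side, the thought-action-observation loop can be sketched as below. This is a minimal offline sketch, not a library API: the tool syntax `tool_name[arg1, arg2]`, the `multiply` tool, `react_loop`, and `scripted_model` (a canned stand-in for the LLM) are all hypothetical.

```python
import re

# Hypothetical tool registry: names and signatures are illustrative only.
TOOLS = {
    "multiply": lambda a, b: a * b,
}

# Assumed action syntax: "Action: tool_name[arg1, arg2]"
ACTION_RE = re.compile(r"Action:\s*(\w+)\[(.*?)\]")

def react_loop(model_step, question, max_steps=5):
    """Run a Thought -> Action -> Observation loop.

    model_step(transcript) stands in for an LLM call and returns the next
    chunk of text: a 'Thought:'/'Action:' pair or a 'Final Answer:'.
    """
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        step = model_step(transcript)
        transcript += step + "\n"
        if "Final Answer:" in step:
            return step.split("Final Answer:", 1)[1].strip(), transcript
        match = ACTION_RE.search(step)
        if match:
            tool, raw_args = match.group(1), match.group(2)
            # Assumption: every tool argument is a number
            args = [float(x) for x in raw_args.split(",")]
            observation = TOOLS[tool](*args)
            # Feed the tool result back so the next step can use it
            transcript += f"Observation: {observation}\n"
    return None, transcript  # no final answer within max_steps

def scripted_model(transcript):
    # Offline stand-in for ollama.chat: returns canned steps.
    if "Observation:" not in transcript:
        return "Thought: I should multiply the numbers.\nAction: multiply[30, 10]"
    return "Thought: The tool returned the product.\nFinal Answer: 300"

answer, log = react_loop(scripted_model, "What is 30 multiplied by 10?")
print(answer)  # 300
```

In a real Agent, `model_step` would wrap `ollama.chat`, sending the growing transcript each turn; `max_steps` guards against a model that never produces a final answer.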

3) Chain-of-Thought (CoT) – a reasoning pattern that involves generating the process to reach a conclusion. The model is pushed to “think out loud” by explicitly laying out the logical steps that lead to the final answer. Basically, it’s a plan without feedback. CoT is the most used technique for advanced tasks, like solving a math problem that might need step-by-step reasoning, and is typically designed for Multi-Step Agents.

```python
prompt = '''Let's think step by step.'''

response = ollama.chat(model=llm, messages=[
    {'role':'user', 'content':q+" "+prompt}
])
print(response['message']['content'])
```
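Because a CoT reply mixes intermediate steps with the final answer, an Agent that needs a machine-readable result has to pull the answer out of the text. A minimal heuristic sketch, assuming the final answer is the last number in the reply; `extract_final_number` is a hypothetical helper:

```python
import re

def extract_final_number(cot_text):
    """Return the last number mentioned in a chain-of-thought reply, or None.

    Heuristic assumption: the final answer is the last number in the text.
    Thousands separators like "1,200" are accepted.
    """
    numbers = re.findall(r"-?\d+(?:,\d{3})*(?:\.\d+)?", cot_text)
    if not numbers:
        return None
    return float(numbers[-1].replace(",", ""))

example = "Step 1: 30 x 10 means adding 30 ten times.\nStep 2: 30 * 10 = 300.\nSo the answer is 300."
print(extract_final_number(example))  # 300.0
```

For the call above, that would be `extract_final_number(response['message']['content'])`. Asking the model to end with a fixed marker such as “Answer:” would make the parsing less fragile than the last-number heuristic.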

CoT extensions

Several other prompting approaches have been derived from Chain-of-Thought.

4) Reflexion prompting, which adds an iterative self-check or self-correction phase on top of the initial CoT reasoning, where the model reviews and critiques its own outputs (spotting errors, identifying gaps, suggesting improvements).

```python
cot_answer = response['message']['content']

response = ollama.chat(model=llm, messages=[
    {'role':'user', 'content': f'''Here was your original answer:\n\n{cot_answer}\n\n
Now reflect on whether it was correct or if it was the best approach.
If not, correct your reasoning and answer.'''}
])
print(response['message']['content'])
```
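The code above performs a single reflection pass. The iterative part of Reflexion can be sketched as a loop that alternates critique and revision until the critic is satisfied or a round limit is reached. A minimal sketch, assuming a hypothetical `chat(prompt) -> str` wrapper around the LLM and a critic instructed to reply `OK` when no changes are needed; `fake_chat` is an offline stand-in so the loop runs without a model:

```python
def reflexion_loop(chat, task, max_rounds=3):
    """Draft -> critique -> revise until the critic approves or rounds run out.

    chat(prompt) -> str is a hypothetical wrapper around an LLM call.
    Assumption: the critic replies with just 'OK' when the answer is fine.
    """
    answer = chat(f"{task}\nLet's think step by step.")
    history = [answer]
    for _ in range(max_rounds):
        critique = chat(
            f"Task: {task}\nAnswer: {answer}\n"
            "Critique this answer. Reply with just OK if it is correct."
        )
        if critique.strip().upper() == "OK":
            break  # critic is satisfied, stop iterating
        answer = chat(
            f"Task: {task}\nPrevious answer: {answer}\nCritique: {critique}\n"
            "Write an improved answer."
        )
        history.append(answer)
    return answer, history

def fake_chat(prompt):
    # Offline stand-in for an LLM: the first draft is wrong on purpose.
    if "Critique this answer" in prompt:
        return "30 * 10 is not 3000." if "= 3000" in prompt else "OK"
    if "Write an improved answer" in prompt:
        return "30 * 10 = 300"
    return "30 * 10 = 3000"

answer, history = reflexion_loop(fake_chat, "What is 30 multiplied by 10?")
print(answer)  # 30 * 10 = 300
```

With Ollama, `chat` could be `lambda p: ollama.chat(model=llm, messages=[{'role':'user', 'content':p}])['message']['content']`; `max_rounds` caps the cost when the critic never approves.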

5) Tree-of-Thoughts (ToT), which generalizes CoT into a tree, exploring multiple reasoning chains simultaneously.

```python
num_branches = 3

prompt = f'''
You will consider multiple reasoning paths (thought branches). For each path, write your reasoning and final answer.
After exploring {num_branches} different thoughts, pick the best final answer and explain why.
'''

response = ollama.chat(model=llm, messages=[
    {'role':'user', 'content': f"Task: {q} \n{prompt}"}
])
print(response['message']['content'])
```
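The prompt above asks the model to simulate all branches inside a single response. A more literal Tree-of-Thoughts explores the tree programmatically: expand each thought into candidates, score them, and keep only the most promising ones (a beam) at each depth. A minimal sketch, where `tree_of_thoughts` is hypothetical and the toy `expand` and `score` lambdas stand in for what would be LLM calls in practice:

```python
def tree_of_thoughts(root, expand, score, depth=2, beam_width=2):
    """Breadth-first Tree-of-Thoughts search with a beam.

    expand(state) -> list of child states (in practice: ask the LLM for next thoughts)
    score(state)  -> float, higher is better (in practice: ask the LLM to rate a thought)
    Returns the best-scoring state seen anywhere in the tree.
    """
    frontier = [root]
    best = root
    for _ in range(depth):
        children = [child for state in frontier for child in expand(state)]
        if not children:
            break  # nothing left to explore
        children.sort(key=score, reverse=True)
        frontier = children[:beam_width]  # prune to the most promising branches
        if score(frontier[0]) > score(best):
            best = frontier[0]
    return best

# Toy problem (illustrative): starting from 30, reach the value closest to 30 * 10
# using the moves "+10" and "*10".
result = tree_of_thoughts(
    root=30,
    expand=lambda s: [s + 10, s * 10],
    score=lambda s: -abs(300 - s),
)
print(result)  # 300
```

`beam_width` trades exploration for cost: each extra branch kept means more LLM calls at the next depth.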

6) Graph-of-Thoughts (GoT), which generalizes CoT into a graph, also considering interconnected branches.

```python
class GoT:
    def __init__(self, question):
        self.question = question
        self.nodes = {}  # node_id: text
        self.edges = []  # (from_node, to_node, relation)
        self.counter = 1

    def add_node(self, text):
        node_id = f"Thought{self.counter}"
        self.nodes[node_id] = text
        self.counter += 1
        return node_id

    def add_edge(self, from_node, to_node, relation):
        self.edges.append((from_node, to_node, relation))

    def show(self):
        print("\n--- Current Thoughts ---")
        for node_id, text in self.nodes.items():
            print(f"{node_id}: {text}\n")
        print("--- Connections ---")
        for f, t, r in self.edges:
            print(f"{f} --[{r}]--> {t}")
        print("\n")

    def expand_thought(self, node_id):
        prompt = f'''
You are reasoning about the task: {self.question}
Here is a previous thought node ({node_id}): """{self.nodes[node_id]}"""
Please provide a refinement, an alternative viewpoint, or a related thought that connects to this node.
Label your new thought clearly, and explain its relation to the previous one.
'''
        response = ollama.chat(model=llm, messages=[{'role':'user', 'content':prompt}])
        return response['message']['content']

## Start Graph
g = GoT(q)

## Get initial thought
response = ollama.chat(model=llm, messages=[
    {'role':'user', 'content':q}
])
n1 = g.add_node(response['message']['content'])

## Expand initial thought with some refinements
refinements = 1
for _ in range(refinements):
    expansion = g.expand_thought(n1)
    n_new = g.add_node(expansion)
    g.add_edge(n1, n_new, "expansion")
g.show()

## Final Answer
prompt = f'''
Here are the reasoning thoughts so far:
{chr(10).join([f"{k}: {v}" for k,v in g.nodes.items()])}
Based on these, select the best reasoning and final answer for the task: {q}
Explain your choice.
'''

## send the assembled prompt (not just q) so the model sees all the thoughts
response = ollama.chat(model=llm, messages=[
    {'role':'user', 'content':prompt}
])
print(response['message']['content'])
```
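In the GoT class above, each new thought links only to the node it expands, so the result is still shaped like a tree. What sets a graph apart is that one thought can build on several others, for example by merging two branches. A minimal sketch of that aggregation step using the standard library’s `graphlib` (Python 3.9+), with a hand-written, hypothetical set of thoughts in place of LLM output:

```python
from graphlib import TopologicalSorter

# Hypothetical thought graph: each key lists the thoughts it builds on.
# Unlike a tree, "Thought4" merges two parents (an aggregation step).
dependencies = {
    "Thought2": {"Thought1"},
    "Thought3": {"Thought1"},
    "Thought4": {"Thought2", "Thought3"},
}
thoughts = {
    "Thought1": "30 * 10 is 30 repeated ten times.",
    "Thought2": "Adding 30 ten times gives 300.",
    "Thought3": "Multiplying by 10 appends a zero: 300.",
    "Thought4": "Both methods agree on 300.",
}

# A topological order lists every thought after all of the thoughts it builds on,
# which is a natural order for assembling the final prompt.
order = list(TopologicalSorter(dependencies).static_order())
context = "\n".join(f"{node}: {thoughts[node]}" for node in order)
print(context)
```

The relative order of `Thought2` and `Thought3` is not fixed, since neither depends on the other; only the dependency constraints are guaranteed.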

7) Program-of-Thoughts (PoT), which focuses on programming: the reasoning happens through executable code snippets.

```python
import re
import builtins

def extract_python_code(text):
    ## Grab the first ```python ... ``` block from the model's reply
    match = re.search(r"```python(.*?)```", text, re.DOTALL)
    if match:
        return match.group(1).strip()
    return None

def sandbox_exec(code):
    ## Create a minimal sandbox with safety limitations
    ## (exec() is never fully safe: do not run untrusted code in production)
    allowed_builtins = {'abs', 'min', 'max', 'pow', 'round'}
    ## exec() injects the full builtins if '__builtins__' is missing, so set it explicitly
    safe_globals = {'__builtins__': {k: getattr(builtins, k) for k in allowed_builtins}}
    safe_locals = {}
    exec(code, safe_globals, safe_locals)
    return safe_locals.get('result', None)

prompt = '''
Write a short Python program that calculates the answer and assigns it to a variable named 'result'.
Return only the code enclosed in triple backticks with 'python' (```python ... ```).
'''

response = ollama.chat(model=llm, messages=[
    {'role':'user', 'content': f"Task: {q} \n{prompt}"}
])
print(response['message']['content'])

print(sandbox_exec(code=extract_python_code(text=response['message']['content'])))
```
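Even with restricted builtins, `sandbox_exec` still hands arbitrary Python to `exec()`. When the generated program is expected to be plain arithmetic, as in this example, a stricter alternative is to parse it with the `ast` module and evaluate only a whitelist of node types. A minimal sketch, assuming the model’s code consists only of `name = expression` lines; `run_arithmetic_program` is a hypothetical helper, not part of any library:

```python
import ast
import operator

# Whitelisted operators. Exponentiation is deliberately left out so a program
# like 10 ** 10 ** 10 cannot blow up memory or CPU.
_OPS = {
    ast.Add: operator.add, ast.Sub: operator.sub,
    ast.Mult: operator.mul, ast.Div: operator.truediv,
    ast.USub: operator.neg,
}

def _eval(node, env):
    if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
        return node.value
    if isinstance(node, ast.Name) and node.id in env:
        return env[node.id]  # only variables assigned earlier in the program
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_eval(node.left, env), _eval(node.right, env))
    if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_eval(node.operand, env))
    raise ValueError(f"Disallowed syntax: {ast.dump(node)}")

def run_arithmetic_program(code):
    """Evaluate simple 'name = expression' lines and return the variable 'result'."""
    env = {}
    for stmt in ast.parse(code).body:
        if not (isinstance(stmt, ast.Assign) and len(stmt.targets) == 1
                and isinstance(stmt.targets[0], ast.Name)):
            raise ValueError(f"Disallowed statement: {ast.dump(stmt)}")
        env[stmt.targets[0].id] = _eval(stmt.value, env)
    return env.get("result")

print(run_arithmetic_program("a = 30\nb = 10\nresult = a * b"))  # 300
```

It could be called as `run_arithmetic_program(extract_python_code(response['message']['content']))`; anything beyond basic arithmetic (imports, function calls, loops) raises a `ValueError` instead of being executed.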

Conclusion

This article has been a tutorial recapping the main prompting techniques for AI Agents. There’s no single “best” prompting technique, as it depends heavily on the task and the complexity of the reasoning needed.

For example, simple tasks like summarization and translation are easily handled with Zero-Shot/Regular prompting, while CoT works well for math and logic tasks. On the other hand, Agents with Tools are usually built in ReAct mode. Moreover, Reflexion is most appropriate when learning from mistakes or iterations improves results, as in gaming.

In terms of versatility for complex tasks, PoT is the real winner because it’s based entirely on code generation and execution. In fact, PoT Agents are getting closer to replacing humans in several office tasks.

I believe that, in the near future, prompting won’t just be about “what you say to the model”, but about orchestrating an interactive loop between human intent, machine reasoning, and external action.

Full code for this text: GitHub

I hope you enjoyed it! Feel free to contact me with questions and feedback, or just to share your interesting projects.

👉 Let’s Connect 👈

(All images are by the author unless otherwise noted)