In this blog, we’ll explore how a model processes and understands your words, a crucial aspect of every large language model and of natural language processing (NLP). This area of NLP is deep and evolving, with researchers continually working on more optimized methods. I aim to provide an intuitive understanding of these concepts, offering a high-level overview to make them approachable.

Let’s start with tokenization.

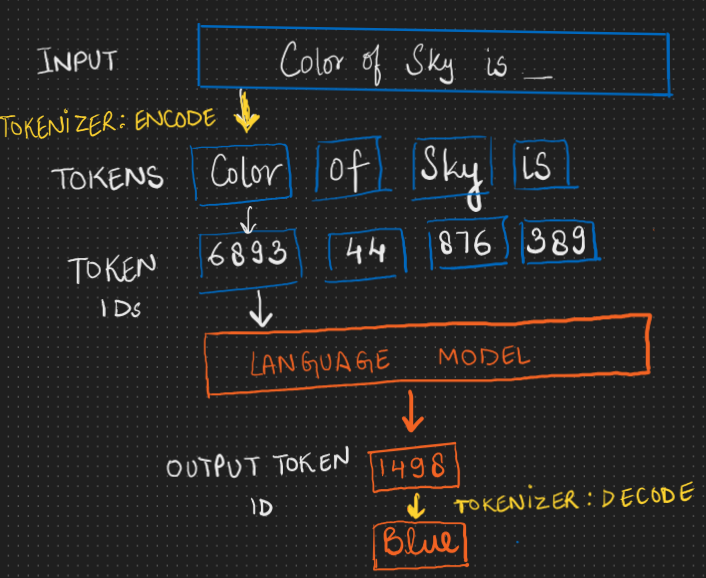

Since machines can’t directly understand words as we do, they rely on a process called tokenization to break down text into smaller, machine-readable units.

Tokenization is the process of breaking input text into smaller units called tokens (such as words, subwords, or characters). Each token is then assigned a numerical value from the vocabulary, known as a unique token ID.

When you send your query to an LLM (e.g., ChatGPT), the model doesn’t directly read your text. These models can only take numbers as inputs, so the text must first be converted into a sequence of numbers using a tokenizer and then fed to the model.

The model processes input as numerical values, which are the unique token IDs assigned during training. It generates output as a sequence of numerical values, which are then converted back into human-readable text.
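The round trip from text to token IDs and back can be sketched in a few lines. This is only a toy illustration: the vocabulary, the IDs, and the `encode` and `decode` helpers are invented for this example and do not correspond to any real model's tokenizer.

```python
# Toy vocabulary: every token the tokenizer knows, mapped to a unique ID.
# The tokens and IDs are invented for illustration.
vocab = {"how": 0, "do": 1, "models": 2, "read": 3, "text": 4, "?": 5}
id_to_token = {token_id: token for token, token_id in vocab.items()}

def encode(text):
    """Lowercase, split on whitespace, and look up each token's ID."""
    return [vocab[token] for token in text.lower().split()]

def decode(token_ids):
    """Map IDs back to tokens and join them into text."""
    return " ".join(id_to_token[i] for i in token_ids)

ids = encode("How do models read text ?")
print(ids)          # [0, 1, 2, 3, 4, 5]
print(decode(ids))  # how do models read text ?
```

The model only ever sees the list of integers; the mapping back to text happens outside the model.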

Think of tokenization as slicing a loaf of bread to make a sandwich. You can’t spread butter or add toppings to the whole loaf. Instead, you cut it into slices, process each slice (apply butter, cheese, etc.), and then reassemble them to make a sandwich.

Similarly, tokenization breaks text into smaller units (tokens), processes each one, and then combines them to form the final output.

One important thing to keep in mind is that tokenizers have vocabularies, which are lists of all the words or parts of words (called “tokens”) that the tokenizer can understand.

For example, a tokenizer might be trained to handle common words like “apple” or “dog.” However, if it hasn’t been trained on less common words, like “pikachu,” it may not know how to process them.

When the tokenizer encounters the word “pikachu,” it might not have it as a token in its vocabulary. Instead, it might break it into smaller parts or fail to process it entirely, leading to a tokenization failure. This situation is called out-of-vocabulary (OOV).

In short, a tokenizer’s ability to handle text depends on the words learned during training. If it encounters a word outside its vocabulary, it might struggle to process it properly.
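One common way to cope with an out-of-vocabulary word is to map it to a reserved "unknown" token instead of failing. The sketch below assumes a hypothetical `<unk>` placeholder and a made-up five-entry vocabulary; real tokenizers differ in how they name and use such a token.

```python
UNK = "<unk>"  # hypothetical placeholder for anything outside the vocabulary
vocab = {UNK: 0, "i": 1, "like": 2, "apple": 3, "dog": 4}

def encode_with_unk(text):
    """Return token IDs, falling back to the <unk> ID for unknown words."""
    return [vocab.get(word, vocab[UNK]) for word in text.lower().split()]

print(encode_with_unk("I like apple"))    # [1, 2, 3]
print(encode_with_unk("I like pikachu"))  # [1, 2, 0]
```

The second call does not crash, but the model receives the same ID for every unknown word, so the identity of “pikachu” is lost.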

1. Word Tokens

A word tokenizer breaks down a sentence or corpus into individual words. Each word is then assigned a unique token ID, which helps models understand and process the text.

Word tokenization’s main benefit is that it’s easy to understand and visualize. However, it has a few shortcomings:

Redundant Tokens: Words with small variations, like “apology”, “apologize”, and “apologetic”, each become a separate token. This makes the vocabulary large, resulting in less efficient training and inference.

New Words Issue: Word tokenizers can struggle with words that weren’t present in the original training data. For example, if a new word like “apologized” appears, the model might not understand it. Hence, the tokenizer would need to be retrained to incorporate this word.

For these reasons, language models usually don’t prefer this tokenization approach.
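Both shortcomings show up even in a tiny example. The sketch below builds a word-level vocabulary from an invented three-sentence corpus; `build_word_vocab` is a hypothetical helper written for this illustration, and splitting on whitespace is a simplifying assumption.

```python
def build_word_vocab(corpus):
    """Assign the next free ID to each new whitespace-separated word."""
    vocab = {}
    for sentence in corpus:
        for word in sentence.lower().split():
            vocab.setdefault(word, len(vocab))
    return vocab

corpus = ["an apology", "they apologize", "an apologetic note"]
vocab = build_word_vocab(corpus)

print(vocab)
# {'an': 0, 'apology': 1, 'they': 2, 'apologize': 3, 'apologetic': 4, 'note': 5}
print("apologized" in vocab)  # False
```

The three related words receive unrelated IDs (1, 3, and 4), and “apologized” has no entry at all until the vocabulary is rebuilt.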

2. Character Tokens

Character tokenization splits text into individual characters. The logic behind this tokenization is that a language has many different words but a fixed number of characters. This results in a very small vocabulary.

For example, English text can be covered by about 256 different characters (letters, numbers, and special characters, as in an 8-bit character set), whereas English has nearly 170,000 words in its vocabulary.

Key advantages of character tokenization:

Handles Unknown Words (OOV): It doesn’t run into issues with unknown words (words not seen during training). Instead, it represents such words by breaking them into characters.

Deals with Misspellings: Misspelled words aren’t marked as unknown. The model can still work with them using individual character tokens.

Simpler Vocabulary: A smaller vocabulary means less memory and simpler training.

Although character tokenization has the above advantages, it’s still not the generally preferred method because of two shortcomings.

Lack of Meaning: Unlike words, characters don’t carry much meaning. For example, splitting “knowledge” into ["k", "n", "o", "w", "l", "e", "d", "g", "e"] loses the context of the full word.

Longer Tokenized Sequences: In character-based tokenization, each word is broken down into multiple characters, resulting in much longer token sequences than word-level tokenization of the same text. This becomes a limitation when working with transformer models, as their context length is fixed. Longer sequences mean that less text can fit within the model’s context window, which can reduce the efficiency and effectiveness of training.
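The trade-off between vocabulary size and sequence length is easy to see by tokenizing one short sentence both ways. The sentence below is an arbitrary example chosen for this sketch.

```python
text = "knowledge is power"

word_tokens = text.split()  # word-level: split on whitespace
char_tokens = list(text)    # character-level: one token per character

print(word_tokens)            # ['knowledge', 'is', 'power']
print(len(word_tokens))       # 3
print(len(char_tokens))       # 18
print(len(set(char_tokens)))  # 13 distinct characters, including the space
```

Three word tokens become eighteen character tokens, so the same context window holds far less text, even though only thirteen distinct symbols are needed to represent it.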

3. Subword Tokens

Subword tokenization splits text into chunks smaller than or equal to word length. This technique is considered a middle ground between word and character tokenization, balancing vocabulary size, sequence length, and contextual meaning.

Subword-based tokenization algorithms don’t split frequently used words into smaller subwords. Instead, they split rare words into smaller, meaningful subwords that tend to be part of the vocabulary. This allows models to generalize better and handle new or domain-specific words.

Below is an example of subword tokenization used by GPT models. Check out this link and try a few examples for a better understanding: Tokenizer.
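To make the idea concrete, here is a minimal sketch of one simple subword strategy: greedy longest-match lookup against a fixed vocabulary, falling back to single characters. This is not the algorithm GPT models use (they rely on byte-pair encoding, which learns its merges from data), and the `subword_tokenize` function and its tiny vocabulary are invented for illustration.

```python
def subword_tokenize(word, vocab):
    """Greedily take the longest vocabulary entry at each position."""
    tokens = []
    start = 0
    while start < len(word):
        end = len(word)
        # Shrink the candidate until it is in the vocabulary
        # or only a single character is left.
        while end > start + 1 and word[start:end] not in vocab:
            end -= 1
        tokens.append(word[start:end])  # single characters are the fallback
        start = end
    return tokens

# Hypothetical vocabulary: one frequent whole word plus a few reusable pieces.
vocab = {"the", "apolog", "ize", "etic", "y", "d"}

print(subword_tokenize("the", vocab))         # ['the']
print(subword_tokenize("apologized", vocab))  # ['apolog', 'ize', 'd']
print(subword_tokenize("apologetic", vocab))  # ['apolog', 'etic']
```

The frequent word stays whole, while “apologized”, which is not in the vocabulary, is still represented through pieces it shares with related words.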

4. Byte Tokens

Byte tokenization breaks text down into the individual bytes that are used to represent Unicode characters. Researchers are still exploring this method; in short, this tokenization technique offers a competitive edge over the others, especially in multilingual scenarios.
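As a rough sketch of the idea, the snippet below treats each UTF-8 byte of a string as one token. The `byte_tokenize` and `byte_detokenize` helpers are hypothetical names for this example, and UTF-8 is an assumed encoding; byte-level tokenizers in practice usually add further steps on top of this.

```python
def byte_tokenize(text):
    """Encode text as UTF-8 and treat each byte (0-255) as a token ID."""
    return list(text.encode("utf-8"))

def byte_detokenize(token_ids):
    """Rebuild the original text from its byte-level token IDs."""
    return bytes(token_ids).decode("utf-8")

print(byte_tokenize("hi"))               # [104, 105]
print(byte_tokenize("é"))                # [195, 169]
print(byte_detokenize([104, 105, 195, 169]))  # hié
```

Because every possible byte is one of only 256 values, no input is ever out of vocabulary, whatever the language. The cost is that non-ASCII characters take several tokens each.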

By understanding tokenization, you’ve taken your first step toward exploring how large language models work under the hood. Stay tuned for more insights into NLP and LLMs!