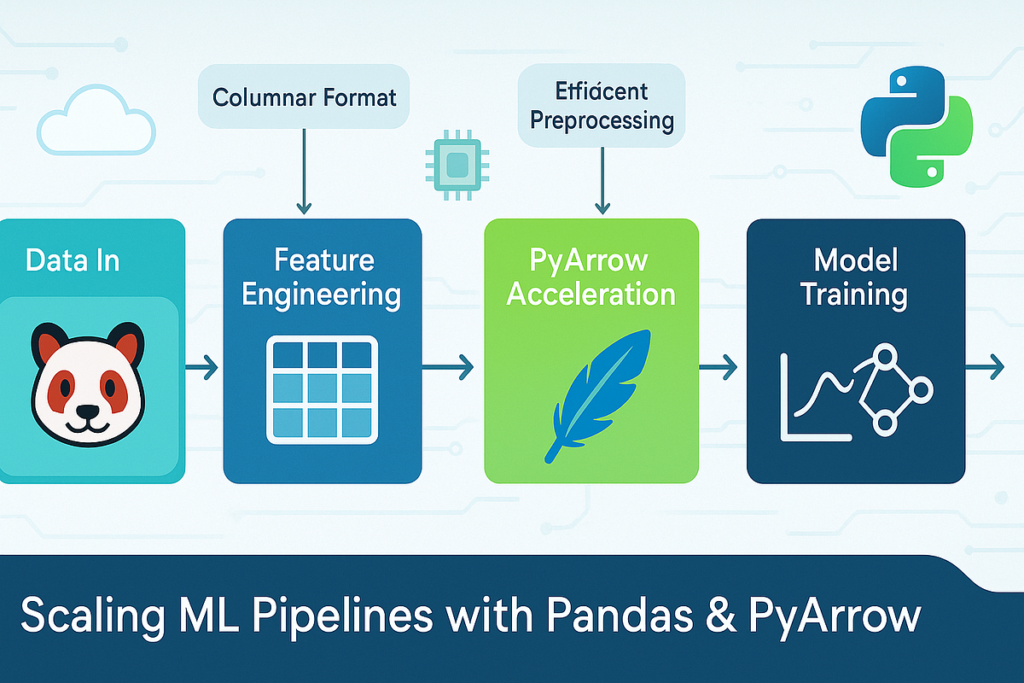

Improve machine learning performance by scaling your pipelines with Pandas and PyArrow. Learn how Apache Arrow enables fast, memory-efficient data processing for ML workflows.

Modern machine learning workflows are pushing the limits of data processing tools. As datasets swell into the tens or hundreds of gigabytes, even seasoned data scientists find their trusted pandas scripts grinding to a halt. But what if your favorite data manipulation tool could be turbocharged for scale without rewriting everything from scratch? Enter PyArrow, the Python interface to Apache Arrow, which brings columnar in-memory data interchange to pandas and can turn sluggish pipelines into fast ones.

In this article, we’ll explore how to scale machine learning pipelines using Pandas and PyArrow, harnessing the speed and memory efficiency of Apache Arrow while keeping the familiar flexibility of pandas. Whether you’re building feature engineering workflows, preprocessing training datasets, or exporting model outputs, this approach can be a game-changer.