Text Tokenization

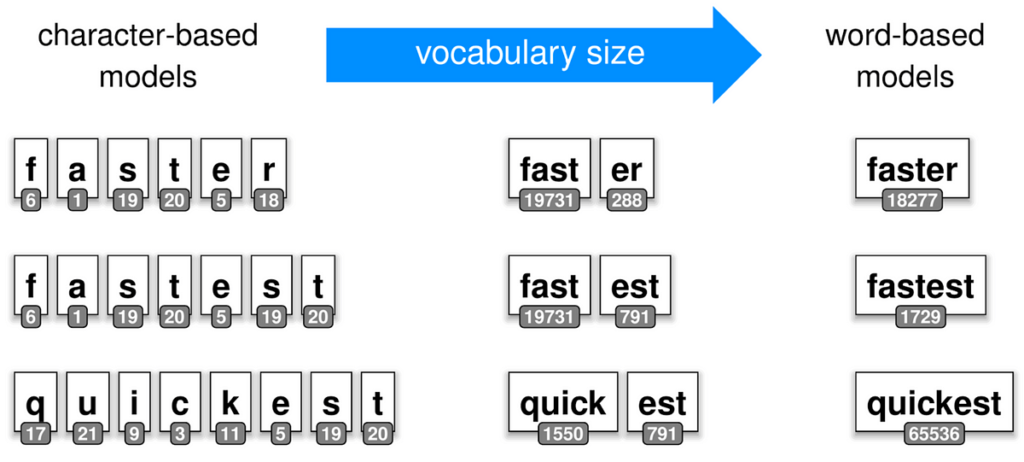

Text tokenization is the process of breaking text into smaller units (tokens) for processing by language models. Common approaches include:

- Word-level: Each token represents a complete word

- Subword-level: Words are broken into common subword units (e.g., BPE, WordPiece)

- Character-level: Each character is a separate token

Modern LLMs typically use subword tokenization because it balances vocabulary size against the handling of rare words: common words stay whole, while rare words are split into pieces the model has already seen.
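To make the three granularities concrete, here is a minimal sketch in Python. The subword function uses greedy longest-match segmentation in the style of WordPiece, with `##` marking a piece that continues a word. The vocabulary `TOY_VOCAB` and all function names are hypothetical and chosen only for illustration; real tokenizers learn their vocabularies from large corpora and handle many more edge cases.

```python
def word_tokenize(text):
    """Word-level: split on whitespace, one token per word."""
    return text.split()


def char_tokenize(text):
    """Character-level: every character is its own token."""
    return list(text)


def subword_tokenize(word, vocab):
    """Subword-level: greedy longest-match segmentation (WordPiece-style).

    Pieces that continue a word carry a '##' prefix. If no piece in the
    vocabulary matches at some position, the whole word becomes '[UNK]'.
    """
    tokens = []
    start = 0
    while start < len(word):
        end = len(word)
        piece = None
        # Try the longest remaining substring first, then shrink it.
        while end > start:
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate
            if candidate in vocab:
                piece = candidate
                break
            end -= 1
        if piece is None:
            return ["[UNK]"]
        tokens.append(piece)
        start = end
    return tokens


# Hypothetical toy vocabulary, not taken from any real model.
TOY_VOCAB = {"token", "##ization", "##ize", "un", "##break", "##able"}

text = "tokenization unbreakable"
word_tokens = word_tokenize(text)
char_tokens = char_tokenize("token")
subword_tokens = [p for w in word_tokens for p in subword_tokenize(w, TOY_VOCAB)]

print(word_tokens)     # ['tokenization', 'unbreakable']
print(char_tokens)     # ['t', 'o', 'k', 'e', 'n']
print(subword_tokens)  # ['token', '##ization', 'un', '##break', '##able']
```

Neither full word appears in the toy vocabulary, yet both are represented without an unknown token, which is the trade-off subword tokenization is meant to achieve.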

Large Language Models (LLMs) are advanced neural network architectures trained on massive corpora of text data to understand, generate, and manipulate human language. These models have revolutionized natural language processing (NLP) through their remarkable ability to comprehend context, follow instructions, and generate coherent, relevant text across diverse domains.

Key Characteristics

- Scale: Contemporary LLMs contain billions to trillions of parameters, enabling them to capture complex linguistic patterns and world knowledge.

- Transformer Architecture: Most LLMs use transformer-based architectures with self-attention mechanisms that allow them to process long-range dependencies in sequential data.

- Autoregressive Generation: They typically generate text by predicting one token at a time, with each prediction conditioned on all previous tokens.

- Next-Token Prediction: LLMs are fundamentally trained to predict the next token in a sequence, a simple yet powerful objective that leads to emergent capabilities.

- Context Window: They can process and maintain context over thousands of tokens, enabling understanding of long documents and conversations.

- Few-Shot Learning: Modern LLMs can adapt to new tasks with minimal examples through in-context learning, without parameter updates.
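The next-token objective and the autoregressive loop described above can be illustrated with a deliberately tiny stand-in for an LLM. The sketch below uses a bigram count model, which conditions only on the single previous token, whereas a real LLM conditions on every token in its context window through a transformer. The corpus and the names `train_bigram`, `predict_next`, and `generate` are hypothetical and exist only for this example.

```python
from collections import Counter, defaultdict


def train_bigram(tokens):
    """'Training': count how often each token follows each other token."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def predict_next(counts, prev):
    """Next-token prediction: return the most frequent follower of `prev`.

    Ties are broken alphabetically so the result is deterministic.
    Returns None if `prev` was never followed by anything in training.
    """
    followers = counts.get(prev)
    if not followers:
        return None
    return max(sorted(followers), key=lambda t: followers[t])


def generate(counts, prompt, max_new_tokens):
    """Autoregressive generation: append one predicted token at a time,
    feeding each prediction back in as input for the next step."""
    output = list(prompt)
    for _ in range(max_new_tokens):
        nxt = predict_next(counts, output[-1])
        if nxt is None:
            break
        output.append(nxt)
    return output


corpus = "the cat sat on the mat and the cat slept".split()
model = train_bigram(corpus)
generated = generate(model, ["the"], 3)
print(generated)  # ['the', 'cat', 'sat', 'on']
```

This version always picks the single most likely token (greedy decoding). Production systems usually sample from the predicted distribution instead, but the loop has the same shape: predict, append, repeat.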