Support Vector Machine

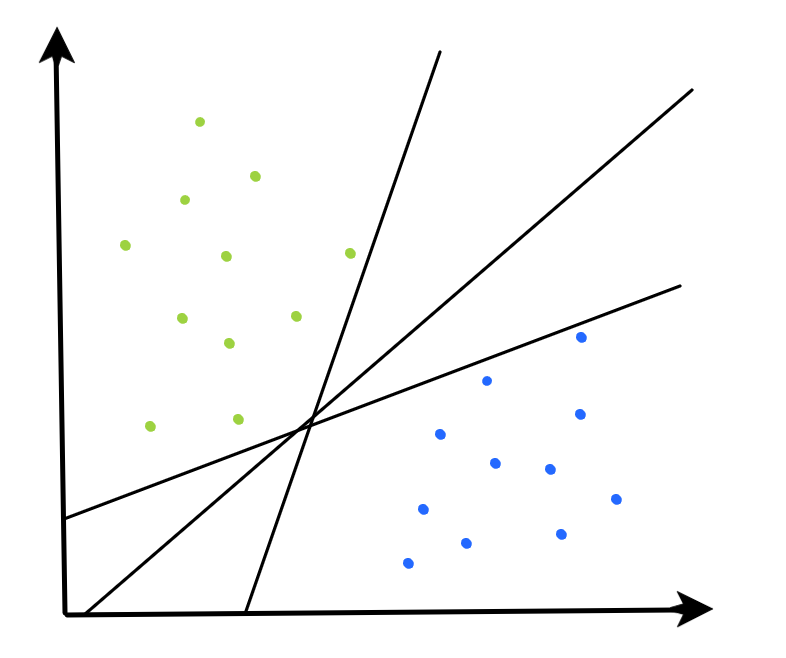

Typically, two approaches are used when trying to classify non-linear data:

- Fit a non-linear classification algorithm to the data in its original feature space.

- Enlarge the feature space to a higher dimension where a linear decision boundary exists.

SVMs aim to find a linear decision boundary in a higher dimensional space, but they do this in a computationally efficient manner using Kernel functions, which allow them to find this decision boundary without having to apply the non-linear transformation to the observations.

There are many ways to enlarge the feature space via some non-linear transformation of the features (higher order polynomials, interaction terms, etc.). Let’s look at an example where we expand the feature space by applying a quadratic polynomial expansion.

Suppose our original feature set consists of the p features below.

Our new feature set after applying the quadratic polynomial expansion consists of the 2p features below.
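As a sketch, assuming the usual notation in which $X_j$ denotes the $j$-th feature (the symbols are an assumption), the two feature sets can be written as:

$$
\text{original } (p \text{ features}): \quad X_1,\; X_2,\; \dots,\; X_p
$$

$$
\text{expanded } (2p \text{ features}): \quad X_1,\; X_1^2,\; X_2,\; X_2^2,\; \dots,\; X_p,\; X_p^2
$$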

Now, we need to solve the following optimization problem.
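One way to write this problem, assuming the standard soft-margin formulation with margin $M$, slack variables $\epsilon_i$, slack budget $C$, labels $y_i \in \{-1, 1\}$, and coefficients $\beta_{j1}$ and $\beta_{j2}$ for the linear and quadratic terms (all of this notation is an assumption), is:

$$
\begin{aligned}
\underset{\beta_0,\,\beta_{11},\,\beta_{12},\,\dots,\,\beta_{p1},\,\beta_{p2},\,\epsilon_1,\,\dots,\,\epsilon_n,\,M}{\text{maximize}} \quad & M \\
\text{subject to} \quad & y_i\left(\beta_0 + \sum_{j=1}^{p} \beta_{j1} x_{ij} + \sum_{j=1}^{p} \beta_{j2} x_{ij}^2\right) \ge M(1 - \epsilon_i), \\
& \epsilon_i \ge 0, \quad \sum_{i=1}^{n} \epsilon_i \le C, \quad \sum_{j=1}^{p} \sum_{k=1}^{2} \beta_{jk}^2 = 1 .
\end{aligned}
$$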

It’s the same as the SVC optimization problem we saw earlier, but now we have quadratic terms included in our feature space, so we have twice as many features. The solution to the above will be linear in the quadratic space, but non-linear when translated back to the original feature space.

However, solving the problem above requires applying the quadratic polynomial transformation to every observation the SVC is fit on. This can be computationally expensive with high dimensional data. Additionally, for more complex data, a linear decision boundary may not exist even after applying the quadratic expansion. In that case, we must explore other higher dimensional spaces before we can find a linear decision boundary, and applying the non-linear transformation to our data could become very computationally expensive. Ideally, we would be able to find this decision boundary in the higher dimensional space without having to apply the required non-linear transformation to our data.
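To make the explicit approach concrete, here is a minimal sketch that applies the quadratic expansion by hand and fits a linear SVC before and after. The helper name `quadratic_expansion` and the choice of the `make_circles` toy data are illustrative assumptions, not part of the original walkthrough.

```python
import numpy as np
from sklearn.datasets import make_circles
from sklearn.svm import SVC

# Two concentric circles: not linearly separable in the original 2-D space
X, y = make_circles(n_samples=200, factor=0.3, noise=0.05, random_state=0)


def quadratic_expansion(X):
    """Hypothetical helper: map p features to 2p features [X_1..X_p, X_1^2..X_p^2]."""
    return np.hstack([X, X ** 2])


# Every observation has to be transformed before fitting
X_quad = quadratic_expansion(X)

# Linear SVC on the original features vs. on the expanded features
acc_original = SVC(kernel="linear", C=1).fit(X, y).score(X, y)
acc_expanded = SVC(kernel="linear", C=1).fit(X_quad, y).score(X_quad, y)

print(f"original features ({X.shape[1]}): accuracy = {acc_original:.2f}")
print(f"expanded features ({X_quad.shape[1]}): accuracy = {acc_expanded:.2f}")
```

Note that the transformation step touches every row of `X`, which is the cost the paragraph above is concerned with.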

Luckily, it turns out that the solution to the SVC optimization problem above does not require explicit knowledge of the feature vectors for the observations in our dataset. We only need to know how the observations compare to each other in the higher dimensional space. In mathematical terms, this means we just need to compute the pairwise inner products (chap. 2 here explains this in detail), where the inner product can be thought of as a value that quantifies the similarity of two observations.
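The sketch below illustrates this point with scikit-learn: one classifier is fit on explicit feature vectors, the other only on the matrix of pairwise inner products (via `kernel="precomputed"`). The quadratic feature map and the toy data are illustrative assumptions.

```python
import numpy as np
from sklearn.datasets import make_circles
from sklearn.svm import SVC

X, y = make_circles(n_samples=100, factor=0.3, noise=0.05, random_state=0)

# Explicit quadratic feature map (illustrative choice)
Phi = np.hstack([X, X ** 2])

# Gram matrix: entry (i, j) is the inner product <phi(x_i), phi(x_j)>
K = Phi @ Phi.T

# Fit once from explicit feature vectors, once from inner products only
clf_features = SVC(kernel="linear", C=1).fit(Phi, y)
clf_gram = SVC(kernel="precomputed", C=1).fit(K, y)

# To predict, the Gram-based model needs the inner products between the
# points to classify and the training points (here they are the same set)
agreement = np.mean(clf_features.predict(Phi) == clf_gram.predict(K))
print(f"fraction of identical predictions: {agreement:.2f}")
```

The second classifier never sees the feature vectors themselves, only how each pair of observations compares.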

It turns out that for some feature spaces, there exist functions (i.e. Kernel functions) that allow us to compute the inner product of two observations without having to explicitly transform those observations to that feature space. More detail behind this Kernel magic and when it is possible can be found in chap. 3 & chap. 6 here.
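A small worked example may help here. For 2-D inputs, the degree-2 polynomial kernel $(1 + \langle x, z \rangle)^2$ gives the same value as the inner product under an explicit 6-dimensional feature map. The function names `phi` and `poly2_kernel` and the sample points are hypothetical, chosen only for illustration.

```python
import numpy as np


def phi(x):
    """Explicit feature map matching the degree-2 polynomial kernel on 2-D inputs."""
    x1, x2 = x
    s = np.sqrt(2.0)
    return np.array([1.0, s * x1, s * x2, x1 ** 2, x2 ** 2, s * x1 * x2])


def poly2_kernel(x, z):
    """Degree-2 polynomial kernel (1 + <x, z>)^2, computed in the original space."""
    return (1.0 + np.dot(x, z)) ** 2


x = np.array([0.5, -1.0])
z = np.array([2.0, 0.25])

explicit = np.dot(phi(x), phi(z))  # inner product after transforming both points
implicit = poly2_kernel(x, z)      # same quantity without any transformation

print(explicit, implicit)
```

The kernel needs a single 2-D dot product, while the explicit route builds two 6-D vectors first. The gap widens quickly as the input dimension and polynomial degree grow.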

Since these Kernel functions allow us to operate in a higher dimensional space, we have the freedom to define decision boundaries that are much more flexible than those produced by a typical SVC.

Let’s look at a popular Kernel function: the Radial Basis Function (RBF) Kernel.
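For reference, the RBF kernel is commonly written as follows, where $\gamma > 0$ is a tuning parameter and $x_i$, $x_{i'}$ are two observations with $p$ features each (the symbols are assumed notation):

$$
K(x_i, x_{i'}) = \exp\left(-\gamma \sum_{j=1}^{p} (x_{ij} - x_{i'j})^2\right) = \exp\left(-\gamma \lVert x_i - x_{i'} \rVert^2\right)
$$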

The formula is shown above for reference, but for the sake of basic intuition the details aren’t important: just think of it as something that quantifies how “similar” two observations are in a high (infinite!) dimensional space.

Let’s revisit the data we saw at the end of the SVC section. When we apply the RBF kernel to an SVM classifier & fit it to that data, we can produce a decision boundary that does a much better job of distinguishing the observation classes than that of the SVC.
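To build intuition for the "similarity" reading, the sketch below evaluates an RBF kernel on a few hand-picked points and cross-checks the values against scikit-learn's `rbf_kernel`. The helper `rbf`, the points, and `gamma=1.0` are illustrative assumptions.

```python
import numpy as np
from sklearn.metrics.pairwise import rbf_kernel


def rbf(x, z, gamma=1.0):
    """RBF kernel: exp(-gamma * squared Euclidean distance between x and z)."""
    return np.exp(-gamma * np.sum((x - z) ** 2))


a = np.array([0.0, 0.0])
near = np.array([0.1, 0.0])
far = np.array([3.0, 0.0])

print("a vs itself:", rbf(a, a))     # identical points give the maximum value, 1
print("a vs near  :", rbf(a, near))  # close points give a value close to 1
print("a vs far   :", rbf(a, far))   # distant points give a value close to 0

# Cross-check against scikit-learn's pairwise implementation
K = rbf_kernel(np.vstack([a, near, far]), gamma=1.0)
```

Larger values of `gamma` make the similarity fall off faster with distance, so each observation influences a smaller neighbourhood.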

```python
import matplotlib.pyplot as plt
import numpy as np
from sklearn.datasets import make_circles
from sklearn import svm

# create circle inside a circle
X, Y = make_circles(n_samples=100, factor=0.3, noise=0.05, random_state=0)

kernel_list = ["linear", "rbf"]
fignum = 1

for k in kernel_list:
    # fit the model
    clf = svm.SVC(kernel=k, C=1)
    clf.fit(X, Y)

    # evaluate the decision function on a grid covering the data
    xx = np.linspace(-2, 2, 8)
    yy = np.linspace(-2, 2, 8)
    X1, X2 = np.meshgrid(xx, yy)
    Z = np.empty(X1.shape)
    for (i, j), val in np.ndenumerate(X1):
        x1 = val
        x2 = X2[i, j]
        p = clf.decision_function([[x1, x2]])
        Z[i, j] = p[0]

    levels = [-1.0, 0.0, 1.0]
    linestyles = ["dashed", "solid", "dashed"]
    colors = "k"

    # plot the line, the points, and the nearest vectors to the plane
    plt.figure(fignum, figsize=(4, 3))
    plt.contour(X1, X2, Z, levels, colors=colors, linestyles=linestyles)
    plt.scatter(
        clf.support_vectors_[:, 0],
        clf.support_vectors_[:, 1],
        s=80,
        facecolors="none",
        zorder=10,
        edgecolors="k",
    )
    plt.scatter(X[:, 0], X[:, 1], c=Y, cmap=plt.cm.Paired, edgecolor="black", s=20)

    # print kernel & corresponding accuracy score
    plt.title(f"Kernel = {k}: Accuracy = {clf.score(X, Y)}")
    plt.axis("tight")
    fignum = fignum + 1

plt.show()
```

Ultimately, there are many different choices of Kernel function, which provides a lot of freedom in the kinds of decision boundaries we can produce. This can be very powerful, but it’s important to accompany these Kernel functions with appropriate regularization to reduce the chance of overfitting.
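As a rough illustration of why this matters, the sketch below fits RBF SVMs with increasingly large `gamma` on a noisy toy dataset and compares training accuracy with held-out accuracy. In scikit-learn's `SVC`, a larger `gamma` makes the boundary more local and flexible, and a smaller `C` regularizes more strongly. The dataset, the split, and the grid of values are illustrative assumptions.

```python
from sklearn.datasets import make_circles
from sklearn.model_selection import train_test_split
from sklearn.svm import SVC

# Noisier circles so that overfitting is easier to provoke
X, y = make_circles(n_samples=300, factor=0.5, noise=0.2, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0
)

results = {}
for gamma in [0.1, 1, 10, 1000]:
    clf = SVC(kernel="rbf", C=1, gamma=gamma).fit(X_train, y_train)
    # Store (training accuracy, held-out accuracy) for each setting
    results[gamma] = (clf.score(X_train, y_train), clf.score(X_test, y_test))
    print(f"gamma={gamma:<6} train={results[gamma][0]:.2f} test={results[gamma][1]:.2f}")
```

A widening gap between the training and held-out scores is a sign that the boundary is fitting noise. In practice these settings are usually chosen by cross-validation rather than by hand.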