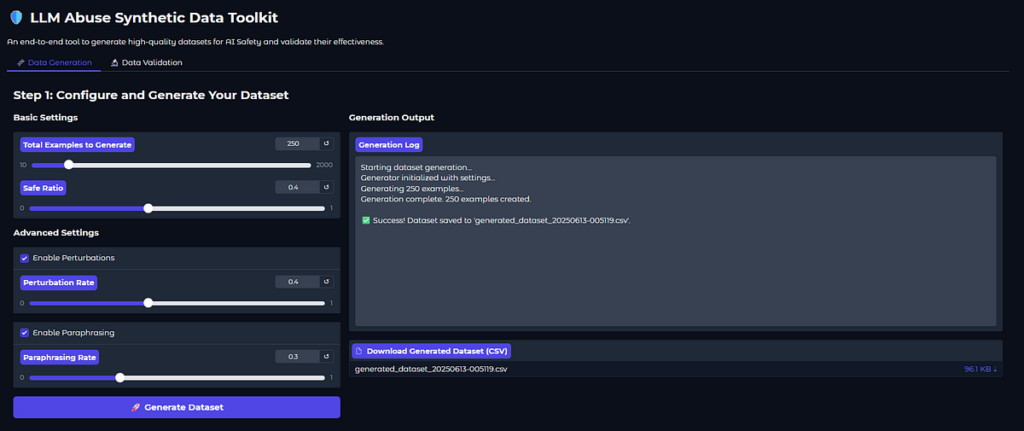

A few days ago, I shared three ideas for making my AI Safety Data Workbench smarter in “Synthetic Data Workbench: From Generation to Validation, 3 Improvement Ideas”. Today, I’m excited to announce that the first major upgrade is live: Semantic and Context-Aware Validation.

From Generation to Validation: A Visual Tour of My New AI Safety Toolkit

The problem with basic data validation is that it can’t understand meaning. Two prompts can be 100% different in their wording but mean the exact same thing. For example:

- “How do I create a phishing email?”

- “Give me a template for a fake login page request.”

A model trained on only one of these might fail to recognize the other. My goal was to build a validator that could detect this “template fatigue” and measure true conceptual diversity.
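To see why a purely lexical check misses this, here is a minimal sketch (not part of data_validator.py) that scores the two example prompts with word-level Jaccard overlap, a common surface-form metric. The helper name `jaccard_word_overlap` is hypothetical.

```python
import re


def jaccard_word_overlap(a: str, b: str) -> float:
    """Word-level Jaccard similarity: shared distinct words / all distinct words."""
    words_a = set(re.findall(r"[a-z']+", a.lower()))
    words_b = set(re.findall(r"[a-z']+", b.lower()))
    if not words_a and not words_b:
        return 1.0  # two empty strings are trivially identical
    return len(words_a & words_b) / len(words_a | words_b)


p1 = "How do I create a phishing email?"
p2 = "Give me a template for a fake login page request."

score = jaccard_word_overlap(p1, p2)
print(f"{score:.3f}")  # → 0.067 (only the word "a" is shared)
```

By this measure the two prompts look almost unrelated, which is exactly the blind spot a meaning-based validator is meant to cover.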

The Implementation: From Words to Meaning Vectors 💡

Here’s a look at the technical implementation inside my data_validator.py module:

- Vector Encoding: When a dataset is uploaded, every prompt is converted into a 384-dimensional numerical vector (an “embedding”) using a SentenceTransformer model. This vector represents the meaning of the prompt, not just its words.

- Similarity Calculation: Using torch, the system then computes the cosine similarity between every pair of embeddings. This is the heavy lifting that happens behind the scenes, and its cost grows quadratically with the number of prompts.
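For reference, here are the quantities behind these two steps, written in my own notation rather than the post’s: for n prompt embeddings e_1 to e_n, cosine similarity compares only the direction of two vectors, and the average reported later is taken over the n(n-1)/2 distinct pairs, i.e. the strict upper triangle of the similarity matrix.

```latex
\cos(\mathbf{e}_i, \mathbf{e}_j) = \frac{\mathbf{e}_i \cdot \mathbf{e}_j}{\lVert \mathbf{e}_i \rVert \, \lVert \mathbf{e}_j \rVert} \in [-1, 1],
\qquad
\bar{s} = \frac{2}{n(n-1)} \sum_{i<j} \cos(\mathbf{e}_i, \mathbf{e}_j)
```

The pair count is why this step dominates: 1,000 prompts already means 499,500 comparisons, and 10,000 prompts means 49,995,000, with the full 10,000 × 10,000 matrix taking roughly 400 MB in float32.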

```python
# Inside data_validator.py
import torch
from sentence_transformers import SentenceTransformer, util


class SyntheticDataValidator:
    def __init__(self):
        # Model is pre-loaded once for speed; all-MiniLM-L6-v2 emits 384-dim embeddings
        self.semantic_model = SentenceTransformer('all-MiniLM-L6-v2')

    def analyze_semantic_similarity(self, texts):
        # 1. Encode all prompts into meaning vectors
        embeddings = self.semantic_model.encode(texts, convert_to_tensor=True)

        # 2. Calculate cosine similarity for all pairs (an n x n matrix)
        cosine_scores = util.cos_sim(embeddings, embeddings)

        # 3. Keep the strict upper triangle: each unordered pair once, no self-pairs
        mask = torch.triu(torch.ones_like(cosine_scores), diagonal=1).bool()
        upper_triangle = cosine_scores[mask]

        # Extract key metrics from the similarity values
        avg_similarity = torch.mean(upper_triangle).item()
        highly_similar_count = torch.sum(upper_triangle > 0.90).item()

        return {
            'average_semantic_similarity': avg_similarity,
            'highly_similar_pairs_count': highly_similar_count,
        }
```
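Because loading the model requires a download, the metric extraction in step 3 can be sanity-checked on its own. The sketch below is a dependency-free re-implementation of that step (my own, not part of data_validator.py; the function name `pairwise_metrics` and the toy vectors are hypothetical). `itertools.combinations` visits each unordered pair exactly once, which is what the `torch.triu(..., diagonal=1)` mask selects.

```python
import math
from itertools import combinations


def cosine(u, v):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def pairwise_metrics(embeddings, threshold=0.90):
    """Average similarity and near-duplicate count over all unordered pairs i < j."""
    scores = [cosine(u, v) for u, v in combinations(embeddings, 2)]
    if not scores:
        # Fewer than two prompts: no pairs to compare, so report NaN rather than a misleading 0
        return {'average_semantic_similarity': math.nan, 'highly_similar_pairs_count': 0}
    return {
        'average_semantic_similarity': sum(scores) / len(scores),
        'highly_similar_pairs_count': sum(s > threshold for s in scores),
    }


# Toy 3-dim "embeddings": the first two point in nearly the same direction
toy = [[1.0, 0.0, 0.0], [0.99, 0.1, 0.0], [0.0, 1.0, 0.0]]
print(pairwise_metrics(toy))
```

On the toy input, only the first pair clears the 0.90 threshold, so the count is 1 while the average stays low because the third vector is orthogonal to the first.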

The Result: Deeper, More Meaningful Insights 📊

The UI now includes a “Semantic Analysis” section that provides two crucial new metrics:

- Semantic Diversity (1 - Avg Similarity): A single score that tells you how conceptually diverse your entire dataset is. Higher is better!

- Highly Similar Pairs: A count of prompt pairs that are near-clones in meaning (cosine similarity above 0.90), giving you a direct signal to diversify your generation templates.
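A count tells you that near-clones exist but not which prompts they are. As a hedged extension (not something the post says the UI does), the same similarity matrix can be turned into the Semantic Diversity score plus the index pairs worth rewriting. `semantic_report` is a hypothetical helper that takes the n x n matrix as nested lists, for example `cosine_scores.tolist()` from the torch code.

```python
def semantic_report(sim_matrix, threshold=0.90):
    """Summarize an n x n cosine-similarity matrix given as nested lists.

    Returns Semantic Diversity (1 - mean similarity over pairs i < j)
    and the index pairs whose similarity exceeds `threshold`.
    """
    n = len(sim_matrix)
    # Walk the strict upper triangle so each pair is counted once
    pair_scores = [(i, j, sim_matrix[i][j]) for i in range(n) for j in range(i + 1, n)]
    if not pair_scores:
        raise ValueError("need at least two prompts to measure diversity")
    avg = sum(s for _, _, s in pair_scores) / len(pair_scores)
    near_clones = [(i, j) for i, j, s in pair_scores if s > threshold]
    return {"semantic_diversity": 1.0 - avg, "near_clone_pairs": near_clones}


# Hypothetical 3-prompt matrix: prompts 0 and 2 are near-clones
sim = [
    [1.00, 0.20, 0.95],
    [0.20, 1.00, 0.35],
    [0.95, 0.35, 1.00],
]
report = semantic_report(sim)
print(report)
```

Returning indices instead of only a count lets you jump straight to the templates that produced the duplicates.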

Semantic Analysis Score in Highlight Box

This upgrade moves validation from a simple lexical check to a semantic one. It helps ensure we train our safety models on data that forces them to understand context and intent, making them far more robust against the clever, rephrased attacks we see in the real world.

Next up, I’ll show you how I implemented the most exciting feature: the Adversarial Self-Correction Loop!

What other advanced validation techniques do you think are critical for AI safety?