At 2 a.m., in a dark living room, a robot vacuum cleaner is working silently.

Suddenly, it stops: “There’s a sofa leg ahead.”

The voice assistant chimes in: “It may have something to do with the popcorn you dropped half an hour ago.”

The robot thinks for a moment: “I’ll go around it first and come back to clean later.”

The motor starts, and the robot gently turns, bypassing the obstacle to continue its work.

This late-night “heart-to-heart” actually hides a secret.

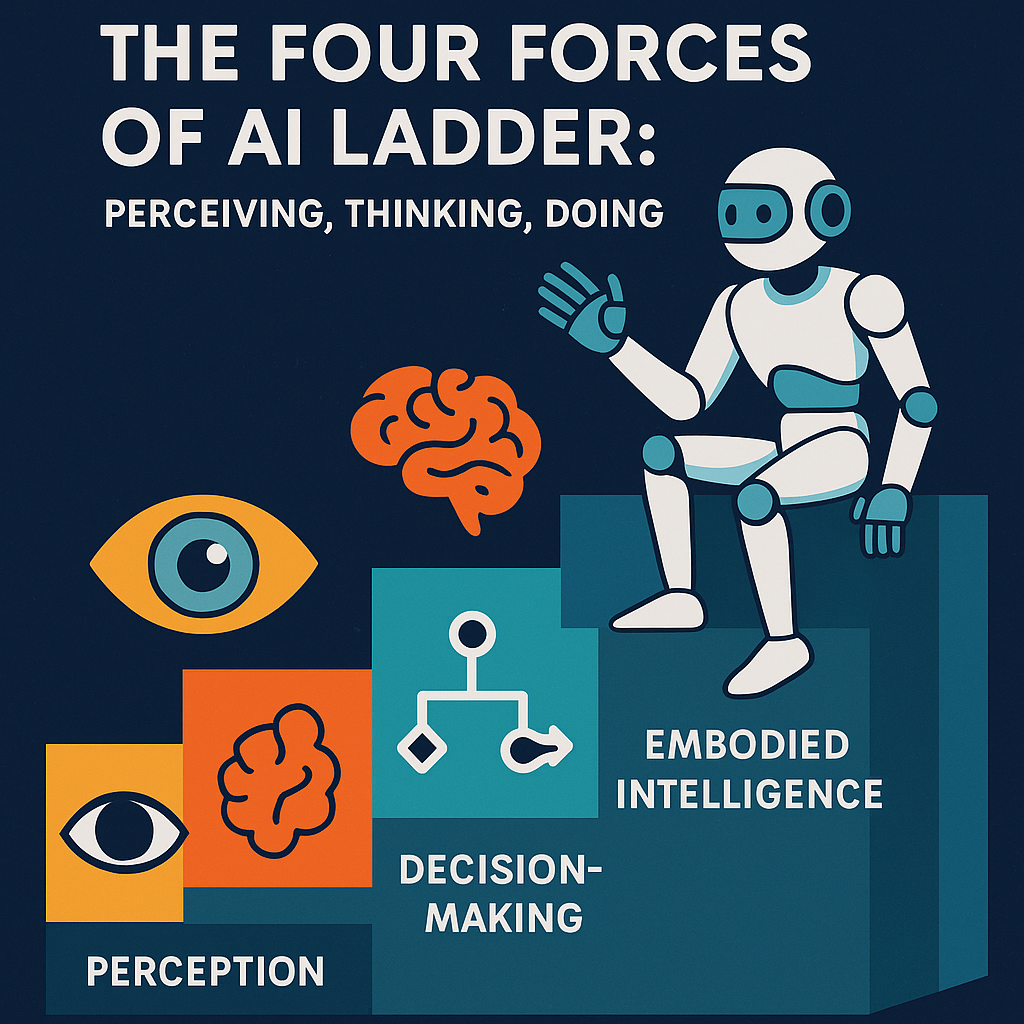

These four sentences correspond to the four major capabilities of artificial intelligence: perception, cognition, decision-making, and embodiment (physical action).

They are like four levels on the AI skill tree, unlocked step by step.

Next, let’s climb this “four-power ladder” to explore AI’s exciting journey from “seeing” to “moving steadily,” and where it is headed next.

How do machines “see” the world? Not with eyes, but by interpreting pixels with algorithms.

Perceptual intelligence is the first step in AI and the foundation for every capability that follows.

It lets machines “digitize” the external world: converting images and sounds into signals that computers can process, and extracting meaningful features and labels.

Just as the human brain’s visual and auditory cortices recognize a cat’s shape and its meow, a computer’s perception module must “see” objects in pixels and “hear” speech in waveforms.

Over a decade ago, computer vision was jokingly called “the world’s hardest problem of teaching machines to recognize cats.”

But in 2012, deep convolutional neural networks rewrote history.

AlexNet, developed by Geoffrey Hinton’s group, shattered records in the ImageNet image recognition competition, cutting the top-5 classification error rate by more than 10 percentage points compared with the runner-up!

This meant computers could finally tell cats from dogs in photos reliably, a milestone at which machines truly “saw” the content of images.

Interestingly, Google researchers used similar algorithms to “self-learn” the concept of “cat” from huge amounts of unlabeled images from the web, letting a neural network discover cat-face patterns without human guidance.

Since then, convolutional neural networks have become the dominant force in computer vision, and seeing the colorful world is no longer hard for computers (early models did make mistakes, of course: one model reportedly mistook a panda for a roasted sweet potato, simply because of its black-and-white pattern and outline).
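To make “seeing from pixels” concrete, here is a minimal sketch of the core operation inside a convolutional layer: sliding a small kernel over a grid of pixel values and summing the products. The tiny image and the hand-picked vertical-edge kernel are illustrative assumptions; a real CNN such as AlexNet learns its kernels from data and stacks many such layers with nonlinearities in between.

```python
def conv2d(image, kernel):
    """'Valid' 2D sliding-window filter (no padding).

    Deep learning libraries compute this cross-correlation and call it convolution.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the kh x kw window whose top-left corner is (i, j)
            acc = 0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        out.append(row)
    return out

# A 5x5 grayscale "image": dark on the left, bright on the right.
image = [[0, 0, 9, 9, 9] for _ in range(5)]
# Hand-picked vertical-edge detector; a trained CNN would learn kernels like this.
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]
feature_map = conv2d(image, kernel)
for row in feature_map:
    print(row)
```

The large responses mark windows that straddle the dark-to-bright boundary, while the window over the uniformly bright region responds with zero. Stacking layers of such detectors is how a network builds up from edges to textures to whole objects.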

Meanwhile, auditory perception also advanced rapidly with the help of deep learning.

Traditional speech recognition relied on hidden Markov models (HMMs) paired with acoustic models, and its accuracy was stuck at around 90%, hard to push any higher.

After deep neural networks were introduced, speech recognition accuracy soared to 95% and then 98%, approaching the level of professional human transcribers.

In 2017, Microsoft Research announced that its conversational speech recognition system had reached a word error rate of just 5.1% on the standard Switchboard benchmark, comparable to human transcribers.
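Word error rate is the metric behind that 5.1% figure. It is conventionally computed as the minimum number of word substitutions (S), deletions (D), and insertions (I) needed to turn the system’s transcript into the reference, divided by the number of reference words N, that is (S + D + I) / N. The sketch below computes it with a standard edit-distance table; the sample sentences are made up for illustration.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """(substitutions + deletions + insertions) / number of reference words."""
    ref, hyp = reference.split(), hypothesis.split()
    # d[i][j] = fewest edits turning the first i reference words into the first j hypothesis words
    d = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        d[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        d[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            d[i][j] = min(d[i - 1][j] + 1,         # deletion
                          d[i][j - 1] + 1,         # insertion
                          d[i - 1][j - 1] + cost)  # substitution (or match if cost == 0)
    return d[len(ref)][len(hyp)] / len(ref)

wer = word_error_rate("turn on the kitchen light", "turn on a kitchen light")
print(f"WER = {wer:.0%}")
```

Here one substitution (“the” heard as “a”) in a five-word reference gives a WER of 20%; by the same arithmetic, a 5.1% WER means roughly one word in twenty is wrong.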

This means smart speakers and phone assistants can finally “hear” what we say clearly.

As the project lead put it, the next challenge is to make machines truly “understand” the meaning of words after “hearing” them, which leads to the second level of AI evolution.

Perceptual intelligence is not limited to cameras and microphones.

Today, robots and self-driving cars carry a variety of newer sensors: millimeter-wave radar to track fast-moving objects, LiDAR to measure three-dimensional structure, inertial measurement units (IMUs) to sense motion and tilt, and so on.

These sensor streams expand the dimensions along which a machine perceives its environment, building a rich “data foundation.”

The task of perceptual intelligence is to turn this complex raw data into useful information.

This step seems simple, but without it, cognition and decision-making cannot proceed.
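A classic example of turning raw sensor readings into useful information is estimating tilt from an IMU. A gyroscope measures rotation rate, which is smooth but drifts when integrated over time; an accelerometer senses the direction of gravity, which does not drift but is noisy. A complementary filter blends the two. This is a minimal sketch with assumed values (100 Hz sampling, a blend factor of 0.98, a made-up gyro bias), not how any particular robot or car performs sensor fusion; production systems often use Kalman filters instead.

```python
import math

def accel_tilt_deg(ax: float, az: float) -> float:
    """Tilt (degrees) implied by the gravity vector alone: noisy, but drift-free."""
    return math.degrees(math.atan2(ax, az))

def complementary_filter(samples, dt=0.01, alpha=0.98):
    """Fuse gyro rate (deg/s) with accelerometer tilt.

    samples: iterable of (gyro_rate_deg_s, ax, az) tuples.
    Returns the fused angle estimate after each sample.
    """
    angle = 0.0
    history = []
    for gyro_rate, ax, az in samples:
        gyro_angle = angle + gyro_rate * dt  # integrate the gyro: smooth, but drifts
        # Trust the gyro short-term, and let the accelerometer slowly correct the drift
        angle = alpha * gyro_angle + (1 - alpha) * accel_tilt_deg(ax, az)
        history.append(angle)
    return history

# Device held still at 10 degrees; the gyro has a small constant bias of 0.5 deg/s.
tilt = math.radians(10)
samples = [(0.5, math.sin(tilt), math.cos(tilt))] * 1000  # 10 seconds at 100 Hz
estimate = complementary_filter(samples)
gyro_only = sum(rate * 0.01 for rate, _, _ in samples)  # pure integration from 0 degrees
print(f"fused: {estimate[-1]:.2f} deg, gyro only: {gyro_only:.2f} deg")
```

The fused estimate settles close to the true 10 degrees (offset slightly by the bias), whereas pure gyro integration from zero only accumulates the bias and never learns the actual tilt.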

Can machines really understand language?

With the rise of large language models, they are getting closer to this goal.

Cognitive intelligence is the second level of the AI skill tree: enabling machines to form semantic understanding from perceptual information and to carry out abstract thinking and reasoning.

Large language models (LLMs) are the stars of this level.

By training on massive amounts of text, they compress high-dimensional semantic information into enormous parameter matrices, forming knowledge representations in the style of “concept vectors.”

Put simply, the model “remembers” patterns from billions of sentences on the internet.

When you give it a sentence, it locates the corresponding meaning in a vector space with thousands of dimensions, then gradually unfolds its knowledge to answer your question, based on what it learned during training about “what to say next.”
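The “what to say next” objective can be illustrated with a drastically simplified stand-in: a bigram model that counts which word follows which. Real LLMs use neural networks over long contexts and continuous vector representations rather than count tables, so treat this only as a sketch of the prediction task, built on a made-up three-sentence corpus.

```python
from collections import Counter, defaultdict

def train_bigrams(corpus):
    """Count, for every word, how often each other word follows it."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if nothing ever followed it."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

corpus = [
    "the robot cleans the floor",
    "the robot avoids the sofa",
    "the robot finds the cat",
]
model = train_bigrams(corpus)
print(predict_next(model, "the"))  # robot
```

The word after “the” is predicted as “robot”, its most frequent continuation here. An LLM performs the same kind of prediction, but conditioned on thousands of preceding tokens and able to generalize to sentences it has never seen.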

Google’s Transformer architecture, proposed in 2017, was a watershed in this field: it lets models process sequences as fluidly as casual chat, yet underneath it is all linear algebra.

This ability to “seem to chat casually while actually performing matrix multiplications” gives AI powerful capabilities for reading and generating human language.
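To show what “actually performing matrix multiplications” means, here is a sketch of scaled dot-product attention, the core operation of the Transformer: softmax(Q Kᵀ / sqrt(d_k)) V. It uses plain Python lists for readability; the tiny three-token, two-dimensional matrices are arbitrary, and a real model adds learned projections, multiple attention heads, and many stacked layers.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m), both given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(m):
    return [list(row) for row in zip(*m)]

def attention(Q, K, V):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(K[0])
    scores = matmul(Q, transpose(K))  # similarity of every query with every key
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, V), weights  # each output row is a weighted mix of V's rows

# Three tokens, each a 2-dimensional vector (toy values); Q, K, V all come from X.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
output, weights = attention(X, X, X)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` sums to 1 and says how much each token “attends” to the others; everything else is multiplication and addition.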

Chatbots such as ChatGPT, and models such as GPT-4, are representatives of cognitive intelligence.

They can not only understand everyday conversation but also reason logically from context, even scoring above the human average on many knowledge quizzes.

This is attributed to reasoning abilities that “emerge” during training: once the parameter count and training corpus reach a certain scale, the model begins to show surprising signs of “thinking,” such as writing code, solving math problems, or combining several statements to draw a deduction.

Some researchers excitedly called this the “sparks of AGI” (artificial general intelligence).

Of course, large language models also have obvious limitations, the best known being “hallucination”: the model confidently fabricates answers that sound reasonable but are actually wrong.

The root cause is that the reasoning process lacks perceptual anchors in the real world, like a stagnant pool cut off from the river, babbling in a semantic quagmire.

One reason GPT-4 sometimes confidently states falsehoods is that it has no experience of the physical world (no “vestibular system” or “IMU” sense).

As one joke has it, GPT-4 can recite the lines and plot of a popular TV series and make them sound plausible, yet still completely violate common sense, because it has no real-world calibration.

To reduce such problems, researchers are connecting cognitive models to more input modalities.

Multimodal large models are a recent hotspot: for example, OpenAI enabled GPT-4 to accept image inputs, so it can answer questions about pictures and even explain popular internet memes.

Models such as BLIP-2 and Microsoft’s Kosmos-1 combine visual features with language models, letting them analyze images while conversing in natural language.

“Multimodal unified representation” means the model’s brain is no longer text-only; it can also “look at pictures and talk.”

Once image understanding is integrated into the language core, AI’s cognition will come closer to the way humans perceive the world as a whole.

In the future, large models will not only read books and newspapers but also look at images and listen to audio, truly “understanding” the stories the world tells them.

How does AI make decisions?

Through reinforcement learning, it learns by trial and error, much as humans do.

Decision intelligence is the third level of the AI skill tree: enabling machines to plan actions, weigh pros and cons, and make optimal choices in uncertain environments.

Reinforcement learning (RL) is an important branch of decision intelligence.

It lets agents improve their strategies through continual trial and error so as to maximize cumulative reward.

Through a cycle of “try, fail, improve,” AI learns to choose the actions that yield the greatest benefit in complex situations.
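The “try, fail, improve” loop can be sketched with tabular Q-learning, one of the classic RL algorithms, on a toy five-cell corridor where only the rightmost cell pays a reward. The environment, learning rate, discount factor, and exploration rate are all illustrative assumptions; AlphaGo itself relied on deep neural networks and tree search rather than a lookup table like this.

```python
import random

N_STATES = 5          # corridor cells 0..4; reaching cell 4 ends the episode
ACTIONS = (-1, +1)    # index 0 = step left, index 1 = step right

def step(state, action):
    """Toy environment: move, stay inside the corridor, reward 1.0 only at the goal."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done

def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action] = estimated future reward
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Explore with probability epsilon (or on ties); otherwise exploit the best estimate
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            nxt, reward, done = step(state, ACTIONS[a])
            # Try -> observe the error between target and estimate -> improve the estimate
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][a] += alpha * (target - q[state][a])
            state = nxt
    return q

q = q_learning()
policy = ["right" if q[s][1] > q[s][0] else "left" for s in range(N_STATES - 1)]
print(policy)
```

Nobody tells the agent that “right” is correct; the reward at the goal propagates backward through the table until every cell prefers moving right. The discount factor `gamma` is what makes shorter paths to the reward worth more.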

The Go-playing AI AlphaGo is a classic example of reinforcement learning combined with tree search.

In 2016, DeepMind’s AlphaGo defeated world champion Lee Sedol 4:1, stunning the world; many experts had believed machines would need at least another decade to master Go.

AlphaGo first learned from games played by human experts, then quickly surpassed human experience through self-play reinforcement learning.

Combining deep neural networks with Monte Carlo tree search, this decision-making AI showed stunning creativity: in the second game against Lee Sedol, it played Move 37, a move so unconventional that it left professional players astounded.

AlphaGo’s success marked a leap in AI’s capacity for complex decision-making.

Even more impressive is its successor, AlphaZero.

Unveiled at the end of 2017, AlphaZero’s brilliance lay in using no human game records at all: it trained from scratch through self-play and, within hours, surpassed knowledge that humans had accumulated over centuries!

For example, after just 4 hours of chess training it outperformed Stockfish, then the strongest chess program, and within 24 hours of training it had also decisively beaten the world-champion programs in shogi and Go.

AlphaZero demonstrated the power of general-purpose reinforcement learning and self-play: without any human strategic guidance, it could work out extremely strong decision strategies on its own.

You could say it graduated from “human teachers” and became its own teacher.

This self-evolution of decision intelligence suggests that AI can reach, or even surpass, human level in a wider range of fields.

Beyond board games, decision-making AI has many real-world applications.

Food delivery platforms use intelligent dispatch algorithms to plan riders’ routes and maximize delivery efficiency.

Drone swarms divide labor autonomously through multi-agent cooperative RL, achieving efficient coverage in disaster relief or logistics.

When multiple AI agents must cooperate or compete, multi-agent decision-making problems arise.

With appropriate reward mechanisms, groups of machines can develop emergent, coordinated behaviors that accomplish tasks no single agent could.

In recent years, large language models have also been combined with decision intelligence, giving rise to the new idea of “LLM as Agent.”

Put simply, it lets language models not only converse but also plan and execute tasks autonomously.

For example, systems like AutoGPT let GPT models break a complex goal into multiple steps, draft an action plan in natural language, and then call tools (such as search or code execution) to complete the task step by step.

The model also reflects on the results and adjusts (some call this the “Reflexion” mechanism), gradually approaching the goal.

An LLM agent is like installing an “executive function” in the language model’s brain, letting it cycle through generating ideas, taking action, observing results, and adjusting again.
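The generate, act, observe, adjust cycle can be sketched as a plain loop. In this minimal sketch the “planner” is a hard-coded function standing in for an LLM call, and the single calculator tool is a toy; all names here are hypothetical and do not correspond to AutoGPT’s or any other framework’s actual API.

```python
import operator

# Toy tool: evaluates "a op b" for + - * / (avoids eval() for safety).
OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}

def calculator(expression: str) -> str:
    a, op, b = expression.split()
    return format(OPS[op](float(a), float(b)), "g")

TOOLS = {"calculator": calculator}

def scripted_planner(goal, history):
    """Hypothetical stand-in for an LLM: picks the next action from goal + observations."""
    if not history:
        return ("calculator", "12 * 7")                   # first step of the plan
    if len(history) == 1:
        return ("calculator", f"{history[-1][2]} + 16")  # builds on the last observation
    return ("finish", history[-1][2])                     # goal reached

def run_agent(goal, planner, tools, max_steps=5):
    history = []  # (tool, input, observation) triples the planner can reflect on
    for _ in range(max_steps):
        tool, arg = planner(goal, history)        # think / plan
        if tool == "finish":
            return arg, history
        observation = tools[tool](arg)            # act
        history.append((tool, arg, observation))  # observe, then loop around to adjust
    return None, history                          # give up after max_steps

answer, trace = run_agent("What is 12 * 7 + 16?", scripted_planner, TOOLS)
print(answer, trace)
```

The `max_steps` cap is a deliberate design choice: an autonomous loop needs a stopping rule so that a confused planner cannot run forever.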

This gives AI a degree of autonomy in tackling open-ended problems.

Such attempts to make models act on their own foreshadow a deeper integration of decision intelligence and cognitive intelligence.

Of course, decision intelligence can sometimes be nerve-wracking.

Generative AI often has to sample from a probability distribution when making decisions, like opening a mystery box: the same strategy with added randomness (a higher “temperature” parameter) might bring surprising creativity or lead to disastrous choices.
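The “temperature” knob can be shown directly: the model’s raw scores (logits) are divided by the temperature before the softmax, so low temperatures sharpen the distribution toward the top choice and high temperatures flatten it. The three candidate actions and their scores below are invented for illustration.

```python
import math
import random

def softmax_with_temperature(logits, temperature):
    """Divide scores by the temperature, then normalize them into probabilities."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample(options, logits, temperature, rng):
    """Draw one option at random according to the temperature-adjusted probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(options, weights=probs, k=1)[0]

options = ["go around", "stop and wait", "push through"]
logits = [2.0, 1.0, -1.0]  # invented scores: "go around" is the favorite

for t in (0.2, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])

rng = random.Random(42)
print(sample(options, logits, 1.0, rng))
```

At low temperature the favorite is chosen almost every time; at high temperature the risky “push through” gets a real chance, which is exactly the creativity-versus-disaster trade-off described above.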

Balancing exploration and stability is a perennial topic for decision-making AI, just as human decision-makers fear both excessive risk and clinging to the status quo.

Still, as reinforcement learning algorithms and safety measures mature, we hope AI decisions can be both “bold in hypothesis” and “careful in verification,” choosing solutions that are both ingenious and sound.

What is the ultimate test for AI?

Acting in the real world!

Embodied intelligence is the final level of the AI skill tree: letting AI truly “grow muscles and flesh” and perform tasks in the physical world.

It closes the perception-cognition-decision-action loop in reality: AI not only thinks with its brain but also drives mechanical bodies to complete tasks.

After all, no matter how smart an algorithm is in a simulated world, once it steps onto real ground, endless unknowns and uncertainties await it.

Over the past two years, major tech companies have been pushing toward “AI + robotics.”

At the Huawei Developer Conference in June 2024, a humanoid robot powered by the Pangu large model made its debut and drew wide attention.

According to reports, Huawei Cloud’s Pangu embodied-intelligence large model can already enable robots to plan complex tasks of more than ten steps, generalizing across scenarios and handling multiple tasks during execution.

Even more impressive, it can automatically generate training videos for robots, teaching them how to act in different environments.

Beyond the humanoid robot “Kua Fu,” the Pangu model can also power various industrial and service robots, letting them replace humans in dangerous or laborious work.

Imagine that in the near future our homes may have versatile robot butlers that do laundry, cook, and provide care, just as Huawei Cloud’s CEO envisioned at the conference: “Let AI robots help us with laundry, cooking, and cleaning, so we have more time to read, write poetry, and paint.”

Internationally, Google DeepMind also released its Gemini Robotics models this year.

They are described as a vision-language-action (VLA) model series that lets robots understand spatial semantics and perform complex actions.

In simple terms, it equips AI with “eyes” and “limbs.”

As commentators put it, “Models need bodies, robots need brains,” and Gemini Robotics aims to bridge the two.

It reportedly includes a foundational embodied reasoning model (Gemini Robotics-ER) and a decision layer on top that can directly output specific action sequences.

For example, it can analyze camera images, understand the spatial relationships among objects in the scene, and then generate instructions such as “rotate the robot arm’s joints in sequence by X degrees, and apply Y force with the fingers to grasp.”

These instructions act like motion scripts that robots follow step by step to complete tasks.

Some commentators joke that this is like giving robots a “director + choreographer,” letting AI not only decide what to do next but also tell the robot exactly how to perform each step.

Google’s earlier releases, PaLM-E and RT-2 (Robotics Transformer 2), explored similar ideas.

PaLM-E integrates a very large language model with visual inputs, processing multimodal data from robot sensors and outputting high-level instructions in text form to control robots.

For instance, in experiments PaLM-E can take an input like “[kitchen scene] … get me an apple,” and the model generates steps like “walk to the table -> pick up the red apple -> walk back to the human -> hand over the apple.”

RT-2 goes further by discretizing actions into tokens embedded in the model’s output, so robots can read and execute action instructions the way they read sentences.

This solves the problem that language models are good at outputting text but not joint-control signals: as long as mechanical actions are encoded into a “vocabulary” and the model learns to “speak” these special “words,” the robot effectively gains a brain language it can understand.
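Encoding actions as a “vocabulary” can be sketched as uniform binning: each continuous action dimension is clipped to a range and mapped to one of a fixed number of integer bins, which a model can emit as tokens and a controller can decode back to the bin center. The 256-bin count follows what the RT-1 and RT-2 papers describe as far as we know, while the 3-D displacement action and its ±0.1 m range are assumptions for illustration; this is not Google’s code.

```python
N_BINS = 256  # assumption: 256 uniform bins per action dimension

def to_token(value, low, high, n_bins=N_BINS):
    """Map a continuous value in [low, high] to an integer bin index (the 'word')."""
    value = min(max(value, low), high)              # clip out-of-range commands
    idx = int((value - low) / (high - low) * n_bins)
    return min(idx, n_bins - 1)                     # value == high falls into the last bin

def from_token(idx, low, high, n_bins=N_BINS):
    """Decode a bin index back to the center of its bin."""
    width = (high - low) / n_bins
    return low + (idx + 0.5) * width

# Hypothetical end-effector displacement (x, y, z) in metres, each within [-0.1, 0.1].
action = [0.05, -0.02, 0.0]
tokens = [to_token(a, -0.1, 0.1) for a in action]
decoded = [from_token(t, -0.1, 0.1) for t in tokens]
print(tokens, [round(d, 5) for d in decoded])
```

The price of this trick is resolution: with 256 bins over 0.2 m, each token stands for a slice about 0.8 mm wide, so decoding returns the bin center rather than the exact original value.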

With models trained jointly as “brain + limbs,” robots can perceive, understand, and act in a closed loop in the real world.

Google’s experiments show that after training, RT-2 can act sensibly on web knowledge even when facing new objects or requests: for example, when told “get me something that can be used as a hammer,” the robot picks a rock from objects it has never seen, because it infers that a rock fits the concept of an “improvised hammer.”

This is a concrete example of cross-modal transfer and multi-step reasoning in real robots.

It’s worth mentioning that the embodied intelligence field is beginning to focus on the idea of a “simulated vestibular system.”

That is, adding modules similar to a sense of balance to AI models so they know their own state of motion.

Researchers are trying to feed IMU data, together with robot actions, into large models so that the models account for physical stability when generating motion plans.

If ordinary large models produce “hallucinatory” decisions because they lack real-world perception, models connected to motion sensors are like having a human vestibular system, which reduces unrealistic decisions.

In the future, wearable smart devices may combine with large models to form something like a “SensorGPT”: using six-axis accelerometers and gyroscopes on the wrist to understand your movements in real time, then generating voice or text prompts to guide your behavior, or even interactive storylines.
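The first stage of such a hypothetical “SensorGPT” pipeline, turning raw wrist accelerometer readings into a text description a language model could consume, might look like the sketch below. The spread threshold, the synthetic readings, and the prompt wording are all assumptions; a real system would use trained activity-recognition models and the gyroscope channels as well.

```python
import math
import statistics

def magnitude(sample):
    """Length of one (ax, ay, az) accelerometer reading, in m/s^2."""
    ax, ay, az = sample
    return math.sqrt(ax * ax + ay * ay + az * az)

def classify_activity(window, threshold=1.0):
    """Hypothetical rule: a large spread in acceleration magnitude means the wearer is moving."""
    spread = statistics.pstdev([magnitude(s) for s in window])
    return "moving" if spread > threshold else "still"

def make_prompt(label):
    """Hypothetical prompt text that would be passed on to a language model."""
    return f"The wearer is currently {label}. Suggest one short, encouraging tip."

# Synthetic 50-sample windows: a resting wrist vs. a rhythmic arm swing.
resting = [(0.0, 0.0, 9.81)] * 50
swinging = [(0.0, 0.0, 9.81 + 3.0 * math.sin(i / 2)) for i in range(50)]
print(make_prompt(classify_activity(resting)))
print(make_prompt(classify_activity(swinging)))
```

Keeping the sensor side as a small, inspectable rule and handing only a compact text summary to the language model is one possible division of labor; it keeps raw sensor streams out of the prompt.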

The idea is thrilling: AI would exist not only in the cloud and on screens but would truly become an extension of our bodies.

It’s foreseeable that in the era of embodied intelligence, AI will appear in many forms in the real world: perhaps a gentle, meticulous humanoid robot assistant at home, or a team of sturdy, tireless quadruped robot workers on factory floors.

They may look different but could share the same “brain”: a general intelligence model in the cloud that issues commands according to each body’s capabilities, so that every robot can do its job.

From “seeing” to “moving steadily,” as AI climbs to the level of embodied intelligence, we seem to glimpse the robots of science fiction stepping into reality.

Looking back over the four levels of “seeing, thinking, calculating, moving,” each leap in AI capability builds on the perception and data accumulated at the previous level, with horizontal expansion of data triggering vertical breakthroughs.

At first, AI groped through a “sea of data” made of pixels and waveforms, gradually learning to perceive labels.

Then, the flood of text from the entire web converged into a “river of semantics,” carrying AI to the far shore of cognitive intelligence.

Further up, countless simulated experiments and game experiences formed a “lake of strategies,” nurturing agents like AlphaGo that make decisions with ease.

Finally, these capabilities converge into a “tsunami of action” in the real world, letting robots genuinely affect the physical environment.

As the levels rise, data becomes coarser-grained and more abstract, but the feedback loops grow many times deeper: AI must pass through multiple closed loops of perception, cognition, decision, and action to verify and adjust itself in reality.

When the four powers are truly integrated, AI may undergo a qualitative change akin to a “phase transition.”

“Multimodal unified representation + feedback loops” is considered a key precursor to higher forms of intelligence.

When machines have a full set of senses, integrate information from different sources into a unified knowledge space, and continually correct their strategies through closed-loop experiments, they may gain unprecedented abilities to learn and adapt.

Many experts speculate that true artificial general intelligence (AGI) may be born from such multimodal, multi-loop coupled systems.

As one industry insider put it: large models and robotics are naturally “paired” next-generation technology waves; models give robots intelligence, robots give models physicality, and together they can give birth to new miracles.

As we climb the “four-power ladder,” each level brings new risks and challenges.

To ensure AI develops safely and healthily, governance must keep pace.

We can map potential hazards and regulatory approaches onto each of the four capabilities:

- Perception: Machines may inherit data biases (e.g., lower recognition rates for minority groups in image recognition) or pose privacy-leak risks. Strengthening data governance is essential to ensure that training data is diverse, fair, and compliant with privacy regulations. In practice, many countries have enacted personal information protection laws that require preventing data abuse in applications such as facial recognition.

- Cognition: Hallucinations and misinformation from large models may mislead the public and cause ethical controversies. Model evaluation and regulation are therefore indispensable. For example, some regions have issued administrative measures for generative AI services that require security assessments and filings before models go online; others are discussing rigorous testing and third-party audits for high-risk AI systems.

- Decision: AI decision-making is often a black box that lacks interpretability, which may lead to unfairness or safety hazards (e.g., why did an autonomous car suddenly brake? On what basis did an algorithm recommend layoffs?). Requirements for interpretable, transparent decisions need to be promoted. Some regulatory proposals place high-risk decision-making AI under strict oversight, requiring explanations and human supervision. Academia is also researching explainable AI techniques that let models explain their reasoning in ways humans can understand.

- Embodiment: When robots enter the real world, the biggest concern is physical loss of control causing accidents. Whether it is an autonomous vehicle misjudging a situation and hitting someone or a factory robot arm going out of control and injuring workers, the consequences can be severe. Physical safety and emergency mechanisms must therefore be strictly controlled: for example, fitting every autonomous robot with a red emergency-stop button, setting safety standards that robots must meet before leaving the factory, and strengthening external monitoring of AI behavior. Some countries are also drafting ethical guidelines for robots, discussing behavioral codes like Asimov’s “Three Laws” to keep human-machine conflicts from ending in tragedy as far as possible.

From data to models, to decisions, and finally to physical entities, AI governance requires a full-chain, multi-level framework.

Global regulation is moving in this direction too: some regions have drafted legislation that sorts AI into four risk-based categories, while others are moving quickly on data security, algorithm registration, and rules for generative AI.

In the future, we may see more refined tiered regulatory systems that both protect innovation from unnecessary constraints and install safety valves for risks at each level.

Standing at this point in time, we have watched artificial intelligence climb, step by step, the four levels of “seeing, thinking, calculating, moving.”

Each leap was once a hard problem that researchers worked on day and night, yet today they are woven into every aspect of daily life: phones can recognize cats, speakers can chat, navigation apps can plan routes, and factory robot arms have started working on their own.

The evolution of AI is like a gripping series, and we are part of it.

So, what is the next level?

If one day your robot vacuum suddenly looks up to question gravity or discuss existential philosophy with you, it may be climbing to a fifth level and setting out on a new journey.

By then, artificial intelligence may no longer be confined to any one of perception, cognition, decision-making, or action, but may exhibit some new “form of intelligence” we can hardly imagine.

Dear readers, here is a question for you: at which of these four levels would you most like to see new horizontal data introduced to catalyze the next vertical leap?

In other words, if you could add a catalyst to AI’s evolution, which level would you choose: perception, cognition, decision-making, or embodiment?

Feel free to use your imagination and share your creative ideas in the comments.

Perhaps the next viral innovation is hidden in your whimsical thoughts.

Let’s wait and see, and witness AI continue its magnificent ascent!