It’s exciting to see the GenAI industry beginning to move towards standardisation. We might be witnessing something similar to the early days of the Internet, when HTTP (HyperText Transfer Protocol) first emerged. When Tim Berners-Lee developed HTTP in 1990, it provided a simple yet extensible protocol that transformed the Internet from a specialised research network into the globally accessible World Wide Web. By 1993, web browsers like Mosaic had made HTTP so popular that web traffic quickly outpaced other systems.

One promising step in this direction is MCP (Model Context Protocol), developed by Anthropic. MCP is gaining popularity with its attempts to standardise the interactions between LLMs and external tools or data sources. More recently (the first commit is dated April 2025), a new protocol called ACP (Agent Communication Protocol) appeared. It complements MCP by defining the ways in which agents can communicate with each other.

In this article, I would like to discuss what ACP is, why it can be helpful and how it can be used in practice. We will build a multi-agent AI system for interacting with data.

ACP overview

Before jumping into practice, let’s take a moment to understand the theory behind ACP and how it works under the hood.

ACP (Agent Communication Protocol) is an open protocol designed to address the growing challenge of connecting AI agents, applications, and humans. The current GenAI industry is quite fragmented, with different teams building agents in isolation using various, often incompatible frameworks and technologies. This fragmentation slows down innovation and makes it difficult for agents to collaborate effectively.

To address this challenge, ACP aims to standardise communication between agents via RESTful APIs. The protocol is framework- and technology-agnostic, meaning it can be used with any agentic framework, such as LangChain, CrewAI, smolagents, or others. This flexibility makes it easier to build interoperable systems where agents can seamlessly work together, regardless of how they were originally developed.
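Because ACP is plain REST, an agent can in principle be called with nothing more than an HTTP client. The sketch below builds the JSON body of a synchronous run request using only the standard library. The `/runs` path and the `agent_name`, `input`, `parts`, `content_type` and `mode` field names reflect my reading of the ACP specification, so treat them as assumptions and check the current spec before relying on them. `build_run_request` and the `echo` agent name are hypothetical and exist only for illustration.

```python
import json


def build_run_request(agent_name: str, text: str, mode: str = "sync") -> dict:
    """Build the JSON body for an ACP run request (field names are assumptions)."""
    return {
        "agent_name": agent_name,
        "input": [
            # One message made of a single plain-text part
            {"parts": [{"content": text, "content_type": "text/plain"}]}
        ],
        "mode": mode,
    }


if __name__ == "__main__":
    body = build_run_request("echo", "Hello, agent!")
    # This body could be sent with POST <server>/runs by any HTTP client.
    print(json.dumps(body, indent=2))
```

In the rest of the article we will not build requests by hand: the ACP SDK client wraps these calls for us.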

This protocol has been developed as an open standard under the Linux Foundation, alongside BeeAI (its reference implementation). One of the key points the team emphasises is that ACP is openly governed and shaped by the community, rather than a group of vendors.

What benefits can ACP bring?

- Easily replaceable agents. With the current pace of innovation in the GenAI space, new cutting-edge technologies are emerging all the time. ACP enables agents to be swapped in production seamlessly, reducing maintenance costs and making it easier to adopt the most advanced tools when they become available.

- Enabling collaboration between multiple agents built on different frameworks. As we know from people management, specialisation often leads to better results. The same applies to agentic systems. A group of agents, each focused on a specific task (like writing Python code or researching the web), can often outperform a single agent trying to do everything. ACP makes it possible for such specialised agents to communicate and work together, even if they’re built using different frameworks or technologies.

- New opportunities for partnerships. With a unified standard for agents’ communication, it would be easier for agents to collaborate, sparking new partnerships between different teams within a company or even between different companies. Imagine a world where your smart home agent notices the temperature dropping unusually, determines that the heating system has failed, and checks with your utility provider’s agent to confirm there are no planned outages. Finally, it books a technician, coordinating the visit with your Google Calendar agent to make sure you’re home. It may sound futuristic, but with ACP, it can be pretty close.

We’ve covered what ACP is and why it matters. The protocol looks quite promising. So, let’s put it to the test and see how it works in practice.

ACP in practice

Let’s try ACP in a classic “talk to data” use case. To take advantage of ACP being framework-agnostic, we will build ACP agents with different frameworks:

- SQL Agent with CrewAI to compose SQL queries

- DB Agent with HuggingFace smolagents to execute these queries.

I won’t go into the details of each framework here, but if you’re curious, I’ve written in-depth articles on both of them:

– “Multi AI Agent Systems 101” about CrewAI,

– “Code Agents: The Future of Agentic AI” about smolagents.

Building the DB agent

Let’s start with the DB agent. As I mentioned earlier, ACP can be complemented by MCP. So, I will use tools from my analytics toolbox through the MCP server. You can find the MCP server implementation on GitHub. For a deeper dive and step-by-step instructions, check my previous article, where I covered MCP in detail.

The code itself is pretty straightforward: we initialise the ACP server and use the @server.agent() decorator to define our agent function. This function expects a list of messages as input and returns a generator.

from collections.abc import AsyncGenerator
from acp_sdk.models import Message, MessagePart
from acp_sdk.server import Context, RunYield, RunYieldResume, Server
from smolagents import LiteLLMModel, ToolCallingAgent, ToolCollection
import logging
from dotenv import load_dotenv
from mcp import StdioServerParameters

load_dotenv()

# initialise ACP server
server = Server()

# initialise LLM
model = LiteLLMModel(
    model_id="openai/gpt-4o-mini",
    max_tokens=2048
)

# define config for MCP server to connect
server_parameters = StdioServerParameters(
    command="uv",
    args=[
        "--directory",
        "/Users/marie/Documents/github/mcp-analyst-toolkit/src/mcp_server",
        "run",
        "server.py"
    ],
    env=None
)

@server.agent()
async def db_agent(input: list[Message], context: Context) -> AsyncGenerator[RunYield, RunYieldResume]:
    "This is a CodeAgent that can execute SQL queries against the ClickHouse database."
    # load the MCP tools and hand them to a smolagents tool-calling agent
    with ToolCollection.from_mcp(server_parameters, trust_remote_code=True) as tool_collection:
        agent = ToolCallingAgent(tools=[*tool_collection.tools], model=model)
        # the request text is the first part of the first message
        question = input[0].parts[0].content
        response = agent.run(question)

    yield Message(parts=[MessagePart(content=str(response))])

if __name__ == "__main__":
    server.run(port=8001)

We will also need to set up a Python environment. I will be using the uv package manager for this.

uv init --name acp-sql-agent
uv venv
source .venv/bin/activate
uv add acp-sdk "smolagents[litellm]" python-dotenv mcp "smolagents[mcp]" ipykernel

Then, we can run the agent using the following command.

uv run db_agent.py

If everything is set up correctly, you will see a server running on port 8001. We will need an ACP client to verify that it’s working as expected. Bear with me, we will test it shortly.
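Until the client is in place, a quick sanity check is to confirm that something is listening on the port. The sketch below uses only the standard library. `is_port_open` is a hypothetical helper, and a successful TCP connection only shows that a process accepts connections on that port, not that the agent itself behaves correctly.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts
        return False


if __name__ == "__main__":
    # 8001 is the port passed to server.run() in db_agent.py
    print("DB agent reachable:", is_port_open("localhost", 8001))
```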

Building the SQL agent

Before that, let’s build a SQL agent that will compose queries. We will use the CrewAI framework for this. Our agent will reference a knowledge base of questions and queries to generate answers. So, we will equip it with a RAG (Retrieval Augmented Generation) tool.
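A RAG tool typically splits reference documents into overlapping chunks before embedding them, and the configuration later in this section sets chunk_size=1200 and chunk_overlap=200. To make those two parameters concrete, here is a minimal character-based sketch of splitting with overlap. It is an illustration only: `split_with_overlap` is a hypothetical helper, and the splitter actually used inside crewai_tools may behave differently (for example, by respecting separators).

```python
def split_with_overlap(text: str, chunk_size: int, chunk_overlap: int) -> list[str]:
    """Split text into chunks of at most chunk_size characters, where each
    chunk starts with the last chunk_overlap characters of the previous one."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be in [0, chunk_size)")
    step = chunk_size - chunk_overlap  # how far the window moves each time
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the current chunk already reaches the end of the text
    return chunks


if __name__ == "__main__":
    sample = "abcdefghijklmnopqrstuvwxyz"
    for chunk in split_with_overlap(sample, chunk_size=10, chunk_overlap=3):
        print(chunk)
```

The overlap means a question-and-query pair that straddles a chunk boundary still appears whole in at least one chunk, provided it is shorter than the overlap.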

First, we will initialise the RAG tool and load the reference file clickhouse_queries.txt. Next, we will create a CrewAI agent by specifying its role, goal and backstory. Finally, we’ll create a task and bundle everything together into a Crew object.

from crewai import Crew, Task, Agent, LLM
from crewai.tools import BaseTool
from crewai_tools import RagTool
from collections.abc import AsyncGenerator
from acp_sdk.models import Message, MessagePart
from acp_sdk.server import RunYield, RunYieldResume, Server
import json
import os
from datetime import datetime
from typing import Type
from pydantic import BaseModel, Field
import nest_asyncio

nest_asyncio.apply()

# config for RAG tool
config = {
    "llm": {
        "provider": "openai",
        "config": {
            "model": "gpt-4o-mini",
        }
    },
    "embedding_model": {
        "provider": "openai",
        "config": {
            "model": "text-embedding-ada-002"
        }
    }
}

# initialise tool
rag_tool = RagTool(
    config=config,
    chunk_size=1200,
    chunk_overlap=200)

rag_tool.add("clickhouse_queries.txt")

# initialise ACP server
server = Server()

# initialise LLM
llm = LLM(model="openai/gpt-4o-mini", max_tokens=2048)

@server.agent()
async def sql_agent(input: list[Message]) -> AsyncGenerator[RunYield, RunYieldResume]:
    "This agent knows the database schema and can return SQL queries to answer questions about the data."

    # create agent
    sql_agent = Agent(
        role="Senior SQL analyst",
        goal="Write SQL queries to answer questions about the e-commerce analytics database.",
        backstory="""
        You are an expert in ClickHouse SQL queries with over 10 years of experience. You are familiar with the e-commerce analytics database schema and can write optimized queries to extract insights.

        ## Database Schema

        You are working with an e-commerce analytics database containing the following tables:

        ### Table: ecommerce.users
        **Description:** Customer information for the online shop
        **Primary Key:** user_id
        **Fields:**
        - user_id (Int64) - Unique customer identifier (e.g., 1000004, 3000004)
        - country (String) - Customer's country of residence (e.g., "Netherlands", "United Kingdom")
        - is_active (Int8) - Customer status: 1 = active, 0 = inactive
        - age (Int32) - Customer age in full years (e.g., 31, 72)

        ### Table: ecommerce.sessions
        **Description:** User session data and transaction records
        **Primary Key:** session_id
        **Foreign Key:** user_id (references ecommerce.users.user_id)
        **Fields:**
        - user_id (Int64) - Customer identifier linking to users table (e.g., 1000004, 3000004)
        - session_id (Int64) - Unique session identifier (e.g., 106, 1023)
        - action_date (Date) - Session start date (e.g., "2021-01-03", "2024-12-02")
        - session_duration (Int32) - Session duration in seconds (e.g., 125, 49)
        - os (String) - Operating system used (e.g., "Windows", "Android", "iOS", "MacOS")
        - browser (String) - Browser used (e.g., "Chrome", "Safari", "Firefox", "Edge")
        - is_fraud (Int8) - Fraud indicator: 1 = fraudulent session, 0 = legitimate
        - revenue (Float64) - Purchase amount in USD (0.0 for non-purchase sessions, >0 for purchases)

        ## ClickHouse-Specific Guidelines

        1. **Use ClickHouse-optimized functions:**
           - uniqExact() for precise unique counts
           - uniqExactIf() for conditional unique counts
           - quantile() functions for percentiles
           - Date functions: toStartOfMonth(), toStartOfYear(), today()

        2. **Query formatting requirements:**
           - Always end queries with "format TabSeparatedWithNames"
           - Use meaningful column aliases
           - Use proper JOIN syntax when combining tables
           - Wrap date literals in quotes (e.g., '2024-01-01')

        3. **Performance considerations:**
           - Use appropriate WHERE clauses to filter data
           - Consider using HAVING for post-aggregation filtering
           - Use LIMIT when finding top/bottom results

        4. **Data interpretation:**
           - revenue > 0 indicates a purchase session
           - revenue = 0 indicates a browsing session without purchase
           - is_fraud = 1 sessions should typically be excluded from business metrics unless specifically analyzing fraud

        ## Response Format
        Provide only the SQL query as your answer. Include brief reasoning in comments if the query logic is complex.
        """,
        verbose=True,
        allow_delegation=False,
        llm=llm,
        tools=[rag_tool],
        max_retry_limit=5
    )

    # create task
    task1 = Task(
        description=input[0].parts[0].content,
        expected_output="Reliable SQL query that answers the question based on the e-commerce analytics database schema.",
        agent=sql_agent
    )

    # create crew
    crew = Crew(agents=[sql_agent], tasks=[task1], verbose=True)

    # execute agent
    task_output = await crew.kickoff_async()
    yield Message(parts=[MessagePart(content=str(task_output))])

if __name__ == "__main__":
    server.run(port=8002)

We will also need to add any missing packages to uv before running the server.

uv add crewai crewai_tools nest-asyncio
uv run sql_agent.py

Now, the second agent is running on port 8002. With both servers up and running, it’s time to check whether they’re working properly.

Calling an ACP agent with a client

Now that we’re ready to test our agents, we’ll use the ACP client to run them synchronously. For that, we need to initialise a Client with the server URL and use the run_sync function, specifying the agent’s name and input.

import os
import nest_asyncio
nest_asyncio.apply()
from acp_sdk.client import Client
import asyncio

# Set your OpenAI API key here (or use environment variable)
# os.environ["OPENAI_API_KEY"] = "your-api-key-here"

async def example() -> None:
    async with Client(base_url="http://localhost:8001") as client1:
        run1 = await client1.run_sync(
            agent="db_agent", input="select 1 as test"
        )
        print(' DB agent response:')
        print(run1.output[0].parts[0].content)

    async with Client(base_url="http://localhost:8002") as client2:
        run2 = await client2.run_sync(
            agent="sql_agent", input="How many customers did we have in May 2024?"
        )
        print(' SQL agent response:')
        print(run2.output[0].parts[0].content)

if __name__ == "__main__":
    asyncio.run(example())

# DB agent response:
# 1
# SQL agent response:
# ```
# SELECT COUNT(DISTINCT user_id) AS total_customers
# FROM ecommerce.users
# WHERE is_active = 1
# AND user_id IN (
#     SELECT DISTINCT user_id
#     FROM ecommerce.sessions
#     WHERE action_date >= '2024-05-01' AND action_date < '2024-06-01'
# )
# format TabSeparatedWithNames

We got the expected results from both servers, so it looks like everything is working as intended.

💡Tip: You can check the full execution logs in the terminal where each server is running.

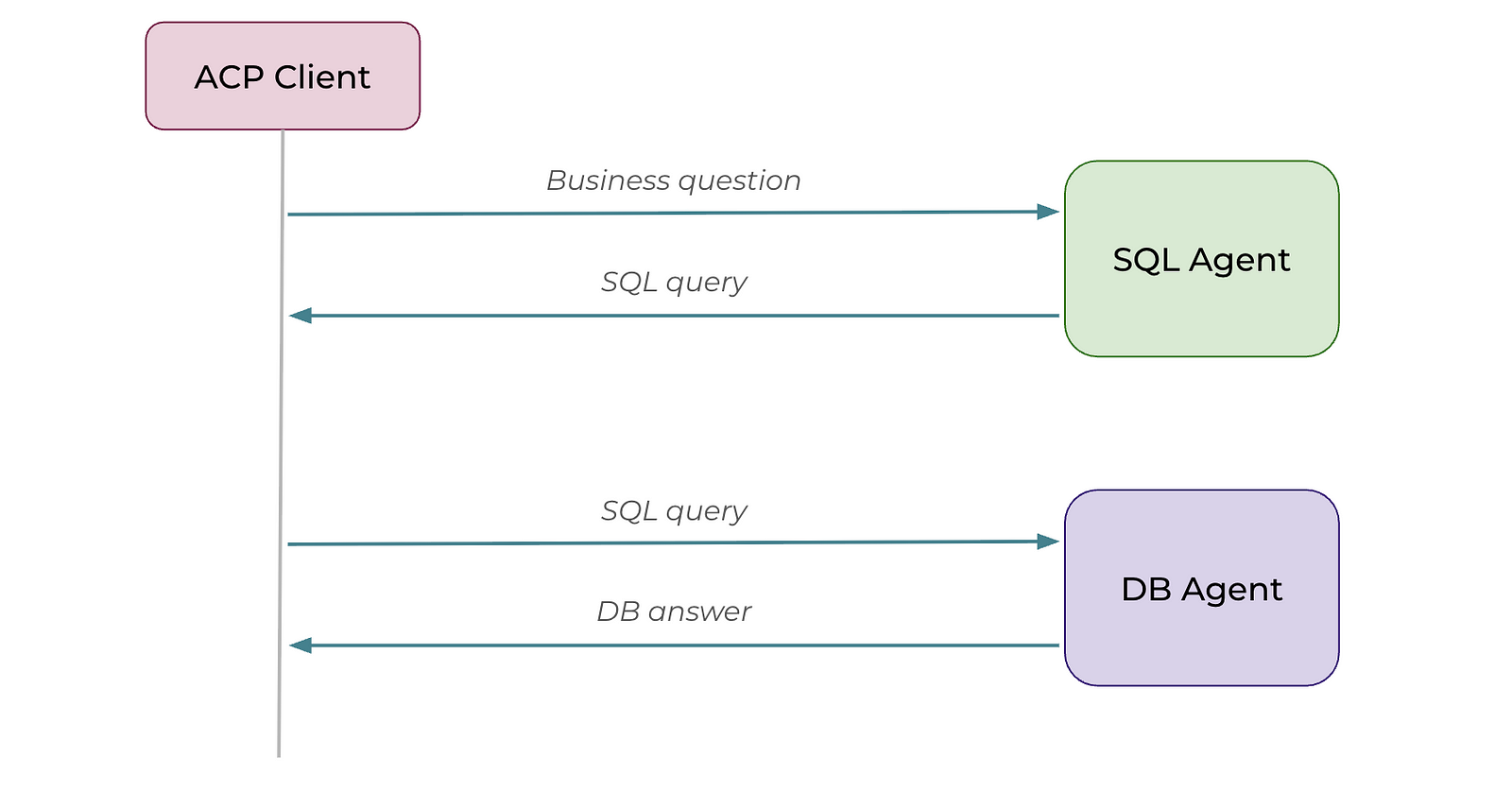

Chaining agents sequentially

To answer actual questions from customers, we need both agents to work together. Let’s chain them one after the other. So, we will first call the SQL agent and then pass the generated SQL query to the DB agent for execution.
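One practical detail when chaining: LLM output is not guaranteed to be a bare query. It may include reasoning text or a Markdown fence around the SQL. The DB agent in this article is itself LLM-based and can usually cope with that, but if the next step were a plain database client, the query would have to be extracted first. Below is a minimal sketch, assuming the query sits in the first fenced block of the response. `extract_sql` is a hypothetical helper, not part of ACP or of either framework.

```python
import re

FENCE = "`" * 3  # a Markdown code fence, built programmatically
# Fence, optional language tag (e.g. "sql"), newline, body, closing fence
FENCE_RE = re.compile(FENCE + r"[a-zA-Z]*\s*\n(.*?)" + FENCE, re.DOTALL)


def extract_sql(agent_output: str) -> str:
    """Return the body of the first fenced block, or the whole text if there is no fence."""
    match = FENCE_RE.search(agent_output)
    if match:
        return match.group(1).strip()
    return agent_output.strip()


if __name__ == "__main__":
    raw = f"Thought: count the users.\n{FENCE}sql\nSELECT 1 AS test\n{FENCE}"
    print(extract_sql(raw))
```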

Here’s the code to chain the agents. It’s quite similar to what we used earlier to test each server individually. The main difference is that we now pass the output from the SQL agent directly into the DB agent.

async def example() -> None:
    async with Client(base_url="http://localhost:8001") as db_agent, Client(base_url="http://localhost:8002") as sql_agent:
        question = 'How many customers did we have in May 2024?'
        # step 1: ask the SQL agent to compose a query
        sql_query = await sql_agent.run_sync(
            agent="sql_agent", input=question
        )
        print('SQL query generated by SQL agent:')
        print(sql_query.output[0].parts[0].content)

        # step 2: pass the generated query to the DB agent for execution
        answer = await db_agent.run_sync(
            agent="db_agent", input=sql_query.output[0].parts[0].content
        )
        print('Answer from DB agent:')
        print(answer.output[0].parts[0].content)

asyncio.run(example())

Everything worked smoothly, and we got the expected output.

SQL question generated by SQL agent:

Thought: I have to craft a SQL question to rely the variety of distinctive clients

who have been lively in Could 2024 primarily based on their periods.

```sql

SELECT COUNT(DISTINCT u.user_id) AS active_customers

FROM ecommerce.customers AS u

JOIN ecommerce.periods AS s ON u.user_id = s.user_id

WHERE u.is_active = 1

AND s.action_date >= '2024-05-01'

AND s.action_date < '2024-06-01'

FORMAT TabSeparatedWithNames

```

Reply from DB agent:

234544Router sample

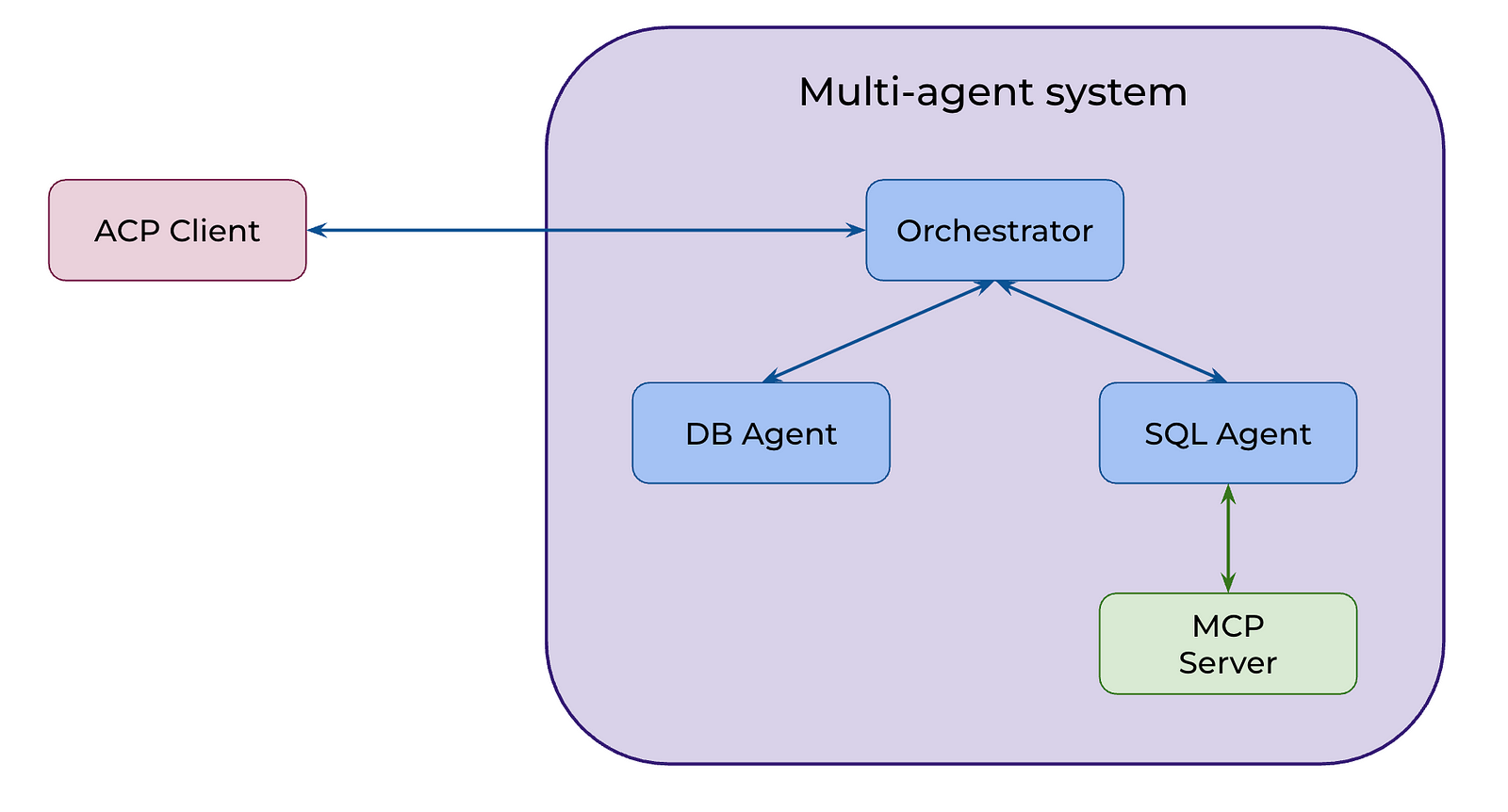

In some use cases, the path is static and well-defined, and we can chain agents directly as we did earlier. However, more often we expect LLM agents to reason independently and decide which tools or agents to use to achieve a goal. To solve for such cases, we will implement a router pattern using ACP. We will create a new agent (the orchestrator) that can delegate tasks to the DB and SQL agents.
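Before bringing in a framework, the shape of the pattern can be sketched in a few lines of plain Python. Everything here is a stand-in: the `fake_*` functions replace the remote agents, and `choose_agent` is a hard-coded rule where the real orchestrator would let an LLM decide. The sketch shows only the control flow of a router and does not reflect how BeeAI implements it.

```python
from typing import Callable


# Plain functions stand in for the remote agents (hypothetical placeholders)
def fake_sql_agent(question: str) -> str:
    return f"SELECT 1 /* query for: {question} */"


def fake_db_agent(query: str) -> str:
    return f"result of [{query}]"


AGENTS: dict[str, Callable[[str], str]] = {
    "sql_agent": fake_sql_agent,
    "db_agent": fake_db_agent,
}


def choose_agent(task: str) -> str:
    """Stand-in for the LLM's decision: raw SQL goes to the DB agent,
    anything else goes to the SQL agent first."""
    return "db_agent" if task.lstrip().upper().startswith("SELECT") else "sql_agent"


def route(task: str, max_steps: int = 5) -> str:
    """Keep delegating until the DB agent has produced a result."""
    for _ in range(max_steps):
        name = choose_agent(task)
        task = AGENTS[name](task)  # the output of one agent becomes the next task
        if name == "db_agent":
            return task
    raise RuntimeError("no result within max_steps")


if __name__ == "__main__":
    print(route("How many customers did we have in May 2024?"))
```

Unlike the fixed chain from the previous section, the order of calls is not written down anywhere: it emerges from the decision made at each step, which is what lets the same router handle both natural-language questions and ready-made queries.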

We will start by adding the reference implementation, beeai_framework, with the package manager.

uv add beeai_framework

To enable our orchestrator to call the SQL and DB agents, we will wrap them as tools. This way, the orchestrator can treat them like any other tool and invoke them when needed.

Let’s start with the SQL agent. It’s mainly boilerplate code: we define the input and output fields using Pydantic and then call the agent in the _run function.

from pydantic import BaseModel, Field
from acp_sdk import Message
from acp_sdk.client import Client
from acp_sdk.models import MessagePart
from beeai_framework.tools.tool import Tool
from beeai_framework.tools.types import ToolRunOptions
from beeai_framework.context import RunContext
from beeai_framework.emitter import Emitter
from beeai_framework.tools import ToolOutput
from beeai_framework.utils.strings import to_json

# helper function: call an agent on the SQL agent's ACP server
async def run_agent(agent: str, input: str) -> list[Message]:
    async with Client(base_url="http://localhost:8002") as client:
        run = await client.run_sync(
            agent=agent, input=[Message(parts=[MessagePart(content=input, content_type="text/plain")])]
        )
    return run.output

class SqlQueryToolInput(BaseModel):
    question: str = Field(description="The question to answer using SQL queries against the e-commerce analytics database")

class SqlQueryToolResult(BaseModel):
    sql_query: str = Field(description="The SQL query that answers the question")

class SqlQueryToolOutput(ToolOutput):
    result: SqlQueryToolResult = Field(description="SQL query result")

    def get_text_content(self) -> str:
        return to_json(self.result)

    def is_empty(self) -> bool:
        return self.result.sql_query.strip() == ""

    def __init__(self, result: SqlQueryToolResult) -> None:
        super().__init__()
        self.result = result

class SqlQueryTool(Tool[SqlQueryToolInput, ToolRunOptions, SqlQueryToolOutput]):
    name = "SQL Query Generator"
    description = "Generate SQL queries to answer questions about the e-commerce analytics database"
    input_schema = SqlQueryToolInput

    def _create_emitter(self) -> Emitter:
        return Emitter.root().child(
            namespace=["tool", "sql_query"],
            creator=self,
        )

    async def _run(self, input: SqlQueryToolInput, options: ToolRunOptions | None, context: RunContext) -> SqlQueryToolOutput:
        # delegate the question to the SQL agent over ACP
        result = await run_agent("sql_agent", input.question)
        return SqlQueryToolOutput(result=SqlQueryToolResult(sql_query=str(result[0])))

Let’s follow the same approach with the DB agent.

from pydantic import BaseModel, Field
from acp_sdk import Message
from acp_sdk.client import Client
from acp_sdk.models import MessagePart
from beeai_framework.tools.tool import Tool
from beeai_framework.tools.types import ToolRunOptions
from beeai_framework.context import RunContext
from beeai_framework.emitter import Emitter
from beeai_framework.tools import ToolOutput
from beeai_framework.utils.strings import to_json

# helper function: call an agent on the DB agent's ACP server
async def run_agent(agent: str, input: str) -> list[Message]:
    async with Client(base_url="http://localhost:8001") as client:
        run = await client.run_sync(
            agent=agent, input=[Message(parts=[MessagePart(content=input, content_type="text/plain")])]
        )
    return run.output

class DatabaseQueryToolInput(BaseModel):
    query: str = Field(description="The SQL query or question to execute against the ClickHouse database")

class DatabaseQueryToolResult(BaseModel):
    result: str = Field(description="The result of the database query execution")

class DatabaseQueryToolOutput(ToolOutput):
    result: DatabaseQueryToolResult = Field(description="Database query execution result")

    def get_text_content(self) -> str:
        return to_json(self.result)

    def is_empty(self) -> bool:
        return self.result.result.strip() == ""

    def __init__(self, result: DatabaseQueryToolResult) -> None:
        super().__init__()
        self.result = result

class DatabaseQueryTool(Tool[DatabaseQueryToolInput, ToolRunOptions, DatabaseQueryToolOutput]):
    name = "Database Query Executor"
    description = "Execute SQL queries and questions against the ClickHouse database"
    input_schema = DatabaseQueryToolInput

    def _create_emitter(self) -> Emitter:
        return Emitter.root().child(
            namespace=["tool", "database_query"],
            creator=self,
        )

    async def _run(self, input: DatabaseQueryToolInput, options: ToolRunOptions | None, context: RunContext) -> DatabaseQueryToolOutput:
        # delegate the query to the DB agent over ACP
        result = await run_agent("db_agent", input.query)
        return DatabaseQueryToolOutput(result=DatabaseQueryToolResult(result=str(result[0])))

Now let’s put together the main agent that will be orchestrating the others as tools. We will use the ReAct agent implementation from the BeeAI framework for the orchestrator. I’ve also added some extra logging to the tool wrappers around our DB and SQL agents, so that we can see all the information about the calls.

from collections.abc import AsyncGenerator
from acp_sdk import Message
from acp_sdk.models import MessagePart
from acp_sdk.server import Context, Server
from beeai_framework.backend.chat import ChatModel
from beeai_framework.agents.react import ReActAgent
from beeai_framework.memory import TokenMemory
from beeai_framework.utils.dicts import exclude_none
from sql_tool import SqlQueryTool
from db_tool import DatabaseQueryTool
import os
import logging

# Configure logging
logger = logging.getLogger(__name__)
logger.setLevel(logging.INFO)

# Only add handler if it doesn't exist already
if not logger.handlers:
    handler = logging.StreamHandler()
    handler.setLevel(logging.INFO)
    formatter = logging.Formatter('ORCHESTRATOR - %(levelname)s - %(message)s')
    handler.setFormatter(formatter)
    logger.addHandler(handler)

# Prevent propagation to avoid duplicate messages
logger.propagate = False

# Wrap our tools with additional logging for traceability
class LoggingSqlQueryTool(SqlQueryTool):
    async def _run(self, input, options, context):
        logger.info(f"🔍 SQL Tool Request: {input.question}")
        result = await super()._run(input, options, context)
        logger.info(f"📝 SQL Tool Response: {result.result.sql_query}")
        return result

class LoggingDatabaseQueryTool(DatabaseQueryTool):
    async def _run(self, input, options, context):
        logger.info(f"🗄️ Database Tool Request: {input.query}")
        result = await super()._run(input, options, context)
        logger.info(f"📊 Database Tool Response: {result.result.result}...")
        return result

server = Server()

@server.agent(name="orchestrator")
async def orchestrator(input: list[Message], context: Context) -> AsyncGenerator:
    logger.info(f"🚀 Orchestrator started with input: {input[0].parts[0].content}")

    llm = ChatModel.from_name("openai:gpt-4o-mini")

    agent = ReActAgent(
        llm=llm,
        tools=[LoggingSqlQueryTool(), LoggingDatabaseQueryTool()],
        templates={
            "system": lambda template: template.update(
                defaults=exclude_none({
                    "instructions": """
                    You are an expert data analyst assistant that helps users analyze e-commerce data.

                    You have access to two tools:
                    1. SqlQueryTool - Use this to generate SQL queries from natural language questions about the e-commerce database
                    2. DatabaseQueryTool - Use this to execute SQL queries directly against the ClickHouse database

                    The database contains two main tables:
                    - ecommerce.users (customer information)
                    - ecommerce.sessions (user sessions and transactions)

                    When a user asks a question:
                    1. First, use SqlQueryTool to generate the appropriate SQL query
                    2. Then, use DatabaseQueryTool to execute that query and get the results
                    3. Present the results in a clear, understandable format

                    Always provide context about what the data shows and any insights you can derive.
                    """,
                    "role": "system"
                })
            )
        }, memory=TokenMemory(llm))

    prompt = (str(input[0]))
    logger.info(f"🤖 Running ReAct agent with prompt: {prompt}")

    response = await agent.run(prompt)

    logger.info(f"✅ Orchestrator completed. Response length: {len(response.result.text)} characters")
    logger.info(f"📤 Final response: {response.result.text}...")

    yield Message(parts=[MessagePart(content=response.result.text)])

if __name__ == "__main__":
    server.run(port=8003)

Now, just like before, we can run the orchestrator agent using the ACP client to see the result.

async def router_example() -> None:
    async with Client(base_url="http://localhost:8003") as orchestrator_client:
        question = 'How many customers did we have in May 2024?'
        response = await orchestrator_client.run_sync(
            agent="orchestrator", input=question
        )
        print('Orchestrator response:')

        # Debug: Print the response structure
        print(f"Response type: {type(response)}")
        print(f"Response output length: {len(response.output) if hasattr(response, 'output') else 'No output attribute'}")

        if response.output and len(response.output) > 0:
            print(response.output[0].parts[0].content)
        else:
            print("No response received from orchestrator")
            print(f"Full response: {response}")

asyncio.run(router_example())

# In May 2024, we had 234,544 unique active customers.

Our system worked well, and we got the expected result. Good job!

Let’s see how it worked under the hood by checking the logs from the orchestrator server. The router first invoked the SQL agent as a SQL tool. Then, it used the returned query to call the DB agent. Finally, it produced the final answer.

ORCHESTRATOR - INFO - 🚀 Orchestrator started with input: How many customers did we have in May 2024?
ORCHESTRATOR - INFO - 🤖 Running ReAct agent with prompt: How many customers did we have in May 2024?
ORCHESTRATOR - INFO - 🔍 SQL Tool Request: How many customers did we have in May 2024?
ORCHESTRATOR - INFO - 📝 SQL Tool Response:
SELECT COUNT(uniqExact(u.user_id)) AS active_customers
FROM ecommerce.users AS u
JOIN ecommerce.sessions AS s ON u.user_id = s.user_id
WHERE u.is_active = 1
AND s.action_date >= '2024-05-01'
AND s.action_date < '2024-06-01'
FORMAT TabSeparatedWithNames
ORCHESTRATOR - INFO - 🗄️ Database Tool Request:
SELECT COUNT(uniqExact(u.user_id)) AS active_customers
FROM ecommerce.users AS u
JOIN ecommerce.sessions AS s ON u.user_id = s.user_id
WHERE u.is_active = 1
AND s.action_date >= '2024-05-01'
AND s.action_date < '2024-06-01'
FORMAT TabSeparatedWithNames
ORCHESTRATOR - INFO - 📊 Database Tool Response: 234544...
ORCHESTRATOR - INFO - ✅ Orchestrator completed. Response length: 52 characters
ORCHESTRATOR - INFO - 📤 Final response: In May 2024, we had 234,544 unique active customers....

Thanks to the extra logging we added, we can now trace all the calls made by the orchestrator.

You can find the full code on GitHub.

Summary

In this article, we’ve explored the ACP protocol and its capabilities. Here’s a quick recap of the key points:

- ACP (Agent Communication Protocol) is an open protocol that aims to standardise communication between agents. It complements MCP, which handles interactions between agents and external tools and data sources.

- ACP follows a client-server architecture and uses RESTful APIs.

- The protocol is technology- and framework-agnostic, allowing you to build interoperable systems and create new collaborations between agents seamlessly.

- With ACP, you can implement a wide range of agent interactions, from simple chaining in well-defined workflows to the router pattern, where an orchestrator can delegate tasks dynamically to other agents.

Thank you for reading. I hope this article was insightful. Remember Einstein’s advice: “The important thing is not to stop questioning. Curiosity has its own reason for existing.” May your curiosity lead you to your next great insight.

Reference

This article is inspired by the “ACP: Agent Communication Protocol” short course from DeepLearning.AI.