In 2022, Anthropic released a paper in which they showed evidence that induction heads might constitute the mechanism for in-context learning (ICL). What are induction heads? As stated by Anthropic: “Induction heads are implemented by a circuit consisting of a pair of attention heads in different layers that work together to copy or complete patterns.” Simply put, given a sequence like […, A, B, …, A], an induction head will complete it with B, on the reasoning that if A was followed by B earlier in the context, it is likely that A is followed by B again. When you have a sequence like “…A, B…A”, the first attention head copies previous-token information into each position, and the second attention head uses this information to find where A appeared before and predict what came after it (B).
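To make the "[…, A, B, …, A] → B" behavior concrete, here is a minimal sketch in plain Python. It only mimics the input-output behavior described above and is not how the two attention heads actually compute it; the function name `induction_predict` is made up for illustration.

```python
def induction_predict(tokens):
    """Toy induction rule: find the most recent earlier occurrence of the
    last token and return the token that followed it (None if no match)."""
    if not tokens:
        return None
    current = tokens[-1]
    # Scan earlier positions right to left, skipping the final token itself.
    for i in range(len(tokens) - 2, -1, -1):
        if tokens[i] == current:
            return tokens[i + 1]
    return None


# "A" was followed by "B" earlier in the context, so the rule completes with "B".
print(induction_predict(["x", "A", "B", "y", "A"]))
```

If the last token never appeared earlier in the context, the sketch returns `None`, which corresponds to the induction head having nothing to copy.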

Recently, a lot of research has suggested that transformers could be doing ICL through gradient descent (Garg et al. 2022, von Oswald et al. 2023, etc.) by showing the relation between linear attention and gradient descent. Let's revisit least squares and gradient descent.
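As a reference point, here is one standard way to write the setup. The notation is an assumption on my part and may differ from the cited papers: $n$ examples $(x_i, y_i)$, a weight vector $w$, a learning rate $\eta$, and a $\frac{1}{2n}$ scaling of the loss.

$$
L(w) = \frac{1}{2n}\sum_{i=1}^{n}\left(w^\top x_i - y_i\right)^2,
\qquad
\nabla L(w) = \frac{1}{n}\sum_{i=1}^{n}\left(w^\top x_i - y_i\right)x_i
$$

Starting from $w_0 = 0$, a single gradient step and the resulting prediction for a new input $x_{n+1}$ are:

$$
w_1 = w_0 - \eta\,\nabla L(w_0) = \frac{\eta}{n}\sum_{i=1}^{n} y_i\,x_i,
\qquad
\hat{y}_{n+1} = w_1^\top x_{n+1} = \frac{\eta}{n}\sum_{i=1}^{n} y_i\,x_i^\top x_{n+1}
$$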

Now let's see how this links with linear attention.

Here we treat linear attention as the same as softmax attention minus the softmax operation. The basic linear attention formula:

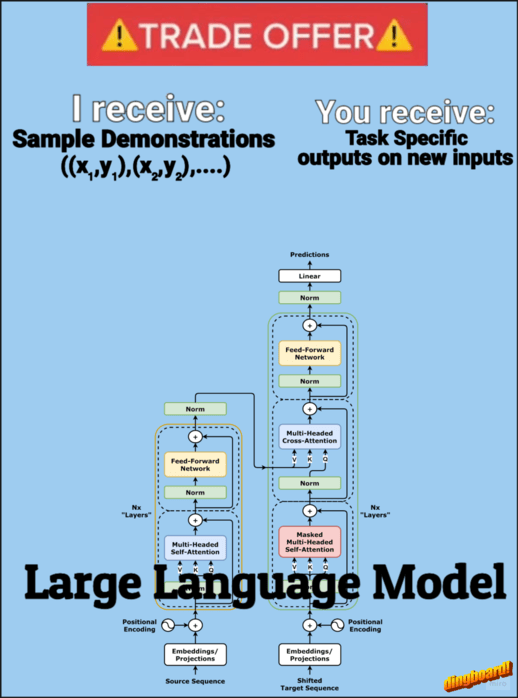

Let's start with a single-layer construction that captures the essence of in-context learning. Imagine we have n training examples (x₁,y₁)…(xₙ,yₙ), and we want to predict y_{n+1} for a new input x_{n+1}.

This looks very similar to what we got with gradient descent, except that in linear attention we have an extra term ‘W’. What linear attention is implementing is something known as preconditioned gradient descent (PGD), where instead of the standard gradient step, we modify the gradient with a preconditioning matrix W.
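Under illustrative assumptions (a squared loss $L(w) = \frac{1}{2n}\sum_{i=1}^{n}(w^\top x_i - y_i)^2$ and zero initialization $w_0 = 0$; this notation is mine and not taken from the papers), one PGD step and the resulting prediction could be written as:

$$
w_1 = w_0 - W\,\nabla L(w_0) = \frac{1}{n}\,W\sum_{i=1}^{n} y_i\,x_i,
\qquad
\hat{y}_{n+1} = w_1^\top x_{n+1} = \frac{1}{n}\sum_{i=1}^{n} y_i\,x_i^\top W^\top x_{n+1}
$$

Choosing $W = \eta I$ recovers an ordinary gradient step with learning rate $\eta$.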

What we have shown here is that we can construct a weight matrix such that one layer of linear attention will do one step of PGD.
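This claim can be checked numerically with a small NumPy sketch. It rests on simplifying assumptions that are mine rather than the papers': scalar targets, a squared loss with $\frac{1}{2n}$ scaling, zero initialization, keys equal to the inputs $x_i$, and values equal to the labels $y_i$. The function names and the split into a preconditioner `P` and an attention matrix `W = P.T` are hypothetical (the two coincide when the preconditioner is symmetric).

```python
import numpy as np


def linear_attention_predict(X, y, x_query, W):
    """Softmax-free attention: keys x_i, values y_i, query x_query.

    Each score is x_i^T W x_query; the output is the score-weighted
    sum of the values, divided by the number of examples n.
    """
    scores = X @ W @ x_query              # shape (n,)
    return float(scores @ y) / len(y)


def pgd_one_step_predict(X, y, x_query, P):
    """One preconditioned GD step on L(w) = 1/(2n) * sum (w.x_i - y_i)^2,
    starting from w = 0, followed by a prediction at x_query."""
    n, d = X.shape
    w0 = np.zeros(d)
    grad = X.T @ (X @ w0 - y) / n         # gradient of the loss at w0
    w1 = w0 - P @ grad                    # preconditioned update
    return float(w1 @ x_query)


rng = np.random.default_rng(0)
X = rng.normal(size=(8, 3))               # n = 8 examples, d = 3 features
y = X @ np.array([1.0, -2.0, 0.5])        # labels from a hidden linear map
x_query = rng.normal(size=3)
P = rng.normal(size=(3, 3))               # arbitrary preconditioner

attn = linear_attention_predict(X, y, x_query, P.T)
pgd = pgd_one_step_predict(X, y, x_query, P)
print(np.isclose(attn, pgd))
```

Passing `P = eta * np.eye(3)` turns the PGD step into plain gradient descent with learning rate `eta`, and the two predictions still agree.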

We saw how attention can implement “learning algorithms”: algorithms where, if we provide a number of demonstrations (x, y), the model learns from these demonstrations to predict the output for any new query. While the exact mechanisms involving multiple attention layers and MLPs are complex, researchers have made progress in understanding how in-context learning works mechanistically. This article provides an intuitive, high-level introduction to help readers understand the inner workings of this emergent ability of transformers.

To read more on this topic, I'd suggest the following papers:

In-context Learning and Induction Heads

What Can Transformers Learn In-Context? A Case Study of Simple Function Classes

Transformers Learn In-Context by Gradient Descent

Transformers learn to implement preconditioned gradient descent for in-context learning

This blog post was inspired by coursework from my graduate studies during Fall 2024 at the University of Michigan. While the courses provided the foundational knowledge and motivation to explore these topics, any errors or misinterpretations in this article are entirely my own. This represents my personal understanding and exploration of the material.