Let us start with a confession: each time we build a machine to think like us, we accidentally reveal how little we understand about thinking. The latest offering from Convergence Labs, LM2, a “large memory model” that supposedly solves the Transformer architecture’s chronic amnesia, is no exception. In their whitepaper, the engineers describe an elaborate system of memory banks and gating mechanisms, a Rube Goldberg contraption of cross-attention layers and sigmoid-activated forget gates. They speak of 80.4% improvements over previous models in “multi-hop inference,” as if reasoning were a series of parkour maneuvers across data points rather than the slow accumulation of lived experience. One almost admires the audacity: in an age when human attention spans are measured in TikTok videos, we have built machines that can parse 128,000 tokens of context while retaining the emotional depth of a spreadsheet.
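For readers curious what a “sigmoid-activated forget gate” bolted to cross-attention actually amounts to, here is a toy sketch. It is loosely modeled on LSTM-style gating and is not LM2’s published equations. The function names, the scalar gates, and the omission of learned projection matrices are all simplifying assumptions made for illustration. Each memory slot attends over the input tokens. An input gate decides how much of what it reads gets written in, and a forget gate decides how much of the old slot survives.

```python
import math

def softmax(xs):
    # Numerically stable softmax over a list of scores.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross_attend(slot, tokens):
    # The memory slot acts as the query; the input tokens serve as both keys
    # and values (hypothetical: no learned query/key/value projections here).
    scale = math.sqrt(len(slot))
    weights = softmax([dot(slot, t) / scale for t in tokens])
    return [sum(w * t[i] for w, t in zip(weights, tokens)) for i in range(len(slot))]

def update_memory(memory, tokens, w_in=1.0, w_forget=1.0):
    # One gated update of every memory slot (toy scalar gates, hypothetical weights).
    new_memory = []
    for slot in memory:
        read = cross_attend(slot, tokens)
        g_in = sigmoid(w_in * sum(read) / len(read))          # how much new content to write
        g_forget = sigmoid(w_forget * sum(slot) / len(slot))  # how much old content to keep
        new_memory.append([g_in * math.tanh(r) + g_forget * s for r, s in zip(read, slot)])
    return new_memory

memory = [[0.5, -0.2, 0.1], [0.0, 0.3, -0.4]]
tokens = [[1.0, 0.0, 0.2], [0.1, 0.9, -0.3], [-0.5, 0.2, 0.7]]
print(update_memory(memory, tokens))
```

Stripped of the whitepaper prose, the “memory” is a handful of vectors nudged toward whatever they attend to, at a rate set by two sigmoids.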

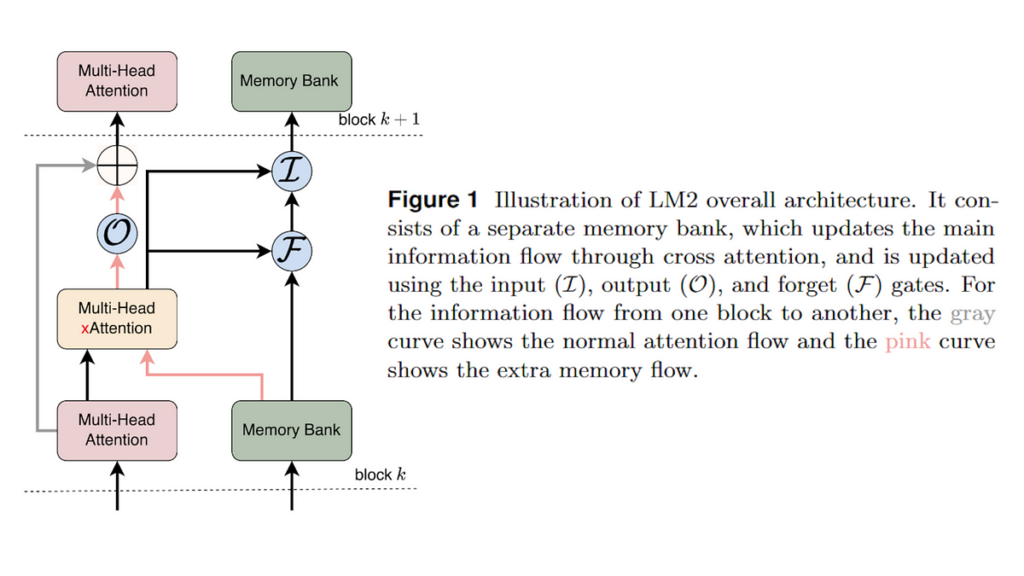

The LM2 paper reads like a love letter to the very idea of memory, if love letters were written by actuaries. Its central innovation is a “memory bank” that operates in parallel with the standard…