In 1977, Andrew Barto, then a researcher at the University of Massachusetts, Amherst, began exploring a new theory that neurons behaved like hedonists. The basic idea was that the human brain was driven by billions of nerve cells that were each trying to maximize pleasure and minimize pain.

A year later, he was joined by another young researcher, Richard Sutton. Together, they worked to explain human intelligence using this simple concept and applied it to artificial intelligence. The result was “reinforcement learning,” a way for A.I. systems to learn from the digital equivalent of pleasure and pain.

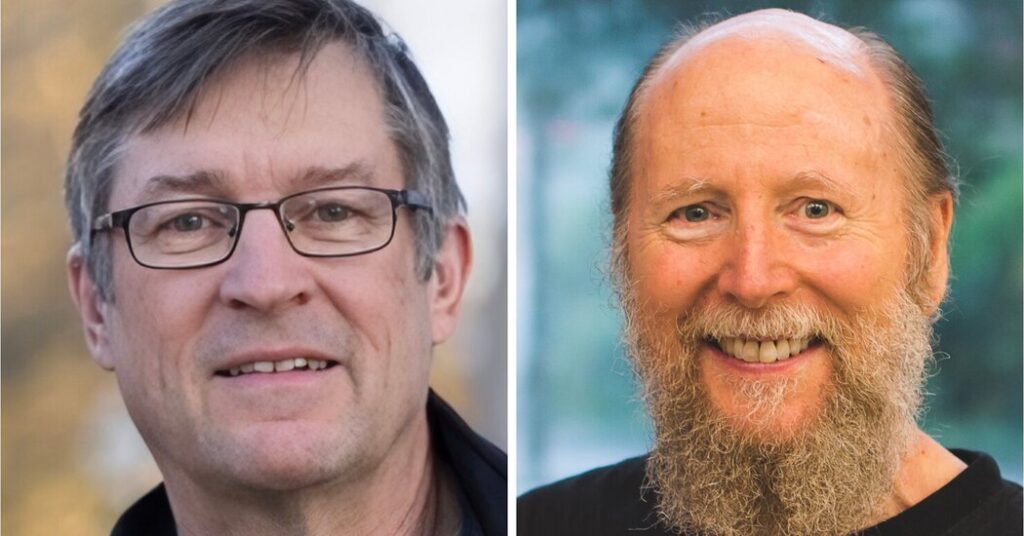

On Wednesday, the Association for Computing Machinery, the world’s largest society of computing professionals, announced that Dr. Barto and Dr. Sutton had won this year’s Turing Award for their work on reinforcement learning. The Turing Award, which was introduced in 1966, is often called the Nobel Prize of computing. The two scientists will share the $1 million prize that comes with the award.

Over the past decade, reinforcement learning has played a vital role in the rise of artificial intelligence, including breakthrough technologies such as Google’s AlphaGo and OpenAI’s ChatGPT. The techniques that powered these systems were rooted in the work of Dr. Barto and Dr. Sutton.

“They are the undisputed pioneers of reinforcement learning,” said Oren Etzioni, a professor emeritus of computer science at the University of Washington and founding chief executive of the Allen Institute for Artificial Intelligence. “They generated the key ideas, and they wrote the book on the subject.”

Their book, “Reinforcement Learning: An Introduction,” which was published in 1998, remains the definitive exploration of an idea that many experts say is only beginning to realize its potential.

Psychologists have long studied the ways that humans and animals learn from their experiences. In the 1940s, the pioneering British computer scientist Alan Turing suggested that machines could learn in much the same way.

But it was Dr. Barto and Dr. Sutton who began exploring the mathematics of how this might work, building on a theory that A. Harry Klopf, a computer scientist working for the government, had proposed. Dr. Barto went on to build a lab at UMass Amherst dedicated to the idea, while Dr. Sutton founded a similar kind of lab at the University of Alberta in Canada.

“It is kind of an obvious idea when you’re talking about humans and animals,” said Dr. Sutton, who is also a research scientist at Keen Technologies, an A.I. start-up, and a fellow at the Alberta Machine Intelligence Institute, one of Canada’s three national A.I. labs. “As we revived it, it was about machines.”

This remained an academic pursuit until the arrival of AlphaGo in 2016. Most experts believed that another 10 years would pass before anyone built an A.I. system that could beat the world’s best players at the game of Go.

But during a match in Seoul, South Korea, AlphaGo beat Lee Sedol, the best Go player of the past decade. The trick was that the system had played millions of games against itself, learning by trial and error. It learned which moves brought success (pleasure) and which brought failure (pain).
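
The trial-and-error idea can be illustrated with a toy sketch in Python. This is not how AlphaGo works (that system combined deep neural networks with game-tree search). It is a minimal example, assuming three hypothetical moves whose hidden win chances are invented for illustration. The learner mostly repeats the move that has paid off best so far, occasionally tries another, and keeps a running average of the reward each move earns: +1 for a win (pleasure) and -1 for a loss (pain).

```python
import random


def learn_by_trial_and_error(win_chance, rounds=5000, explore=0.1, seed=0):
    """Estimate each move's value from +1 (win) and -1 (loss) rewards."""
    rng = random.Random(seed)
    moves = list(win_chance)
    value = {move: 0.0 for move in moves}  # running average reward per move
    tries = {move: 0 for move in moves}    # how often each move was played

    for _ in range(rounds):
        if rng.random() < explore:
            move = rng.choice(moves)          # occasionally try something else
        else:
            move = max(moves, key=value.get)  # otherwise play the best so far

        # The environment answers with success (+1) or failure (-1).
        reward = 1.0 if rng.random() < win_chance[move] else -1.0

        # Nudge the running average toward the reward just observed.
        tries[move] += 1
        value[move] += (reward - value[move]) / tries[move]

    return value


# Hypothetical win chances, hidden from the learner (assumption for this sketch).
HIDDEN_WIN_CHANCE = {"move_a": 0.2, "move_b": 0.5, "move_c": 0.8}

learned = learn_by_trial_and_error(HIDDEN_WIN_CHANCE)
best_move = max(learned, key=learned.get)
print(best_move, learned)
```

The learner is never told the win chances. It discovers which move is best only from the rewards it collects, which is the core of the approach described above, though at a vastly smaller scale than a game like Go.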

The Google team that built the system was led by David Silver, a researcher who had studied reinforcement learning under Dr. Sutton at the University of Alberta.

Many experts still question whether reinforcement learning could work outside of games. Game outcomes are determined by points, which makes it easy for machines to distinguish between success and failure.

But reinforcement learning has also played an essential role in online chatbots.

Leading up to the release of ChatGPT in the fall of 2022, OpenAI hired hundreds of people to use an early version and provide precise suggestions that could hone its skills. They showed the chatbot how to respond to particular questions, rated its responses and corrected its mistakes. By analyzing those suggestions, ChatGPT learned to be a better chatbot.

Researchers call this “reinforcement learning from human feedback,” or R.L.H.F. And it is one of the key reasons that today’s chatbots respond in surprisingly lifelike ways.

(The New York Times has sued OpenAI and its partner, Microsoft, for copyright infringement of news content related to A.I. systems. OpenAI and Microsoft have denied those claims.)

More recently, companies like OpenAI and the Chinese start-up DeepSeek have developed a form of reinforcement learning that allows chatbots to learn from themselves, much as AlphaGo did. By working through various math problems, for instance, a chatbot can learn which methods lead to the right answer and which do not.

If it repeats this process with an enormously large set of problems, the bot can learn to mimic the way humans reason, at least in some ways. The result is so-called reasoning systems like OpenAI’s o1 or DeepSeek’s R1.
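
A rough sketch in Python shows how a right-or-wrong check on math answers can steer a learner toward better methods. It is an illustration under stated assumptions, not the training procedure of o1 or R1. The two "methods" and all names are hypothetical, and the update rule is the gradient bandit algorithm from Dr. Sutton and Dr. Barto's textbook, used here as a small stand-in for the far larger techniques applied to chatbots. No human rates the answers. The reward is computed automatically by checking each result.

```python
import math
import random


# Two hypothetical "methods" for evaluating a + b * c.
def left_to_right(a, b, c):
    return (a + b) * c  # ignores operator precedence, so it is usually wrong


def multiply_first(a, b, c):
    return a + b * c    # respects operator precedence, so it is always right


METHODS = [left_to_right, multiply_first]


def softmax(preferences):
    """Turn preference scores into probabilities that sum to 1."""
    weights = [math.exp(p) for p in preferences]
    total = sum(weights)
    return [w / total for w in weights]


def practice(num_problems=2000, step_size=0.1, seed=0):
    """Return the learned probability of choosing each method."""
    rng = random.Random(seed)
    preference = [0.0 for _ in METHODS]  # higher means chosen more often
    average_reward = 0.0                 # baseline for judging each reward

    for n in range(1, num_problems + 1):
        probs = softmax(preference)
        choice = rng.choices(range(len(METHODS)), weights=probs)[0]

        # Try the chosen method on a fresh random problem.
        a, b, c = (rng.randint(1, 9) for _ in range(3))
        answer = METHODS[choice](a, b, c)

        # Automatic check: 1 for the right answer, 0 for a wrong one.
        reward = 1.0 if answer == a + b * c else 0.0

        # Update the baseline, then shift preferences toward the chosen
        # method if it beat the baseline and away from it if it did not.
        average_reward += (reward - average_reward) / n
        for i in range(len(METHODS)):
            chosen = 1.0 if i == choice else 0.0
            preference[i] += step_size * (reward - average_reward) * (chosen - probs[i])

    final = softmax(preference)
    return {method.__name__: p for method, p in zip(METHODS, final)}


method_probs = practice()
print(method_probs)
```

After enough practice problems, nearly all of the probability should sit on the method that respects operator precedence, because it is the only one that reliably earns the reward.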

Dr. Barto and Dr. Sutton say these systems hint at the ways machines will learn in the future. Eventually, they say, robots imbued with A.I. will learn from trial and error in the real world, as humans and animals do.

“Learning to control a body through reinforcement learning, that is a very natural thing,” Dr. Barto said.