In this article, I’m focusing today’s entry on foundational knowledge that often comes up early in technical discussions: the types of learning paradigms, the core algorithms, and the mathematical principles that support them. This article is meant as a clear and simple breakdown for anyone reviewing ML concepts or trying to explain them quickly and effectively.

Every machine learning technique falls into one of several learning paradigms. Understanding these categories not only sharpens your intuition but also guides your answers when interviewers ask about real-world applications.

Supervised Learning

- Definition: Learn a mapping from input features X to an output label Y using labeled examples.

- Goal: Minimize the discrepancy between predicted and actual labels.

- Examples: Predicting house prices from historical sales data and classifying emails as spam or not spam.

- Intuition: Just as a teacher grades your work, the model is trained with the correct answers.

Unsupervised Learning

- Definition: Discover hidden patterns or structures in data without labels.

- Goal: Learn useful representations or groupings of the input space.

- Examples: Clustering customers by behavior for marketing segmentation, and dimensionality reduction for visualization (e.g., Principal Component Analysis, PCA).

- Intuition: The algorithm must find the underlying structure in the data using criteria such as similarity (e.g., Euclidean or cosine distance), density (e.g., DBSCAN), or statistical properties (e.g., principal components in PCA).

Semi-Supervised Learning

What it is: Combines a small amount of labeled data with a large pool of unlabeled data.

Why it matters: Labeling is expensive. This approach uses the unlabeled majority to boost performance.

How it works:

- Train a model on the labeled data.

- Use it to generate pseudo-labels for the unlabeled data.

- Retrain on the full dataset, refining predictions iteratively.
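The self-training loop above can be sketched in a few lines of Python. This is a minimal sketch under simplifying assumptions. A hypothetical nearest-centroid classifier on 1-D points stands in for a real model. Only pseudo-labels whose margin (the distance gap between the two nearest class centroids) reaches a hypothetical `min_margin` threshold are kept, which is a common safeguard against the model reinforcing its own mistakes.

```python
def fit_centroids(xs, ys):
    """Stand-in 'model': the mean of each class's points (a nearest-centroid classifier)."""
    sums, counts = {}, {}
    for x, y in zip(xs, ys):
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}


def predict_with_margin(centroids, x):
    """Predict the nearest class; margin = distance gap to the runner-up class."""
    ranked = sorted(((abs(x - c), y) for y, c in centroids.items()), key=lambda t: t[0])
    margin = ranked[1][0] - ranked[0][0] if len(ranked) > 1 else float("inf")
    return ranked[0][1], margin


def self_train(labeled_x, labeled_y, unlabeled_x, min_margin=1.0, rounds=5):
    """Train, pseudo-label confident points, retrain; stop when nothing confident is left."""
    xs, ys, pool = list(labeled_x), list(labeled_y), list(unlabeled_x)
    for _ in range(rounds):
        model = fit_centroids(xs, ys)  # the model stays fixed within a round
        still_unsure = []
        added = 0
        for x in pool:
            label, margin = predict_with_margin(model, x)
            if margin >= min_margin:
                xs.append(x)  # accept the pseudo-label
                ys.append(label)
                added += 1
            else:
                still_unsure.append(x)
        pool = still_unsure
        if added == 0:
            break
    return fit_centroids(xs, ys), pool
```

For example, `self_train([0.0, 10.0], ["a", "b"], [1.0, 2.0, 8.0, 9.0, 5.0])` pseudo-labels the four clear-cut points but never labels the ambiguous point at 5.0. That is the behavior the confidence threshold is meant to produce.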

Examples:

- Speech Recognition:

Suppose you have 1,000 hours of recorded speech but only 10 hours of transcriptions. You train a model on the labeled 10 hours, then use it to predict transcripts for the remaining 990 hours. You then retrain the model on this augmented dataset to improve accuracy. Pseudo-labeling and related weak-supervision techniques have helped scale speech recognition systems such as Google Voice and Whisper.

- Medical Imaging:

A radiologist might label 500 chest X-rays as healthy or diseased, while another 10,000 scans remain unlabeled. A semi-supervised model can learn general patterns from all 10,500 images while using the labeled ones to guide diagnosis.

- Web Content Classification:

In content moderation, only a fraction of posts may be labeled as spam or not spam. Semi-supervised methods can propagate labels to similar unlabeled posts using graph-based or self-training approaches.

Self-Supervised Learning

What it is: Builds labels from the data itself by creating pretext tasks.

Why it matters: Enables learning from large, unlabeled datasets without human annotation.

How it works:

- Construct synthetic tasks (e.g., predicting missing words or image patches).

- Learn general-purpose features that transfer well to downstream tasks.

Example: BERT learns by predicting masked words in a sentence; SimCLR learns by contrasting augmented views of the same image.

BERT (Bidirectional Encoder Representations from Transformers) learns by predicting masked words within a sentence, a task known as Masked Language Modeling (MLM). During training, a fraction of the input tokens is selected (15% in the original BERT setup), and most of them are replaced with a special [MASK] token. The model must then infer the original words using context from both the left and right sides. This self-supervised setup lets BERT learn deep contextual representations without manually labeled data, which makes it highly effective when fine-tuned for downstream tasks such as question answering or sentiment classification.
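The key point is that the training labels come from the text itself, and that fits in a short sketch. The `mask_tokens` helper below is hypothetical and simplified. It works on whitespace-split words rather than BERT’s WordPiece tokens, and it always substitutes `[MASK]` instead of following BERT’s mix of masked, random, and unchanged tokens.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, mask_prob=0.15, seed=0):
    """Turn unlabeled text into an MLM training pair (masked input, targets).

    Simplification: every selected token becomes [MASK]; original BERT
    replaces only some selected tokens with [MASK] and the rest with a
    random token, or leaves them unchanged.
    """
    rng = random.Random(seed)
    inputs, targets = [], {}
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            inputs.append(MASK)
            targets[i] = tok  # the "label" comes from the text itself
        else:
            inputs.append(tok)
    return inputs, targets
```

The model would be trained to predict `targets[i]` at each masked position `i`. No human annotation is involved at any step.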

Reinforcement Learning (RL)

What it is: Trains an agent to interact with an environment so as to maximize cumulative reward.

Why it matters: Useful for sequential decision-making where feedback arrives as (often delayed) rewards rather than direct labels.

How it works:

- The agent takes actions, observes the outcomes, and adjusts its strategy (policy) based on the rewards it receives.

Example: AlphaGo learns to win by playing games against itself and improving over time.

AlphaGo learns through reinforcement learning, improving by playing millions of games against itself. It is first trained on games played by human experts. It then uses self-play to explore new strategies, earning rewards for winning and adjusting its approach to maximize long-term success. Its decision-making combines deep neural networks (to evaluate positions and suggest moves) with Monte Carlo Tree Search (to simulate possible future moves). This combination allowed it to beat human champions by discovering strategies beyond human intuition.
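AlphaGo itself is far too large to reproduce here. The sketch below swaps in a much simpler technique, tabular Q-learning, to show the same act, observe, and update loop. The environment is a hypothetical five-state corridor: the agent starts at state 0 and earns a reward of 1 only on reaching the last state. All hyperparameter values are illustrative assumptions.

```python
import random


def q_learning_corridor(n_states=5, episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning on a toy corridor: start at state 0, reward 1 for reaching the end."""
    rng = random.Random(seed)
    moves = (-1, +1)  # action 0 = left, action 1 = right
    goal = n_states - 1
    q = [[0.0, 0.0] for _ in range(n_states)]
    for _episode in range(episodes):
        s = 0
        for _step in range(100):  # cap episode length
            if rng.random() < epsilon:
                a = rng.randrange(2)  # explore: random action
            else:
                a = 0 if q[s][0] > q[s][1] else 1  # exploit (ties go right)
            s_next = min(max(s + moves[a], 0), goal)
            reward = 1.0 if s_next == goal else 0.0
            # Bootstrapped target: no future value once the goal (terminal state) is reached.
            target = reward if s_next == goal else reward + gamma * max(q[s_next])
            q[s][a] += alpha * (target - q[s][a])
            s = s_next
            if s == goal:
                break
    return q
```

After training, the greedy policy (the better action in each row of `q`) moves right in every state. This happens even though the reward is only observed at the very end: the discount factor `gamma` propagates the delayed reward backward through the Q-values.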

Active Learning

What it is: Selects the most informative samples to be labeled by a human annotator.

Why it matters: Reduces labeling cost by focusing only on uncertain or diverse examples.

How it works:

- Train a model on limited labeled data.

- Identify data points with high uncertainty or disagreement.

- Query labels for those points and retrain.

Example: In medical imaging, only the most ambiguous scans are sent to radiologists for annotation.

Suppose you are training a model to detect pneumonia from chest X-rays. You start with 500 labeled scans and 10,000 unlabeled ones.

To identify ambiguous scans:

Compute the model’s predicted probability for each scan. If the model predicts pneumonia with roughly 50% confidence (e.g., P(pneumonia) ≈ 0.5), it is highly uncertain, which makes the scan a good candidate for expert labeling. Alternatively, you can use the entropy of the output probabilities to rank uncertain predictions.

For multi-class problems, margin sampling can detect ambiguity. It uses the difference between the probabilities of the top two predicted classes: a small margin means the model is torn between two classes.

Another approach: Use a committee of models (e.g., an ensemble or Monte Carlo dropout predictions) and measure their disagreement on each scan. Scans with high variance across model predictions are considered informative.

Outcome: Instead of asking radiologists to label all 10,000 scans, you ask them to label only the 200 most ambiguous ones. This saves significant time while still improving model performance.
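The entropy and margin criteria above take only a few lines each. This is a minimal sketch that assumes the model’s predicted class probabilities are already available as `(scan_id, probabilities)` pairs. The helper names and scan ids are hypothetical.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of one predicted class distribution; higher = more uncertain."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def margin(probs):
    """Gap between the two most likely classes; a small gap means the model is torn."""
    top1, top2 = sorted(probs, reverse=True)[:2]
    return top1 - top2


def select_for_labeling(predictions, budget, strategy="entropy"):
    """Return the ids of the `budget` most uncertain samples.

    predictions: list of (sample_id, class_probabilities) pairs.
    """
    sort_keys = {
        "entropy": lambda item: -entropy(item[1]),  # highest entropy first
        "margin": lambda item: margin(item[1]),     # smallest margin first
    }
    if strategy not in sort_keys:
        raise ValueError(f"unknown strategy: {strategy}")
    ranked = sorted(predictions, key=sort_keys[strategy])
    return [sample_id for sample_id, _ in ranked[:budget]]
```

With predictions `[("scan_a", [0.95, 0.05]), ("scan_b", [0.52, 0.48]), ("scan_c", [0.70, 0.30])]` and a budget of 2, both strategies send `scan_b` and `scan_c` to the radiologist and skip the confident `scan_a`.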

When it comes to algorithms, interviewers want more than definitions. Do you understand how the algorithm learns? What assumptions does it make? And can you justify when it should (or shouldn’t) be used in a real scenario?

Let’s walk through a few foundational algorithms with this lens.

Linear Regression

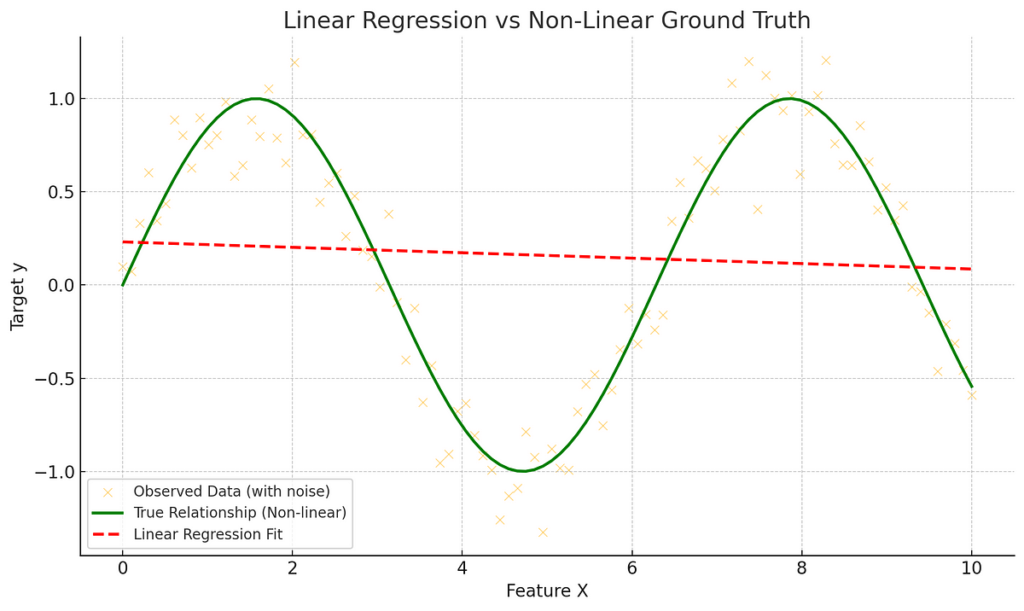

Linear regression fits a straight line (or hyperplane) to predict continuous outcomes by minimizing squared error. It assumes a linear relationship between the features and the target, constant variance of the errors (homoscedasticity), and no multicollinearity among the predictors. It is often used as a baseline for structured data and is valued for its speed and interpretability. However, it fails when the relationship is highly nonlinear or the interactions are complex.

Interviewers may ask why these assumptions matter or how to detect when they’re violated. Each assumption underpins the validity of the linear regression model:

- Linearity: This assumes a straight-line relationship between the independent variables and the target. If it is violated (as in the example plot, where the true relationship is sinusoidal), the model will systematically mispredict, even with perfect estimation. You can detect nonlinearity by plotting residuals: if the residuals show a pattern or curvature rather than randomness, the assumption is likely violated.

- Homoscedasticity: The variance of the residuals should be constant across all levels of the input. If this assumption is violated (i.e., errors fan out or compress), the coefficient estimates remain unbiased. However, their standard errors, confidence intervals, and prediction intervals become unreliable in some regions. You can diagnose this by plotting residuals against predicted values; a non-constant spread suggests heteroscedasticity.

- No multicollinearity: When predictors are highly correlated with one another, it becomes difficult to isolate their individual effects on the target. This inflates coefficient variances and reduces model interpretability. Interviewers may expect you to mention tools like the Variance Inflation Factor (VIF) or correlation matrices for identifying multicollinearity.
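The residual check and the VIF can both be sketched with the standard library alone. This minimal sketch makes two simplifying assumptions. The fit uses a single feature (closed-form least squares). The VIF assumes exactly two predictors, in which case VIF = 1 / (1 - r²), where r is the Pearson correlation between them. Function names are illustrative.

```python
import math


def fit_simple_ols(xs, ys):
    """Closed-form least squares for y ≈ w * x + b with one feature."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    w = cov_xy / var_x
    return w, mean_y - w * mean_x


def residuals(xs, ys, w, b):
    """Observed minus predicted; plot these against xs or predictions to spot patterns."""
    return [y - (w * x + b) for x, y in zip(xs, ys)]


def pearson_r(a, b):
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / math.sqrt(va * vb)


def vif_two_predictors(x1, x2):
    """VIF for either of two predictors: 1 / (1 - r^2); infinite if perfectly collinear."""
    r2 = pearson_r(x1, x2) ** 2
    return math.inf if r2 >= 1.0 else 1.0 / (1.0 - r2)
```

Fitting a straight line to `ys = [4, 1, 0, 1, 4]` (that is, x² for x from -2 to 2) gives residuals `[2, -1, -2, -1, 2]`. That U-shaped pattern signals a violated linearity assumption. Two nearly proportional predictors such as `[1, 2, 3, 4]` and `[2, 4, 6, 8.5]` produce a VIF far above the common rule-of-thumb threshold of 10.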

Logistic Regression

Logistic regression predicts the probability of a binary outcome using a sigmoid (logistic) function. Despite its name, it is a classification model, not a regression model, and it performs well when the decision boundary is roughly linear in feature space. In interviews, you may be asked why the sigmoid is used (it is the inverse of the logit, or log-odds, function). The answer is not only about squashing outputs into (0, 1) but also about optimization. When combined with the log-likelihood loss (cross-entropy), the resulting loss function is convex in the weights, so any local minimum is a global one. Adding L2 regularization makes the loss strictly convex with a unique minimizer. On perfectly linearly separable data, the unregularized weights grow without bound, so regularization also keeps the solution finite. This makes optimization stable and efficient with gradient-based methods like stochastic gradient descent.

It performs well on high-dimensional, sparse data (like text) and supports regularization, but it doesn’t capture complex patterns unless you engineer meaningful features.
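A from-scratch sketch makes the optimization argument concrete. It runs full-batch gradient descent on the mean cross-entropy loss with an L2 penalty. It assumes a single feature plus a bias, and the learning rate, epoch count, and `l2` strength are illustrative values. The gradient of cross-entropy with respect to the logit is simply `p - y`, which is part of why this loss pairs so conveniently with the sigmoid.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function 1 / (1 + e^-z)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic(xs, ys, lr=0.1, epochs=2000, l2=0.01):
    """Full-batch gradient descent on mean cross-entropy + (l2 / 2) * w^2, one feature."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(w * x + b) - y  # d(cross-entropy)/d(logit) = p - y
            grad_w += err * x
            grad_b += err
        w -= lr * (grad_w / n + l2 * w)  # the bias is conventionally not regularized
        b -= lr * grad_b / n
    return w, b
```

On perfectly separable toy data such as `xs = [-2, -1, 1, 2]`, `ys = [0, 0, 1, 1]`, the L2 term is what keeps `w` finite. With `l2=0.0`, `w` keeps growing the longer you train.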

Decision Trees

Decision trees split data recursively on feature values to minimize impurity (using metrics such as Gini impurity or entropy). They are powerful for rule-based learning, naturally handle both numeric and categorical features, and offer interpretability through their structure. However, they are prone to overfitting, especially when a deep tree is trained on a small dataset. In interviews, you should be prepared to explain how to control this (e.g., pruning, depth limits) or when to switch to ensemble methods such as Random Forests or gradient-boosted trees.
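To see what "minimize impurity" means for a single split, here is a minimal sketch. It scores every candidate threshold on one numeric feature by the size-weighted Gini impurity of the two children and keeps the best one. A full tree would apply this recursively to each child until a stopping rule is reached (such as a maximum depth). Function names are illustrative.

```python
from collections import Counter


def gini(labels):
    """Gini impurity 1 - sum_k p_k^2; 0.0 means the node is pure."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def best_split(xs, ys):
    """Scan thresholds on one numeric feature; return (threshold, weighted child impurity)."""
    best_threshold, best_score = None, gini(ys)  # no split: impurity of the parent
    for t in sorted(set(xs))[:-1]:  # splitting at the max value leaves one side empty
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(ys)
        if score < best_score:
            best_threshold, best_score = t, score
    return best_threshold, best_score
```

For `xs = [1, 2, 3, 10, 11, 12]` with labels `a, a, a, b, b, b`, the best threshold is 3, which yields two pure children and a weighted impurity of 0.0.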

Support Vector Machines (SVM)

SVMs aim to find the hyperplane that maximizes the margin between classes. They perform well in high-dimensional spaces and can handle non-linear relationships using kernel functions. Interviewers may test whether you understand how the kernel trick enables learning in implicit feature spaces without computing those features directly.

What is the kernel trick?

The kernel trick is a method that allows SVMs (and other algorithms) to operate in higher-dimensional feature spaces without explicitly computing the coordinates of the transformed data. Instead, it uses a kernel function to compute the dot products between pairs of data points as if they had been mapped into that higher-dimensional space.

This allows us to train powerful non-linear models using only the original data, avoiding the computational cost of performing the transformation directly. Interviewers might ask:

- “Why does an RBF kernel succeed where a linear kernel fails?”

- “What does it mean to ‘implicitly’ map data?”

- “How would you choose between a linear and a non-linear kernel?”

You should be prepared to answer:

“A linear kernel works well only if the classes are (nearly) linearly separable in the original space. The RBF kernel corresponds to a mapping into an infinite-dimensional feature space, where the classes can become separable. Thanks to the kernel trick, we compute the inner products in that space directly from the original features, without ever transforming the data explicitly.”
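The "implicit" part can be checked numerically. This sketch uses the degree-2 polynomial kernel k(a, b) = (a · b)² on 2-D inputs. That kernel is chosen only because its feature map is small enough to write out by hand. The sketch confirms that the kernel equals the dot product of the explicitly mapped vectors. The RBF kernel is included for comparison; its feature space is infinite-dimensional, so only the kernel side can be computed. Function names and the `gamma` default are illustrative assumptions.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def quadratic_features(v):
    """Explicit feature map for the degree-2 kernel on 2-D input: (x1^2, sqrt(2) x1 x2, x2^2)."""
    x1, x2 = v
    return (x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2)


def quadratic_kernel(a, b):
    """k(a, b) = (a . b)^2, computed without ever building the 3-D features."""
    return dot(a, b) ** 2


def rbf_kernel(a, b, gamma=0.5):
    """k(a, b) = exp(-gamma * ||a - b||^2); its implicit feature space is infinite-dimensional."""
    return math.exp(-gamma * sum((x - y) ** 2 for x, y in zip(a, b)))
```

For `(2, 3)` and `(1, 4)`, both `quadratic_kernel` and the explicit route give 196 (up to floating-point rounding). The kernel computes this with one 2-D dot product and never builds the 3-D features.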

A common weakness is scalability. Kernel SVM training typically scales between quadratically and cubically with the number of samples, so SVMs can be slow on large datasets. They are also sensitive to feature scaling. They are strong choices when there are clear margins between classes and the dataset is not too large.

Neural Networks

Neural networks learn by stacking layers of linear transformations and nonlinear activations, trained using backpropagation and gradient descent. They are highly flexible and can approximate nearly any function given enough depth, width, and data. However, this flexibility comes with drawbacks: their loss surfaces are non-convex, they typically require large amounts of data, and they can be difficult to interpret or debug.

When it comes to training deep neural networks, interviewers may probe your understanding of why training fails or why performance stagnates, even with plenty of data. Here are common failure modes and how to address them:

Vanishing Gradients

- What happens: Gradients shrink toward zero as they are backpropagated, especially with sigmoid/tanh activations.

- Symptoms: Early layers barely update; training plateaus.

- Fixes:

- Use ReLU or its variants (LeakyReLU, GELU) to preserve gradient flow.

- Apply Batch Normalization to keep activations in a healthy range.

- Use residual connections (as in ResNets) to give gradients a shortcut through the network.
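The sigmoid’s role in vanishing gradients comes down to arithmetic. Its derivative is at most 0.25, and backpropagation multiplies one such factor per layer. The toy chain below rests on simplifying assumptions (scalar units, a shared pre-activation `z`, and a shared weight) and is not a real network. It does, however, show how quickly the product collapses compared with ReLU.

```python
import math


def sigmoid_grad(z):
    s = 1.0 / (1.0 + math.exp(-z))
    return s * (1.0 - s)  # peaks at 0.25 when z = 0


def relu_grad(z):
    return 1.0 if z > 0 else 0.0


def first_layer_gradient_scale(act_grad, depth, z=0.0, weight=1.0):
    """Chain rule through `depth` identical scalar layers: product of weight * activation'."""
    scale = 1.0
    for _ in range(depth):
        scale *= weight * act_grad(z)
    return scale
```

With 10 sigmoid layers, the factor is 0.25¹⁰ ≈ 9.5e-7. Ten ReLU layers with positive pre-activations pass the gradient through unchanged. The same function with `weight=2.0` and ReLU returns 1024, which previews the exploding-gradient problem.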

Exploding Gradients

- What happens: Gradients become excessively large, destabilizing training.

- Symptoms: The loss becomes NaN or oscillates wildly.

- Fixes:

- Apply gradient clipping to cap the gradient magnitude.

- Use proper weight initialization (e.g., He or Xavier).

- Normalize input data to keep activation variance from growing layer after layer.
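Gradient clipping by global norm is one common variant. It rescales all gradients together when their combined L2 norm exceeds a threshold, so the update keeps its direction but not its size. This is a minimal sketch over a flat list of floats. Frameworks provide their own versions (PyTorch, for example, has `torch.nn.utils.clip_grad_norm_`), and `max_norm` is a tunable hyperparameter.

```python
import math


def clip_by_global_norm(grads, max_norm):
    """Scale all gradient entries together so their combined L2 norm is at most max_norm."""
    total_norm = math.sqrt(sum(g * g for g in grads))
    if total_norm <= max_norm:
        return list(grads)  # already small enough: leave unchanged
    scale = max_norm / total_norm  # same factor for every entry keeps the direction
    return [g * scale for g in grads]
```

For example, `[3.0, 4.0]` (norm 5) clipped to `max_norm=1.0` becomes approximately `[0.6, 0.8]`: same direction, unit length.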

Overfitting

- What happens: The model fits the training data well but performs poorly on validation/test sets.

- Fixes:

- Add regularization (e.g., L2, dropout).

- Use early stopping based on validation loss.

- Collect more data or apply data augmentation.
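Early stopping is mostly bookkeeping. The sketch below assumes you already have the per-epoch validation losses and returns the epoch whose weights you would restore. `patience` (how many non-improving epochs to tolerate) and `min_delta` are common knobs; the values here are illustrative.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Replay per-epoch validation losses; return the epoch whose weights would be restored."""
    best_loss, best_epoch, waited = float("inf"), -1, 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:  # meaningful improvement: reset the counter
            best_loss, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:  # out of patience: stop training here
                break
    return best_epoch
```

With losses `[1.0, 0.8, 0.6, 0.65, 0.7, 0.72, 0.5]` and `patience=3`, training stops at epoch 5 and restores epoch 2, missing the later improvement at epoch 6. With `patience=5`, that improvement is caught. Choosing patience is a trade-off between training time and the risk of stopping too early.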

Convergence Issues

- What happens: Training is slow or the loss doesn’t improve.

- Fixes:

- Adjust the learning rate; use schedulers (e.g., cosine annealing, ReduceLROnPlateau).

- Switch to adaptive optimizers like Adam or RMSProp, which adapt the effective learning rate per parameter.

- Scale/normalize features to help optimizers converge faster.
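Cosine annealing has a simple closed form: lr(t) = lr_min + 0.5 (lr_max - lr_min)(1 + cos(π t / T)). The sketch below implements that formula without warm restarts; the default `lr_max` and `lr_min` values are illustrative. PyTorch exposes the same idea as `torch.optim.lr_scheduler.CosineAnnealingLR`.

```python
import math


def cosine_annealing_lr(step, total_steps, lr_max=0.1, lr_min=0.0):
    """Learning rate at `step` under cosine annealing without restarts."""
    progress = min(step, total_steps) / total_steps  # clamp: stay at lr_min afterwards
    return lr_min + 0.5 * (lr_max - lr_min) * (1.0 + math.cos(math.pi * progress))
```

Over 100 steps, the rate starts at 0.1, passes about 0.05 at the halfway point, and reaches 0.0 at the end. The decay is slow at first, fastest in the middle, and gentle again near the end.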

K-Means Clustering

K-means is an unsupervised algorithm that partitions data into K clusters by iteratively assigning points to the nearest centroid and updating those centroids. It assumes that clusters are roughly spherical and similar in size, which often does not hold in real data. In interviews, expect questions about when k-means may fail, such as with non-convex or imbalanced clusters, and how to mitigate this (e.g., by using DBSCAN or Gaussian Mixture Models). You should also be able to explain why initialization matters and how k-means++ improves the algorithm’s stability.

K-Means minimizes within-cluster variance, but it is sensitive to where the initial centroids are placed. Poor initialization can lead to:

- Slow convergence

- Poor local minima

- Merged or empty clusters

How K-Means++ helps:

K-Means++ selects the first centroid uniformly at random. It then chooses each subsequent centroid with probability proportional to the squared distance from a point to its nearest already-chosen centroid. This promotes diverse, well-separated starting points, which leads to more stable and accurate clustering, particularly on real-world, imbalanced datasets.
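Here is a minimal sketch of k-means++ seeding followed by standard Lloyd iterations (assign, then update), using only the standard library. It assumes points are tuples of numbers and that there are at least `k` distinct points (otherwise every remaining sampling weight is zero and `random.choices` raises an error). For simplicity, it runs a fixed number of iterations instead of checking for convergence. Function names are illustrative.

```python
import random


def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans_pp_init(points, k, rng):
    """k-means++ seeding: each new centroid is sampled with probability proportional to D(x)^2,
    the squared distance from x to its nearest already-chosen centroid."""
    centroids = [rng.choice(points)]  # first centroid: uniform at random
    while len(centroids) < k:
        d2 = [min(sq_dist(p, c) for c in centroids) for p in points]
        centroids.append(rng.choices(points, weights=d2, k=1)[0])
    return centroids


def kmeans(points, k, iters=20, seed=0):
    """Lloyd's algorithm from k-means++ seeds (fixed iteration count for simplicity)."""
    rng = random.Random(seed)
    centroids = kmeans_pp_init(points, k, rng)
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for p in points:  # assignment step: nearest centroid
            nearest = min(range(k), key=lambda i: sq_dist(p, centroids[i]))
            clusters[nearest].append(p)
        centroids = [  # update step: mean of each cluster (keep the old centroid if empty)
            tuple(sum(coords) / len(cluster) for coords in zip(*cluster)) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
    return centroids
```

Already-chosen points have zero weight, so the seeding never picks the same point twice. Points far from every current centroid are the most likely picks. In practice, scikit-learn’s `KMeans` uses k-means++ seeding by default.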

A function is convex if the line segment connecting any two points on its graph lies on or above the graph. As a consequence, every local minimum of a convex function is also a global minimum.
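Written as an inequality (the standard definition, stated for a function on a convex domain), the left side evaluates the function along the segment between x and y, and the right side is the chord itself:

```latex
f\bigl(\theta x + (1 - \theta) y\bigr) \;\le\; \theta f(x) + (1 - \theta) f(y)
\qquad \text{for all } x, y \text{ in the domain and all } \theta \in [0, 1]
```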

Why Convexity Matters in Machine Learning

In convex problems, optimization algorithms like gradient descent are guaranteed to converge to a global minimum (assuming suitable step sizes and that a minimum exists). In non-convex problems, such as training deep neural networks, the loss surface can have multiple local minima, saddle points, and plateaus. Optimization becomes harder, and there is no guarantee of reaching the global optimum.
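The contrast is easy to see in one dimension. This sketch runs plain gradient descent on two functions. The convex f(x) = x² reaches the same minimum from any start. The non-convex g(x) = x⁴ - 3x² + x has two local minima, so the result depends on where you start. Both functions, the learning rate, and the step count are illustrative choices.

```python
def gradient_descent(grad, x0, lr=0.01, steps=1000):
    """Plain gradient descent in 1-D: x <- x - lr * f'(x)."""
    x = x0
    for _ in range(steps):
        x -= lr * grad(x)
    return x


def f(x):  # convex: a single global minimum at 0
    return x * x


def f_grad(x):
    return 2 * x


def g(x):  # non-convex: two separate local minima
    return x**4 - 3 * x**2 + x


def g_grad(x):
    return 4 * x**3 - 6 * x + 1
```

For g, starting at x = 2 lands in the shallower minimum near x ≈ 1.13, while starting at x = -2 reaches the global minimum near x ≈ -1.30. For f, both starting points end at 0.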

Linear algebra is crucial in ML because it describes how data is represented and transformed. Many algorithms rely on matrix operations, so understanding these concepts is key to analyzing performance, optimization, and dimensionality.

Matrix Multiplication

What it is: Combines matrices to perform linear transformations; it is the core of neural network layers (e.g., y = Wx + b).

In ML: Used in forward and backward passes, attention mechanisms, and PCA. It encodes how models transform inputs into learned representations.

Interview Q&A:

Q: What is the time and space complexity of matrix multiplication?

A: For two dense matrices of size m×n and n×p, the standard algorithm takes O(mnp) time and O(mp) space for the output. With sparse matrices, these costs can be reduced significantly.
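The O(mnp) count falls straight out of the textbook triple loop. This pure-Python sketch (lists of rows, no libraries) is for illustration only. Real workloads call optimized BLAS routines through NumPy or a deep learning framework.

```python
def matmul(a, b):
    """Naive dense product of an m x n matrix and an n x p matrix (lists of rows)."""
    m, n, p = len(a), len(b), len(b[0])
    if any(len(row) != n for row in a):
        raise ValueError("inner dimensions must match")
    out = [[0.0] * p for _ in range(m)]  # O(mp) space for the result
    for i in range(m):
        for k in range(n):
            a_ik = a[i][k]
            for j in range(p):  # m * n * p multiply-adds in total
                out[i][j] += a_ik * b[k][j]
    return out
```

For example, `matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])` returns `[[19.0, 22.0], [43.0, 50.0]]`.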

Q: Why is matrix multiplication efficient on GPUs?

A: GPUs are designed for massively parallel operations, and matrix multiplication is highly parallelizable: each output entry can be computed independently and simultaneously. Libraries such as cuBLAS, and hardware units such as NVIDIA’s Tensor Cores, are built to exploit this structure.

Q: How can you optimize matrix multiplication in a large neural network?

A:

- Use sparse matrices when applicable (e.g., embeddings). Sparse representations reduce memory and computation by storing only non-zero entries, using formats such as Compressed Sparse Row (CSR) or Coordinate (COO).

- Use batched operations to increase throughput.

- Apply reduced precision (e.g., FP16 or INT8) to lower memory and compute costs, if your hardware supports it.

- For huge matrices, consider a low-rank approximation to reduce the number of parameters and the compute cost.
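To make the CSR format concrete, here is a minimal sketch. It builds the three CSR arrays (values, column indices, row pointers) from a small dense matrix, then multiplies the matrix by a vector while touching only the stored non-zeros. In practice you would use a library implementation such as SciPy’s `scipy.sparse.csr_matrix`; these helper names are illustrative.

```python
def to_csr(dense):
    """Convert a dense matrix (list of rows) into CSR arrays (values, col_indices, row_ptr)."""
    values, col_indices, row_ptr = [], [], [0]
    for row in dense:
        for j, v in enumerate(row):
            if v != 0:
                values.append(v)
                col_indices.append(j)
        row_ptr.append(len(values))  # row r's entries live in [row_ptr[r], row_ptr[r + 1])
    return values, col_indices, row_ptr


def csr_matvec(values, col_indices, row_ptr, x):
    """Compute A @ x using only the stored non-zeros: O(nnz) work instead of O(rows * cols)."""
    return [
        sum(values[t] * x[col_indices[t]] for t in range(row_ptr[r], row_ptr[r + 1]))
        for r in range(len(row_ptr) - 1)
    ]
```

For `[[0, 0, 3], [4, 0, 0], [0, 5, 6]]`, only four numbers are stored instead of nine, and multiplying by `[1, 2, 3]` gives `[9, 4, 28]`.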

Q: What is the intuition behind matrix multiplication in neural nets?

A: Each row of the weight matrix computes one weighted combination of the input features, producing one output unit. Multiplying by the whole matrix computes many such combinations at once, and stacking layers lets the network extract patterns and transform them into higher-level representations.

Dot Product

What it is: The dot product of two vectors, a⋅b = ∑ a_i b_i, measures their alignment.

Why it matters: It is the foundation of similarity measures (e.g., cosine similarity) and projections. In ML, it shows up in attention mechanisms, recommendation systems, and latent space comparisons.

Interview Q&A

Q: How is similarity measured between vectors in ML?

A: The dot product measures how aligned two vectors are. Dividing it by the product of the vectors’ lengths gives cosine similarity, a scale-invariant measure. For example, in NLP we compare word embeddings using cosine similarity. It is computationally cheap (linear in the vector dimension) and works well in high-dimensional spaces.
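Here is a minimal sketch of the dot product and cosine similarity in plain Python. It assumes non-zero vectors (cosine similarity is undefined for a zero vector), and the function names are illustrative.

```python
import math


def dot(a, b):
    """a . b = sum_i a_i * b_i, linear in the vector length."""
    return sum(x * y for x, y in zip(a, b))


def cosine_similarity(a, b):
    """Dot product of the length-normalized vectors; lies in [-1, 1] (undefined for zero vectors)."""
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))
```

Scaling a vector changes the dot product but not the cosine similarity. For example, `cosine_similarity([1, 2, 3], [2, 4, 6])` is 1.0 (up to rounding) even though one vector is twice as long, and orthogonal vectors score 0.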

Eigenvalues and Eigenvectors

What they are: For a square matrix A, if Av = λv for some non-zero vector v, then v is an eigenvector of A and λ is its eigenvalue.

Why they matter: They are key to dimensionality reduction (PCA), spectral clustering, and analyzing system dynamics.

Interview Q&A

Q: How does PCA reduce dimensionality?

A: PCA computes the eigenvectors of the covariance matrix. The top k eigenvectors, ranked by eigenvalue, are the principal components; they capture the directions of greatest variance. We project the data onto these directions to reduce dimensions while retaining as much variance as possible.

Complexity: A full eigendecomposition of an n×n matrix costs O(n^3), so for large-scale data we use truncated SVD to compute only the top components.
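For intuition, the top principal component can be found without a full eigendecomposition by power iteration: repeatedly multiply a vector by the covariance matrix and renormalize. This is a swapped-in illustration, not how production PCA is computed (libraries use SVD-based routines). The minimal sketch assumes the covariance matrix has a unique largest eigenvalue and that the all-ones starting vector is not orthogonal to its eigenvector. Function names are illustrative.

```python
import math


def center(data):
    """Subtract each feature's mean so the data is centered at the origin."""
    d = len(data[0])
    means = [sum(row[j] for row in data) / len(data) for j in range(d)]
    return [[row[j] - means[j] for j in range(d)] for row in data]


def covariance(centered):
    """Sample covariance matrix (divides by n - 1) of already-centered rows."""
    n, d = len(centered), len(centered[0])
    return [[sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)] for i in range(d)]


def matvec(m, v):
    return [sum(m_ij * v_j for m_ij, v_j in zip(row, v)) for row in m]


def top_eigenpair(m, iters=200):
    """Power iteration for the dominant eigenvalue/eigenvector of a symmetric PSD matrix."""
    v = [1.0] * len(m)
    for _ in range(iters):
        w = matvec(m, v)
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    eigenvalue = sum(a * b for a, b in zip(v, matvec(m, v)))  # Rayleigh quotient, |v| = 1
    return eigenvalue, v


def first_principal_component(data):
    """Return (direction, explained variance, 1-D projections) for the top component."""
    centered = center(data)
    eigenvalue, pc = top_eigenpair(covariance(centered))
    scores = [sum(a * b for a, b in zip(row, pc)) for row in centered]
    return pc, eigenvalue, scores
```

For the points `(2, 0), (0, 1), (-2, 0), (0, -1)`, the spread along x is larger, so the first component is (approximately) the x-axis with variance 8/3. Projecting onto it reduces each 2-D point to a single number.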

Q: When would you avoid PCA?

A: When the important structure in the data is nonlinear, or when the top components (the directions of highest variance) don’t separate the classes you care about. In such cases, kernel PCA or autoencoders may be more effective.

Rank

What it is: The rank of a matrix is the number of linearly independent rows (equivalently, columns). It reveals the intrinsic dimensionality of the data.

Why it matters: Rank helps detect feature redundancy, determines invertibility, and is key in matrix factorization (e.g., in collaborative filtering or LSA).

Interview Q&A

Q: Why does rank matter in ML?

A: A rank-deficient data matrix means some features are linearly dependent. In models like linear regression, this causes problems through multicollinearity.

Fixes include:

- Feature selection to drop redundant columns.

- Regularization (e.g., Ridge) to stabilize coefficient estimates.

- Using SVD to approximate the matrix in a lower-dimensional space.
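A quick way to see rank deficiency is Gaussian elimination: count the pivots that survive. This minimal sketch uses a hypothetical tolerance `tol` to absorb floating-point round-off. In practice you would call `numpy.linalg.matrix_rank`, which uses the SVD and is more robust.

```python
def matrix_rank(rows, tol=1e-10):
    """Rank via Gaussian elimination with partial pivoting (rows = list of lists)."""
    m = [[float(v) for v in row] for row in rows]
    n_rows, n_cols = len(m), len(m[0])
    rank = 0
    for col in range(n_cols):
        # Pick the largest remaining entry in this column as the pivot.
        pivot = max(range(rank, n_rows), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < tol:
            continue  # no usable pivot: this column adds no new direction
        m[rank], m[pivot] = m[pivot], m[rank]
        for r in range(rank + 1, n_rows):  # zero out the entries below the pivot
            factor = m[r][col] / m[rank][col]
            for c in range(col, n_cols):
                m[r][c] -= factor * m[rank][c]
        rank += 1
        if rank == n_rows:
            break  # every row already has a pivot
    return rank
```

For a data matrix whose third feature is the sum of the first two, such as `[[1, 0, 1], [0, 1, 1], [1, 1, 2], [2, 1, 3]]`, the rank is 2 rather than 3. That redundancy is exactly what breaks ordinary least squares.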

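Q: How does low rank benefit us?

A: A low-rank matrix can be stored and processed in compressed form. An m×n matrix of rank r factors into an m×r and an r×n matrix, so it needs r(m + n) numbers instead of mn. This is useful in large-scale recommender systems and embedding tables.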

Invertibility

What it is: A square matrix A is invertible if there exists a matrix A^−1 such that AA^−1 = A^−1A = I. Only square, full-rank matrices are invertible.

Why it matters: In ML, matrix inversion appears in closed-form solutions (e.g., the normal equations for linear regression), but it can be computationally expensive and numerically unstable.

Interview Q&A

Q: When does matrix inversion fail?

A: Inversion fails if the matrix is not full-rank (i.e., it has dependent features). It becomes unreliable if the matrix is ill-conditioned (nearly singular), which leads to unstable solutions.

Solutions:

- Use the pseudo-inverse (computed via SVD) when inversion is not possible.

- Prefer iterative methods (like gradient descent) for large datasets; they avoid explicit inversion.

- Add regularization (e.g., Ridge Regression): adding λI to X^T X with λ > 0 makes it invertible and better conditioned.
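The ridge fix can be seen on a tiny example with two perfectly collinear features, where X^T X is singular. This minimal sketch handles exactly two features. It solves the 2×2 system (X^T X + λI)w = X^T y directly with Cramer’s rule rather than forming an explicit inverse. The data and λ values are illustrative.

```python
def solve_2x2(a, rhs, tol=1e-12):
    """Solve a 2x2 linear system by Cramer's rule; raise if the matrix is singular."""
    det = a[0][0] * a[1][1] - a[0][1] * a[1][0]
    if abs(det) < tol:
        raise ValueError("matrix is singular (not invertible)")
    return [(a[1][1] * rhs[0] - a[0][1] * rhs[1]) / det,
            (a[0][0] * rhs[1] - a[1][0] * rhs[0]) / det]


def ridge_two_features(X, y, lam):
    """Normal equations with an L2 penalty: (X^T X + lam * I) w = X^T y."""
    xtx = [[sum(row[i] * row[j] for row in X) for j in range(2)] for i in range(2)]
    xty = [sum(row[i] * t for row, t in zip(X, y)) for i in range(2)]
    a = [[xtx[0][0] + lam, xtx[0][1]], [xtx[1][0], xtx[1][1] + lam]]
    return solve_2x2(a, xty)
```

With `X = [[1, 2], [2, 4], [3, 6]]` (the second column is twice the first), `lam=0.0` raises an error because X^T X is singular. With `lam=1.0`, the solver returns finite weights. Ridge splits the weight between the redundant features (`w[1]` equals `2 * w[0]` here) instead of failing.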

Likelihood

What it is: Likelihood measures how probable the observed data is under a specific set of parameters in a given model.

Example: For a Gaussian distribution, the likelihood of seeing a particular dataset depends on the assumed mean and variance.

Interview Insight: Be prepared to explain that likelihood is not the same as probability: it is a function of the parameters, given the data.
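To see likelihood as a function of the parameters with the data held fixed, this sketch evaluates the Gaussian log-likelihood of one small, made-up dataset at different (μ, σ) pairs. It also computes the closed-form maximum likelihood estimates: the sample mean and the square root of the 1/n variance. Function names and data are illustrative.

```python
import math


def gaussian_log_likelihood(data, mu, sigma):
    """log L(mu, sigma | data): the data is fixed, the parameters are the arguments."""
    n = len(data)
    return (-n * math.log(sigma * math.sqrt(2 * math.pi))
            - sum((x - mu) ** 2 for x in data) / (2 * sigma ** 2))


def gaussian_mle(data):
    """Closed-form maximizers: the sample mean and the square root of the 1/n variance."""
    n = len(data)
    mu = sum(data) / n
    sigma = math.sqrt(sum((x - mu) ** 2 for x in data) / n)
    return mu, sigma
```

For the data `[2, 4, 4, 4, 5, 5, 7, 9]`, the estimates are μ = 5 and σ = 2. Moving either parameter away from those values (for example, to μ = 4 or σ = 3) lowers the log-likelihood.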

Maximum Likelihood Estimation (MLE)

What it is: MLE chooses the parameters that make the observed data most probable.

How it works: You define the likelihood function and take its log (the log-likelihood, which turns products into sums). Then you compute gradients and optimize. For some models, such as the Gaussian, the maximizer has a closed form.

Example: In logistic regression, you use MLE to fit the weights that maximize the probability of observing the actual labels.

Interview Insight: “MLE fits parameters by maximizing the likelihood of the observed data. For classification, this is equivalent to minimizing cross-entropy loss.”

Q: “How is MLE related to cross-entropy loss in classification?”

A: “Minimizing cross-entropy is equivalent to maximizing the likelihood under a Bernoulli (for binary) or categorical (for multi-class) distribution. So training a logistic regression model with cross-entropy loss is performing MLE.”
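The equivalence can be checked numerically: the negative log of the Bernoulli likelihood of the labels equals the summed binary cross-entropy. This minimal sketch uses made-up labels and predicted probabilities. It multiplies probabilities directly, which is fine for three examples but would underflow on real datasets; that is one reason to work with log-likelihoods. `math.prod` requires Python 3.8 or later.

```python
import math


def bernoulli_nll(labels, probs):
    """-log prod_i P(y_i | p_i) for 0/1 labels under Bernoulli predictions."""
    likelihood = math.prod(p if y == 1 else 1.0 - p for y, p in zip(labels, probs))
    return -math.log(likelihood)


def binary_cross_entropy(labels, probs):
    """Summed binary cross-entropy: -sum_i [y_i log p_i + (1 - y_i) log(1 - p_i)]."""
    return -sum(y * math.log(p) + (1 - y) * math.log(1.0 - p) for y, p in zip(labels, probs))
```

For labels `[1, 0, 1]` and predictions `[0.9, 0.2, 0.6]`, both functions return -log(0.9 × 0.8 × 0.6) ≈ 0.84.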

Bayes’ Theorem

What it is: A formula for updating beliefs in light of new data: P(θ | D) = P(D | θ) P(θ) / P(D), or in words, Posterior ∝ Likelihood × Prior.

Interpretation: The posterior represents our beliefs after seeing the data.

Use in ML: Bayesian inference (e.g., the Naive Bayes classifier, Bayesian neural nets).

Interview Insight: “While MLE uses only the data, Bayesian methods incorporate prior beliefs and update them using observed evidence.”
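A discrete example shows why the prior matters. The numbers are hypothetical: a condition with 1% prevalence and a test with 90% sensitivity and a 5% false-positive rate. The sketch computes the posterior for each hypothesis as likelihood × prior, normalized by the evidence P(D).

```python
def bayes_update(prior, likelihood):
    """Posterior P(h | D) = P(D | h) P(h) / P(D) over a finite set of hypotheses h."""
    unnormalized = {h: likelihood[h] * p for h, p in prior.items()}  # likelihood x prior
    evidence = sum(unnormalized.values())  # P(D)
    return {h: v / evidence for h, v in unnormalized.items()}
```

Calling `bayes_update({"disease": 0.01, "healthy": 0.99}, {"disease": 0.9, "healthy": 0.05})` for a positive test gives a posterior probability of disease of only about 15% (0.009 / 0.0585 ≈ 0.154). The low prior outweighs the test’s accuracy. MLE ignores the prior, so it would simply pick the hypothesis with the higher likelihood.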

Constrained Optimization

When it matters: Sometimes parameters must satisfy constraints, such as probabilities summing to one (e.g., softmax outputs).

Approach: Use Lagrange multipliers to convert a constrained problem into an unconstrained one that can be solved by setting gradients to zero.
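As a worked example (a standard derivation, added here for illustration), consider the MLE of a categorical distribution from class counts n_1 through n_K, with N = Σ_k n_k. We maximize the log-likelihood subject to the probabilities summing to one:

```latex
\begin{aligned}
&\max_{p}\ \sum_{k=1}^{K} n_k \log p_k \quad \text{subject to} \quad \sum_{k=1}^{K} p_k = 1 \\
&\mathcal{L}(p, \lambda) = \sum_{k=1}^{K} n_k \log p_k + \lambda \Bigl(1 - \sum_{k=1}^{K} p_k\Bigr) \\
&\frac{\partial \mathcal{L}}{\partial p_k} = \frac{n_k}{p_k} - \lambda = 0 \;\Rightarrow\; p_k = \frac{n_k}{\lambda} \\
&\sum_{k=1}^{K} p_k = 1 \;\Rightarrow\; \lambda = \sum_{k=1}^{K} n_k = N \;\Rightarrow\; p_k = \frac{n_k}{N}
\end{aligned}
```

The constrained MLE is therefore the empirical class frequency, and the multiplier λ equals the total count N.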

Example: Softmax satisfies this constraint by construction. Exponentiating the scores and dividing by their sum guarantees that every output lies between 0 and 1 and that the outputs sum to 1, so the result is always a valid probability distribution.

Interview Insight: “When a model requires valid probability outputs, we enforce the constraints through a parameterization such as softmax, or we apply constrained optimization with Lagrange multipliers.”
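Here is a minimal softmax sketch. Subtracting the maximum score before exponentiating is a standard numerical-stability trick. It leaves the result mathematically unchanged but prevents `math.exp` from overflowing on large scores.

```python
import math


def softmax(scores):
    """Map real-valued scores to probabilities that are positive and sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]  # shifting by the max avoids overflow
    total = sum(exps)
    return [e / total for e in exps]
```

Without the shift, `softmax([1000.0, 1000.0])` would overflow. With it, the result is exactly `[0.5, 0.5]`.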

To conclude, grasping concepts like likelihood, MLE, Bayes’ Theorem, and constrained optimization isn’t only about formulas. It’s about understanding how models learn from data, update beliefs, and respect real-world constraints. These principles form the backbone of machine learning. Explaining them intuitively in an interview shows genuine depth of understanding, not just memorized knowledge.