Last week I was confused about attention mechanisms in transformers, so I watched four 25–45-minute videos and read the “Attention Is All You Need” paper. Here’s what clicked for me.

Before 2017, there were neural networks called RNNs (Recurrent Neural Networks) and CNNs (Convolutional Neural Networks) that could handle sequential data like text and speech, but they had major pain points: they were slow and couldn’t scale effectively. Sequence order and position mattered, so RNNs in particular processed data one step at a time, which made them hard to parallelize.

In 2017, the paper “Attention Is All You Need” introduced transformers and changed everything. When you hear “GPT,” the “T” stands for transformer. These models generate text sequentially, one token at a time, but they process all inputs simultaneously. The breakthrough is in parallelism.

1. Tokenization

The first step is to split the input into chunks called tokens, such as words. They’re not always whole words. In fact, th_e text_mig_ht be s_plit in_to_chunk_s like_this, but for now, let’s think of them as words for simplicity.
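To make the idea of sub-word chunks concrete, here is a toy greedy longest-match tokenizer. This is a minimal sketch, not how GPT’s tokenizer actually works: real tokenizers (such as byte-pair encoding) learn their vocabulary from data, and the vocabulary below is made up for the example.

```python
def tokenize(text, vocab):
    """Split each word greedily into the longest pieces found in `vocab`.

    Toy illustration only: the vocabulary is hand-written and hypothetical,
    and spaces/punctuation are handled far more simply than in real tokenizers.
    """
    tokens = []
    for word in text.split():
        start = 0
        while start < len(word):
            # Try the longest possible piece first, then shrink it.
            end = len(word)
            while end > start and word[start:end] not in vocab:
                end -= 1
            if end == start:
                # No vocabulary piece matches: fall back to a single character.
                end = start + 1
            tokens.append(word[start:end])
            start = end
    return tokens


# Hypothetical vocabulary, made up for this example.
vocab = {"the", "text", "might", "be", "split", "into", "chunk", "s", "like", "this"}
print(tokenize("the text might be split into chunks like this", vocab))
# ['the', 'text', 'might', 'be', 'split', 'into', 'chunk', 's', 'like', 'this']
```

Note how “chunks” becomes two tokens, “chunk” and “s”, because only those pieces are in the vocabulary.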

2. Embedding

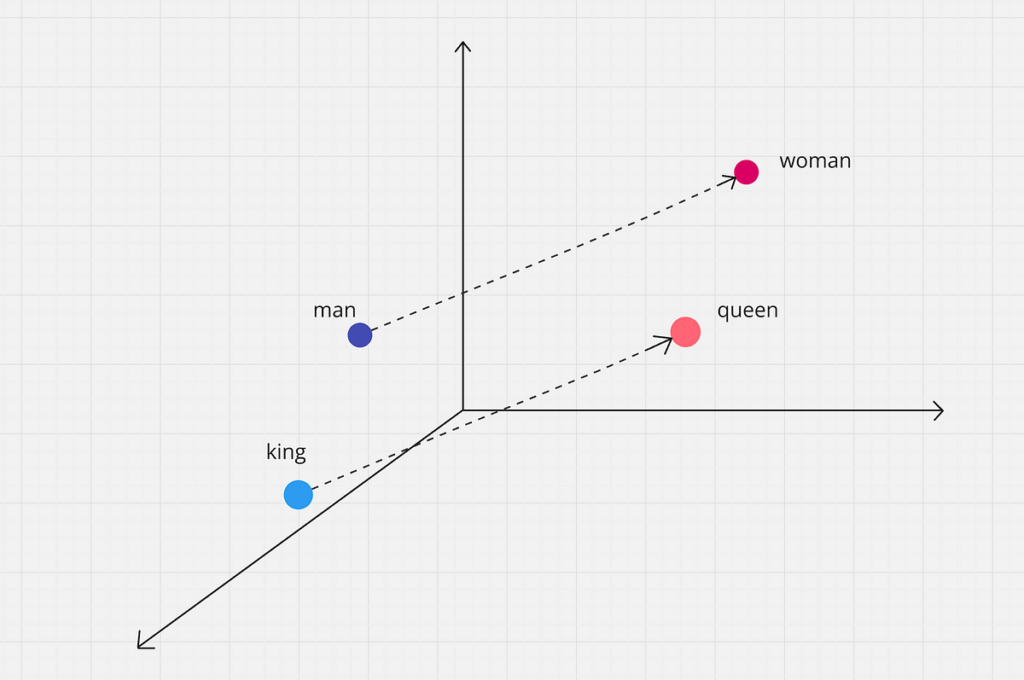

Each token is mapped to a high-dimensional vector. Suppose you take all the words (say, around 50,000 of them) and give each of them its own vector in space. You know what a 3D coordinate system looks like. Now imagine (you won’t be able to) a space with 12,288 dimensions instead of just 3. That’s the number of dimensions in GPT-3. Essentially, embedding is the step where each word gets its own column in a matrix, holding a specific high-dimensional vector.
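In code, an embedding is just a lookup into that matrix. The sketch below follows the column picture above; the tiny dimension, the random values (a trained model learns them), and the token IDs are all assumptions made for illustration.

```python
import numpy as np

vocab_size = 50_000   # roughly the vocabulary size mentioned above
d_model = 8           # tiny stand-in for GPT-3's 12,288 dimensions

rng = np.random.default_rng(0)
# One column per token, matching the "each word gets its own column" picture.
# In a real model these values are learned during training; here they are random.
W_E = rng.normal(size=(d_model, vocab_size))

def embed(token_ids):
    """Return a (d_model, len(token_ids)) matrix: one embedding column per token."""
    return W_E[:, token_ids]

token_ids = [17, 4203, 911]   # hypothetical IDs produced by a tokenizer
X = embed(token_ids)
print(X.shape)  # (8, 3)
```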

I’m going to test your intuition now. Where do you think words like robotics, automation, and mountain would sit in this space? Which ones are going to be closer to each other and which ones are farther apart? Here’s a picture of the embedding space for you so that you don’t read the answer from the next paragraph:

I guess you said robotics and automation are located closer together, while mountain is somewhere far away. That’s what happens in the embedding space: similar words cluster together. Now, how close do you think CS major and unemployment are? Jk, let’s move on.
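“Closeness” in embedding space is often measured with cosine similarity. Here is a minimal sketch using three hand-picked 3D vectors; the numbers are invented for illustration and are not real embeddings from any model.

```python
import numpy as np

# Made-up 3D vectors chosen by hand for illustration; real embeddings are learned
# and have thousands of dimensions.
embeddings = {
    "robotics":   np.array([0.9, 0.8, 0.1]),
    "automation": np.array([0.8, 0.9, 0.2]),
    "mountain":   np.array([0.1, 0.2, 0.9]),
}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1 = same direction, 0 = orthogonal."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

for other in ("automation", "mountain"):
    sim = cosine_similarity(embeddings["robotics"], embeddings[other])
    print(f"robotics vs {other}: {sim:.2f}")
```

With these toy vectors, robotics scores much higher against automation than against mountain, which is the clustering described above.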

3. Positional Encoding

RNNs handle sequence order through their recurrent structure (processing tokens one after another), and CNNs do it through a sliding-window approach, but transformers use neither. That was a problem, so transformers needed another way to know the positions of words, e.g. to tell the difference in meaning between “the cat sat on the mat” and “the mat sat on the cat”. This is done by adding positional information to each token’s embedding vector using sine and cosine functions.

Technical note. Feel free to skip this part, but if you’re curious about the math behind it, read on.

We add position information that has the same dimension d as our word embedding so that the two can be summed. For even dimensions 2i, they use sin(pos/10000^(2i/d)), and for odd dimensions 2i+1, they use cos(pos/10000^(2i/d)), where pos is the position and i indexes the pairs of dimensions. This way, each position gets a unique pattern with different frequencies.
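The two formulas translate almost line by line into NumPy. This sketch assumes d is even and stores one position per row; the sizes in the usage lines are arbitrary.

```python
import numpy as np

def positional_encoding(max_len, d):
    """Sinusoidal positional encodings, one row per position (d assumed even).

    PE[pos, 2i]   = sin(pos / 10000**(2i/d))
    PE[pos, 2i+1] = cos(pos / 10000**(2i/d))
    """
    pos = np.arange(max_len)[:, None]             # shape (max_len, 1)
    two_i = np.arange(0, d, 2)[None, :]           # even dimension indices 0, 2, 4, ...
    angles = pos / np.power(10000.0, two_i / d)   # shape (max_len, d/2)
    pe = np.zeros((max_len, d))
    pe[:, 0::2] = np.sin(angles)   # even dimensions
    pe[:, 1::2] = np.cos(angles)   # odd dimensions
    return pe

pe = positional_encoding(max_len=50, d=16)
# Embeddings and encodings share the same dimension, so they are simply added:
# x = token_embeddings + pe[:seq_len]
print(pe.shape)  # (50, 16)
```

Low dimensions oscillate quickly and high dimensions slowly, so every row ends up with a different pattern.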

For any fixed offset k, the encoding at position pos+k can be written as a linear function of the encoding at position pos. How? We want to compute the relationship between positions pos and pos+k. Let’s say ω = 1/10000^(2i/d). This way, we’re comparing sin(pos * ω) and sin((pos+k) * ω). Using the trigonometric addition formulas, we know that:

sin(A + B) = sin(A)cos(B) + cos(A)sin(B)

cos(A + B) = cos(A)cos(B) − sin(A)sin(B)

Therefore, sin((pos+k) * ω) = sin(posω + kω) = sin(posω)cos(kω) + cos(posω)sin(kω). Here, sin(kω) and cos(kω) are constants for a fixed k, which means that sin((pos+k) * ω) is just a linear combination of sin(posω) and cos(posω), the values we already have in the positional encoding. The same holds for the cosine: cos((pos+k) * ω) = cos(posω)cos(kω) − sin(posω)sin(kω). The paper’s authors hypothesized that this property would make it easy for the model to learn to attend by relative positions.
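The linearity claim can be checked numerically. The sketch below picks one frequency ω (the values of d, i, and k are arbitrary choices) and builds the 2×2 matrix that the two addition formulas imply; it depends only on k, yet maps the (sin, cos) pair at any pos to the pair at pos+k.

```python
import numpy as np

d, i = 16, 3                            # embedding size and frequency index (arbitrary choices)
omega = 1.0 / 10000 ** (2 * i / d)      # ω = 1 / 10000^(2i/d)

def pair(pos):
    """The (sin, cos) pair of the positional encoding at frequency ω."""
    return np.array([np.sin(pos * omega), np.cos(pos * omega)])

k = 5
# Rows come straight from the addition formulas; the matrix depends only on k, not on pos.
M = np.array([[ np.cos(k * omega), np.sin(k * omega)],
              [-np.sin(k * omega), np.cos(k * omega)]])

for pos in (0, 7, 42):
    print(pos, np.allclose(M @ pair(pos), pair(pos + k)))
```

Each line prints True, because M is just a rotation by the angle kω.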

4. Self-attention

This is the key mechanism in the transformer architecture. It allows each element to relate directly to any other element in the sequence, regardless of the distance between them.

Attention. When I say football, where does your attention land? Do you see proper European football stadiums, Champions League games, or Cristiano Ronaldo? Or do you picture an oval brown ball and people in helmets (basically, are you American)? See, the same word can mean different things in different contexts, and that’s exactly what an attention mechanism helps with. It looks at the context surrounding the word football and pulls its embedding vector closer to the words from the same context in the embedding space.

Let’s take another example. The word spider has a certain embedding vector. What did you imagine? Just a small arachnid. Now what if you add man to it? Spider-Man. Is the cartoonish version of the famous superhero coming to mind? Or maybe Tom Holland? When you add amazing before Spider-Man, your brain might shift to thinking about Andrew Garfield instead. In the embedding space, the vector for the token spider moves closer to the vectors representing the superhero area or toward the Andrew Garfield zone, depending on the contextual words surrounding it. With each new piece of context, the position of the vector gets updated. Each word’s meaning gets pulled closer to or farther from other meanings based on the surrounding context. So attention checks which words are relevant for updating the meaning of our initial word.

Let’s get back to the definition of self-attention from the paper.

Self-attention, sometimes called intra-attention, is an attention mechanism relating different positions of a single sequence in order to compute a representation of the sequence.

Now we can break down this definition piece by piece:

- Positions of a sequence: Look at this sentence: “What does this mean?” Each word has a position: “What” is position 1, “does” is position 2, “this” is position 3, and so on.

- Relating different positions: Self-attention lets each position look at every other position in the sequence. So “What” connects to all the other words in the sequence: does, this, mean. Every other word connects to all the other words in the same way.

- Self or intra-attention: The attention happens within the same sequence. Whereas other attention mechanisms look at a different sequence, such as a translator looking at a sentence in another language, this one looks at itself (it stays within the same text).

- Computing a representation: For each position, self-attention creates a new representation that’s influenced by its context. It essentially figures out what each word means in context. The final result is a weighted mix of information from all positions, where positions with heavier weights have more influence.
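The four bullet points can be seen in a stripped-down sketch. It deliberately omits the learned Query, Key, and Value matrices discussed later in this article and compares raw (here random, untrained) embeddings directly, so it only illustrates the “every position scores every position, then takes a weighted mix” idea.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)   # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def simple_self_attention(X):
    """X has one row per position. Every position scores every position,
    and its new representation is the weighted mix of all rows."""
    scores = X @ X.T / np.sqrt(X.shape[1])    # (n, n) similarity of each pair of positions
    weights = softmax(scores, axis=-1)        # each row sums to 1
    return weights @ X, weights

rng = np.random.default_rng(0)
X = rng.normal(size=(4, 8))   # 4 positions standing in for What, does, this, mean
out, weights = simple_self_attention(X)
print(weights.round(2))       # row j: how much position j draws from each position
```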

Transformer architecture

Now that we understand the attention mechanism and the other components, let’s zoom out a bit and look at the whole transformer architecture:

This figure might seem complicated, but it’s not. The whole left side is the encoder, which processes the input and captures the relationships within it, and the right side is the decoder, which generates the output sequence based on the encoder’s representation.

The encoder has two main sub-layers (each wrapped in a residual connection followed by layer normalization, for stability):

- Multi-head attention: Many attention heads work on the same input sequence simultaneously, each capturing different aspects of it. In GPT-3, there are 96 attention heads working in parallel in each layer.

- Feed-forward network (or multi-layer perceptron): After gathering all the context, the transformer passes each position’s vector through a regular neural network, the same one for every position.
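The feed-forward sub-layer is small enough to write out in full. This sketch uses the form from the original paper, max(0, xW1 + b1)W2 + b2, with a hidden size four times the model size (the paper’s ratio); the sizes and random weights are placeholders for learned parameters.

```python
import numpy as np

def feed_forward(X, W1, b1, W2, b2):
    """Position-wise feed-forward network: the same two-layer MLP is applied
    to every row (position) independently: max(0, X W1 + b1) W2 + b2."""
    hidden = np.maximum(0.0, X @ W1 + b1)   # ReLU, as in the original paper
    return hidden @ W2 + b2

d_model, d_ff = 8, 32          # d_ff = 4 * d_model
rng = np.random.default_rng(0)
W1, b1 = rng.normal(size=(d_model, d_ff)), np.zeros(d_ff)
W2, b2 = rng.normal(size=(d_ff, d_model)), np.zeros(d_model)

X = rng.normal(size=(5, d_model))   # 5 positions
Y = feed_forward(X, W1, b1, W2, b2)
print(Y.shape)  # (5, 8)
```

Because each row is processed on its own, running one position through the network gives the same result as running it as part of the whole sequence.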

The decoder is similar to the encoder but has an extra layer called masked self-attention. We don’t want to look into the future, so we set the attention scores for all future positions (illegal connections in the sequence) to be extremely small, i.e. negative infinity, before the softmax, which turns their weights into zero. That’s how masked self-attention “masks” all future values. Then a second attention layer (encoder-decoder attention) focuses on the relevant parts of the encoder output, and the result moves on to the feed-forward network.
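Here is a minimal sketch of the masking step, assuming an (n, n) matrix of raw attention scores where row j belongs to position j. The scores are random placeholders; only the masking logic matters.

```python
import numpy as np

def masked_attention_weights(scores):
    """Apply a causal mask to an (n, n) score matrix, then softmax each row.

    Position j may only look at positions 0..j; scores for "future" positions
    are set to -inf, so exp(-inf) = 0 gives them zero weight.
    """
    n = scores.shape[0]
    future = np.triu(np.ones((n, n), dtype=bool), k=1)    # True above the diagonal
    masked = np.where(future, -np.inf, scores)
    masked = masked - masked.max(axis=-1, keepdims=True)  # stability; the diagonal is never masked
    e = np.exp(masked)
    return e / e.sum(axis=-1, keepdims=True)

scores = np.random.default_rng(0).normal(size=(4, 4))
print(masked_attention_weights(scores).round(2))  # upper triangle is all zeros
```

The first position can only see itself, so its row is [1, 0, 0, 0].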

Technical note. In self-attention, we have three important matrices with tunable parameters: Query, Key, and Value.

The Query matrix turns the current word into a description of the context it is looking for. The Key matrix turns every word in the sequence into something like an index entry to match against. The Value matrix carries the actual information from the words. The attention mechanism matches queries to keys to determine relevance, and for every token, this process updates its representation based on context.

Here’s how it works in practice:

1. Take the word Manchester in “Manchester United is the greatest football club”. The value matrix gets multiplied by the embedding of the first word (Manchester). The result of this multiplication is a value vector that gets added to the embeddings of the other words in the sequence, like football, in proportion to how much attention they pay to Manchester. This way we understand that talking about Manchester gives us context about English football, not the American kind.

2. Each word’s embedding is multiplied by the query matrix to create query vectors, or “questions”.

3. Each embedding is also multiplied by the key matrix to create a sequence of vectors called keys. They can be interpreted as answers to the queries. When a question and an answer align well (their dot product is large), we know these words should pay attention to each other.
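The three steps above correspond to one head of scaled dot-product attention, softmax(QKᵀ/√d_k)V, as defined in the paper. In this sketch the embeddings and weight matrices are random stand-ins for learned ones, the sizes are arbitrary, and tokens are stored as rows rather than columns.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along `axis`."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention_head(X, W_Q, W_K, W_V):
    """One head of scaled dot-product attention; X has one row per token."""
    Q = X @ W_Q   # queries: what each token is looking for
    K = X @ W_K   # keys: what each token can be matched on
    V = X @ W_V   # values: the information each token passes along
    scores = Q @ K.T / np.sqrt(K.shape[-1])   # large dot product = query and key align
    weights = softmax(scores, axis=-1)        # row j: how much token j attends to each token
    return weights @ V, weights               # weighted sum of value vectors per token

tokens = ["Manchester", "United", "is", "the", "greatest", "football", "club"]
n_tokens, d_model, d_k = len(tokens), 16, 4

rng = np.random.default_rng(0)
X = rng.normal(size=(n_tokens, d_model))   # stand-in embeddings (random, not trained)
W_Q, W_K, W_V = (rng.normal(size=(d_model, d_k)) for _ in range(3))  # learned in practice

out, weights = attention_head(X, W_Q, W_K, W_V)
print(out.shape)  # (7, 4)
```

With random weights, the value in weights[5, 0] (how much “football” attends to “Manchester”) is meaningless; training is what makes such weights line up with real relationships.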

The result is a more refined vector with context. We do this for every single column, that is, every word in the context. This whole process is called one head of attention, and as I mentioned, GPT-3 uses 96 of these in each layer. Each head learns to focus on different kinds of relationships. One head might focus on subject-verb relationships, another on adjective-noun pairs, and so on.

The whole idea is that by running multiple heads in parallel, the model can learn many distinct ways in which context changes meaning. Because all heads, and all positions, are computed in parallel, transformers are much faster to train than older sequential models. Summing up, the transformer has an embedding for each word, and its goal is to adjust these embeddings based on the context so they carry rich contextual meaning.
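Multi-head attention runs several independent heads on the same input, concatenates their outputs, and projects the result back to the model dimension. This sketch loops over heads for readability (real implementations batch them into one tensor operation) and uses random matrices in place of learned ones; the final residual addition is how the embeddings get “adjusted”.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_attention(X, heads, W_O):
    """Run several attention heads on the same input, concatenate their outputs,
    and project back to the model dimension with W_O."""
    outputs = []
    for W_Q, W_K, W_V in heads:             # every head has its own projections
        Q, K, V = X @ W_Q, X @ W_K, X @ W_V
        weights = softmax(Q @ K.T / np.sqrt(K.shape[-1]))
        outputs.append(weights @ V)
    return np.concatenate(outputs, axis=-1) @ W_O

rng = np.random.default_rng(0)
n_tokens, d_model, n_heads = 5, 16, 4       # GPT-3 uses 96 heads and d_model = 12,288
d_head = d_model // n_heads
heads = [tuple(rng.normal(size=(d_model, d_head)) for _ in range(3)) for _ in range(n_heads)]
W_O = rng.normal(size=(n_heads * d_head, d_model))

X = rng.normal(size=(n_tokens, d_model))
X_refined = X + multi_head_attention(X, heads, W_O)   # residual: adjust the embeddings
print(X_refined.shape)  # (5, 16)
```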

5. Softmax

After all this complex processing, we need to make actual predictions. A final linear layer produces a score for every token in the vocabulary, and the softmax layer turns all these scores into probabilities between 0 and 1. How? Take each score and raise e to that power to make every value positive. Then divide each result by the sum of all results to get the probabilities. That gives us nice, clean probabilities that tell us the chance of a specific word coming next in the sequence. For example, if the model processes “The player in the penalty box scored a _”, it might assign an 80% probability to goal, 10% to hat-trick, 5% to point, and so on.
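The softmax recipe above fits in a few lines. The scores (logits) below are invented for the penalty-box example and do not come from a real model. Subtracting the maximum score first is a common numerical-stability trick: it leaves the probabilities unchanged but keeps e^score from overflowing.

```python
import numpy as np

def softmax(scores):
    """Turn raw scores (logits) into probabilities that are positive and sum to 1."""
    shifted = scores - np.max(scores)   # same result, but avoids overflow in exp
    exps = np.exp(shifted)              # e^score: every value becomes positive
    return exps / exps.sum()            # divide by the total to normalize

# Made-up logits for a few candidate next tokens.
candidates = ["goal", "hat-trick", "point", "penalty"]
logits = np.array([4.0, 1.9, 1.2, 0.5])
for word, p in zip(candidates, softmax(logits)):
    print(f"{word}: {p:.2f}")
```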