In this post, I’ll walk you through the ideas behind Canonical Correlation Analysis (CCA) and demonstrate its application with Python code. If you enjoyed my YouTube video on CCA, this blog post provides a deeper dive into the theory, math, and practical implementation.

Note: Before diving in, I suggest watching my earlier videos on Principal Component Analysis (PCA) and Singular Value Decomposition (SVD), which lay a solid foundation for grasping CCA.

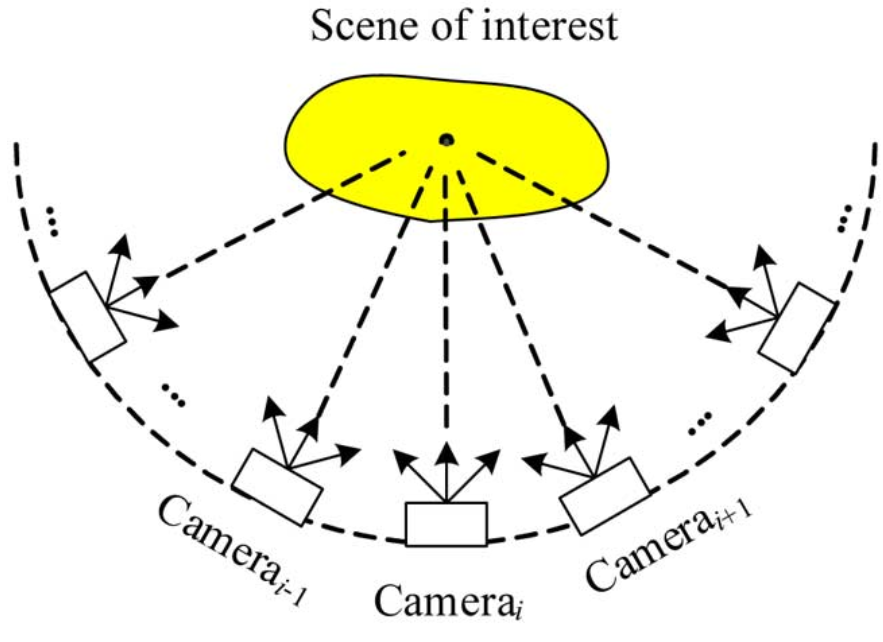

Multiview data arises when a phenomenon is sampled from different sources or modalities. Consider these examples:

- Soccer Game: Two cameras capturing different angles of the same player.

- Image and Caption: An image paired with descriptive text.

- Medical Tests: Different diagnostic tests performed on the same patient.

This multi-perspective approach provides richer information for tasks like decision-making, as each view can compensate for potential noise or biases in the other.

Using multiple data views offers benefits but also introduces challenges:

- Noise: One view might be noisier than the other.

- Different Dimensions: Different views may have different dimensionalities, leading to issues like overfitting or bias toward one view.

CCA addresses these problems by:

- Reducing dimensions: It projects the data into a lower-dimensional space.

- Maximizing correlation: It finds the best linear combinations (projections) so that the transformed data from each view is maximally correlated.

This makes CCA especially useful for downstream tasks like clustering or classification, where combining multiple views can improve performance.

Let’s dive into the theory a bit. Assume you have two data views, X and Y, with n samples, where:

- X has p features.

- Y has q features.

CCA finds two projection vectors, a and b, such that:

- The linear combinations Xa and Yb are maximally correlated.

- The resulting correlation is denoted by the Greek letter ρ.
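For readers who prefer symbols, the objective can be written out as below. The covariance notation (Σ_XX and Σ_YY for the within-view covariances, Σ_XY for the cross-covariance) is introduced here for illustration and is an assumption about notation rather than something taken from the video.

```latex
\rho \;=\; \max_{a,\,b}\;
\frac{a^{\top}\Sigma_{XY}\,b}
     {\sqrt{a^{\top}\Sigma_{XX}\,a}\;\sqrt{b^{\top}\Sigma_{YY}\,b}}
```

Because this ratio does not change when a or b is rescaled, the scale of each vector can be fixed with a normalization constraint without affecting ρ.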

The process involves:

- Calculating the cross-covariance matrix between X and Y.

- Solving an optimization problem with normalization constraints (forcing the projections Xa and Yb to be unit vectors).

- Using eigendecomposition (or singular value decomposition) to extract the projection vectors that maximize correlation.

This formulation is analogous to PCA, where we seek to maximize variance; in CCA, however, the goal is to maximize the correlation between two data sets.
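The three steps listed above can be sketched in plain NumPy. This is a minimal illustration under stated assumptions, not the routine scikit-learn uses: the function name `cca_svd`, the small ridge term `reg`, and the synthetic two-view data are all hypothetical choices made for this example.

```python
import numpy as np

def cca_svd(X, Y, reg=1e-8):
    """Sketch of CCA via SVD. Returns projections A, B and correlations rho.

    `reg` is a hypothetical ridge term added only for numerical stability.
    """
    n = X.shape[0]
    # Center each view so covariances reduce to matrix products.
    Xc = X - X.mean(axis=0)
    Yc = Y - Y.mean(axis=0)

    # Step 1: within-view covariances and the cross-covariance matrix.
    Sxx = Xc.T @ Xc / (n - 1) + reg * np.eye(X.shape[1])
    Syy = Yc.T @ Yc / (n - 1) + reg * np.eye(Y.shape[1])
    Sxy = Xc.T @ Yc / (n - 1)

    # Step 2: whiten each view. This builds the normalization constraint in:
    # every projected variable ends up with (approximately) unit variance.
    def inv_sqrt(S):
        w, V = np.linalg.eigh(S)
        return V @ np.diag(1.0 / np.sqrt(w)) @ V.T

    Sxx_is, Syy_is = inv_sqrt(Sxx), inv_sqrt(Syy)

    # Step 3: SVD of the whitened cross-covariance. The singular values are
    # the canonical correlations, sorted from largest to smallest.
    U, rho, Vt = np.linalg.svd(Sxx_is @ Sxy @ Syy_is, full_matrices=False)
    A = Sxx_is @ U      # columns are projection vectors for X
    B = Syy_is @ Vt.T   # columns are projection vectors for Y
    return A, B, rho

# Synthetic two-view data: one shared latent signal plus unrelated noise columns.
rng = np.random.default_rng(0)
z = rng.normal(size=(1000, 1))
X = np.hstack([z + 0.5 * rng.normal(size=(1000, 1)), rng.normal(size=(1000, 2))])
Y = np.hstack([rng.normal(size=(1000, 1)), z + 0.5 * rng.normal(size=(1000, 1))])

A, B, rho = cca_svd(X, Y)
u = (X - X.mean(axis=0)) @ A[:, 0]   # first canonical variable of X
v = (Y - Y.mean(axis=0)) @ B[:, 0]   # first canonical variable of Y
print("first canonical correlation (singular value):", rho[0])
print("empirical correlation of the projections:   ", np.corrcoef(u, v)[0, 1])
```

The two printed numbers should agree closely, which is a quick sanity check that the leading singular value really is the correlation between the projected views.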

Now let’s see how to apply CCA using Python. In the following sections, I’ll use the California Housing dataset as an example. (Note that the dataset is originally single-view, so we’ll create synthetic views by splitting the features.)

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

from sklearn.datasets import fetch_california_housing

from sklearn.cross_decomposition import CCA

from sklearn.preprocessing import StandardScaler

data = fetch_california_housing(as_frame=True)

df = data.frame

print(df.shape)

(20640, 9)

print(df.describe())

corr_matrix = df.corr()

plt.figure(figsize=(8,6))

plt.imshow(corr_matrix, cmap='coolwarm', interpolation='none')

plt.colorbar()

plt.title("Feature Correlation Matrix")

plt.show()

Since the California Housing dataset is single-view, we split the features into two groups to simulate two different views.

view1 = df.iloc[:, :5]

view2 = df.iloc[:, 5:]

scaler1 = StandardScaler()

scaler2 = StandardScaler()

view1_scaled = scaler1.fit_transform(view1)

view2_scaled = scaler2.fit_transform(view2)

Now we apply CCA from scikit-learn. We’ll work on a sample of the data (say, the first 500 samples) to reduce computation time.

n_samples = 500

view1_sample = view1_scaled[:n_samples]

view2_sample = view2_scaled[:n_samples]

n_components = 2

cca = CCA(n_components=n_components)

view1_c, view2_c = cca.fit_transform(view1_sample, view2_sample)

correlation = np.corrcoef(view1_c[:, 0], view2_c[:, 0])[0, 1]

print(f"Correlation between first canonical variables: {correlation:.2f}")

Correlation between first canonical variables: 0.82

plt.figure(figsize=(8,6))

plt.scatter(view1_c[:, 0], view2_c[:, 0], alpha=0.7)

plt.xlabel("Canonical Variable 1 (View 1)")

plt.ylabel("Canonical Variable 1 (View 2)")

plt.title("Scatter Plot of the First Canonical Variables")

plt.show()

CCA not only provides a way to reduce the dimensionality of multiview data but also helps to fuse different data sources by maximizing their shared information. After obtaining the canonical variables, you can further:

- Concatenate the projected views: The result can be used for downstream tasks such as clustering or classification.

- Explore additional canonical pairs: Beyond the first canonical variables, additional pairs can be analyzed for deeper insights.

I hope this post helped demystify CCA and demonstrated its practical application with a hands-on Python example. If you found this content useful, please consider liking, commenting, and sharing this post.

Happy coding and data exploring!