Introduction

When evaluating machine learning models, accuracy is often the first metric considered. However, accuracy can be misleading, especially in cases of imbalanced datasets. If one class significantly outnumbers the other, a high accuracy score might not reflect the true performance of the model. To address this, Precision, Recall, and F1 Score are used as more reliable metrics.

In this blog, we’ll explore:

– Why accuracy can be misleading

– The concepts of Precision, Recall, and F1 Score

– Their mathematical formulas and interpretations

– How to implement these metrics in Python using Scikit-Learn

Why Accuracy is Not Always Enough

Consider a binary classification problem where the goal is to detect fraudulent transactions. Suppose we have 1000 transactions, with 990 legitimate and 10 fraudulent transactions. If a model predicts every transaction as legitimate (never predicting fraud), the accuracy would be:

$$\text{Accuracy} = \frac{\text{Correct predictions}}{\text{Total predictions}} = \frac{990}{1000} = 0.99 \ (99\%)$$

Despite the high accuracy, the model is useless because it never detects fraud! This is where Precision and Recall become important.
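The fraud scenario can be reproduced in a few lines. This is a minimal sketch, assuming an encoding of 0 for legitimate and 1 for fraudulent (chosen here purely for illustration); it compares accuracy with recall on the fraud class for a model that always predicts "legitimate".

```python
from sklearn.metrics import accuracy_score, recall_score

# Assumed encoding for illustration: 0 = legitimate, 1 = fraudulent
y_true = [0] * 990 + [1] * 10

# A "model" that labels every transaction as legitimate
y_pred = [0] * 1000

accuracy = accuracy_score(y_true, y_pred)
fraud_recall = recall_score(y_true, y_pred)  # recall for the positive (fraud) class

print(f"Accuracy: {accuracy:.2f}")            # 0.99
print(f"Recall (fraud): {fraud_recall:.2f}")  # 0.00
```

The accuracy looks excellent, yet recall on the fraud class is zero: none of the 10 fraudulent transactions is caught.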

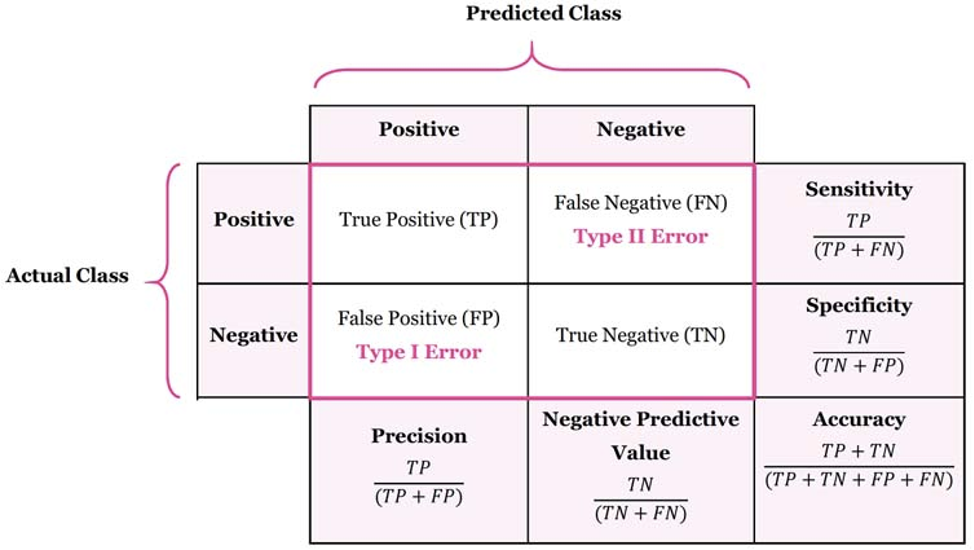

Precision: Measuring Correctness of Positive Predictions

Precision answers the question: *Out of all the predicted positive cases, how many were actually correct?*

Formula:

$$\text{Precision} = \frac{TP}{TP + FP}$$

Where:

– TP (True Positives): Correctly predicted positive cases

– FP (False Positives): Incorrectly predicted positive cases

Example:

Imagine a spam email classifier:

– The model predicts 100 emails as spam

– Out of those, 80 are actually spam (so 20 are false positives)

$$\text{Precision} = \frac{80}{80 + 20} = 0.8 \ (80\%)$$
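The same arithmetic can be written as a small helper. This is only a sketch: `precision_from_counts` is a hypothetical name introduced here, and returning 0.0 when nothing is predicted positive is an assumed convention, not the only possible one.

```python
def precision_from_counts(tp: int, fp: int) -> float:
    """Precision = TP / (TP + FP); returns 0.0 if nothing was predicted positive."""
    predicted_positive = tp + fp
    return tp / predicted_positive if predicted_positive else 0.0


# Spam example: 100 emails flagged as spam, 80 truly spam, 20 false positives
spam_precision = precision_from_counts(tp=80, fp=20)
print(f"Precision: {spam_precision:.2f}")  # 0.80
```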

When to prioritize Precision?

– When false positives are costly (e.g., falsely classifying an important email as spam).

– In medical diagnosis, wrongly classifying a healthy person as sick can cause unnecessary panic.

Recall: Measuring Coverage of Actual Positives

Recall answers the question: *Out of all actual positive cases, how many were correctly identified?*

Formula:

$$\text{Recall} = \frac{TP}{TP + FN}$$

Where:

– FN (False Negatives): Actual positive cases that the model failed to identify

Example:

Continuing with the spam email classifier:

– There are 100 actual spam emails in total

– The model correctly predicts 70 as spam (so 30 are missed)

$$\text{Recall} = \frac{70}{70 + 30} = 0.7 \ (70\%)$$
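To check this figure with Scikit-Learn, the example can be laid out as label arrays. The sketch below assumes 1 means spam and 0 means not spam, and adds 50 hypothetical non-spam emails (not part of the example above) just so both classes are present; they do not affect recall.

```python
from sklearn.metrics import recall_score

# Assumed encoding: 1 = spam, 0 = not spam
# 100 actual spam emails, plus 50 hypothetical non-spam emails
y_true = [1] * 100 + [0] * 50

# The model catches 70 spam emails, misses 30, and flags no non-spam email
y_pred = [1] * 70 + [0] * 30 + [0] * 50

spam_recall = recall_score(y_true, y_pred)
print(f"Recall: {spam_recall:.2f}")  # 0.70
```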

When to prioritize Recall?

– When missing positive cases has serious consequences (e.g., failing to diagnose cancer).

– In fraud detection, missing fraudulent transactions can lead to financial losses.

The Trade-off Between Precision and Recall

– Increasing Precision reduces False Positives but may increase False Negatives.

– Increasing Recall reduces False Negatives but may increase False Positives.

– The balance depends on the application. This is where F1 Score helps!
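One common way this trade-off shows up is through the decision threshold applied to a model's scores. The sketch below uses hypothetical labels and scores invented for illustration; on this toy data, raising the threshold increases precision and lowers recall, though on real data precision does not always rise monotonically.

```python
from sklearn.metrics import precision_score, recall_score

# Hypothetical true labels and model scores, invented for illustration
y_true = [0, 0, 0, 0, 1, 0, 1, 1, 0, 1]
scores = [0.05, 0.10, 0.20, 0.35, 0.40, 0.55, 0.60, 0.70, 0.80, 0.95]

results = {}
for threshold in (0.3, 0.5, 0.9):
    # Predict positive when the score reaches the threshold
    y_pred = [1 if s >= threshold else 0 for s in scores]
    p = precision_score(y_true, y_pred, zero_division=0)
    r = recall_score(y_true, y_pred, zero_division=0)
    results[threshold] = (p, r)
    print(f"threshold={threshold:.1f}  precision={p:.2f}  recall={r:.2f}")

# threshold=0.3  precision=0.57  recall=1.00
# threshold=0.5  precision=0.60  recall=0.75
# threshold=0.9  precision=1.00  recall=0.25
```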

---

F1 Score: The Balance Between Precision and Recall

F1 Score is the harmonic mean of Precision and Recall. It provides a single metric to evaluate a model when both Precision and Recall are important.

Formula:

$$\text{F1 Score} = 2 \times \frac{\text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}$$

Example:

If Precision = 0.8 and Recall = 0.7:

$$\text{F1 Score} = 2 \times \frac{0.8 \times 0.7}{0.8 + 0.7} = \frac{1.12}{1.5} \approx 0.747 \ (74.7\%)$$
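A short sketch makes the harmonic-mean behavior concrete. `f1_from` is a hypothetical helper name, and the second pair of values (0.99, 0.10) is an invented lopsided case, included to show how F1 is pulled toward the weaker of the two metrics.

```python
def f1_from(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; 0.0 if both are zero."""
    total = precision + recall
    return 2 * precision * recall / total if total else 0.0


balanced = f1_from(0.8, 0.7)
lopsided = f1_from(0.99, 0.10)  # hypothetical: very precise, low recall

print(f"F1 (0.80, 0.70): {balanced:.3f}")  # 0.747
print(f"F1 (0.99, 0.10): {lopsided:.3f}")  # 0.182
```

The arithmetic mean of 0.99 and 0.10 would be 0.545, which is why the harmonic mean is the stricter summary when one metric is poor.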

When to use F1 Score?

– When both Precision and Recall are important.

– In imbalanced datasets, where a single metric like Accuracy is not enough.

Multi-Class Classification: Precision, Recall, and F1 Score

For multi-class classification, Precision, Recall, and F1 Score are calculated for each class separately and then averaged using:

1. Macro Average: Averages the per-class metrics, giving every class equal weight regardless of its size.

2. Weighted Average: Averages the per-class metrics weighted by each class's support (its number of true instances), which accounts for class imbalance.
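The two averaging schemes can be computed by hand from per-class scores. The sketch below uses a small hypothetical 3-class dataset with unequal class sizes (invented for illustration) and shows precision only; recall and F1 are averaged the same way.

```python
import numpy as np
from sklearn.metrics import precision_score

# Hypothetical labels: class 0 has 6 samples, class 1 has 3, class 2 has 1
y_true = [0, 0, 0, 0, 0, 0, 1, 1, 1, 2]
y_pred = [0, 0, 0, 0, 0, 1, 1, 1, 2, 2]

# average=None returns one precision value per class
per_class = precision_score(y_true, y_pred, average=None)
support = np.bincount(y_true)  # number of true instances per class

macro = per_class.mean()                           # every class counts equally
weighted = np.average(per_class, weights=support)  # larger classes count more

print("Per-class precision:", per_class.round(2))  # [1.   0.67 0.5 ]
print(f"Macro average:    {macro:.2f}")            # 0.72
print(f"Weighted average: {weighted:.2f}")         # 0.85
```

Here the weighted average is higher because the largest class is also the best-predicted one; the macro average gives the poorly predicted minority classes the same say.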

Implementing in Python Using Scikit-Learn

Let’s calculate these metrics for a sample dataset:

```python
from sklearn.metrics import precision_score, recall_score, f1_score, classification_report

# True labels (actual values)
y_true = [0, 1, 1, 0, 1, 2, 2, 2, 1, 0]

# Labels predicted by the model
y_pred = [0, 1, 0, 0, 1, 2, 1, 2, 2, 0]

# Compute Precision, Recall, and F1 Score, weighting each class by its support
precision = precision_score(y_true, y_pred, average='weighted')
recall = recall_score(y_true, y_pred, average='weighted')
f1 = f1_score(y_true, y_pred, average='weighted')

print(f"Precision: {precision:.2f}")
print(f"Recall: {recall:.2f}")
print(f"F1 Score: {f1:.2f}")

# Detailed per-class classification report
print(classification_report(y_true, y_pred))
```

### Output:

```
Precision: 0.69
Recall: 0.70
F1 Score: 0.69
              precision    recall  f1-score   support

           0       0.75      1.00      0.86         3
           1       0.67      0.50      0.57         4
           2       0.67      0.67      0.67         3

    accuracy                           0.70        10
   macro avg       0.69      0.72      0.70        10
weighted avg       0.69      0.70      0.69        10
```
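To see where per-class numbers in a classification report come from, the same labels can be run through a confusion matrix. This is a sketch of one way to derive them by hand: per-class precision divides each diagonal entry by its column total (predicted counts), and per-class recall divides it by its row total (actual counts).

```python
import numpy as np
from sklearn.metrics import confusion_matrix

y_true = [0, 1, 1, 0, 1, 2, 2, 2, 1, 0]
y_pred = [0, 1, 0, 0, 1, 2, 1, 2, 2, 0]

# Rows are true classes, columns are predicted classes
cm = confusion_matrix(y_true, y_pred)
true_positives = np.diag(cm)

precision_per_class = true_positives / cm.sum(axis=0)  # divide by predicted counts
recall_per_class = true_positives / cm.sum(axis=1)     # divide by actual counts

print(cm)
print("Precision per class:", precision_per_class.round(2))
print("Recall per class:   ", recall_per_class.round(2))
```

For these labels, class 0 is predicted 4 times but is correct only 3 times, giving a precision of 0.75, while all 3 true class-0 samples are found, giving a recall of 1.00.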

---

Key Takeaways

✅ Accuracy isn’t always reliable: consider Precision, Recall, and F1 Score for better evaluation.

✅ Use Precision when False Positives are costly (e.g., spam filters).

✅ Use Recall when False Negatives are critical (e.g., medical diagnosis).

✅ F1 Score balances both, which makes it well suited to imbalanced datasets.

✅ In multi-class classification, use Macro or Weighted Averages.