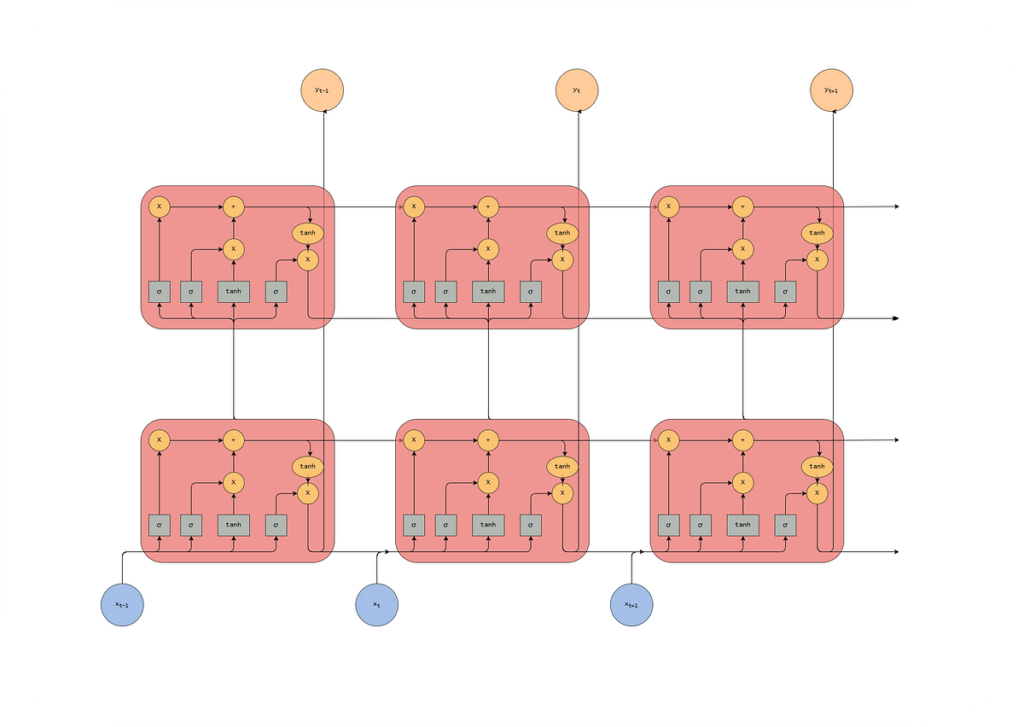

In previous articles, we looked at Vanilla RNNs, LSTMs, and GRUs, along with how to implement them in Python. In this article, we will consider what happens when we don't want to use only a single hidden layer. In other words, we will look at Deep RNNs, where we have multiple hidden layers.

Suppose we have sequential data, for example observations over different time steps. This is where we can consider Recurrent Neural Networks, since they have the concept of 'memory'. This helps them store the states or information of previous inputs to generate the next output of the sequence.

Let us take a look at something called the Recurrent Neuron:

How is it different from our original Perceptron? It is simply a perceptron with a feedback connection, allowing it to incorporate its previous output (the hidden state) into the current computation. In other words, our perceptron is now able to remember, which lets it capture dependencies across time. But maybe we should take a closer look at the hidden state: what exactly is it? It stores information about what the RNN has seen so far in the sequence of inputs.
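To make the feedback connection concrete, here is a minimal sketch of a single recurrent neuron in NumPy. The function names (`recurrent_neuron_step`, `run_sequence`), the scalar weights `w_x`, `w_h`, the bias `b`, and the choice of `tanh` as activation are all assumptions made for illustration, not something taken from the earlier articles.

```python
import numpy as np

def recurrent_neuron_step(x_t, h_prev, w_x, w_h, b):
    """One time step: combine the current input with the previous hidden state."""
    # A plain perceptron would only compute activation(w_x * x_t + b).
    # The extra term w_h * h_prev is the feedback connection.
    return np.tanh(w_x * x_t + w_h * h_prev + b)

def run_sequence(xs, w_x=0.5, w_h=0.8, b=0.0):
    """Feed a sequence through the neuron, returning the hidden state at each step."""
    h = 0.0  # initial hidden state: nothing has been seen yet
    states = []
    for x_t in xs:
        h = recurrent_neuron_step(x_t, h, w_x, w_h, b)
        states.append(h)
    return states

# A single non-zero input at the first step, followed by zeros.
states_a = run_sequence([1.0, 0.0, 0.0])
# An all-zero sequence for comparison.
states_b = run_sequence([0.0, 0.0, 0.0])

print(states_a)
print(states_b)
```

With these assumed weights, the hidden states of the first sequence stay non-zero even at the steps where the input is zero, because the earlier input is carried forward through `h_prev`. The all-zero sequence produces all-zero states. That carried-over value is the 'memory' described above.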

We have now understood that RNNs deal with sequences of inputs. We can then try to understand what is meant…