Introduction

In the realm of machine learning and data science, decision trees play a crucial role as a versatile and interpretable algorithm for classification and regression tasks. At the heart of constructing a decision tree lies the concept of impurity, which measures the degree of disorder or uncertainty in a dataset. Among the various metrics used to evaluate impurity, the Gini Index stands out as one of the most popular and effective.

The Gini Index helps identify the best feature and split point for dividing data into subsets, ultimately leading to a more accurate and efficient decision tree. By minimizing the Gini Index at each step, we ensure that the resulting subsets are as pure as possible, meaning that each one is dominated by a single target value.

This article explores the concept of the Gini Index and its calculation, offering a Python implementation to help you understand how this metric works behind the scenes. Whether you’re building decision trees or just curious about the math driving these algorithms, this guide is tailored for you. Let’s dive in!

Understanding Gini Index Impurity

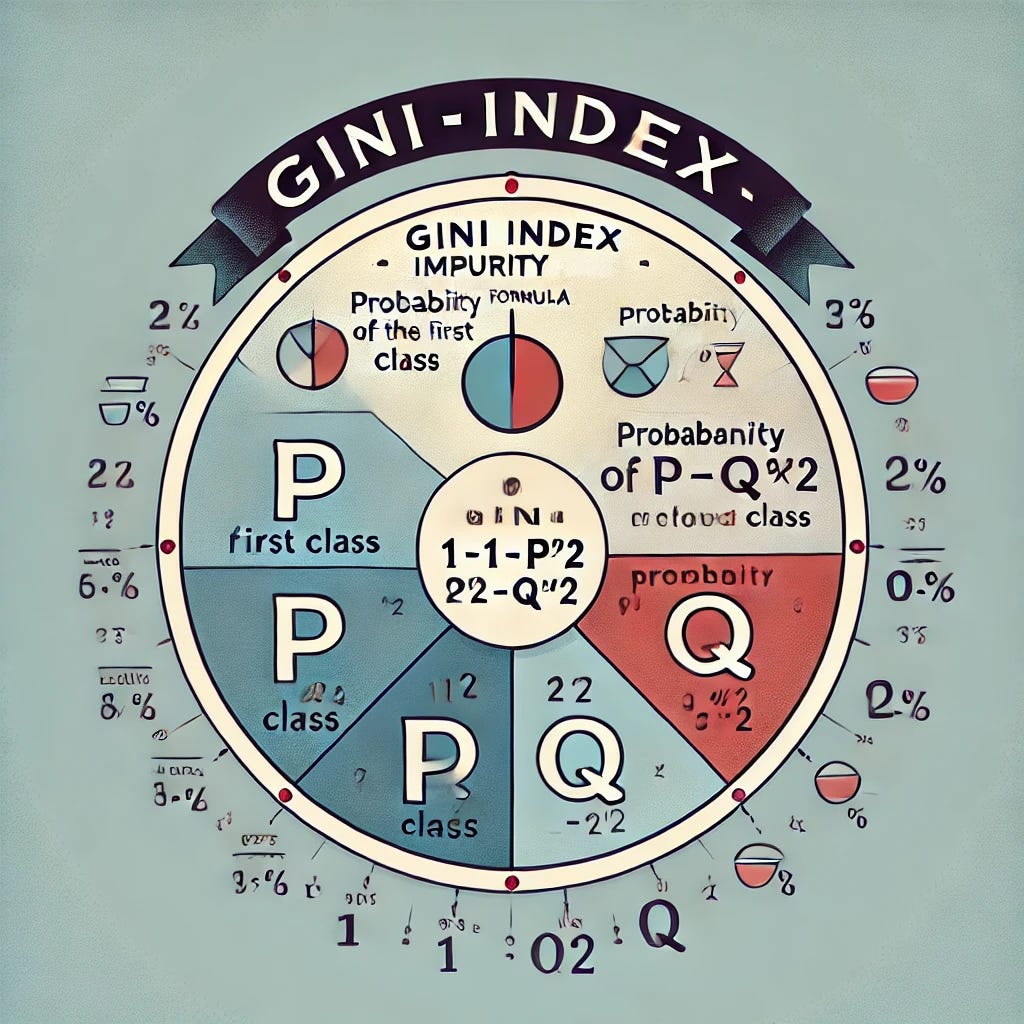

The Gini Index, also known as Gini Impurity, is a metric used to evaluate the purity of a dataset. It measures how often a randomly chosen element would be incorrectly classified if it were randomly labeled based on the distribution of labels in the dataset. The formula for the Gini Index is:

$$Gini = 1 - \sum_{i=1}^{n} p_i^2$$

Where:

• p_i is the proportion of elements belonging to class i in the dataset.

• n is the total number of classes.

The Gini Index ranges from 0 to 1 - 1/n, so it always stays below 1:

• A Gini Index of 0 indicates perfect purity (all elements belong to a single class).

• A Gini Index at its maximum indicates high impurity (elements are evenly distributed among classes). With two classes the maximum is 0.5; it approaches 1 only as the number of classes grows.
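To make the definition concrete, here is a minimal sketch that computes the Gini Index directly from a list of labels. The function name `gini` and the toy label lists are illustrative assumptions, not part of the article's code.

```python
from collections import Counter

def gini(labels):
    """Gini impurity of a sequence of class labels: 1 - sum(p_i ** 2)."""
    total = len(labels)
    if total == 0:
        return 0.0  # convention chosen here: an empty set counts as pure
    counts = Counter(labels)
    return 1.0 - sum((count / total) ** 2 for count in counts.values())

pure = gini([1, 1, 1, 1])                   # a single class
balanced_two = gini([0, 1, 0, 1])           # two classes, evenly split
balanced_four = gini(["a", "b", "c", "d"])  # four classes, evenly split

print(pure, balanced_two, balanced_four)  # 0.0 0.5 0.75
```

The evenly split cases show how the maximum impurity depends on the number of classes.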

Why Use the Gini Index?

In decision trees, the Gini Index is used to identify the best feature and split point for dividing data into subsets. By selecting the split that minimizes the Gini Index, the algorithm makes each subset as pure as possible, which tends to improve classification accuracy.

Weighted Gini Index for Splits

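When splitting a dataset, the Gini Index is calculated for each subset, and a weighted average is used to combine these impurities. The formula for the weighted Gini Index is:

$$Gini_{weighted} = \frac{|Subset_1| \cdot Gini_1 + |Subset_2| \cdot Gini_2}{|Subset_1| + |Subset_2|}$$

Where: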

• Gini_1 and Gini_2 are the impurities of the subsets.

• |Subset_1| and |Subset_2| are the sizes of the subsets.
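As a small worked example of the weighted average, the sketch below takes the class counts of two subsets and combines their impurities by subset size. The helper names and the counts are hypothetical and chosen only for illustration.

```python
def gini_from_counts(counts):
    """Gini impurity from a list of per-class counts."""
    total = sum(counts)
    if total == 0:
        return 0.0  # convention chosen here for an empty subset
    return 1.0 - sum((count / total) ** 2 for count in counts)

def weighted_gini(counts_1, counts_2):
    """Size-weighted average of the impurities of two subsets."""
    size_1, size_2 = sum(counts_1), sum(counts_2)
    gini_1, gini_2 = gini_from_counts(counts_1), gini_from_counts(counts_2)
    return (size_1 * gini_1 + size_2 * gini_2) / (size_1 + size_2)

# Hypothetical split: subset 1 holds 4 positives and 0 negatives,
# subset 2 holds 1 positive and 3 negatives.
result = weighted_gini([4, 0], [1, 3])
print(result)  # 0.1875 = (4 * 0.0 + 4 * 0.375) / 8
```

Weighting by size keeps a tiny, perfectly pure subset from making a poor split look good.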

In the next section, we’ll implement this concept step by step using Python.

Python Implementation of Gini Index Impurity

Now that we understand the concept of Gini Index Impurity and its weighted calculation, let’s implement it in Python. We will calculate the Gini Index for a given dataset and use it to evaluate potential splits.

Below is the Python code to compute the Gini Index and weighted impurity:

Step 1: Dataset Representation

We’ll begin by defining a dataset as a NumPy array, where each row represents a data point, and the columns represent features and labels.

import numpy as np

# Sample dataset: [Feature1, Feature2, Age, Target]
df = np.array([
    [1, 0, 18, 1],
    [1, 1, 15, 1],
    [0, 1, 65, 0],
    [0, 0, 33, 0],
    [1, 0, 37, 1],
    [0, 1, 45, 1],
    [0, 1, 50, 0],
    [1, 0, 75, 0],
    [1, 0, 67, 1],
    [1, 1, 60, 1],
    [0, 1, 55, 1],
    [0, 0, 69, 0],
    [0, 0, 80, 0],
    [0, 1, 87, 1],
    [1, 0, 38, 1]
])

Step 2: Gini Index Calculation

Here, we define functions to calculate the Gini Index for a dataset and its weighted average for subsets after a split.

# Function to calculate the weighted average of two Gini impurities,
# weighting each impurity by the size of its subset
def calc_wighted_average(im1, imp1_multiplier, im2, imp2_multiplier):
    return round(((im1 * imp1_multiplier) + (im2 * imp2_multiplier)) / (imp1_multiplier + imp2_multiplier), 3)

# Function to calculate Gini Index impurity
# data: two-column array with the feature in column 0 and the binary target in column 1
def calc_impurity(data):
    if len(np.unique(data[:, 0])) > 2:  # For continuous variables
        sorted_data = data[data[:, 0].argsort()]
        main_dict = {}
        for i in range(1, len(sorted_data)):
            first_number = sorted_data[i - 1, 0]
            second_number = sorted_data[i, 0]
            if first_number == second_number:
                continue  # Equal neighbours add no new threshold
            avg = (first_number + second_number) / 2
            # Splitting data at the candidate threshold
            true_xs = data[data[:, 0] < avg]
            count_true_xs = len(true_xs)
            true_xs_true_ys = len(true_xs[true_xs[:, 1] == True])
            true_xs_false_ys = len(true_xs[true_xs[:, 1] == False])
            imp1 = round(1 - ((true_xs_true_ys / count_true_xs) ** 2) - ((true_xs_false_ys / count_true_xs) ** 2), 3)
            false_xs = data[data[:, 0] > avg]
            count_false_xs = len(false_xs)
            false_xs_true_ys = len(false_xs[false_xs[:, 1] == True])
            false_xs_false_ys = len(false_xs[false_xs[:, 1] == False])
            imp2 = round(1 - ((false_xs_true_ys / count_false_xs) ** 2) - ((false_xs_false_ys / count_false_xs) ** 2), 3)
            main_dict[str(avg)] = calc_wighted_average(imp1, count_true_xs, imp2, count_false_xs)
        # Return the threshold with the lowest weighted impurity
        best_threshold = min(main_dict, key=main_dict.get)
        return {best_threshold: main_dict[best_threshold]}
    else:  # For binary features
        true_xs = data[data[:, 0] == True]
        count_true_xs = len(true_xs)
        imp1 = 0 if count_true_xs == 0 else round(1 - ((len(true_xs[true_xs[:, 1] == True]) / count_true_xs) ** 2) -
                                                  ((len(true_xs[true_xs[:, 1] == False]) / count_true_xs) ** 2), 3)
        false_xs = data[data[:, 0] == False]
        count_false_xs = len(false_xs)
        imp2 = 0 if count_false_xs == 0 else round(1 - ((len(false_xs[false_xs[:, 1] == True]) / count_false_xs) ** 2) -
                                                   ((len(false_xs[false_xs[:, 1] == False]) / count_false_xs) ** 2), 3)
        return calc_wighted_average(imp1, count_true_xs, imp2, count_false_xs)

Step 3: Example Usage

Let’s calculate the Gini Impurity for some sample splits of the dataset.

# Gini impurity for the first feature
print("Gini Impurity for Feature 1:", calc_impurity(df[:, [0, -1]]))

# Gini impurity for the second feature
print("Gini Impurity for Feature 2:", calc_impurity(df[:, [1, -1]]))

# Gini impurity for the 'Age' feature
print("Gini Impurity for Age:", calc_impurity(df[:, [2, -1]]))
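A split's weighted impurity is easiest to judge against the impurity of the target before any split. The sketch below computes that baseline for the sample dataset's target column; the variable names are assumptions introduced here, and the target values are copied from the last column of df.

```python
import numpy as np

# Target column of the sample dataset (last column of df)
target = np.array([1, 1, 0, 0, 1, 1, 0, 0, 1, 1, 1, 0, 0, 1, 1])

counts = np.bincount(target)         # occurrences of class 0 and class 1
proportions = counts / counts.sum()  # class proportions p_i
parent_gini = float(1.0 - np.sum(proportions ** 2))

print(counts.tolist())        # [6, 9]
print(round(parent_gini, 3))  # 0.48
```

Any split whose weighted Gini is below this baseline has reduced impurity; the further below, the better the split.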

In this section, we’ll discuss how the Gini index is calculated and used to determine the best decision tree splits using the provided code. The dataset consists of several features, and the goal is to find the optimal split based on the Gini impurity. Let’s break it down step by step.

Overview of the Code

The dataset df contains the following columns:

1. A binary feature (e.g., gender or a categorical attribute).

2. A second binary feature.

3. A numeric feature (e.g., age, income, etc.).

4. The binary target variable (e.g., whether a customer bought a product or not).

The code includes a function calc_impurity() which computes the Gini impurity of a given dataset, helping to evaluate potential splits in decision trees. Let’s walk through the main steps involved.

1. Calculating Gini Impurity

The function calc_impurity() calculates the Gini impurity for a set of data. For binary classification, the Gini impurity is computed using the formula:

$$Gini = 1 - p^2 - q^2$$

Where:

• p is the probability of the first class (True in this case).

• q is the probability of the second class (False).

The function first checks whether the feature has more than two unique values. If it does, it treats the feature as continuous and tries different split points, calculating the weighted impurity for every candidate threshold (the midpoint between each pair of adjacent sorted values). It then chooses the threshold that minimizes the impurity.

In our example:

• We evaluate the Gini impurity for different columns (0, 1, and 2) with respect to the target column (the last column in the dataset).
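The threshold search for a numeric feature can be sketched on its own. The example below generates candidate thresholds as midpoints between consecutive distinct values. It is a slight variation on the article's loop because it uses np.unique to drop duplicates up front, and the function name `candidate_thresholds` is hypothetical.

```python
import numpy as np

def candidate_thresholds(values):
    """Midpoints between consecutive distinct values of a numeric feature."""
    unique_sorted = np.unique(values)  # np.unique returns sorted unique values
    return (unique_sorted[:-1] + unique_sorted[1:]) / 2

ages = np.array([18, 15, 65, 33, 18])    # toy values with one duplicate
thresholds = candidate_thresholds(ages)  # midpoints: 16.5, 25.5, 49.0

print(thresholds.tolist())
```

A feature with k distinct values yields k - 1 candidate thresholds, each of which is then scored with the weighted Gini Index.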

2. Applying the Impurity Calculation

The code runs the following three calc_impurity calls:

• calc_impurity(df[:, [0, -1]]): Calculates the Gini impurity for the first feature (column 0) with respect to the last column (target).

• calc_impurity(df[:, [1, -1]]): Calculates the impurity for the second feature (column 1).

• calc_impurity(df[:, [2, -1]]): Calculates the impurity for the third feature (column 2).

Each function call evaluates the potential splits and returns the best impurity value, helping to determine which feature provides the best possible split. For the numeric feature, the result is a dictionary mapping the best threshold to its weighted impurity.

3. Splitting the Data

Next, the code splits the dataset based on the third feature (numeric), using a threshold of 68. The dataset is divided into two subsets:

• True side (true_side_df): Rows where the third feature value is less than 68.

• False side (false_side_df): Rows where the third feature value is greater than or equal to 68.

For both sides (True and False), the Gini impurity is calculated again for features 0 and 1:

• calc_impurity(true_side_df[:, [0, -1]]): Impurity for the first feature on the True side.

• calc_impurity(true_side_df[:, [1, -1]]): Impurity for the second feature on the True side.

• calc_impurity(false_side_df[:, [0, -1]]): Impurity for the first feature on the False side.

• calc_impurity(false_side_df[:, [1, -1]]): Impurity for the second feature on the False side.
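The splitting code itself is not listed in this article, so the following is only a sketch of what the step describes. It reuses the sample dataset and the names true_side_df and false_side_df from the text. The helper `target_gini` is a hypothetical addition that reports how pure the target is on each side.

```python
import numpy as np

# Sample dataset from the article: [Feature1, Feature2, Age, Target]
df = np.array([
    [1, 0, 18, 1], [1, 1, 15, 1], [0, 1, 65, 0], [0, 0, 33, 0], [1, 0, 37, 1],
    [0, 1, 45, 1], [0, 1, 50, 0], [1, 0, 75, 0], [1, 0, 67, 1], [1, 1, 60, 1],
    [0, 1, 55, 1], [0, 0, 69, 0], [0, 0, 80, 0], [0, 1, 87, 1], [1, 0, 38, 1],
])

threshold = 68
true_side_df = df[df[:, 2] < threshold]    # rows with Age below the threshold
false_side_df = df[df[:, 2] >= threshold]  # rows with Age at or above it

def target_gini(subset):
    """Gini impurity of the target (last column) of a non-empty subset."""
    counts = np.bincount(subset[:, -1], minlength=2)
    proportions = counts / counts.sum()
    return float(1.0 - np.sum(proportions ** 2))

print(len(true_side_df), len(false_side_df))  # 11 4
print(round(target_gini(true_side_df), 3), round(target_gini(false_side_df), 3))
```

Each side can then be passed to calc_impurity column by column, exactly as in the calls listed above.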

4. Decision Tree Split Evaluation

By calculating the impurity for different potential splits, the code guides us in choosing the best feature and split threshold. The feature and threshold that result in the lowest weighted Gini impurity after the split are chosen for the decision tree. This process is repeated over all candidate features and thresholds to find the optimal split.
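The search over all features and thresholds can be sketched compactly. This is a simplified illustration under stated assumptions, not the article's implementation: the functions `gini` and `best_split`, the toy arrays X and y, and the use of np.bincount (which requires non-negative integer labels) are all choices made here.

```python
import numpy as np

def gini(y):
    """Gini impurity of an integer label array."""
    if len(y) == 0:
        return 0.0
    proportions = np.bincount(y) / len(y)
    return float(1.0 - np.sum(proportions ** 2))

def best_split(X, y):
    """Return (feature index, threshold, weighted Gini) of the best split."""
    best = (None, None, float("inf"))
    for j in range(X.shape[1]):
        values = np.unique(X[:, j])
        # Candidate thresholds: midpoints between consecutive distinct values
        for t in (values[:-1] + values[1:]) / 2:
            left = X[:, j] < t
            n_left = int(left.sum())
            n_right = len(y) - n_left
            score = (n_left * gini(y[left]) + n_right * gini(y[~left])) / len(y)
            if score < best[2]:
                best = (j, float(t), score)
    return best

# Toy data: column 0 is an uninformative binary feature, column 1 is numeric
X = np.array([[0, 20], [1, 25], [0, 40], [1, 45]])
y = np.array([0, 0, 1, 1])

feature, threshold, score = best_split(X, y)
print(feature, threshold, score)  # 1 32.5 0.0
```

A full decision tree applies this search recursively to each resulting subset until the subsets are pure or a stopping rule is reached.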

5. Conclusion

In summary, this approach demonstrates how Gini impurity is used to evaluate the best feature and threshold for splitting a dataset. By calculating impurity for various splits and features, the decision tree picks, at each step, the split that leaves the purest subsets, which helps reduce classification errors. The code provides an example of how this process can be implemented and tested using a simple dataset.

For the complete code, feel free to check out my GitHub and LinkedIn profiles.