Modern AI systems like AI assistants, cloud-based apps, or autonomous agents work in many different situations and environments. They must adapt as conditions change.

Without the Model Context Protocol (MCP), models operate blindly. The systems treat all inputs the same, even when the users, locations, and environments change.

MCP provides a standardized way for models to adjust their behavior based on who is using them. It supports multi-agent collaboration and enables personalized, secure, and efficient behavior.

So, what is MCP, actually?

Model Context Protocol (MCP) is an open protocol that standardizes how applications provide context to LLMs. It was developed by Anthropic, the company behind Claude. The core idea behind MCP is: “MCP makes it easy, flexible, and secure to connect AI models with real-world tools and data, so you can build powerful, useful AI agents quickly.”

MCP is a big step forward in how AI agents operate. Instead of just answering user queries, agents can now summarize documents, retrieve data, and save content to a file.

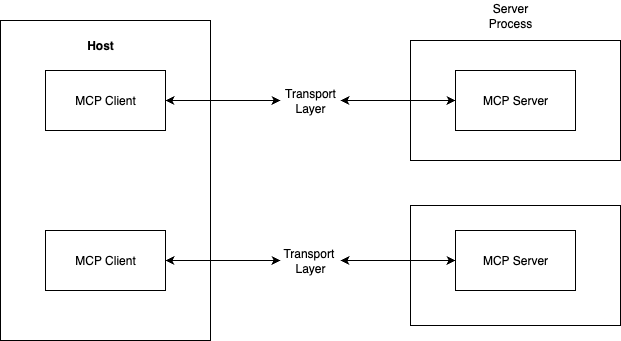

General Architecture:

MCP Hosts: AI applications such as Claude, ChatGPT, and other AI tools that want to access data through MCP.

MCP Clients: They carry requests from hosts to the right servers and bring back the results. You can think of them as messengers.

MCP Servers: These are the programs that provide specific capabilities, like reading files, accessing a database, or calling an API.

Local Data Sources: These are the files, databases, and services on your own system or network that MCP servers can access with your permission.

Remote Services: These are also data sources, but they are available online, and MCP servers can connect to them.

That covers the architecture of MCP. But the main question is: how does the client actually communicate with the server?

To answer this question, we need to know about Transports, Resources, Prompts, Tools, Sampling, Roots, and Elicitation. Let’s dive deeper.

Transports

Communication between the client and server is handled by the transport layer. MCP supports different transport types:

- Stdio transport

Communication happens over the standard input (stdin) and standard output (stdout) of a process: the client launches the server as a subprocess and exchanges messages through its stdin and stdout. This mechanism is useful when both the client and the server run on the same machine.

Here is an analogy: say you and your friend are sitting next to each other. In that situation, you don’t need a phone; you can just talk to one another.

When you use VS Code (a code editor) to write Python code, it talks to a Python helper tool behind the scenes. This tool checks your code’s syntax, suggests fixes, and so on. They talk using stdio, a direct “walkie-talkie” line.

- Streamable HTTP transport

Messages from the client to the server are sent as HTTP POST requests, while messages from the server to the client arrive either as ordinary HTTP responses or as a stream of SSE (Server-Sent Events). This type is better suited to remote communication or web-based clients.

Here is an analogy for this one as well: say you and your friend are in different countries, and you are texting each other on Messenger or WhatsApp. You’re far apart but still chatting, and they might even send messages while you’re typing.

Another example is an online coding tool like Replit: you code in the browser, and the browser talks to a helper program on a server using HTTP, just like visiting a website. It can also use streaming to give you live feedback on your code.
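To make the stdio transport more concrete: the MCP specification has each JSON-RPC message on stdio written as a single line of JSON terminated by a newline, with no embedded newlines. The sketch below shows only that framing step on the receiving side, assuming raw text chunks arrive in arbitrary sizes (as they would from a child process’s stdout). `LineDecoder` and `encodeMessage` are hypothetical names, not part of any MCP SDK.

```typescript
// Minimal sketch of newline-delimited JSON framing, as used by MCP's stdio transport.
// LineDecoder and encodeMessage are hypothetical helpers, not MCP SDK APIs.
class LineDecoder {
  private buffer = "";

  // Feed a raw chunk (it may hold a partial line or several lines)
  // and get back every JSON message that is now complete.
  push(chunk: string): unknown[] {
    this.buffer += chunk;
    const messages: unknown[] = [];
    let newline = this.buffer.indexOf("\n");
    while (newline !== -1) {
      const line = this.buffer.slice(0, newline).trim();
      this.buffer = this.buffer.slice(newline + 1);
      if (line.length > 0) {
        messages.push(JSON.parse(line));
      }
      newline = this.buffer.indexOf("\n");
    }
    return messages;
  }
}

// Sending side: one message per line. JSON.stringify (without indentation)
// escapes newlines inside strings, so a message never spans two lines.
function encodeMessage(message: unknown): string {
  return JSON.stringify(message) + "\n";
}

// Usage: a message split across two chunks is emitted only once it is complete.
const decoder = new LineDecoder();
const wire = encodeMessage({ jsonrpc: "2.0", method: "ping", id: 1 });
const first = decoder.push(wire.slice(0, 10)); // incomplete line, nothing yet
const second = decoder.push(wire.slice(10));   // now the whole message
console.log(first.length, second.length);      // 0 1
```

Because the framing is just "one JSON document per line", the same decoder works no matter how the operating system happens to split the pipe’s output into chunks.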

In MCP, data exchanged between the client and server uses the JSON-RPC 2.0 format. This means the messages are structured and encoded according to that protocol. If you’re wondering about the difference between JSON and JSON-RPC 2.0, here’s a quick explanation:

- JSON is simply a data format: plain data with no built-in rules

- JSON-RPC 2.0 is a protocol that defines how to use JSON for APIs, including method calls, structured requests, and responses

Below is the difference in structure between JSON and JSON-RPC 2.0:

```jsonc
// JSON
{
  "name": "Alice",
  "age": 25,
  "isStudent": true
}

// JSON-RPC 2.0
{
  "jsonrpc": "2.0",
  "method": "add",
  "params": [2, 3],
  "id": 1
}
```
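To show what happens to that `add` request on the other end, here is a minimal sketch of a JSON-RPC 2.0 dispatcher. It follows the JSON-RPC 2.0 rules: a response echoes the request’s `id` and carries either a `result` or an `error`, and `-32601` is the standard "Method not found" code. The `handlers` table and the `add` method are illustrative only; real MCP servers expose methods such as `tools/call` instead.

```typescript
// Minimal JSON-RPC 2.0 dispatcher sketch (illustrative; not an MCP SDK API).
interface JsonRpcRequest {
  jsonrpc: "2.0";
  method: string;
  params?: unknown;
  id: number | string;
}

type JsonRpcResponse =
  | { jsonrpc: "2.0"; id: number | string; result: unknown }
  | { jsonrpc: "2.0"; id: number | string; error: { code: number; message: string } };

// Hypothetical method table: "add" sums a positional array of numbers.
const handlers: Record<string, (params: unknown) => unknown> = {
  add: (params) => (params as number[]).reduce((sum, n) => sum + n, 0),
};

function handle(request: JsonRpcRequest): JsonRpcResponse {
  const handler = handlers[request.method];
  if (!handler) {
    // -32601 is the JSON-RPC 2.0 "Method not found" error code.
    return { jsonrpc: "2.0", id: request.id, error: { code: -32601, message: "Method not found" } };
  }
  // The response reuses the request id so the caller can match it up.
  return { jsonrpc: "2.0", id: request.id, result: handler(request.params) };
}

const response = handle({ jsonrpc: "2.0", method: "add", params: [2, 3], id: 1 });
console.log(JSON.stringify(response)); // {"jsonrpc":"2.0","id":1,"result":5}
```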

Resources

Resources are the data that MCP servers expose to their clients. Clients can read this data and use it as context for LLM interactions.

Resources can contain two types of content:

- Text Resources

Text resources contain UTF-8 encoded text data, such as plain text, source code, JSON/XML, config files, etc.

- Binary Resources

Binary resources contain raw binary data encoded in base64, such as images, PDFs, audio files, video files, etc.
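In MCP, a resource’s contents carry its `uri` and an optional `mimeType`, plus either a `text` field (text resources) or a base64 `blob` field (binary resources). The sketch below assumes that shape and shows how a server might package each kind. The `file:///project/...` URIs and the `makeContents` helper are hypothetical, and the base64 encoder is written out only to keep the example dependency-free.

```typescript
// Sketch of MCP-style resource contents: text resources carry `text`,
// binary resources carry base64 in `blob`. makeContents is a hypothetical helper.
interface TextResourceContents {
  uri: string;
  mimeType?: string;
  text: string; // UTF-8 text, stored as-is
}

interface BlobResourceContents {
  uri: string;
  mimeType?: string;
  blob: string; // raw bytes, base64-encoded
}

const BASE64_ALPHABET =
  "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/";

// Standard base64 (RFC 4648) with "=" padding: every 3 bytes become 4 characters.
function toBase64(bytes: Uint8Array): string {
  let out = "";
  for (let i = 0; i < bytes.length; i += 3) {
    const b0 = bytes[i];
    const b1 = i + 1 < bytes.length ? bytes[i + 1] : 0;
    const b2 = i + 2 < bytes.length ? bytes[i + 2] : 0;
    const triple = (b0 << 16) | (b1 << 8) | b2;
    out += BASE64_ALPHABET[(triple >> 18) & 63];
    out += BASE64_ALPHABET[(triple >> 12) & 63];
    out += i + 1 < bytes.length ? BASE64_ALPHABET[(triple >> 6) & 63] : "=";
    out += i + 2 < bytes.length ? BASE64_ALPHABET[triple & 63] : "=";
  }
  return out;
}

// Strings become text resources; byte arrays become base64 blob resources.
function makeContents(
  uri: string,
  mimeType: string,
  data: string | Uint8Array,
): TextResourceContents | BlobResourceContents {
  return typeof data === "string"
    ? { uri, mimeType, text: data }
    : { uri, mimeType, blob: toBase64(data) };
}

const readme = makeContents("file:///project/README.md", "text/markdown", "# Hello");
// The first four bytes of the PNG file signature.
const logo = makeContents("file:///project/logo.png", "image/png", new Uint8Array([0x89, 0x50, 0x4e, 0x47]));
console.log("text" in readme ? readme.text : ""); // # Hello
console.log("blob" in logo ? logo.blob : "");     // iVBORw==
```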

Prompts

Prompts are reusable templates and workflows that clients can easily surface to users and LLMs. Prompts are provided by the server. They can accept variables as input, pull information from resources, trigger actions, and even appear as interface elements like slash commands (/debug) in client apps.

The structure of a prompt is:

```typescript
interface Prompt {
  name: string;                 // Unique identifier for the prompt
  description?: string;         // Human-readable description
  arguments?: PromptArgument[]; // Optional list of arguments
}

interface PromptArgument {
  name: string;         // Argument identifier
  description?: string; // Argument description
  required?: boolean;   // Whether the argument is required
}
```
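The structure above only declares a prompt. As a hedged sketch of what a server might do when a client requests one (MCP’s `prompts/get` method returns a list of messages), the example below checks the declared required arguments and fills in a template. The `explain-code` prompt, its wording, and `renderPrompt` are invented for illustration.

```typescript
// Sketch of a server-side prompt and how a prompts/get request might fill it in.
// The "explain-code" prompt and renderPrompt helper are hypothetical examples.
interface PromptArgument {
  name: string;
  description?: string;
  required?: boolean;
}

interface Prompt {
  name: string;
  description?: string;
  arguments?: PromptArgument[];
}

interface PromptMessage {
  role: "user" | "assistant";
  content: { type: "text"; text: string };
}

const explainCode: Prompt = {
  name: "explain-code",
  description: "Explain how a piece of code works",
  arguments: [
    { name: "code", description: "The code to explain", required: true },
    { name: "language", description: "Programming language" },
  ],
};

// Reject calls that omit required arguments, then build the messages for the LLM.
function renderPrompt(prompt: Prompt, args: { [key: string]: string | undefined }): PromptMessage[] {
  for (const arg of prompt.arguments ?? []) {
    if (arg.required && !(arg.name in args)) {
      throw new Error(`Missing required argument: ${arg.name}`);
    }
  }
  const language = args["language"] ?? "an unspecified language";
  return [
    {
      role: "user",
      content: { type: "text", text: `Explain this code written in ${language}:\n${args["code"]}` },
    },
  ];
}

const messages = renderPrompt(explainCode, { code: "print(1 + 1)", language: "Python" });
console.log(messages[0].content.text);
// Explain this code written in Python:
// print(1 + 1)
```

A client could show `explain-code` as a slash command and collect the `code` and `language` values from the user before sending the request.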

Tools

Tools are one of the most important concepts in MCP: they allow servers to expose executable functionality to clients. In other words, a tool enables an LLM to interact with external systems and, at the same time, to take actions.

Put simply, tools are provided by servers, and clients can discover them (with `tools/list`) and invoke them (with `tools/call`) when needed.

Each tool is defined with the following structure:

```typescript
interface Tool {
  name: string;         // Unique identifier for the tool
  description?: string; // Human-readable description
  inputSchema: {        // JSON Schema for the tool's parameters
    type: "object";
    properties: { [key: string]: unknown }; // Tool-specific parameters
  };
  annotations?: {       // Optional hints about tool behavior
    title?: string;            // Human-readable title for the tool
    readOnlyHint?: boolean;    // If true, the tool does not modify its environment
    destructiveHint?: boolean; // If true, the tool may perform destructive updates
    idempotentHint?: boolean;  // If true, repeated calls with the same args have no additional effect
    openWorldHint?: boolean;   // If true, the tool interacts with external entities
  };
}
```
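To show how such a definition is used, here is a sketch of a tiny server-side registry and a `tools/call` handler. In MCP, a client discovers tools with `tools/list`, then sends `tools/call` with the tool’s `name` and `arguments`; the result holds a `content` array and an optional `isError` flag. The `add` tool, `registry`, and `callTool` are hypothetical, and the argument check is a deliberately small subset of JSON Schema.

```typescript
// Sketch: a tool definition, a registry, and a simplified tools/call handler.
// The "add" tool, registry, and callTool are hypothetical; the argument check covers
// only the "required" and primitive "type" keywords, not full JSON Schema validation.
interface Tool {
  name: string;
  description?: string;
  inputSchema: {
    type: "object";
    properties: Record<string, { type: string; description?: string }>;
    required?: string[];
  };
  annotations?: {
    title?: string;
    readOnlyHint?: boolean;
    destructiveHint?: boolean;
    idempotentHint?: boolean;
    openWorldHint?: boolean;
  };
}

interface CallToolResult {
  content: { type: "text"; text: string }[];
  isError?: boolean;
}

type ToolArgs = Record<string, unknown>;

const addTool: Tool = {
  name: "add",
  description: "Add two numbers",
  inputSchema: {
    type: "object",
    properties: { a: { type: "number" }, b: { type: "number" } },
    required: ["a", "b"],
  },
  // Pure arithmetic: no side effects, same answer every time, no external systems.
  annotations: { title: "Add Numbers", readOnlyHint: true, idempotentHint: true, openWorldHint: false },
};

// Tools a server would advertise via tools/list, keyed by name.
const registry = new Map<string, { definition: Tool; run: (args: ToolArgs) => string }>([
  ["add", { definition: addTool, run: (args) => String((args.a as number) + (args.b as number)) }],
]);

// Mirrors a tools/call request, whose params carry a tool `name` and its `arguments`.
function callTool(name: string, args: ToolArgs): CallToolResult {
  const fail = (text: string): CallToolResult => ({ content: [{ type: "text", text }], isError: true });
  const entry = registry.get(name);
  if (!entry) return fail(`Unknown tool: ${name}`);
  const schema = entry.definition.inputSchema;
  for (const key of schema.required ?? []) {
    if (!(key in args)) return fail(`Missing argument: ${key}`);
  }
  for (const [key, value] of Object.entries(args)) {
    const expected = schema.properties[key]?.type;
    if (expected !== undefined && typeof value !== expected) {
      return fail(`Argument ${key} must be a ${expected}`);
    }
  }
  return { content: [{ type: "text", text: entry.run(args) }] };
}

console.log(callTool("add", { a: 2, b: 3 }).content[0].text); // 5
console.log(callTool("add", { a: 2 }).isError);               // true
```

Reporting bad input through `isError` (rather than throwing) lets the LLM see what went wrong and retry with corrected arguments.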

Sampling

Sampling is another important concept in the Model Context Protocol (MCP). Sampling is the process by which a server asks the LLM for help through the client. In other words, it lets the server collaborate with the LLM to analyze data and make decisions.

How it works:

- Server sends a `sampling/createMessage` request to the client

- Client reviews the request and can modify it

- Client samples from an LLM

- Client reviews the completion

- Client returns the result to the server
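The five steps above can be sketched in TypeScript as follows. The request and result field names (`messages`, `maxTokens`, `model`, `stopReason`) follow the MCP specification’s sampling messages; `callLlm`, the review callbacks, the `stub-model` name, and the simplified `{ error }` return value are assumptions standing in for a real model, a real approval UI, and a proper JSON-RPC error response.

```typescript
// Sketch of the sampling flow. callLlm, the review callbacks, "stub-model", and the
// { error } return shape are hypothetical stand-ins for a real model, UI, and JSON-RPC error.
interface SamplingMessage {
  role: "user" | "assistant";
  content: { type: "text"; text: string };
}

interface CreateMessageParams {
  messages: SamplingMessage[];
  systemPrompt?: string;
  maxTokens: number;
}

interface CreateMessageResult {
  role: "assistant";
  content: { type: "text"; text: string };
  model: string;
  stopReason?: string;
}

// Step 1 (server): build the sampling/createMessage request.
function buildSamplingRequest(question: string) {
  const params: CreateMessageParams = {
    messages: [{ role: "user", content: { type: "text", text: question } }],
    maxTokens: 200,
  };
  return { jsonrpc: "2.0" as const, id: 7, method: "sampling/createMessage", params };
}

// Steps 2 to 5 (client): review, sample, review again, then return the result.
function handleSampling(
  params: CreateMessageParams,
  reviewRequest: (p: CreateMessageParams) => CreateMessageParams | null,
  callLlm: (p: CreateMessageParams) => string,
  reviewCompletion: (text: string) => boolean,
): CreateMessageResult | { error: string } {
  const approved = reviewRequest(params); // the client may edit or reject the request
  if (approved === null) return { error: "User rejected sampling request" };
  const text = callLlm(approved); // sample from the LLM
  if (!reviewCompletion(text)) return { error: "User rejected completion" };
  return { role: "assistant", content: { type: "text", text }, model: "stub-model", stopReason: "endTurn" };
}

const request = buildSamplingRequest("Which is better for a quick, casual dinner: Taco Spot or Sushi House?");
const result = handleSampling(
  request.params,
  (p) => ({ ...p, maxTokens: Math.min(p.maxTokens, 100) }), // client caps the token budget
  () => "Taco Spot: it is casual and has a 5-minute wait.",  // stubbed LLM reply
  () => true,                                                // user approves the completion
);
console.log(result);
```

Both review points sit on the client: the server never talks to the model directly, so the user (through the client) stays in control of what is sent to the LLM and what goes back to the server.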

Example:

User: “Where should I eat dinner tonight?”

Without Sampling (Deterministic)

LLM: Calls the nearby-restaurants tool

Server: Returns the top 3 restaurants sorted by rating

1. 🍕 Pizza Palace (4.7)

2. 🍣 Sushi House (4.6)

3. 🥗 Green Garden (4.5)

LLM: Analyzes ratings only

LLM responds:

👉 “I recommend Pizza Palace. It has the highest rating among nearby restaurants.”

🧠 Result: Simple and reliable; it always picks the top-rated place.

With Sampling (Creative)

User: “Where should I eat dinner tonight?”

LLM: Calls the nearby-restaurants tool

Server: Returns the top 10 restaurants with metadata (rating, cuisine, vibe, wait time)

🍣 Sushi House (4.6, quiet, 15-min wait)

🍕 Pizza Palace (4.7, family-friendly, 45-min wait)

🌮 Taco Spot (4.4, lively, 5-min wait)

Server sends a sampling request through the client: “What’s a good place, considering I might want something casual and quick?”

LLM reasoning:

Pizza Palace has the best rating but a long wait

Sushi House is quiet but not casual

Taco Spot is quick, casual, and has a good-enough rating

Server checks whether Taco Spot has outdoor seating (based on recent user behavior)

LLM responds:

👉 “You might enjoy Taco Spot: it’s casual, has a short wait, and has decent reviews. Plus, it has outdoor seating, which is perfect for today’s weather.”

🧠 Result: More personalized, diverse, and context-aware.

It takes into account mood, wait times, and vibe, not just ratings.

Roots

Roots are the MCP concept that gives clients a way to inform servers about relevant resources and their locations. If you’re wondering why an MCP server needs to know about the client’s roots, there are some good reasons. Among the most important are security, performance, context-aware capabilities, and resource scoping.
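One concrete use of roots is resource scoping. A client that supports roots answers a `roots/list` request with entries containing a `uri` (currently `file://` URIs) and an optional `name`. The sketch below assumes such a list and checks whether a requested URI falls inside one of the roots; the paths are hypothetical, and a production server would also normalize `..` segments and symlinks before comparing.

```typescript
// Sketch of roots-based scoping: the client reports its roots (as a roots/list result
// would), and the server only touches URIs inside them. All paths are hypothetical.
interface Root {
  uri: string;   // a file:// URI for a folder the client exposes
  name?: string; // optional human-readable label
}

const listRootsResult: { roots: Root[] } = {
  roots: [
    { uri: "file:///home/user/projects/frontend", name: "Frontend" },
    { uri: "file:///home/user/projects/backend", name: "Backend" },
  ],
};

// True if `uri` is a root itself or lies beneath one. Comparing against the root plus
// a trailing "/" prevents ".../frontend-old" from matching the ".../frontend" root.
function isWithinRoots(uri: string, roots: Root[]): boolean {
  return roots.some((root) => {
    const base = root.uri.endsWith("/") ? root.uri : root.uri + "/";
    return uri === root.uri || uri.startsWith(base);
  });
}

const roots = listRootsResult.roots;
console.log(isWithinRoots("file:///home/user/projects/frontend/src/app.ts", roots)); // true
console.log(isWithinRoots("file:///home/user/projects/frontend-old/app.ts", roots)); // false
console.log(isWithinRoots("file:///etc/passwd", roots));                              // false
```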

Elicitation

Elicitation lets the MCP server ask the user for more information when needed, instead of requiring everything upfront. This makes interactions more flexible and natural.

Example: When setting up a tool, the server can ask:

“What’s your username?”

“Do you want dark mode or light mode?”

How it works

1. Server sends a request asking for specific data (like a name, settings, etc.).

2. Client shows a form or prompt to the user.

3. User enters the data (or cancels).

4. Client sends the response back to the server.

5. Server continues its task using the data.

Request Structure

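Elicitation requests contain two parts:

1. Message: a human-readable explanation of what information is needed

2. Schema: a JSON Schema describing the structure of the expected response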
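Putting the message and schema together, here is a hedged sketch of an elicitation round trip that mirrors the username and dark/light mode example. In the MCP specification the request carries `message` and `requestedSchema` (a flat object whose properties are primitive types), and the user’s reply has an `action` of `accept`, `decline`, or `cancel`, with `content` only when accepted. This sketch supports just string and boolean fields; `setupRequest` and `validateReply` are hypothetical names.

```typescript
// Sketch of an elicitation round trip. Only string and boolean fields are handled;
// setupRequest and validateReply are hypothetical names.
type PrimitiveSchema =
  | { type: "string"; description?: string; enum?: string[] }
  | { type: "boolean"; description?: string };

interface ElicitRequestParams {
  message: string; // shown to the user
  requestedSchema: {
    type: "object";
    properties: Record<string, PrimitiveSchema>;
    required?: string[];
  };
}

interface ElicitResult {
  action: "accept" | "decline" | "cancel";
  content?: Record<string, string | boolean>; // present only when accepted
}

const setupRequest: ElicitRequestParams = {
  message: "Please provide your username and preferred theme.",
  requestedSchema: {
    type: "object",
    properties: {
      username: { type: "string", description: "Your username" },
      theme: { type: "string", enum: ["dark", "light"] },
    },
    required: ["username"],
  },
};

// Server side: return a list of problems; an empty list means the server can continue.
function validateReply(params: ElicitRequestParams, reply: ElicitResult): string[] {
  if (reply.action !== "accept") return [`User chose to ${reply.action}`];
  const content = reply.content ?? {};
  const problems: string[] = [];
  for (const key of params.requestedSchema.required ?? []) {
    if (!(key in content)) problems.push(`Missing field: ${key}`);
  }
  for (const [key, value] of Object.entries(content)) {
    const schema = params.requestedSchema.properties[key];
    if (!schema) {
      problems.push(`Unexpected field: ${key}`);
    } else if (typeof value !== schema.type) {
      problems.push(`${key} must be a ${schema.type}`);
    } else if (schema.type === "string" && schema.enum && !schema.enum.includes(value as string)) {
      problems.push(`${key} must be one of ${schema.enum.join(", ")}`);
    }
  }
  return problems;
}

console.log(validateReply(setupRequest, { action: "accept", content: { username: "alice", theme: "dark" } })); // []
console.log(validateReply(setupRequest, { action: "accept", content: { theme: "blue" } }));
console.log(validateReply(setupRequest, { action: "cancel" })); // [ 'User chose to cancel' ]
```

Keeping the schema flat and primitive is what lets a client render it as a simple form without knowing anything about the server.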

Conclusion

MCP (Model Context Protocol) plays a key role in making AI tools easier to build and use. It provides a simple, structured way for clients (like editors or apps) to talk to MCP servers that supply tools and data. Features like prompts, elicitation, streaming, and tool calling help developers create powerful, interactive, and user-friendly experiences.

By standardizing how messages are sent and received, MCP simplifies common AI use cases, like code suggestions, explanations, and task automation, making them more efficient, flexible, and easier to integrate into real-world applications.

References:

- Model Context Protocol, “Introduction,” modelcontextprotocol.io, [Online]. Available: https://modelcontextprotocol.io/introduction.