VMamba: Another transformer moment for vision tasks?

Ever since novel architectures began to be proposed in deep learning, network engineering has played an essential role in improving model performance through contributions at the layer, module, model, and method level. Network variations started with adding more layers, moved on to introducing new layers and their variants, and then to reworking several layers at once: aggregating layers and creating special modules (combinations of layers) such as ResNeXt, Big-Little Net, Squeeze-and-Excitation, and so on. Once transformers were introduced, they began picking up the changes that had occurred in CNNs and directly adopting field-tested variations. These changes touched every area, including layers, normalisation, augmentations that alter the input images, and even weight initialization schemes such as Xavier, He, zeros, and ones. Now vision is moving in a new direction that changes the fundamental learning pattern of convolutional neural networks: VMamba. It is a path-breaking shift and, like other innovations, it is being picked up quickly; researchers have already proposed newer variants such as EfficientVMamba.

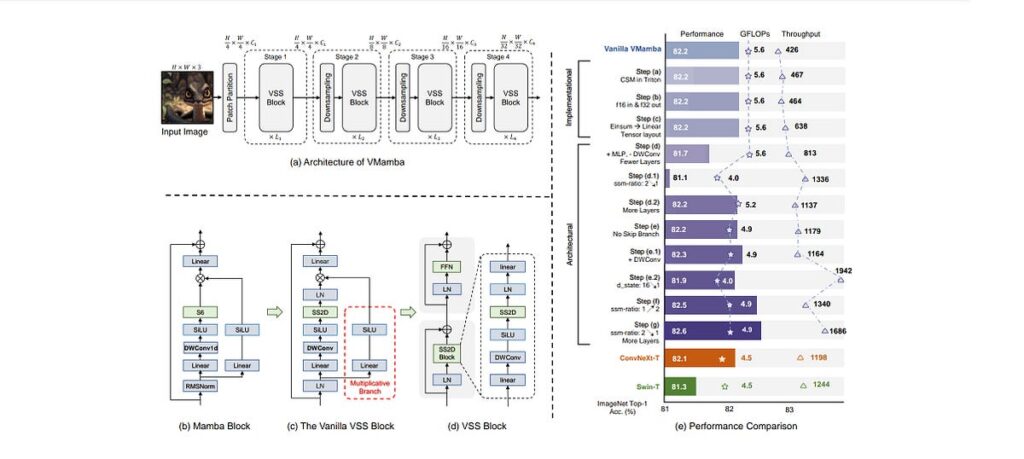

This article explains VMamba, a novel vision backbone architecture that addresses the quadratic cost of self-attention in vision transformers by adapting State Space Models (SSMs) from the Mamba architecture, which have previously demonstrated significant success in processing sequential data such as text. VMamba introduces SS2D, a 2D Selective Scan, which scans the input patches in four ways. According to the researchers, this learns better than the attention mechanism.
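To get a feel for how an SSM processes a sequence, here is a minimal NumPy sketch of a discretized state-space recurrence with input-dependent (selective) parameters. The function name `selective_scan_1d`, the shapes, the diagonal state matrix, and the simplified discretization are all my assumptions for illustration; this is not the authors' implementation.

```python
import numpy as np

def selective_scan_1d(x, delta, A, B, C):
    """Toy selective scan over a single channel (illustrative only).

    x:     (L,)   input sequence
    delta: (L,)   positive, input-dependent step sizes
    A:     (N,)   diagonal of the state matrix (assumed diagonal)
    B, C:  (L, N) input-dependent input/output projections
    """
    L, N = B.shape
    h = np.zeros(N)            # hidden state carried along the sequence
    y = np.empty(L)
    for t in range(L):
        A_bar = np.exp(delta[t] * A)   # discretized state transition
        B_bar = delta[t] * B[t]        # simplified (Euler-style) input term
        h = A_bar * h + B_bar * x[t]   # state update
        y[t] = C[t] @ h                # readout
    return y
```

The loop visits each of the `L` positions once and keeps a fixed-size state, so the work grows linearly with sequence length. The recurrence is also one-directional: position `t` only sees what came before it, which is why a 2D image needs more than one scanning route.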

Instead of treating the image patches made from the input as a single, continuous sequence, VMamba traverses them along four distinct scanning routes, called Cross-Scan. Each of the resulting linearized sequences is then processed independently by a separate S6 block; these blocks are the heart of VMamba. They are followed by the other layers that support the model as usual, including a depthwise convolutional layer that helps speed things up.
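The four scanning routes can be sketched in a few lines of NumPy. I am assuming the routes are row-major, column-major, and the reverse of each, which is how I read the paper; the function names `cross_scan` and `cross_merge` and the `(H, W, C)` layout are my own choices for illustration. The merge step is not described above and is included only to show that each route can be undone and the results summed back onto the patch grid.

```python
import numpy as np

def cross_scan(x):
    """Unfold an (H, W, C) patch grid into four (H*W, C) sequences."""
    H, W, C = x.shape
    row_major = x.reshape(H * W, C)                      # left-to-right, top-to-bottom
    col_major = x.transpose(1, 0, 2).reshape(H * W, C)   # top-to-bottom, left-to-right
    # The remaining two routes are the same traversals in reverse order.
    return np.stack([row_major, col_major, row_major[::-1], col_major[::-1]])

def cross_merge(ys, H, W):
    """Map four (H*W, C) sequences back to the grid and sum them."""
    C = ys.shape[-1]
    out = ys[0].reshape(H, W, C)
    out = out + ys[1].reshape(W, H, C).transpose(1, 0, 2)
    out = out + ys[2][::-1].reshape(H, W, C)
    out = out + ys[3][::-1].reshape(W, H, C).transpose(1, 0, 2)
    return out

# Usage: a 2x3 grid of single-channel "patches".
grid = np.arange(6).reshape(2, 3, 1)
sequences = cross_scan(grid)          # shape (4, 6, 1)
restored = cross_merge(sequences, 2, 3)
```

In the real model each of the four sequences would pass through its own S6 block between the scan and the merge; here the sequences are merged untouched, so `restored` is simply four times `grid`.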

While going through this method, I felt I had heard the same story before. CNNs learning feature maps with sliding kernels were replaced by the self-attention mechanism that Google Research proposed for NLP tasks. Now another method introduced for NLP models is performing better than the other mechanisms. A similar knowledge transfer 😅 from NLP to CNNs. Anyhow, innovations are important and better models are needed.

VMamba was also tested with its own initialization method, called mamba-init, and compared against random and zero initializations, two of the most sensitive (unforgiving) initializations. Yes, they really are when compared with Xavier and He, although ERF maps for Xavier and He would have given much more concrete clarity about the model's capabilities here. One more advantage is VMamba's robustness to different initialization methods and various activation functions: its performance is not significantly affected by these choices. Many deep learning models are very sensitive to such hyperparameters, often requiring extensive and costly tuning, and sometimes they still fail. VMamba's observed robustness implies easier training, faster experimentation, and potentially more stable deployment in diverse settings, so it can be adapted to multiple environments with simple changes.

The visualization of the effective receptive field (ERF) map is impressive in comparison to other methods.

They have tested the model on ImageNet against architectures such as Swin Transformer, ConvNeXt, and so on. VMamba-Base attains 83.9% top-1 accuracy, surpassing Swin by +0.4%. The accuracy is comparatively better, and although there are other transformer networks with higher accuracy, VMamba's throughput (TP) is much better: it leads by a very high margin across the state-of-the-art architectures. VMamba-Base's throughput exceeds Swin's by over 40% (646 vs. 458 images/s), and VMamba-T achieves an impressive 1,686 images/s. The models also show improvements in computational efficiency and scalability, making them highly suitable for real-world applications where resource constraints are a major factor.
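The "over 40%" figure follows directly from the two throughput numbers quoted above. A quick check, using only those numbers (the helper name `relative_gain` is mine):

```python
def relative_gain(new, baseline):
    """Relative improvement of `new` over `baseline`, in percent."""
    return (new - baseline) / baseline * 100.0

# Throughput in images/s as quoted in the text.
vmamba_base_tp = 646
swin_tp = 458

gain = relative_gain(vmamba_base_tp, swin_tp)
print(f"VMamba-Base vs. Swin throughput gain: {gain:.1f}%")
```

This works out to roughly 41%.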

Self-attention in Vision Transformers (ViTs) scales quadratically with the number of image patches. This quadratic scaling imposes substantial challenges for the practical deployment of ViTs, particularly in scenarios demanding high-resolution inputs or operation on resource-constrained devices. This motivates vision backbone networks that retain the advantages of global receptive fields and dynamic weighting parameters, akin to self-attention, but with significantly improved time complexity.
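A rough back-of-the-envelope sketch makes the scaling concrete. The patch size of 16 and the image sizes below are hypothetical values chosen for illustration, and the "cost" here only counts token interactions; it ignores constants, channel widths, and everything else a real FLOP count would include.

```python
def num_patches(image_size, patch_size=16):
    """Number of patches for a square image (patch_size=16 is an assumption)."""
    side = image_size // patch_size
    return side * side

def attention_pairs(n):
    """Self-attention compares every token with every token: n * n."""
    return n * n

def scan_steps(n):
    """A linear-time scan touches each token once per route: n."""
    return n

for size in (224, 448, 896):
    n = num_patches(size)
    print(size, n, attention_pairs(n), scan_steps(n))
```

Doubling the image side quadruples the number of patches, so the attention term grows 16 times while the scan term grows only 4 times.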

At a time when the research world is moving towards efficient architectures, small VLMs, and even smaller LLMs, such a design is much appreciated for traditional architectures as well. I hope newer architectures like this emerge every now and then, as Yann LeCun has said.

Paper: VMamba: Visual State Space Model (NeurIPS 2024)

Paper link: https://proceedings.neurips.cc/paper_files/paper/2024/hash/baa2da9ae4bfed26520bb61d259a3653-Abstract-Conference.html