Introduction

Back-propagation has been the engine driving the deep learning revolution. We have come a long way with advancements such as:

- New layers and architectures like Convolutional Neural Networks, Recurrent Neural Networks, and Transformers.

- New training paradigms like fine-tuning, transfer learning, self-supervised learning, contrastive learning, and reinforcement learning.

- New optimizers, regularizers, augmentations, loss functions, frameworks, and many more…

However, the Abstraction and Reasoning Corpus (ARC) dataset, created over five years ago, has withstood numerous architectures without budging. It has remained one of the hardest datasets, where even the best models could not match human-level accuracy. This was a sign that true AGI is still far from our grasp.

Last week, a new paper, “The Surprising Effectiveness of Test-Time Training for Abstract Reasoning,” pushed a relatively novel technique forward, reaching a new state-of-the-art accuracy on the ARC dataset and exciting the deep learning community much as AlexNet did 12 years ago.

Test-Time Training (TTT) was introduced five years ago. In TTT, training happens on very few samples, often just one or two, that are similar to the test data point. The model is allowed to update its parameters based on these examples, hyper-adapting it to only those data points.

TTT is analogous to turning a general physician into a surgeon who is now highly specialized in only heart valve replacements.

In this post, we will learn what TTT is and how we can apply it to various tasks, and we will discuss the advantages, disadvantages, and implications of using TTT in real-world scenarios.

What is Test-Time Training?

Humans are highly adaptable. They go through two learning phases for any task: a general learning phase that starts at birth, and a task-specific learning phase, often called task orientation. Similarly, TTT complements pre-training and fine-tuning as a second phase of learning that happens during inference.

Simply put, Test-Time Training involves cloning a trained model during the testing phase and fine-tuning it on data points similar to the datum on which you want to make an inference. Broken down into steps, given a new test data point at inference time, we perform the following actions:

- clone the (general-purpose) model,

- gather the data points from the training set that are closest to the test point, either via some prior knowledge or embedding similarity,

- build a smaller training dataset with inputs and targets using the data from the previous step,

- decide on a loss function and train the cloned model on this small dataset,

- use the updated clone to predict on the test data point.

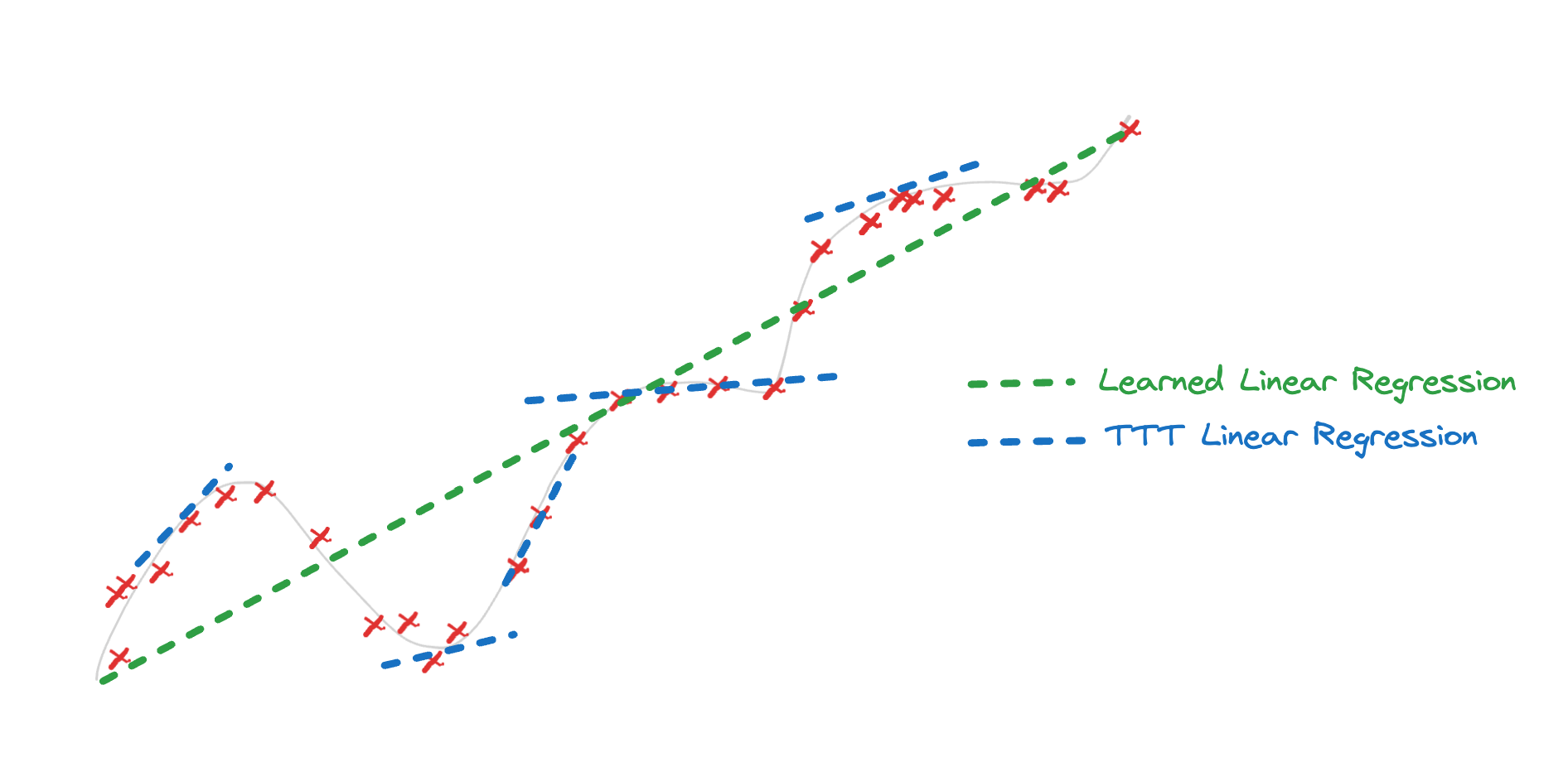
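The retrieval part of these steps (gather the closest training points, then build the small dataset) can be sketched in a few lines of NumPy. This is a minimal sketch under stated assumptions: the embeddings are already computed by some encoder, closeness is measured by cosine similarity, and the function name `build_ttt_dataset` and its parameter `k` are illustrative choices rather than part of any library.

```python
import numpy as np

def build_ttt_dataset(test_embedding, train_embeddings, train_inputs, train_targets, k=3):
    """Return the k training (input, target) pairs closest to the test point."""
    # Normalize so that a dot product equals cosine similarity.
    train_unit = train_embeddings / np.linalg.norm(train_embeddings, axis=1, keepdims=True)
    test_unit = test_embedding / np.linalg.norm(test_embedding)
    similarities = train_unit @ test_unit
    # Indices of the k most similar training points, most similar first.
    nearest = np.argsort(-similarities)[:k]
    return [(train_inputs[i], train_targets[i]) for i in nearest]

# Toy usage with hand-made 2-D embeddings.
train_emb = np.array([[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [-1.0, 0.0]])
inputs = ["a", "b", "c", "d"]
targets = [0, 1, 0, 1]
print(build_ttt_dataset(np.array([1.0, 0.0]), train_emb, inputs, targets, k=2))
# [('a', 0), ('c', 0)]
```

The returned pairs form the small dataset on which the cloned model is then fine-tuned.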

As a simple example, one can take a trained linear regression model, update its slope using a set of points in the neighborhood of the test point, and use it to make more accurate predictions near that point.
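A minimal sketch of that linear-regression example, assuming synthetic data from the curve y = x² (so a single global line fits it poorly) and treating a least-squares refit on the k nearest training points as the fine-tuning step. The data, the name `ttt_predict`, and `k=7` are illustrative choices.

```python
import numpy as np

# Hypothetical data: y = x**2 is curved, so one global line fits it poorly.
x_train = np.linspace(-3, 3, 61)
y_train = x_train ** 2

# "Pretrained" general-purpose model: a single line fit to all the data.
global_slope, global_intercept = np.polyfit(x_train, y_train, deg=1)

def ttt_predict(x_test, k=7):
    # Gather the k training points closest to the test point.
    nearest = np.argsort(np.abs(x_train - x_test))[:k]
    # Refit the clone's slope and intercept on this neighborhood only.
    local_slope, local_intercept = np.polyfit(x_train[nearest], y_train[nearest], deg=1)
    return local_slope * x_test + local_intercept

x_test = 2.5  # true value: 6.25
print(global_slope * x_test + global_intercept)  # ≈ 3.1 (global line)
print(ttt_predict(x_test))                        # ≈ 6.29 (locally adapted line)
```

The global line predicts roughly the mean of y everywhere, while the locally refit line tracks the curve's slope around x = 2.5.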

K-Nearest Neighbors is an extreme example of the TTT process, where the only training that happens is at test time.

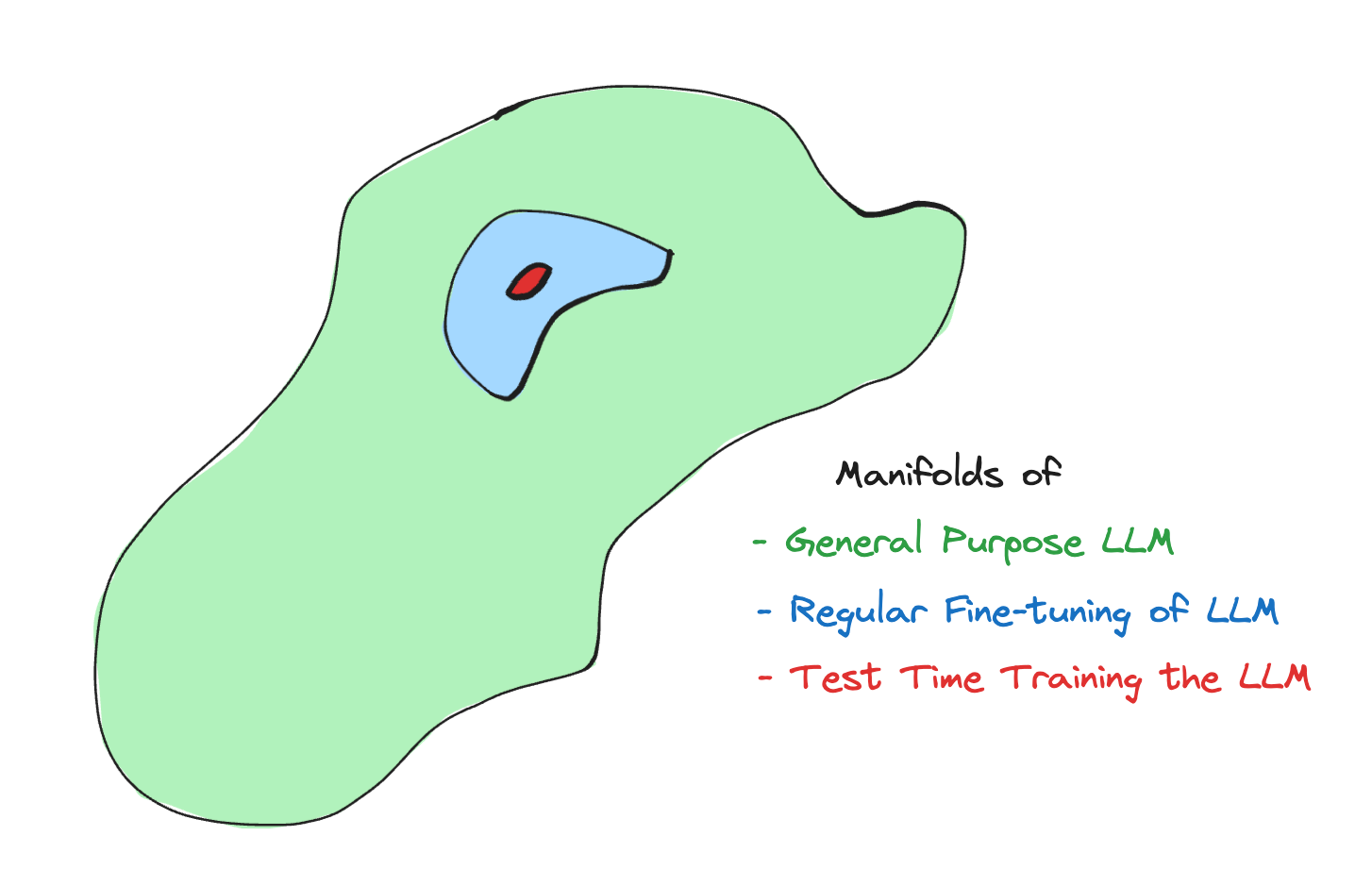
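To make the K-Nearest Neighbors comparison concrete, here is a minimal KNN regressor, assuming Euclidean distance and a plain average of the neighbors' targets. There is no fit step at all; everything happens when a query arrives. The function name `knn_predict` is illustrative.

```python
import numpy as np

def knn_predict(x_test, x_train, y_train, k=3):
    # No training phase: all "learning" happens here, at query time.
    distances = np.linalg.norm(x_train - x_test, axis=1)
    nearest = np.argsort(distances)[:k]
    return y_train[nearest].mean()

x_train = np.array([[0.0], [1.0], [2.0], [10.0]])
y_train = np.array([0.0, 1.0, 2.0, 10.0])
print(knn_predict(np.array([1.1]), x_train, y_train))  # 1.0 (mean of targets at x = 1, 2, 0)
```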

In the domain of LLMs, TTT is especially useful when tasks are complex and outside what an LLM has seen before.

In-Context Learning, few-shot prompting, Chain-of-Thought reasoning, and Retrieval-Augmented Generation have become standard ways to enhance LLMs during inference. These techniques enrich the context before the model arrives at a final answer, but they fall short in one respect: the model itself does not adapt to the new environment at test time. With TTT, we can make the model learn new concepts that would otherwise require needlessly capturing a vast amount of data.

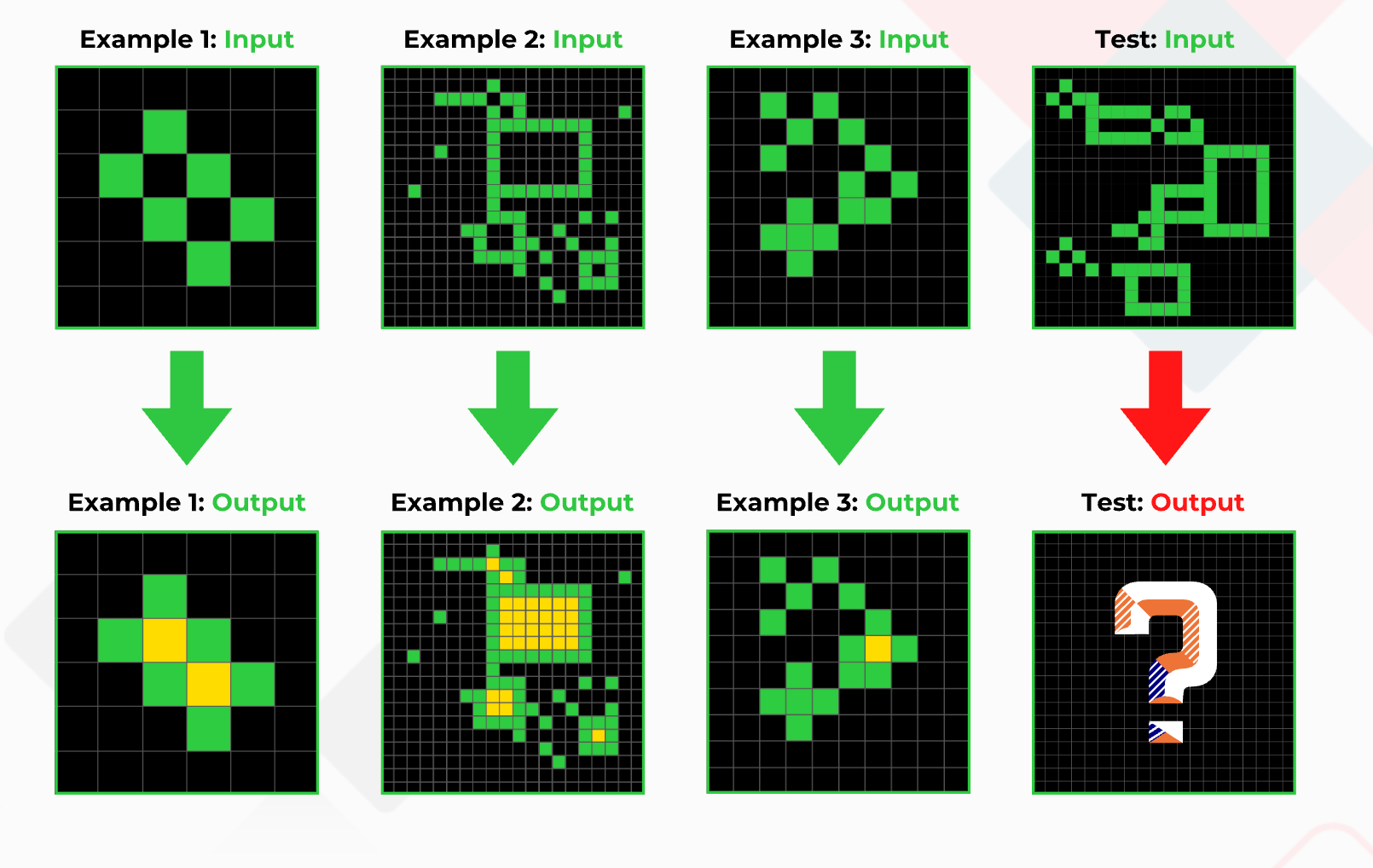

The ARC dataset is an ideal fit for this paradigm, as each data sample is a set of few-shot examples followed by a question that can only be solved using the given examples, much like SAT questions that ask you to find the next diagram in a sequence.

As shown in the image above, one can use the first three examples for training at test time and then predict on the fourth image.
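The few-shot structure of an ARC sample can be turned into a small test-time training set. The sketch below assumes one plausible recipe, not necessarily the paper's exact procedure: each demonstration is held out in turn as the target while the others serve as context (leave-one-out), and the set is enlarged with the eight rotations and reflections of the grid, applied identically to every grid in a task. Grids are assumed to be NumPy integer arrays; `transform` and `build_arc_ttt_tasks` are illustrative names.

```python
import numpy as np

def transform(grid, t):
    """Apply the t-th of the 8 rotations/reflections (t in 0..7) to a 2-D grid."""
    if t >= 4:
        grid = np.fliplr(grid)
    return np.rot90(grid, t % 4)

def build_arc_ttt_tasks(demos):
    """Turn one ARC task's demonstrations into leave-one-out TTT examples.

    demos: list of (input_grid, output_grid) pairs.
    Returns a list of (context_pairs, query_input, query_output) triples.
    """
    tasks = []
    for i, (query_in, query_out) in enumerate(demos):
        # Hold out demonstration i as the target; the rest become context.
        context = [pair for j, pair in enumerate(demos) if j != i]
        for t in range(8):
            # Same transform on every grid, so all pairs follow one (transformed) rule.
            aug_context = [(transform(a, t), transform(b, t)) for a, b in context]
            tasks.append((aug_context, transform(query_in, t), transform(query_out, t)))
    return tasks

demos = [
    (np.array([[1, 0], [0, 0]]), np.array([[0, 1], [0, 0]])),
    (np.array([[0, 0], [1, 0]]), np.array([[1, 0], [0, 0]])),
    (np.array([[0, 0], [0, 1]]), np.array([[0, 0], [1, 0]])),
]
tasks = build_arc_ttt_tasks(demos)
print(len(tasks))  # 3 held-out demonstrations x 8 transforms = 24
```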

How to Perform TTT

The brilliance of TTT lies in its simplicity: it extends learning into the test phase. Thus, any standard training technique applies here, but there are practical aspects to consider.

Training is computationally expensive, and TTT adds overhead because, in theory, you need to train for every inference. To mitigate this cost, consider:

- Parameter-Efficient Fine-Tuning (PEFT): When training LLMs, training with LoRA is considerably cheaper and faster. Training only a small set of parameters, as PEFT methods do, is generally advisable over full-model tuning.

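```python
import copy
import torch
from peft import LoraConfig, get_peft_model

def test_time_train(llm, test_input, nearest_examples, loss_fn,
                    OptimizerClass=torch.optim.AdamW, learning_rate=1e-4,
                    num_epochs=1, target_modules=None):
    # Clone the general-purpose model and attach fresh LoRA adapters to the clone.
    # get_peft_model freezes the base weights, so only the adapters are trained.
    # (Copying a large LLM is memory-heavy; it is shown here for clarity.)
    # r and lora_alpha are illustrative values.
    lora_config = LoraConfig(r=8, lora_alpha=16, target_modules=target_modules)
    new_model = get_peft_model(copy.deepcopy(llm), lora_config)
    trainable = [p for p in new_model.parameters() if p.requires_grad]
    optimizer = OptimizerClass(trainable, lr=learning_rate)

    new_model.train()
    for _ in range(num_epochs):
        for example_input, example_target in nearest_examples:
            prediction = new_model(example_input)
            loss = loss_fn(prediction, example_target)
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()

    # Predict on the test point with the adapted clone.
    new_model.eval()
    with torch.no_grad():
        predictions = new_model(test_input)
    return predictions
```

Sketch of test-time training with LLMs, assuming the Hugging Face `peft` library for LoRA; `loss_fn` must know how to compare the model's output with the target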

- Transfer Learning: In conventional transfer learning, one can replace or add a task head and train only that head while the backbone stays frozen.

```python
import copy
import torch

def test_time_train(base_model, test_input, nearest_examples, loss_fn,
                    OptimizerClass=torch.optim.Adam, learning_rate=1e-3, num_epochs=1):
    # Clone only the task head; the shared backbone stays frozen.
    # Assumes base_model exposes .backbone and .head submodules.
    new_head = copy.deepcopy(base_model.head)
    optimizer = OptimizerClass(new_head.parameters(), lr=learning_rate)

    for _ in range(num_epochs):
        for example_input, example_target in nearest_examples:
            # No gradients are needed through the frozen backbone.
            with torch.no_grad():
                example_feature = base_model.backbone(example_input)
            prediction = new_head(example_feature)
            loss = loss_fn(prediction, example_target)
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()

    with torch.no_grad():
        test_features = base_model.backbone(test_input)
        predictions = new_head(test_features)
    return predictions
```

Sketch of test-time training with conventional transfer learning (PyTorch)

- Embedding Reuse: Keep track of which inferences were made, i.e., which LoRAs were used. During inference, if a new data point's embedding is close enough to existing ones, an existing LoRA or task head can be reused.

- Test-Time Augmentation (TTA): TTA clones the inference image and applies augmentations to each copy; averaging the predictions gives a more robust result. In TTT, the same augmentations can improve performance by enriching the small training set.
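The embedding-reuse idea amounts to a small cache keyed by embeddings. A minimal sketch, assuming cosine similarity as the closeness measure and a hand-picked reuse threshold; `AdapterCache`, `lookup`, `add`, and the placeholder adapter strings are all hypothetical, and in practice the stored values would be trained LoRA weights or task heads.

```python
import numpy as np

class AdapterCache:
    """Reuse a previously trained adapter when a new embedding is close to a cached one."""

    def __init__(self, threshold=0.95):
        self.threshold = threshold  # minimum cosine similarity for reuse (assumed value)
        self.keys = []              # unit-normalized embeddings of past test points
        self.adapters = []          # adapter trained for each of those points

    def lookup(self, embedding):
        """Return the most similar cached adapter, or None if nothing is close enough."""
        if not self.keys:
            return None
        query = embedding / np.linalg.norm(embedding)
        similarities = np.stack(self.keys) @ query
        best = int(np.argmax(similarities))
        return self.adapters[best] if similarities[best] >= self.threshold else None

    def add(self, embedding, adapter):
        self.keys.append(embedding / np.linalg.norm(embedding))
        self.adapters.append(adapter)

cache = AdapterCache(threshold=0.9)
cache.add(np.array([1.0, 0.0]), "adapter_A")     # placeholder for a trained adapter
print(cache.lookup(np.array([0.99, 0.05])))      # adapter_A (reuse it)
print(cache.lookup(np.array([0.0, 1.0])))        # None (run TTT and cache the result)
```

A cache miss means running the full test-time training loop once and then calling `add` so later, similar inputs can skip it.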

Real-World Uses

- Medical Diagnosis: Fine-tuning general diagnostic models for specific patient conditions or rare diseases with limited data.

- Personalized Education: Adapting an educational AI to a student's learning style using specific examples.

- Customer Support Chatbots: Improving chatbots for niche queries by retraining on specific issues during a session.

- Autonomous Vehicles: Adapting vehicle control models to local traffic patterns.

- Fraud Detection: Specializing models for a specific business or rare transaction patterns.

- Legal Document Analysis: Tailoring models to interpret case-specific legal precedents.

- Creative Content Generation: Personalizing LLMs to generate contextually relevant content, like ads or stories.

- Document Data Extraction: Fine-tuning for specific templates to extract data with higher precision.

Advantages

- Hyper-specialization: Useful for rare data points or unique tasks.

- Data Efficiency: Fine-tuning with minimal data for specific scenarios.

- Flexibility: Improves generalization through multiple specializations.

- Domain Adaptation: Addresses distribution drift during long deployments.

Disadvantages

- Computational Cost: Additional training at inference can be costly.

- Latency: Not suitable for real-time LLM applications with current technology.

- Risk of Poor Adaptation: Fine-tuning on irrelevant examples may degrade performance.

- Risk of Poor Performance on Simple Models: TTT shines when the model has many parameters to learn and the data at test time has high variance. If you apply TTT to simple models such as linear regression, it will only overfit the local data, which is nothing more than overfitting multiple models to KNN-sampled data.

- Complex Integration: Requires careful design to integrate training into inference and to track multiple models.
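The overfitting risk for simple models shows up in a toy example. Assuming synthetic data that follow the straight line y = 2x plus alternating ±0.5 noise, a global least-squares line averages the noise away, whereas a "test-time" refit on only the two nearest points chases it. The data, the name `local_refit_predict`, and `k=2` are illustrative choices.

```python
import numpy as np

# Hypothetical data: a truly linear trend (y = 2x) plus alternating +/-0.5 noise.
x_train = np.arange(11, dtype=float)
y_train = 2 * x_train + 0.5 * (-1) ** np.arange(11)

# Global model: one least-squares line through all 11 points.
global_slope, global_intercept = np.polyfit(x_train, y_train, deg=1)

def local_refit_predict(x_test, k=2):
    # With k=2 the local line passes exactly through both neighbors,
    # so it fits their noise perfectly (zero training error).
    nearest = np.argsort(np.abs(x_train - x_test))[:k]
    slope, intercept = np.polyfit(x_train[nearest], y_train[nearest], deg=1)
    return slope * x_test + intercept

x_test = 4.2  # true value: 2 * 4.2 = 8.4
print(global_slope * x_test + global_intercept)  # ≈ 8.445
print(local_refit_predict(x_test))               # ≈ 8.7
```

Because the underlying trend is already linear, specializing to the neighborhood adds variance without reducing bias, which is the failure mode the bullet on simple models describes.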

TTT is a promising tool, but it comes with significant overhead and risks. Used wisely, it can push model performance in challenging scenarios beyond what conventional methods can achieve.