There’s a secret in machine learning nobody wants to admit:

The model is the easy part.

Training a fancy deep learning model? Great!

Getting it to run in production, at scale, across environments, with monitoring, drift detection, and retraining logic? That’s where the real game begins.

This is the story of how I built an end-to-end deep learning MLOps pipeline using TensorFlow, Databricks, and MLflow, all without losing my mind (or my metrics).

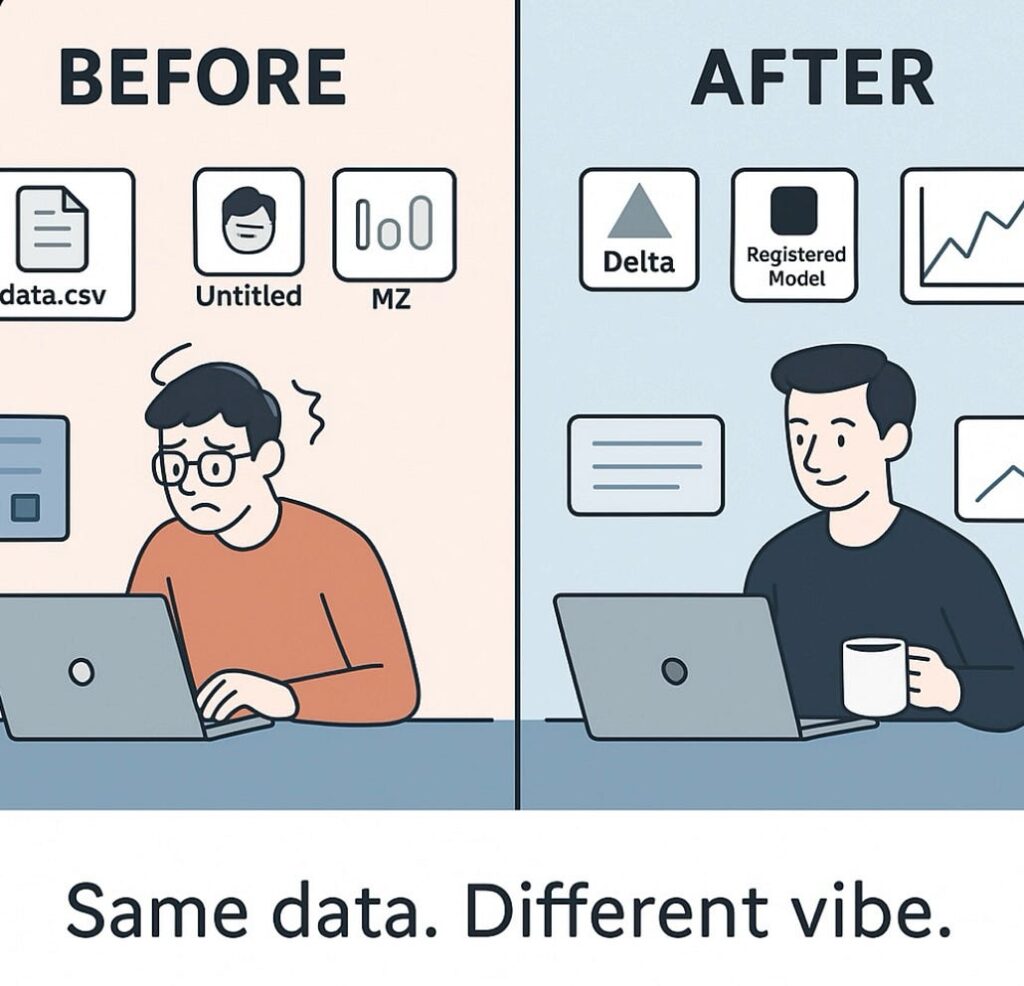

Before vs. After MLOps

1. It All Starts With a Notebook… Until It Doesn’t

I started, like most of us do, inside a cozy Jupyter notebook.

It worked. The model trained. The plots looked good.

But then came the real questions:

• How do I scale this to 10x the data?

• How do I avoid retraining the same model over and over?

• How do I trust this thing once it’s live?

Spoiler: None of these questions are answered inside your notebook.

2. Preprocessing Like a Pro (Or at Least Like an Engineer)

The first step was to industrialize the preprocessing pipeline:

• Data came in fast, messy, and nonstop.

• I used Spark to wrangle it: filter, clean, and transform based on conditions.

• Processed features were standardized, versioned, and reused.

Key lesson: Preprocessing must be repeatable and debuggable. If your model fails, it’s usually not the model; it’s the pipeline.
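To make "repeatable and debuggable" concrete, here is a minimal sketch. It uses plain Python rather than Spark so it runs anywhere, and it is not the pipeline described above. The field names (`sensor_id`, `value`), the cleaning rules, and the `PreprocessConfig` class are all hypothetical. The idea it illustrates: a pure function plus a config fingerprint gives the same output for the same input, and every output row records which version of the rules produced it.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class PreprocessConfig:
    """Hypothetical cleaning rules; changing any value changes the version."""
    min_value: float = 0.0
    max_value: float = 1000.0
    mean: float = 50.0   # assumed to be computed once on the training data
    std: float = 10.0

    def version(self) -> str:
        # Fingerprint the config so every output can be traced to its rules.
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()[:12]


def preprocess(rows, config):
    """Filter, clean, and standardize rows without mutating the input."""
    cleaned = []
    for row in rows:
        value = row.get("value")
        if value is None:
            continue  # drop rows with missing values
        if not (config.min_value <= value <= config.max_value):
            continue  # drop out-of-range readings
        cleaned.append({
            "sensor_id": str(row["sensor_id"]).strip().lower(),
            "value_scaled": (value - config.mean) / config.std,
            "preprocess_version": config.version(),
        })
    return cleaned


raw = [
    {"sensor_id": " A1 ", "value": 60.0},
    {"sensor_id": "b2", "value": None},
    {"sensor_id": "c3", "value": 5000.0},
]
config = PreprocessConfig()
features = preprocess(raw, config)
print(features)
```

Because `preprocess` has no hidden state, rerunning it on the same rows with the same config reproduces the same features, which is what makes a failed run debuggable after the fact.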

Production ML is a layered sandwich: skip one layer, and the whole thing collapses.

3. Designing the Deep Learning Model (Without Going Overboard)

I didn’t want to build a spaceship, just a model that worked.

So I used:

• Dense layers with swish activations (because ReLU was too 2017)

• Dropout + batch norm for regularization

• Output-specific loss functions

• Evaluation metrics like MAE, SMAPE, and R² for transparency

Tip: Don’t obsess over architecture. Obsess over what your model is actually learning.
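For reference, the three evaluation metrics named above can be sketched in a few lines of dependency-free Python. This is an illustration, not the code from the pipeline. SMAPE in particular has several definitions in circulation; the variant below (0 to 200 percent, with pairs where both values are zero counted as zero error) is an assumption. The sample numbers are made up.

```python
def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def smape(y_true, y_pred):
    """Symmetric MAPE in percent; pairs where both values are 0 add no error."""
    total = 0.0
    for t, p in zip(y_true, y_pred):
        denom = abs(t) + abs(p)
        if denom > 0:
            total += 2.0 * abs(t - p) / denom
    return 100.0 * total / len(y_true)


def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot.

    Undefined (division by zero) when y_true is constant.
    """
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


y_true = [100.0, 200.0, 300.0]  # made-up targets
y_pred = [110.0, 190.0, 300.0]  # made-up predictions
print(mae(y_true, y_pred), smape(y_true, y_pred), r2(y_true, y_pred))
```

Reporting all three together helps because they fail differently: MAE is in the target's units, SMAPE is scale-free, and R² compares the model against simply predicting the mean.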

4. Enter MLflow: The Lifesaver I Didn’t Know I Needed

Here’s what changed everything: I started logging.

• Every training run

• Every hyperparameter and metric

• Model artifacts and version control

With MLflow, I could trace the what, why, and how for every experiment.

Dev → QA → Prod promotions were no longer guesswork; they were policy.
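One way to read "promotions were policy" is as an explicit gate that logged metrics must pass before a model moves forward. The sketch below is a hypothetical illustration in plain Python. It does not call MLflow, and the thresholds, the `PROMOTION_POLICY` table, and the `can_promote` helper are all assumptions. Only the stage names come from the text.

```python
STAGES = ["Dev", "QA", "Prod"]  # stage names taken from the text above

# Hypothetical gates: the worst MAE and R² a run may have to enter a stage.
PROMOTION_POLICY = {
    "QA": {"max_mae": 10.0, "min_r2": 0.80},
    "Prod": {"max_mae": 8.0, "min_r2": 0.90},
}


def can_promote(current_stage, target_stage, metrics):
    """Return (allowed, reason) for moving a model one stage forward."""
    if STAGES.index(target_stage) != STAGES.index(current_stage) + 1:
        return False, "promotions must move exactly one stage forward"
    gate = PROMOTION_POLICY[target_stage]
    if metrics["mae"] > gate["max_mae"]:
        return False, f"MAE {metrics['mae']} exceeds {gate['max_mae']}"
    if metrics["r2"] < gate["min_r2"]:
        return False, f"R2 {metrics['r2']} is below {gate['min_r2']}"
    return True, "all gates passed"


# Made-up metrics, standing in for values read back from a logged run.
logged_metrics = {"mae": 7.5, "r2": 0.85}
print(can_promote("Dev", "QA", logged_metrics))
print(can_promote("QA", "Prod", logged_metrics))
print(can_promote("Dev", "Prod", logged_metrics))
```

Returning the reason alongside the decision means a rejected promotion explains itself, which is much of what separates a policy from a judgment call.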

5. Inference in the Real World Isn’t Just .predict()

Once deployed, inference jobs kicked in daily, but this wasn’t just about outputs.

• Metadata like timestamps and IDs were stored with every prediction

• Results were written back into Delta tables

• These tables powered dashboards, metrics, and trust

Lesson: Production inference = prediction + context + observability.
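As a rough sketch of "prediction + context", the wrapper below attaches an ID, a timestamp, and a model version to every output. The column names, the `predict_with_context` helper, and the `dummy_model` stand-in are hypothetical. The write to a Delta table is left out, so this shows only the shape of the records.

```python
import uuid
from datetime import datetime, timezone


def predict_with_context(predict_fn, batch, model_version):
    """Run predictions and attach the metadata needed to audit them later."""
    scored_at = datetime.now(timezone.utc).isoformat()
    predictions = predict_fn([row["features"] for row in batch])
    records = []
    for row, prediction in zip(batch, predictions):
        records.append({
            "prediction_id": str(uuid.uuid4()),  # unique key for each output
            "entity_id": row["entity_id"],       # which input this belongs to
            "model_version": model_version,      # which model produced it
            "scored_at": scored_at,              # when the batch was scored
            "prediction": float(prediction),
        })
    return records


def dummy_model(feature_rows):
    # Stand-in for model.predict(): sums the features of each row.
    return [sum(features) for features in feature_rows]


batch = [
    {"entity_id": "store-1", "features": [1.0, 2.0]},
    {"entity_id": "store-2", "features": [3.0, 4.0]},
]
records = predict_with_context(dummy_model, batch, model_version="3")
for record in records:
    print(record)
```

With rows shaped like this, a dashboard can answer "which model said what, about which entity, and when" without going back to job logs.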

6. Drift Happens. Be Ready.

Your model will fail. That’s not pessimism; that’s probability.

• I monitored residuals and metrics continuously

• If drift was detected, a retraining job was triggered

• The new “challenger” model had to beat the “champion” to earn a promotion

This wasn’t chaos. It was controlled evolution.
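The loop above can be sketched with two small checks. This is a simplified illustration under stated assumptions. Drift is flagged when the recent mean absolute residual exceeds the baseline by a hypothetical `tolerance` factor, and the challenger wins only if it beats the champion's MAE by a hypothetical `min_improvement` margin. Real drift detection often uses statistical tests instead, and all the numbers are made up.

```python
def mean_abs(values):
    """Mean of absolute values, i.e. MAE when given residuals."""
    return sum(abs(v) for v in values) / len(values)


def drift_detected(baseline_residuals, recent_residuals, tolerance=1.5):
    """Flag drift when recent MAE exceeds `tolerance` times the baseline MAE."""
    return mean_abs(recent_residuals) > tolerance * mean_abs(baseline_residuals)


def pick_winner(champion_mae, challenger_mae, min_improvement=0.02):
    """Promote the challenger only if it beats the champion by a clear margin."""
    if challenger_mae < champion_mae * (1.0 - min_improvement):
        return "challenger"
    return "champion"


baseline = [1.0, -2.0, 1.5, -0.5]  # made-up residuals at deployment time
recent = [4.0, -3.5, 5.0, -4.5]    # made-up residuals from the latest window

if drift_detected(baseline, recent):
    # A real pipeline would trigger a retraining job here, then score both
    # models on the same held-out data; the MAE values below are placeholders.
    winner = pick_winner(champion_mae=4.25, challenger_mae=2.1)
else:
    winner = "champion"
print(winner)
```

Requiring a margin rather than any improvement at all keeps noise in the evaluation set from swapping models back and forth.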

Conclusion: The Model Was Just the Beginning

What started as a simple neural network became a production-grade pipeline:

• Modular preprocessing

• Logged training experiments

• Daily inference

• Auto-retraining

• Metric-driven model promotion

Here’s the hard truth:

Machine learning is 10% modeling and 90% everything else.

And once you embrace that, you stop being a modeler… and start being an ML engineer.