Logistic regression is one of the most widely used machine learning models for binary classification datasets. The model is relatively simple and rests on a key assumption: the existence of a linear decision boundary (a line, or a hyperplane in a higher-dimensional feature space) that can separate the classes of the target variable y based on the features in the model.

In a nutshell, the decision boundary can be interpreted as a threshold: the model assigns a data point to one class or the other depending on whether its predicted probability of belonging to a class falls above or below that threshold.
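To make the threshold idea concrete, here is a minimal Python sketch. It assumes the standard logistic model, in which the predicted probability is the logistic (sigmoid) function of a linear score b + w1·x1 + w2·x2. The coefficients are hypothetical and chosen only for illustration, and the 0.5 cutoff is the conventional default rather than a requirement. Because the sigmoid equals 0.5 exactly when its input is zero, thresholding at 0.5 amounts to checking the sign of the linear score, which is why the resulting boundary is linear.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function mapping a real-valued score to (0, 1), written to avoid overflow."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(weights, bias, x):
    """Predicted probability P(y = 1 | x) for a linear logistic model."""
    z = bias + sum(w * xi for w, xi in zip(weights, x))
    return sigmoid(z)


def predict_class(weights, bias, x, threshold=0.5):
    """Assign class 1 if the predicted probability reaches the threshold, else class 0."""
    return 1 if predict_proba(weights, bias, x) >= threshold else 0


# Hypothetical coefficients for two features x1 and x2 (illustration only).
weights = [1.5, -2.0]
bias = 0.5

for point in [(1.0, 0.5), (0.0, 1.0)]:
    p = predict_proba(weights, bias, point)
    print(point, round(p, 3), predict_class(weights, bias, point))
```

Raising or lowering `threshold` shifts the boundary parallel to itself, because each cutoff corresponds to a different constant value of the linear score.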

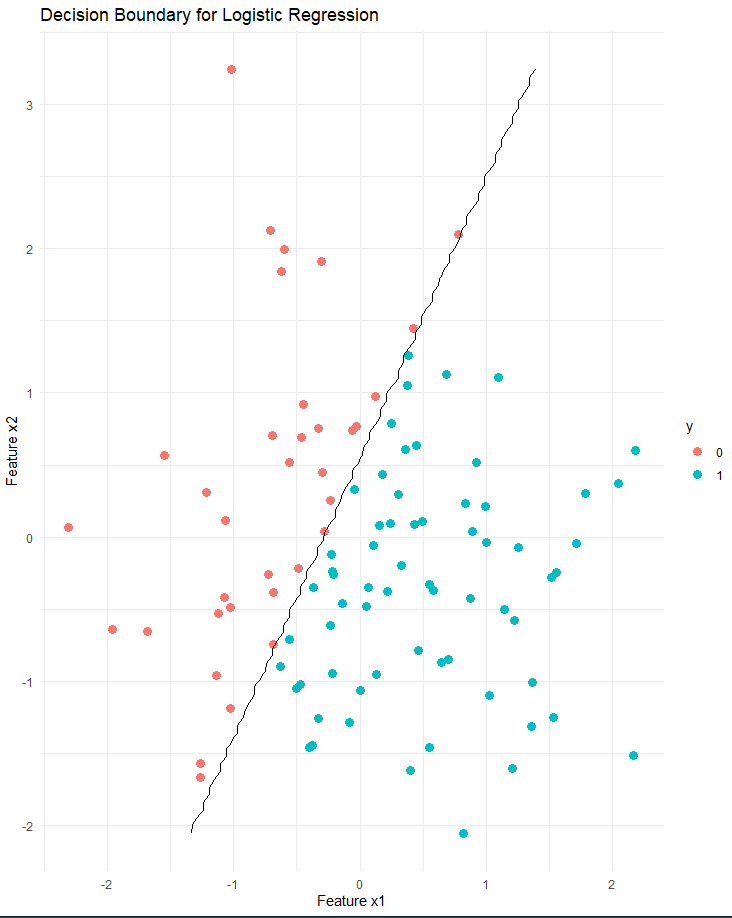

The figure below presents a schematic representation of the decision boundary that separates the target variable into two classes. In this case the model is based on two features (x1 and x2), and the target variable can be cleanly separated into two classes based on the values of these features.
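For the two-feature case, the boundary is the line on which the predicted probability equals 0.5, that is, b + w1·x1 + w2·x2 = 0. Solving for x2 gives x2 = -(b + w1·x1) / w2. The sketch below fits w1, w2, and b with plain batch gradient descent on the mean log-loss. This is a simple stand-in for the solvers that statistical libraries use, not the author's method. The toy data set is hypothetical and deliberately linearly separable, matching the situation in the figure.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fit_logistic(X, y, lr=0.1, epochs=2000):
    """Fit w1, w2, b by batch gradient descent on the mean log-loss."""
    w1 = w2 = b = 0.0
    n = len(X)
    for _ in range(epochs):
        g1 = g2 = gb = 0.0
        for (x1, x2), target in zip(X, y):
            err = sigmoid(b + w1 * x1 + w2 * x2) - target  # derivative of log-loss w.r.t. the score
            g1 += err * x1
            g2 += err * x2
            gb += err
        w1 -= lr * g1 / n
        w2 -= lr * g2 / n
        b -= lr * gb / n
    return w1, w2, b


def boundary_x2(x1, w1, w2, b):
    """Solve b + w1*x1 + w2*x2 = 0 for x2 (assumes w2 != 0)."""
    return -(b + w1 * x1) / w2


# Hypothetical, linearly separable toy data: class 1 lies above the line x2 = x1.
X = [(0.0, 1.0), (1.0, 2.0), (2.0, 3.0), (1.0, 0.0), (2.0, 1.0), (3.0, 2.0)]
y = [1, 1, 1, 0, 0, 0]

w1, w2, b = fit_logistic(X, y)
preds = [1 if sigmoid(b + w1 * a + w2 * c) >= 0.5 else 0 for a, c in X]
print(preds)
print(boundary_x2(1.5, w1, w2, b))
```

Evaluating `boundary_x2` over a range of x1 values traces the straight line drawn in a plot like the one above. Every point on one side of it receives a predicted probability above 0.5, and every point on the other side receives one below 0.5.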

In your day-to-day modeling work, however, the situation is more likely to look like the figure below.