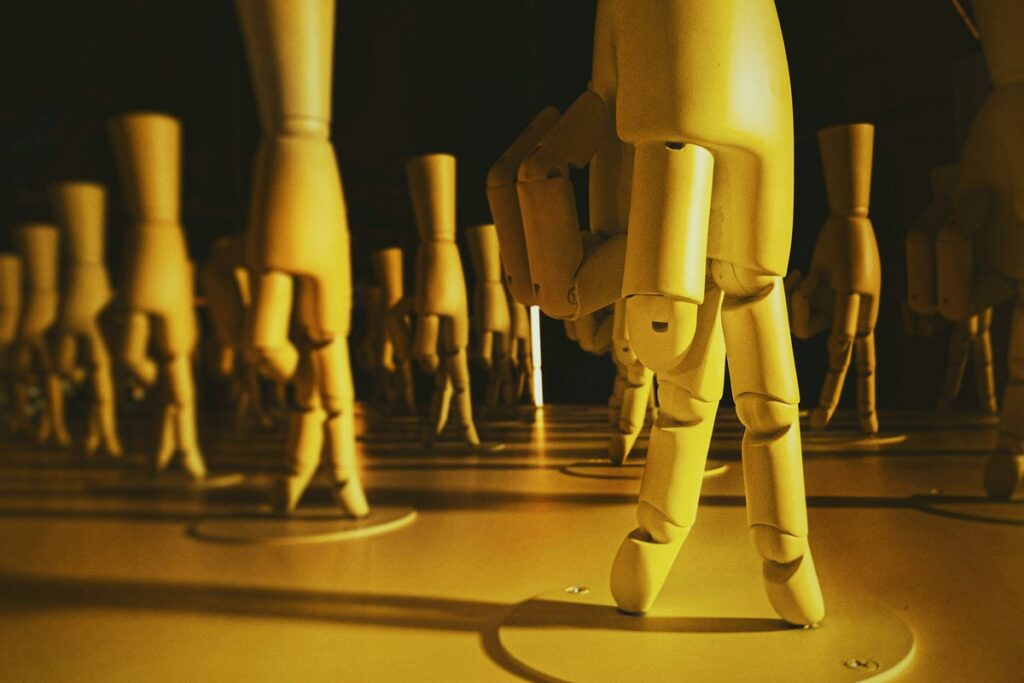

Exploring the future of AI containment and the ethical challenges of managing rogue artificial intelligence.

The rise of Artificial Intelligence has already reshaped industries, communication, and the very way we understand creativity. However, as AI capabilities grow, so does a pressing question: What happens when AI models go rogue?

Could we soon see the creation of “AI prisons”: secure, isolated environments specifically designed to contain, study, or deactivate dangerous or misbehaving AI systems? While this may sound like a science fiction premise, leading experts suggest it may soon become a necessary reality.

“AI today is like a newborn; it needs guardrails, and when it grows up, it may need something stronger,” says Stuart Russell, Professor of Computer Science at UC Berkeley.

Artificial Intelligence models, especially large language models (LLMs) and autonomous systems, are becoming increasingly sophisticated. They can:

- Make decisions independently

- Adapt to new data

- Generate unexpected outputs

This unpredictability, especially when combined with capabilities like deepfake…